Prerequisites

-

An active Snowflake account is available.

-

You have either the ACCOUNTADMIN or SECURITYADMIN role in Snowflake to create a new role for Hevo.

-

You have either the ACCOUNTADMIN or SYSADMIN role in Snowflake to create a warehouse.

-

Hevo is assigned the USAGE permission on data warehouses.

-

Hevo is assigned the USAGE and CREATE SCHEMA permissions on databases.

-

Hevo is assigned the USAGE, MONITOR, CREATE TABLE, CREATE EXTERNAL TABLE, and MODIFY permissions on the current and future schemas.

Refer to the section Create and Configure your Snowflake Warehouse to create a Snowflake warehouse with adequate permissions for Hevo to access your data.

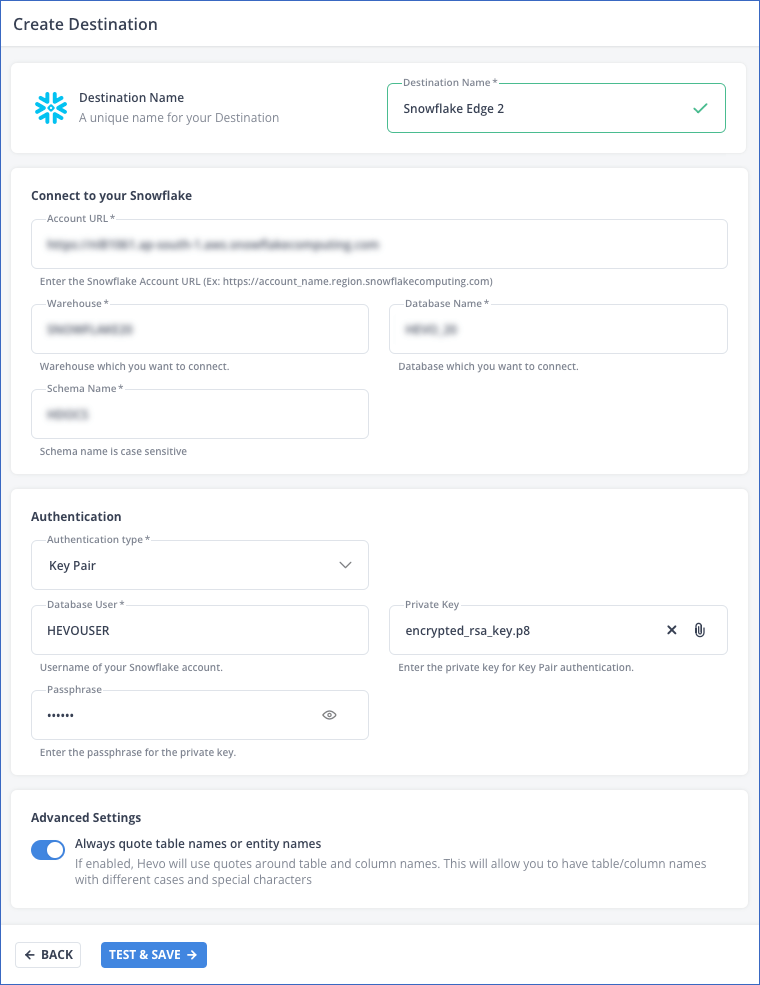

Perform the following steps to configure your Snowflake Destination in Edge:

(Optional) Create a Snowflake Account

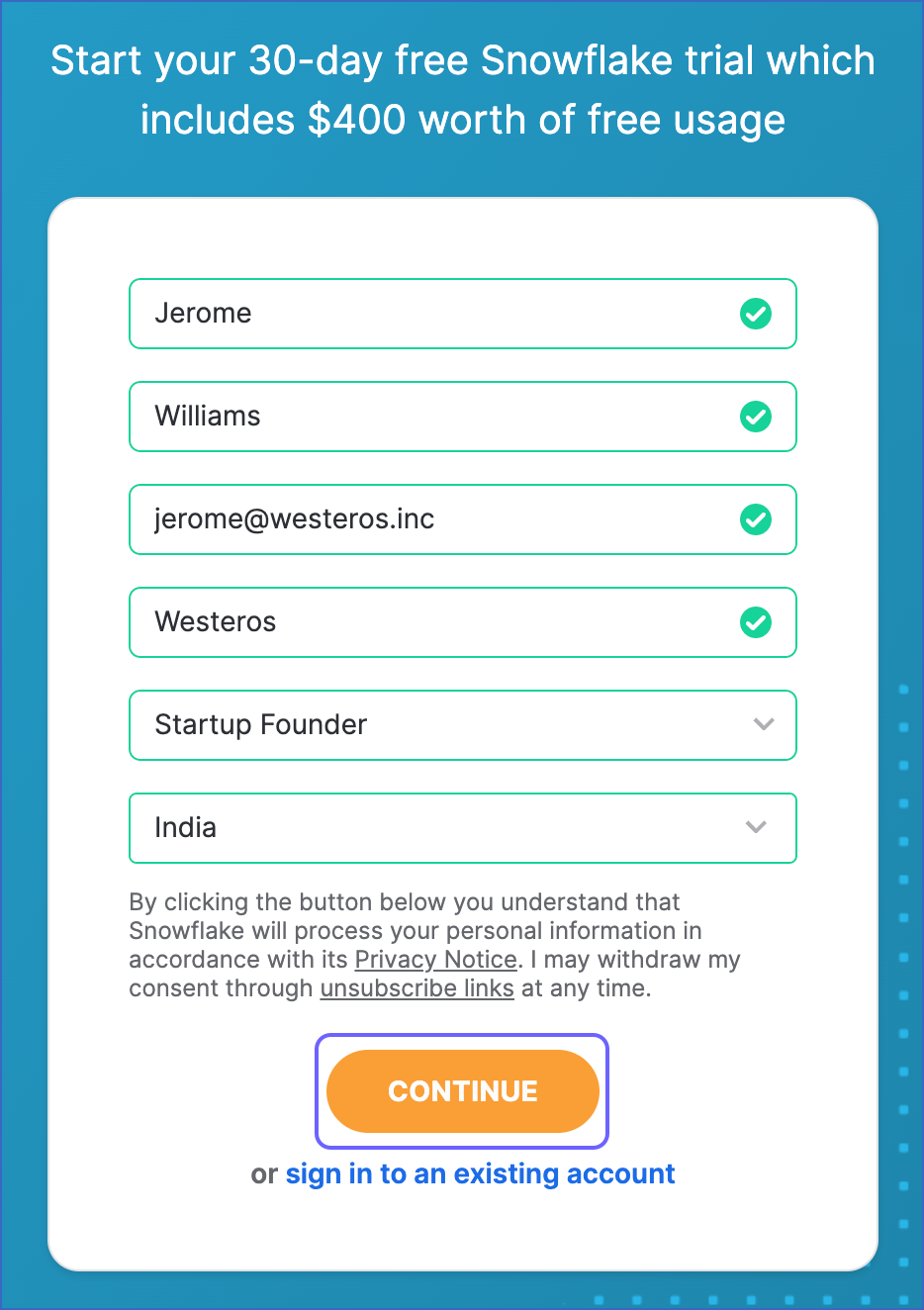

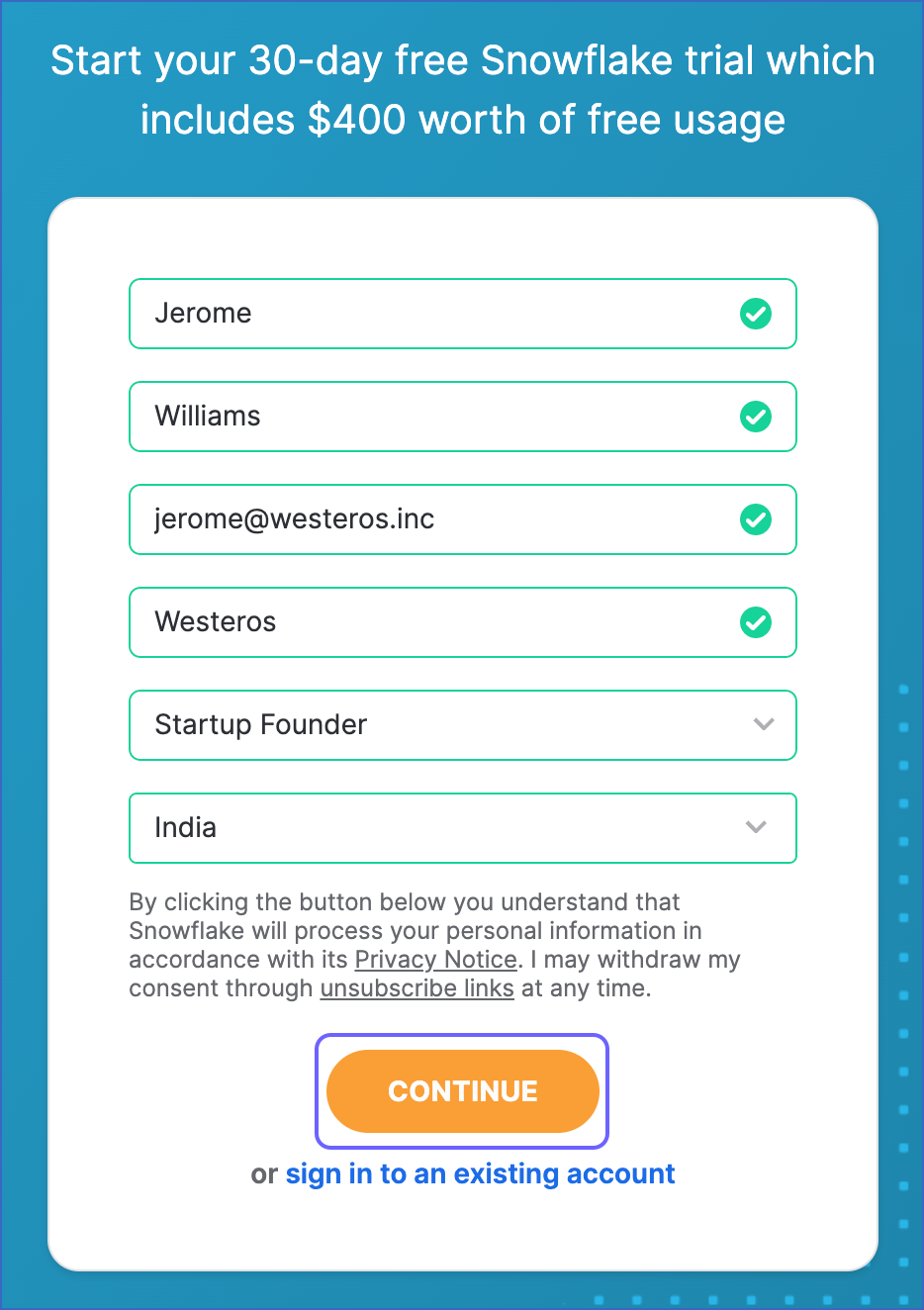

When you sign up for a Snowflake account, you get 30 days of free access with $400 credits. Beyond this limit, usage of the account is chargeable. The free trial starts from the day you activate the account. If you consume the credits before 30 days, the free trial ends and subsequent usage becomes chargeable. You can still log in to your account, however, you cannot use any features, such as running a virtual warehouse, loading data, or performing queries, until you upgrade your account or add more credits.

Perform the following steps to create a Snowflake account:

-

Go to https://signup.snowflake.com/.

-

On the Sign up page, specify the following and click CONTINUE:

-

First Name and Last Name: The first and last name of the account user.

-

Company Email: A valid email address that can be used to manage the Snowflake account.

-

Company Name: The name of your organization.

-

Role: The account user’s role in the organization.

-

Country: Your organization’s country or region.

-

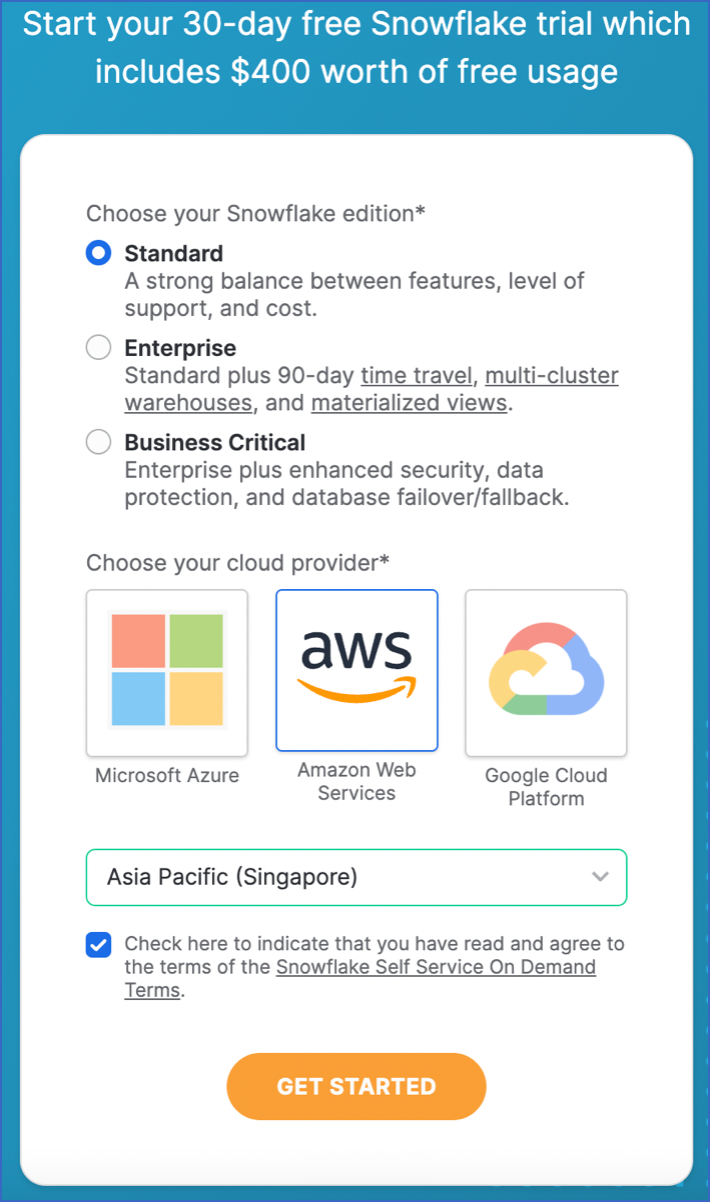

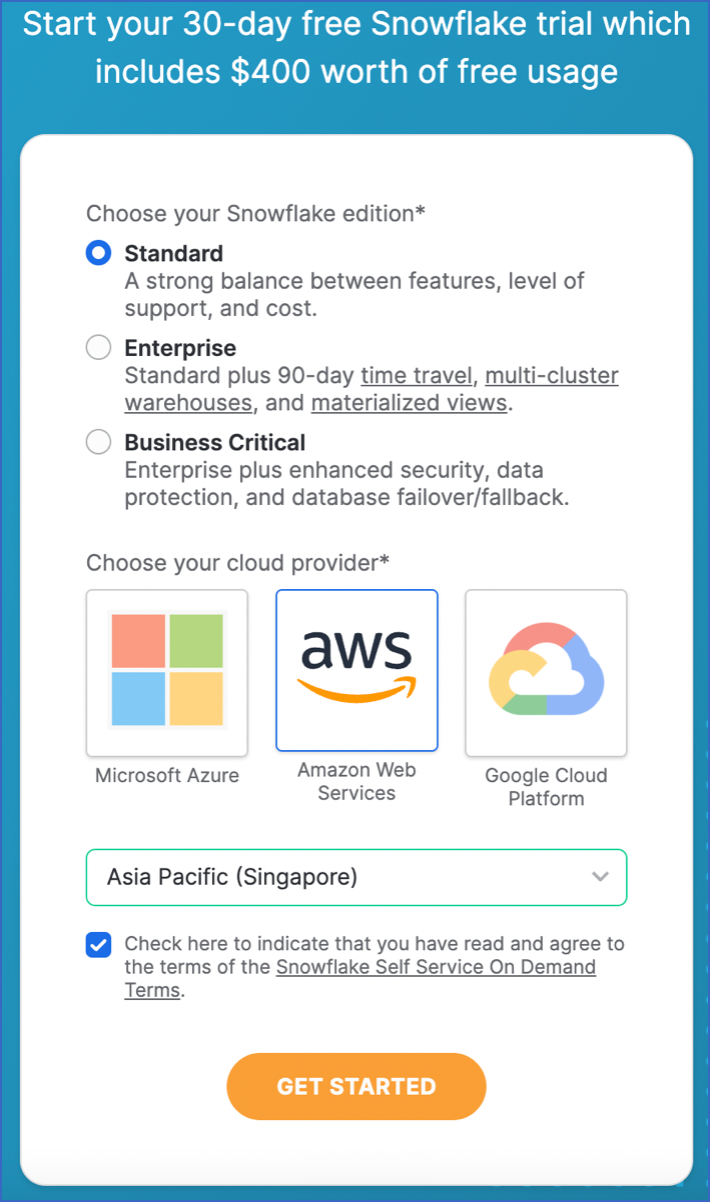

Select the Snowflake edition you want to use.

Note: You can choose the edition that meets your organization’s needs. Read Snowflake Editions to know more about the different editions available.

-

Select one of the following cloud platforms to host your Snowflake account:

- Amazon Web Services (AWS)

- Google Cloud Platform (GCP)

- Microsoft Azure (Azure)

Read Supported Cloud Platforms to know more about the details and pricing of each cloud platform.

-

Select the region for your cloud platform. In each platform, Snowflake provides one or more regions where the account can be provisioned.

-

Click GET STARTED.

An email to activate your account is sent to your registered email address. Click the link in the email to activate and sign in to your Snowflake account.

Hevo provides you with a ready-to-use script to create the resources needed to configure your Snowflake Edge Destination.

Follow these steps to run the script:

-

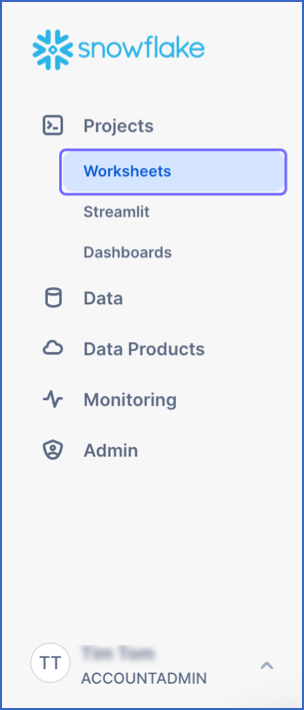

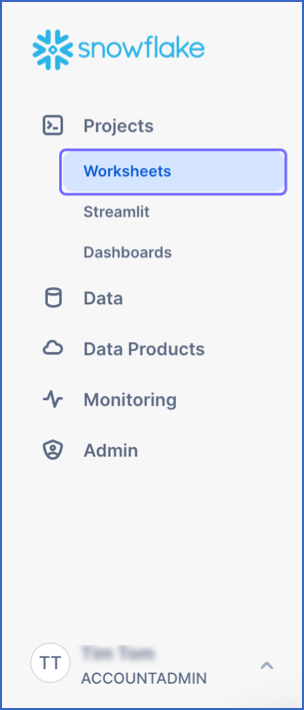

Log in to your Snowflake account.

-

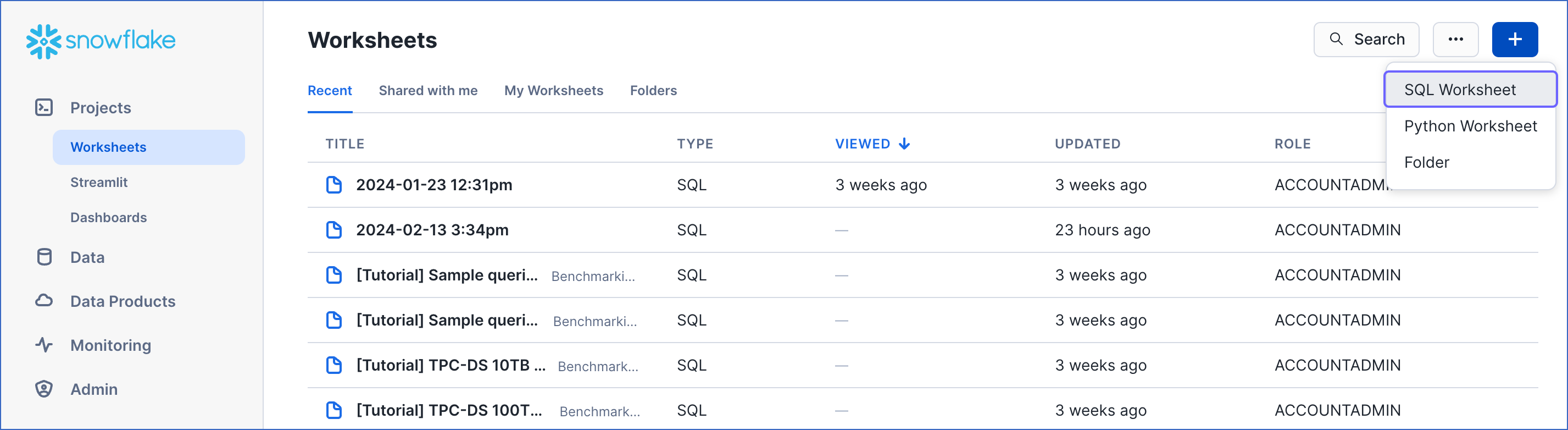

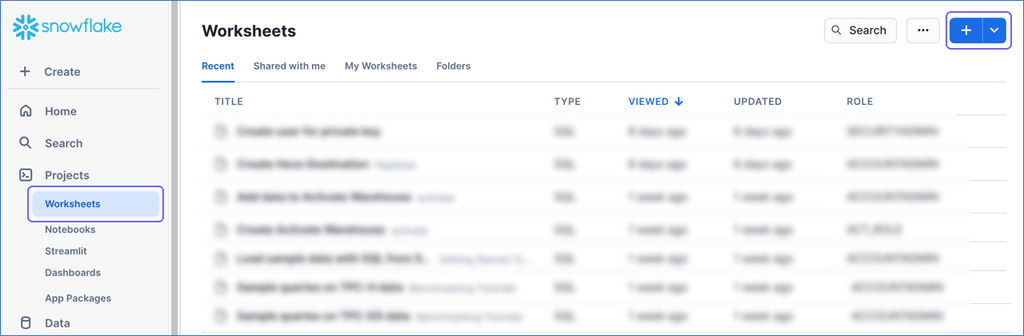

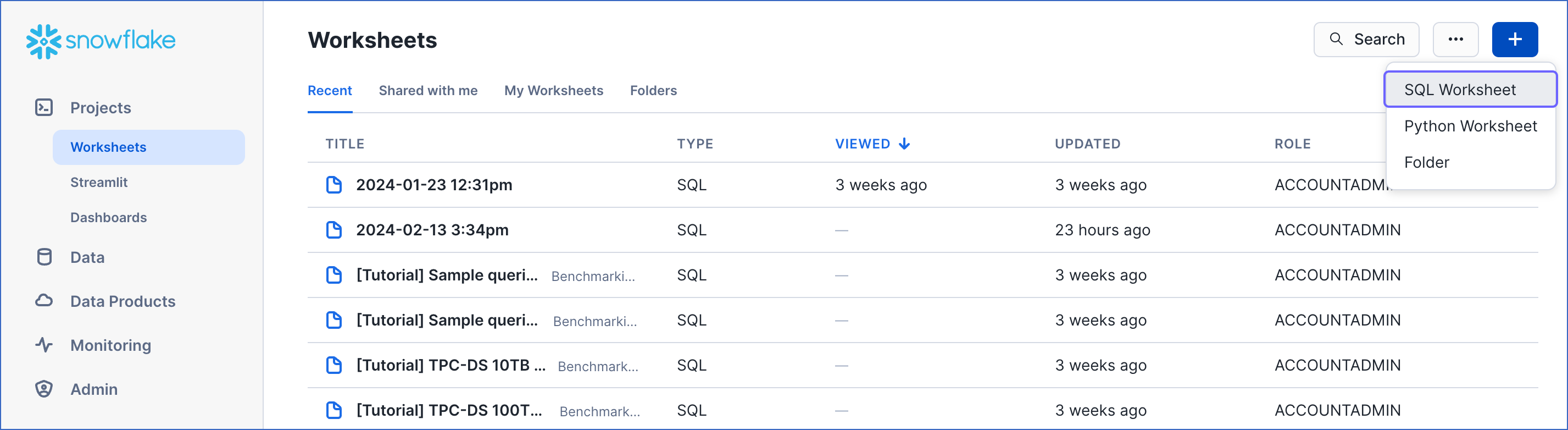

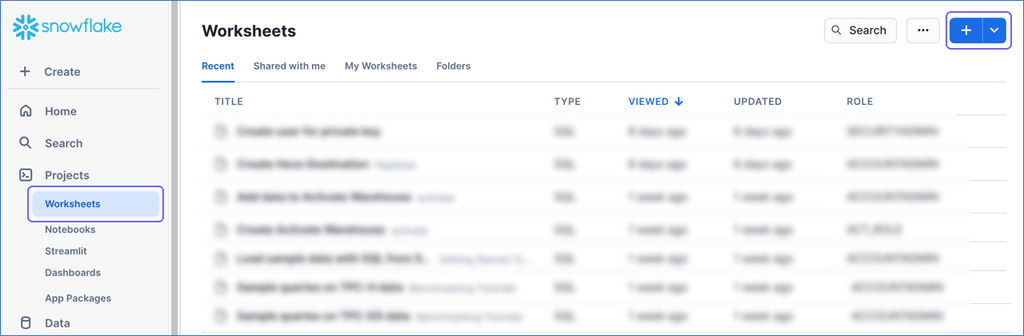

In the left navigation pane, under Projects, click Worksheets.

-

In the top right corner of the Worksheets tab, click the + icon and select SQL Worksheet from the drop-down to create a worksheet.

-

Copy the following script and paste it into the SQL Worksheet. Replace the sample values provided for warehouse_name, database_name, and schemaName (in lines 2-4 of the script) with your own. You can specify a new warehouse, database, and schema name to create them now or use the names of pre-existing resources.

-- Create variables for warehouse/database (needs to be uppercase for objects)

set warehouse_name = 'HOGWARTS'; -- Replace "HOGWARTS" with the name of your warehouse

set database_name = 'RON'; -- Replace "RON" with the name of your database

set schemaName = 'DARK_ARTS'; -- Replace "DARK_ARTS" with the database schema name

set db_schema = concat($database_name, '.', $schemaName);

begin;

-- Change role to sysadmin for the warehouse/database steps

use role sysadmin;

-- Create a warehouse for Hevo only if it does not exist

create

warehouse if not exists identifier($warehouse_name)

warehouse_size = xsmall

warehouse_type = standard

auto_suspend = 60

auto_resume = true

initially_suspended = true;

-- Create a database for Hevo only if it does not exist

create database if not exists identifier($database_name);

-- Create a schema for Hevo only if it does not exist

use role accountadmin;

create schema IF not exists identifier($db_schema);

commit;

Note: The values for warehouse_name, database_name, and schemaName must be in upper case.

-

Press CMD + A (Mac) or CTRL + A (Windows) inside the worksheet area to select the script.

-

Press CMD + return (Mac) or CTRL + Enter (Windows) to run the script.

-

Once the script runs successfully, you can specify the warehouse, database, and schema names (from lines 2-4 of the script) to connect your warehouse to Hevo while creating a Snowflake Destination in Edge.

Create a Snowflake User and Grant Permissions

Hevo does not need a user with the ACCOUNTADMIN role to connect to your Snowflake warehouse. You can create a non-administrative user and assign a custom role to it or use one of the admin roles. Hevo provides you with a ready-to-use script to create the user and grant it only the essential permissions required by Hevo to load data into your Snowflake warehouse.

Perform these steps to run the script:

-

Follow Steps 1-3 from the section above.

-

Copy the following script and paste it into the SQL Worksheet. Replace the sample values provided for role_name, user_name, and user_password (in lines 7-9 of the script) with your own. Also, substitute those for existing_warehouse_name, existing_database_name, and existing_schemaName (in lines 2-4 of the script) with the names of pre-existing resources or those created by the script above.

Note: The following script creates the role and user; it does not create the warehouse, database, or schema.

-- Create variables for the resources created by the script in the Create and Configure your Snowflake Warehouse section

set existing_warehouse_name = 'HOGWARTS'; -- Replace "HOGWARTS" with the name of the warehouse created earlier for Hevo

set existing_database_name = 'RON'; -- Replace "RON" with the name of the database created earlier for Hevo

set existing_schemaName = 'DARK_ARTS'; -- Replace "DARK_ARTS" with the schema name created earlier for Hevo

-- Create variables for user/password/role/warehouse/database/schema

set role_name = 'HEVO'; -- Replace "HEVO" with your role name

set user_name = 'HARRY_POTTER'; -- Replace "HARRY_POTTER" with your username

set user_password = 'Gryffindor'; -- Replace "Gryffindor" with the user password

set db_schema = concat($existing_database_name, '.', $existing_schemaName);

begin;

-- Change the role to securityadmin for user/role steps

use role securityadmin;

-- Create a role for Hevo

create role if not exists identifier($role_name);

grant role identifier($role_name) to role SYSADMIN;

-- Create a user for Hevo

create user if not exists identifier($user_name)

password = $user_password

default_role = $role_name

default_warehouse = $existing_warehouse_name;

-- Grant access to the user

grant role identifier($role_name) to user identifier($user_name);

-- Grant the Hevo role access to the warehouse

use role sysadmin;

grant USAGE

on warehouse identifier($existing_warehouse_name)

to role identifier($role_name);

-- Grant the Hevo role access to the database and existing schemas

use role accountadmin;

grant CREATE SCHEMA, MONITOR, USAGE, MODIFY

on database identifier($existing_database_name)

to role identifier($role_name);

GRANT USAGE, MONITOR, CREATE TABLE, CREATE EXTERNAL TABLE, MODIFY ON SCHEMA identifier($db_schema) TO ROLE identifier($role_name);

-- Grant the Hevo role access to future schemas and tables

use role accountadmin;

grant SELECT on future tables in database identifier($existing_database_name) to role identifier($role_name);

grant MONITOR, USAGE, MODIFY on future schemas in database identifier($existing_database_name) to role identifier($role_name);

commit;

Note: The values for all the variables, such as role_name and user_name, must be in upper case.

-

Press CMD + A (Mac) or CTRL + A (Windows) inside the worksheet area to select the script.

-

Press CMD + return (Mac) or CTRL + Enter (Windows) to run the script.

-

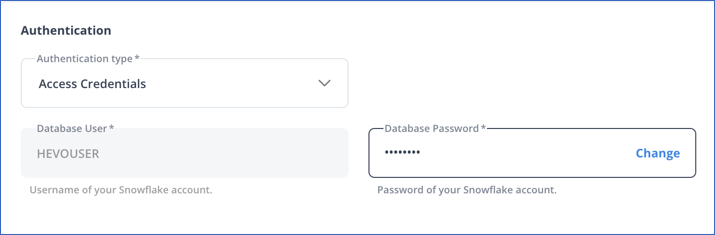

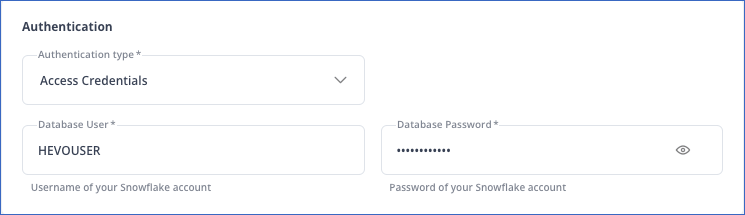

Once the script runs successfully, you can specify the values of user_name and user_password (from lines 8-9 of the script) when connecting to your Snowflake warehouse using access credentials.

Note: Hevo recommends connecting to the Snowflake warehouse using key pair authentication.

If you are a user in a Snowflake account created after the BCR Bundle 2024_08, Snowflake recommends connecting to ETL applications, such as Hevo, through a service user. For this, run the following command:

ALTER USER <your_snowflake_user> SET TYPE = SERVICE;

Replace the placeholder value in the command above with your own. For example, <your_snowflake_user> with HARRY_POTTER.

Note: New service users will not be able to connect to Hevo via password authentication; they must connect with a key pair. Read Obtain a Private and Public Key Pair for the steps to create a key pair.

Obtain a Private and Public Key Pair (Recommended Method)

You can authenticate Hevo’s connection to your Snowflake data warehouse using a public-private key pair. For this, you need to:

-

Generate a private key.

-

Generate the public key for your private key.

-

Assign the public key to your Snowflake user.

1. Generate a private key

You can connect to Hevo using an encrypted or unencrypted private key.

Note: Hevo supports only private keys encrypted using the Public-Key Cryptography Standards (PKCS) #8-based triple DES algorithm.

Open a terminal window, and on the command line, do one of the following:

-

To generate an unencrypted private key, run the command:

openssl genrsa 2048 | openssl pkcs8 -topk8 -inform PEM -out <unencrypted_key_name> -nocrypt

-

To generate an encrypted private key, run the command:

openssl genrsa 2048 | openssl pkcs8 -topk8 -v2 des3 -inform PEM -out <encrypted_key_name>

You will be prompted to set an encryption password. This is the passphrase that you need to provide while connecting to your Snowflake Edge Destination using key pair authentication.

Note: Replace the placeholder values in the commands above with your own. For example, <encrypted_key_name> with encrypted_rsa_key.p8.

The private key is generated in the PEM format.

-----BEGIN ENCRYPTED PRIVATE KEY-----

MIIFJDBWBg...

----END ENCRYPTED PRIVATE KEY-----

Save the private key file in a secure location and provide it while connecting to your Snowflake Edge Destination using key pair authentication.

2. Generate a public key

To use a key pair for authentication, you must generate a public key for the private key created in the step above. For this:

Open a terminal window, and on the command line, run the following command:

openssl rsa -in <private_key_file> -pubout -out <public_key_file>

Note:

-

Replace the placeholder values in the command above with your own. For example, <private_key_file> with encrypted_rsa_key.p8.

-

If you are generating a public key for an encrypted private key, you will need to provide the encryption password used to create the private key.

The public key is generated in the PEM format.

-----BEGIN PUBLIC KEY-----

MIIBIjANBgk...

-----END PUBLIC KEY-----

Save the public key file in a secure location. You must associate this public key with the Snowflake user that you created for Hevo.

3. Assign the public key to a Snowflake user

To authenticate Hevo’s connection to your Snowflake warehouse using a key pair, you must associate the public key generated in the step above with the user that you created for Hevo. To do this:

-

Log in to your Snowflake account as a user with the SECURITYADMIN role or a higher role.

-

In the left navigation bar, click Projects, and then click Worksheets.

-

In the top right corner of the Worksheets tab, click the + icon to create a SQL worksheet.

-

Run the following command in the SQL worksheet:

ALTER USER <your_snowflake_user> SET RSA_PUBLIC_KEY='<public_key>';

// Example

ALTER USER HARRY_POTTER set RSA_PUBLIC_KEY='MIIBIjANBgk...';

Note:

-

Replace the placeholder values in the command above with your own. For example, <your_snowflake_user> with HARRY_POTTER.

-

Set the public key value to the content between -----BEGIN PUBLIC KEY----- and -----END PUBLIC KEY-----.

To check whether the public key is configured correctly, you can follow the steps provided in the verify the user’s public key fingerprint section.

Obtain your Snowflake Account URL

The organization name and account name are visible in your Snowflake web interface URL.

For most accounts, the URL looks like https://<orgname>-<account_name>.snowflakecomputing.com.

For example, https://hevo-westeros.snowflakecomputing.com. Here, hevo is the organization name and westeros is your account name.

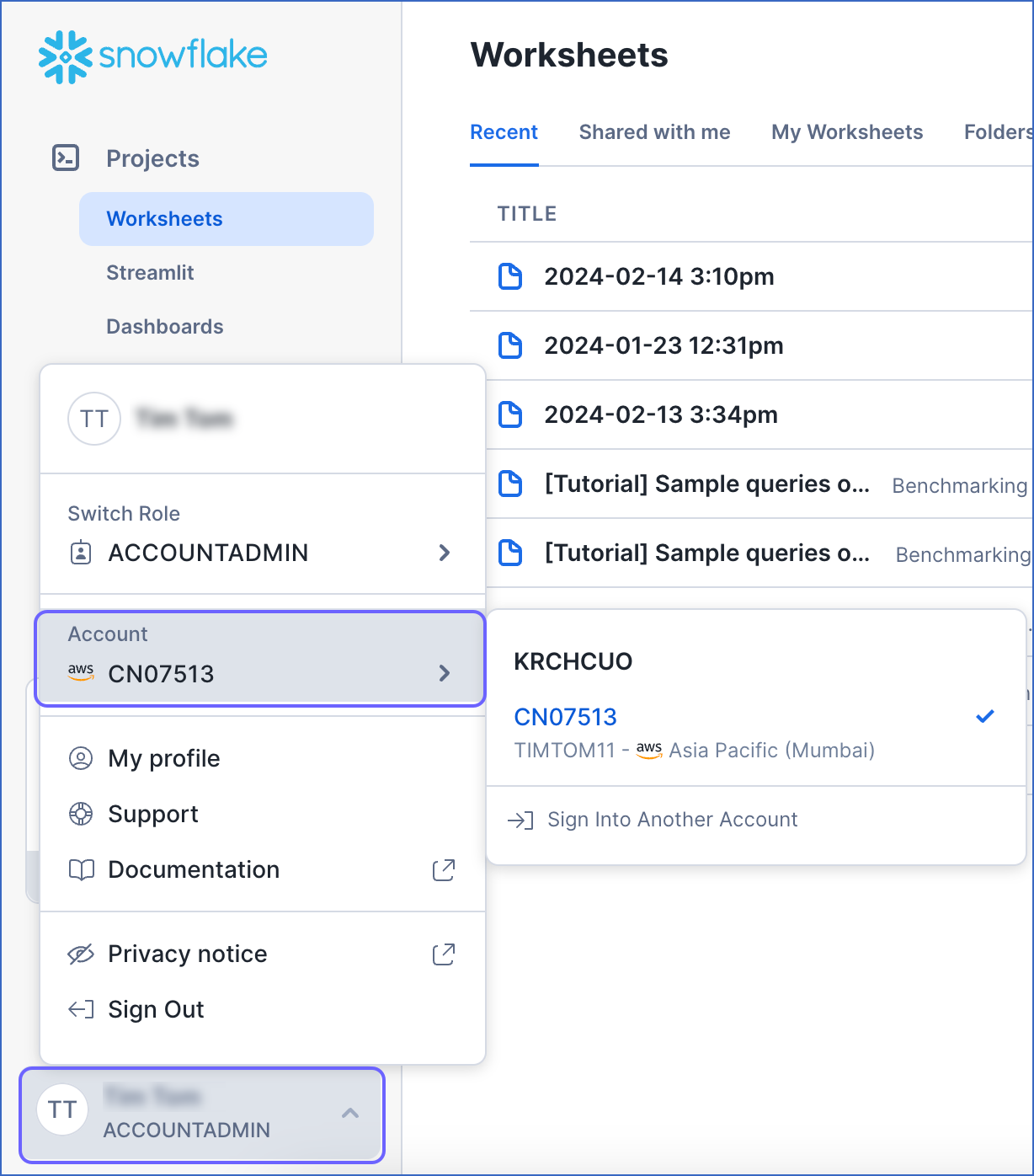

Perform the following steps to obtain your Snowflake account URL:

-

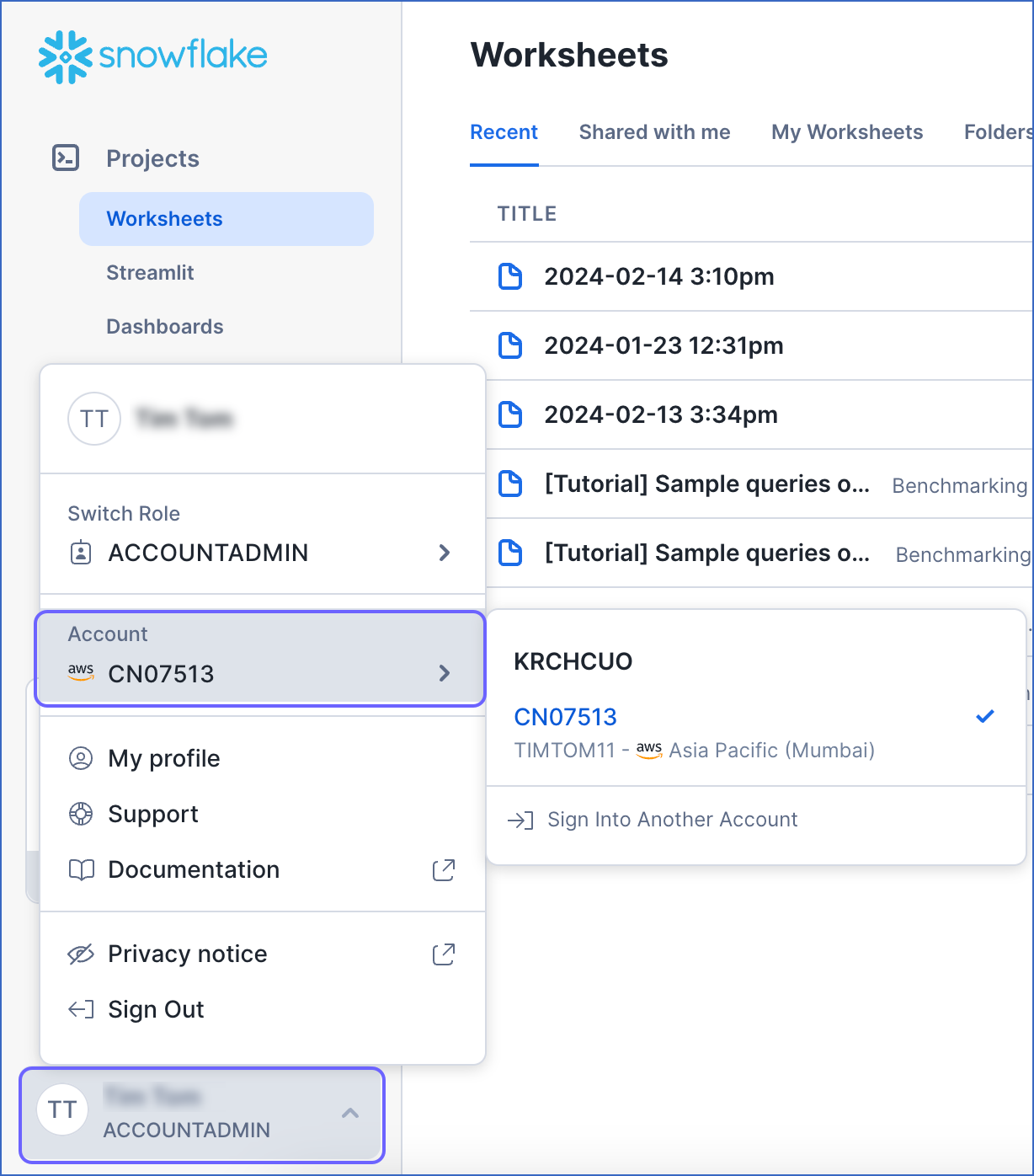

Log in to your Snowflake instance.

-

Navigate to the bottom of the left navigation pane, click the account selector, and then hover over the Account section.

-

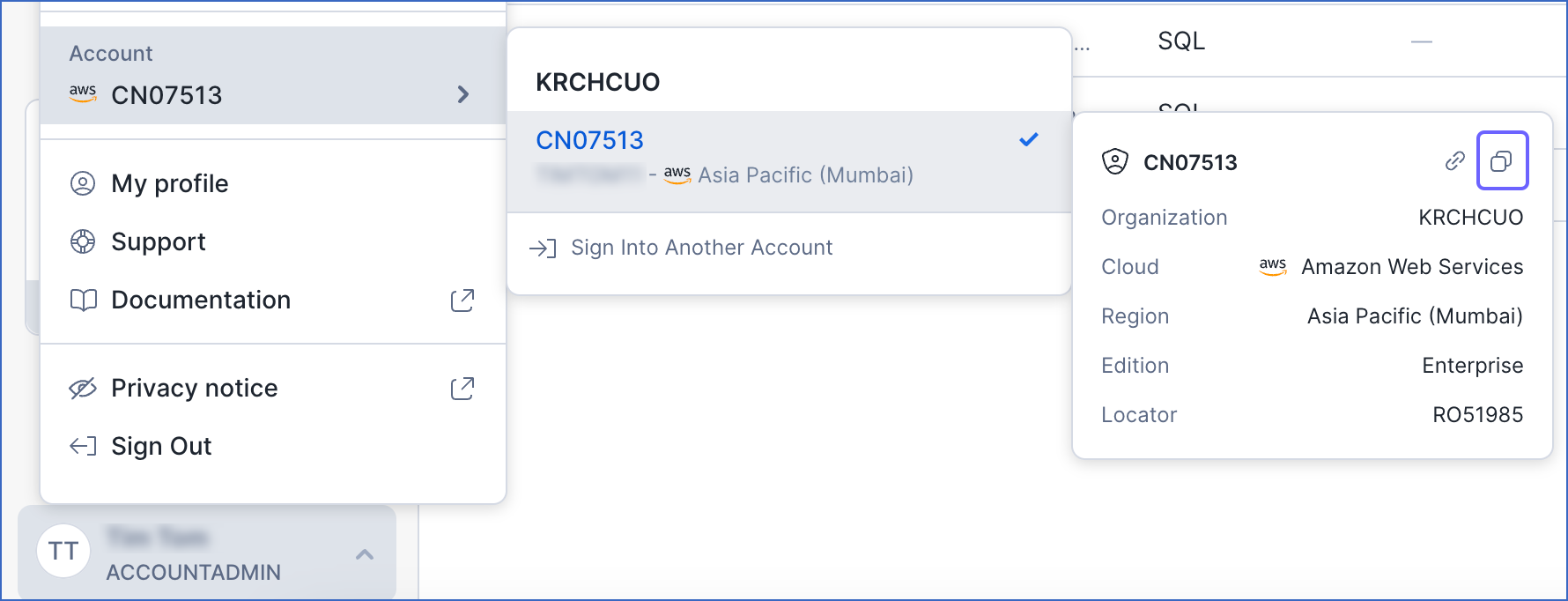

In the pop-up, locate the account whose URL you want to obtain and hover over it.

-

In the account details pop-up dialog, click the Copy icon to copy the account identifier, and save it securely.

The account identifier is provided in the format <orgname>.<account_name>. To convert it to the account URL, substitute the values of orgname and account_name in the format https://<orgname>-<account_name>.snowflakecomputing.com.

For example, if the account identifier is KRCHCUO.CN07513, the account URL is https://krchcuo-cn07513.snowflakecomputing.com.

Use this URL while configuring your Destination.

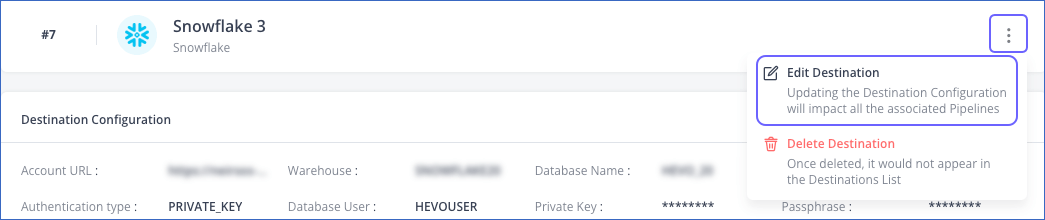

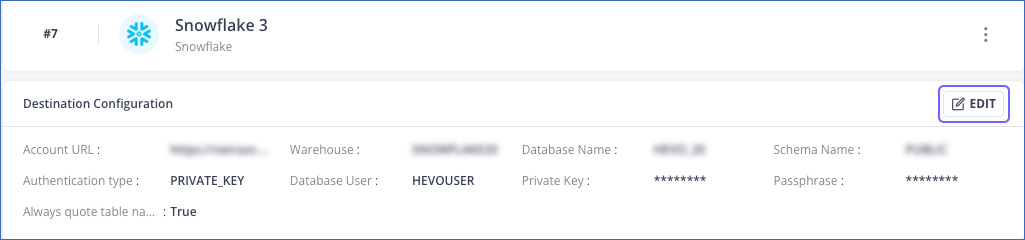

) icon to access the Destination Actions menu, and then click Edit Destination.

) icon to access the Destination Actions menu, and then click Edit Destination.

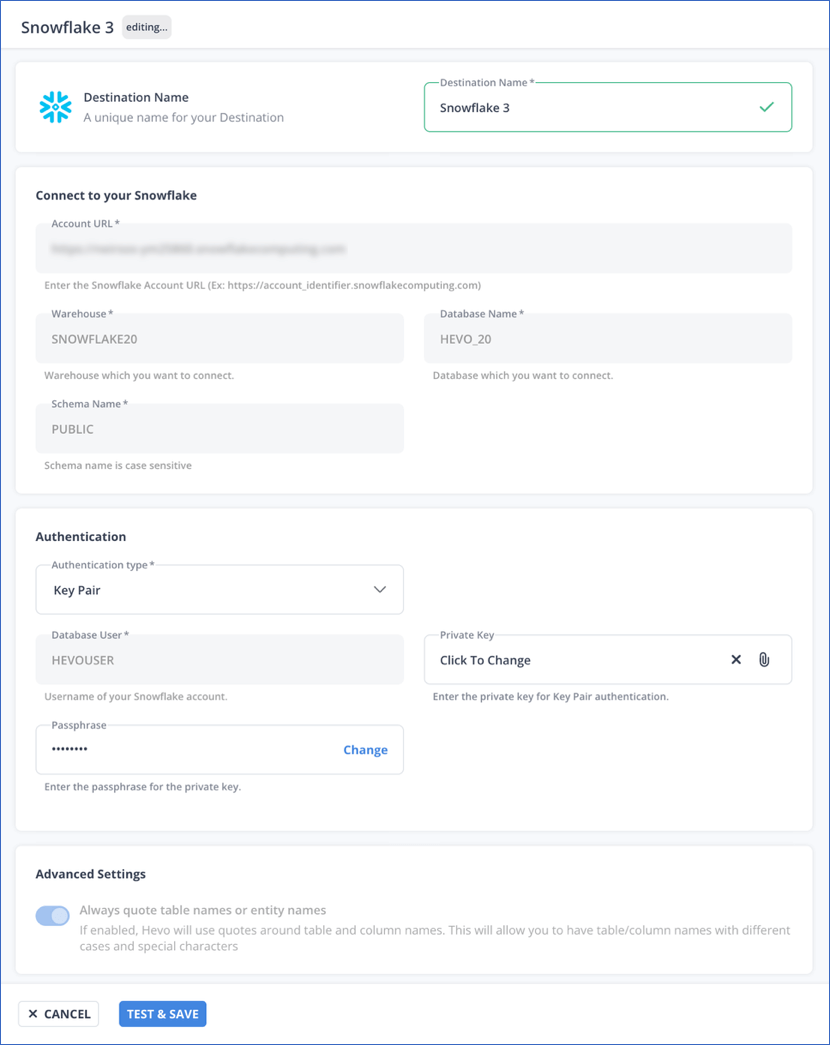

) icon to upload your encrypted or non-encrypted private key file. As the database user configured in your Destination cannot be changed, ensure that the public key corresponding to the uploaded private key is assigned to it.

) icon to upload your encrypted or non-encrypted private key file. As the database user configured in your Destination cannot be changed, ensure that the public key corresponding to the uploaded private key is assigned to it.