Creating an Edge Pipeline


Edge Pipeline is now available for Public Review. You can explore and evaluate its features and share your feedback.

You can create a Pipeline in Hevo to synchronize data from your Source with a Destination. To get started with creating a Pipeline, you need:

- An active Hevo account. You can start with a 14-day, full-featured free trial account.

- Access to the Source system where your data resides.

- Access to the Destination system to which the Source data is replicated.

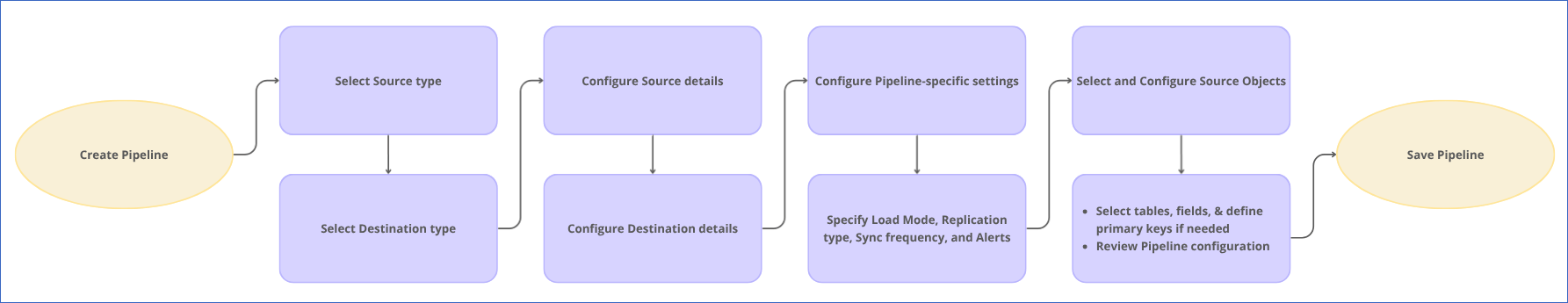

The following diagram illustrates the process to set up an Edge Pipeline.

Refer to the sections below for the detailed steps.

Perform the following steps to create an Edge Pipeline:

-

Log in to your Hevo account. By default, PIPELINES is selected in the Navigation Bar.

-

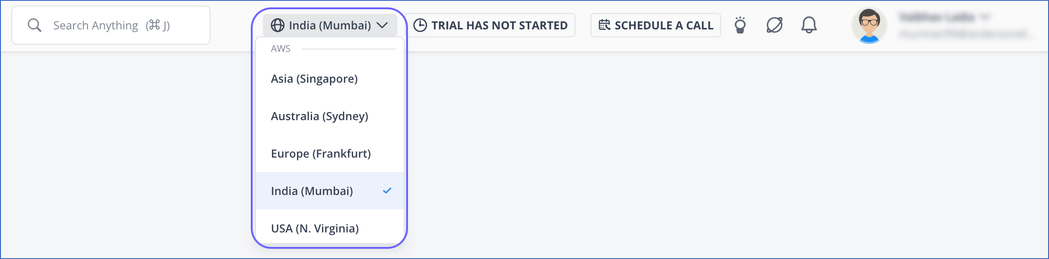

(Optional) In the User Information bar at the top of the page, select the region in which you want to create your Edge Pipeline, if this is different from the default region of your workspace.

-

On the Pipelines page, click + Create Pipeline, and then do the following:

-

Set up your Source from which you want to ingest data.

-

Configure your Destination into which you want to replicate your Source data.

-

Select the Source objects from which you want to ingest data.

-

Once you have created your Edge Pipeline, you are automatically redirected to the Job History tab, which displays the jobs running in your Pipeline. You can click on a job to view its details.

Set up the Source

Perform the following steps to set up your Source in the Edge Pipeline:

-

On the Select Source Type page, select your Source. Here, we are selecting Amazon RDS PostgreSQL.

-

On the Select Destination Type page, select the type of Destination you want to use.

-

On the page that appears, do the following:

-

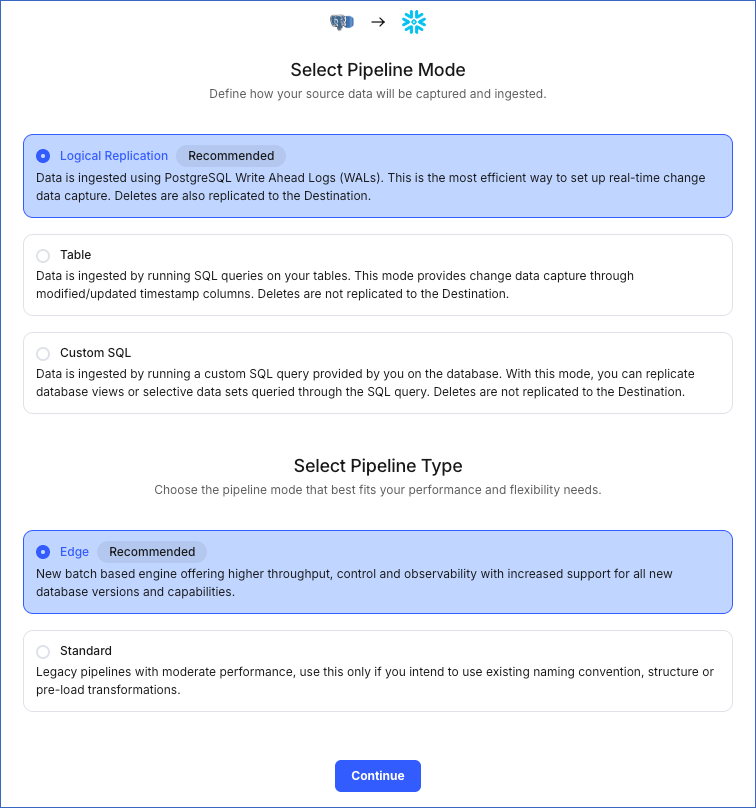

Select Pipeline Mode: Choose Logical Replication. Hevo supports only this mode for Edge Pipelines created with PostgreSQL Source. If you choose any other mode, you can proceed to create a Standard Pipeline.

-

Select Pipeline Type: Choose the type of Pipeline you want to create based on your requirements, and then click Continue.

-

If you select Edge, skip to step 4 below.

-

If you select Standard, read the corresponding Standard Source documentation to configure your Standard Pipeline.

This section is displayed only if all the following conditions are met:

-

The selected Destination type is supported in Edge.

-

The Pipeline mode is set to Logical Replication.

-

Your Team was created before September 15, 2025, and has an existing Pipeline created with the same Destination type and Pipeline mode.

For Teams that do not meet the above criteria, if the selected Destination type is supported in Edge and the Pipeline mode is set to Logical Replication, you can proceed to create an Edge Pipeline. Otherwise, you can proceed to create a Standard Pipeline.

-

-

-
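The display rule for the Select Pipeline Type section can be restated as a small decision function. The following Python sketch is illustrative only: the function `pipeline_type_options`, its parameters, and the mode string `"Logical Replication"` are hypothetical stand-ins for the choices you make in the UI, not a Hevo API.

```python
from datetime import date

# Cutoff from the conditions above: only Teams created before this date
# may see the Select Pipeline Type section.
EDGE_CHOICE_CUTOFF = date(2025, 9, 15)


def pipeline_type_options(
    destination_supported_in_edge: bool,
    pipeline_mode: str,
    team_created_on: date,
    has_pipeline_with_same_destination_and_mode: bool,
) -> list[str]:
    """Return the Pipeline types available (hypothetical illustration).

    Two entries mean the Select Pipeline Type section is displayed and you
    choose; a single entry means you proceed with that type directly.
    """
    edge_possible = (
        destination_supported_in_edge and pipeline_mode == "Logical Replication"
    )
    if not edge_possible:
        return ["Standard"]
    if (
        team_created_on < EDGE_CHOICE_CUTOFF
        and has_pipeline_with_same_destination_and_mode
    ):
        return ["Edge", "Standard"]
    return ["Edge"]


# A Team created in 2024 with a matching existing Pipeline gets the choice.
print(pipeline_type_options(True, "Logical Replication", date(2024, 1, 10), True))
# ['Edge', 'Standard']
```

Checking the Edge-compatibility conditions first mirrors the text: a Destination that is not supported in Edge, or a mode other than Logical Replication, always leads to a Standard Pipeline, regardless of when the Team was created.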

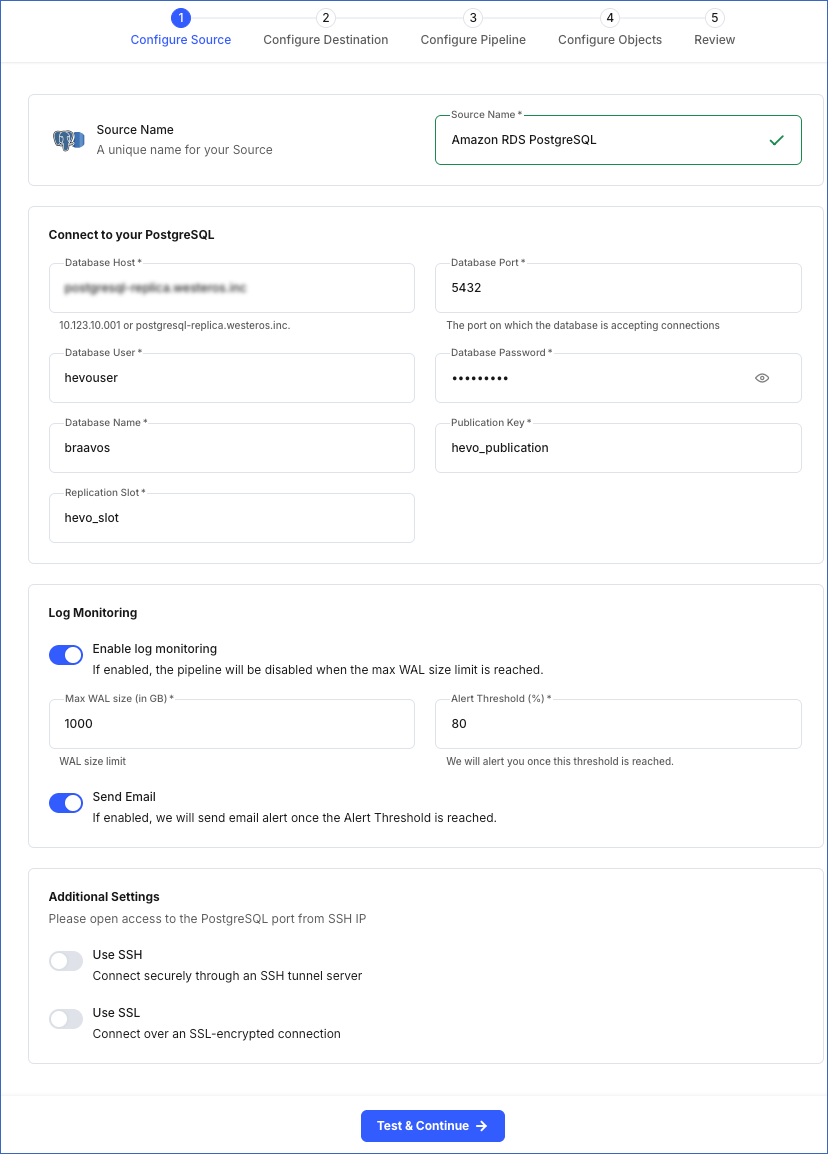

In the Configure Source screen, specify the following:

-

Source Name: A unique name for your Source, not exceeding 255 characters. For example, Amazon RDS PostgreSQL.

-

In the Connect to your PostgreSQL section:

Note: In Edge Pipelines, Hevo replicates data using the database logs.

-

Database Host: The PostgreSQL host’s IP address or DNS. For example, 10.123.10.001 or postgresql.westeros.inc.

Note: For URL-based hostnames, exclude the http:// or https:// part. For example, if the hostname URL is https://postgres.database.com, enter postgres.database.com.

-

Database Port: The port on which your PostgreSQL server listens for connections. Default value: 5432.

-

Database User: The user who has read-only permission on your database. For example, hevouser.

-

Database Password: The password for your database user.

-

Database Name: The database from which you want to replicate data. For example, dvdrental.

-

Publication Key: The name of the publication added in your Source database to track changes in the database tables. Read publications to understand them and learn how to create them.

-

Replication Slot: The name of the replication slot created for your Source database to stream changes from the Write-Ahead Logs (WALs) to Hevo for incremental ingestion. Refer to the Create a replication slot section in the respective Source documentation for the steps to create it.

-

-

Log Monitoring: Enable this option if you want Hevo to disable your Pipeline when the size of the WAL being monitored reaches the set maximum value. Specify the following:

-

Max WAL Size (in GB): The maximum allowable size of the Write-Ahead Logs that you want Hevo to monitor. Specify a number greater than 1.

-

Alert Threshold (%): The percentage limit for the WAL, whose size Hevo is monitoring. An alert is sent when this threshold is reached. Specify a value between 50 to 80. For example, if you set the Alert Threshold to 80, Hevo sends a notification when the WAL size is at 80% of the Max WAL Size specified above.

-

Send Email: Enable this option to send an email when the WAL size has reached the specified Alert Threshold percentage.

If this option is turned off, Hevo does not send an email alert.

-

-
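The Log Monitoring settings combine into two limits: an alert at Alert Threshold percent of the Max WAL Size, and Pipeline disablement when the WAL reaches the Max WAL Size itself. The sketch below computes both. The helper names and status labels are hypothetical, and treating 50 and 80 as inclusive bounds is an assumption.

```python
def wal_alert_size_gb(max_wal_size_gb: float, alert_threshold_pct: float) -> float:
    """Return the WAL size (GB) at which the alert is sent."""
    if max_wal_size_gb <= 1:
        raise ValueError("Max WAL Size must be greater than 1 GB")
    # Assumption: 50 and 80 are both allowed values.
    if not 50 <= alert_threshold_pct <= 80:
        raise ValueError("Alert Threshold must be between 50 and 80")
    return max_wal_size_gb * alert_threshold_pct / 100


def wal_status(
    current_wal_gb: float, max_wal_size_gb: float, alert_threshold_pct: float
) -> str:
    """Classify the monitored WAL size (hypothetical labels)."""
    alert_at = wal_alert_size_gb(max_wal_size_gb, alert_threshold_pct)
    if current_wal_gb >= max_wal_size_gb:
        return "disable_pipeline"  # maximum reached: Hevo disables the Pipeline
    if current_wal_gb >= alert_at:
        return "alert"  # threshold reached: notification (email if Send Email is on)
    return "ok"


# With Max WAL Size = 50 GB and Alert Threshold = 80%, the alert fires at 40 GB.
print(wal_alert_size_gb(50, 80))  # 40.0
```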

  - Additional Settings:

    - Connect through SSH: Enable this option to connect Hevo to your PostgreSQL database host through an SSH tunnel instead of connecting directly. This adds a layer of security to your database by not exposing your PostgreSQL setup to the public.

      If this option is turned off, you must configure your Source to accept connections from Hevo’s IP addresses. You can do this by adding Hevo’s IP addresses to the database IP allowlist. Refer to the documentation for your PostgreSQL variant for the steps to do this.

    - Use SSL: Enable this option to use an SSL-encrypted connection. Specify the following:

      - CA File: The file containing the SSL server certificate authority (CA).

      - Client Certificate: The client’s public key certificate file.

      - Client Key: The client’s private key file.

- Click Test & Continue to test the connection to your PostgreSQL Source. Once the test is successful, you can set up your Destination.

Select and Configure your Destination

You must configure your Destination after you have set up the Source. To do this:

-

On the Create Pipeline page, in the Selection screen under Configure Destination, do one of the following:

-

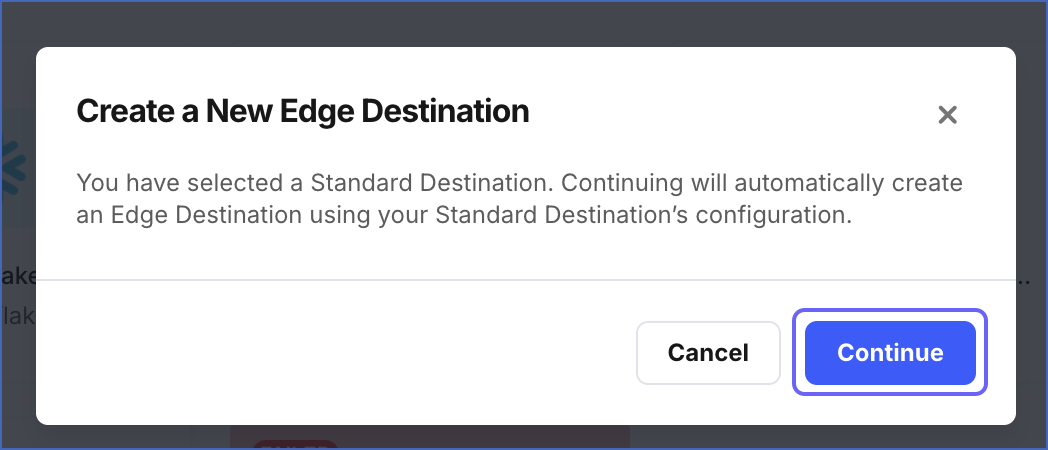

On the Select Destination page, you can select an existing Edge Destination or copy a Standard Destination to reuse its configuration and field values. To copy a Standard Destination:

-

Click the Destination you want to copy.

-

In the Create a New Edge Destination pop-up dialog, click Continue.

-

On the Add Destination page, review and specify values for any fields that were not available in the Standard Destination.

-

-

Click Add Destination to configure a new Destination, and then follow these steps:

Note: You must select Create New Destination if you are creating your first Edge Pipeline or have not created any Edge Destinations.

-

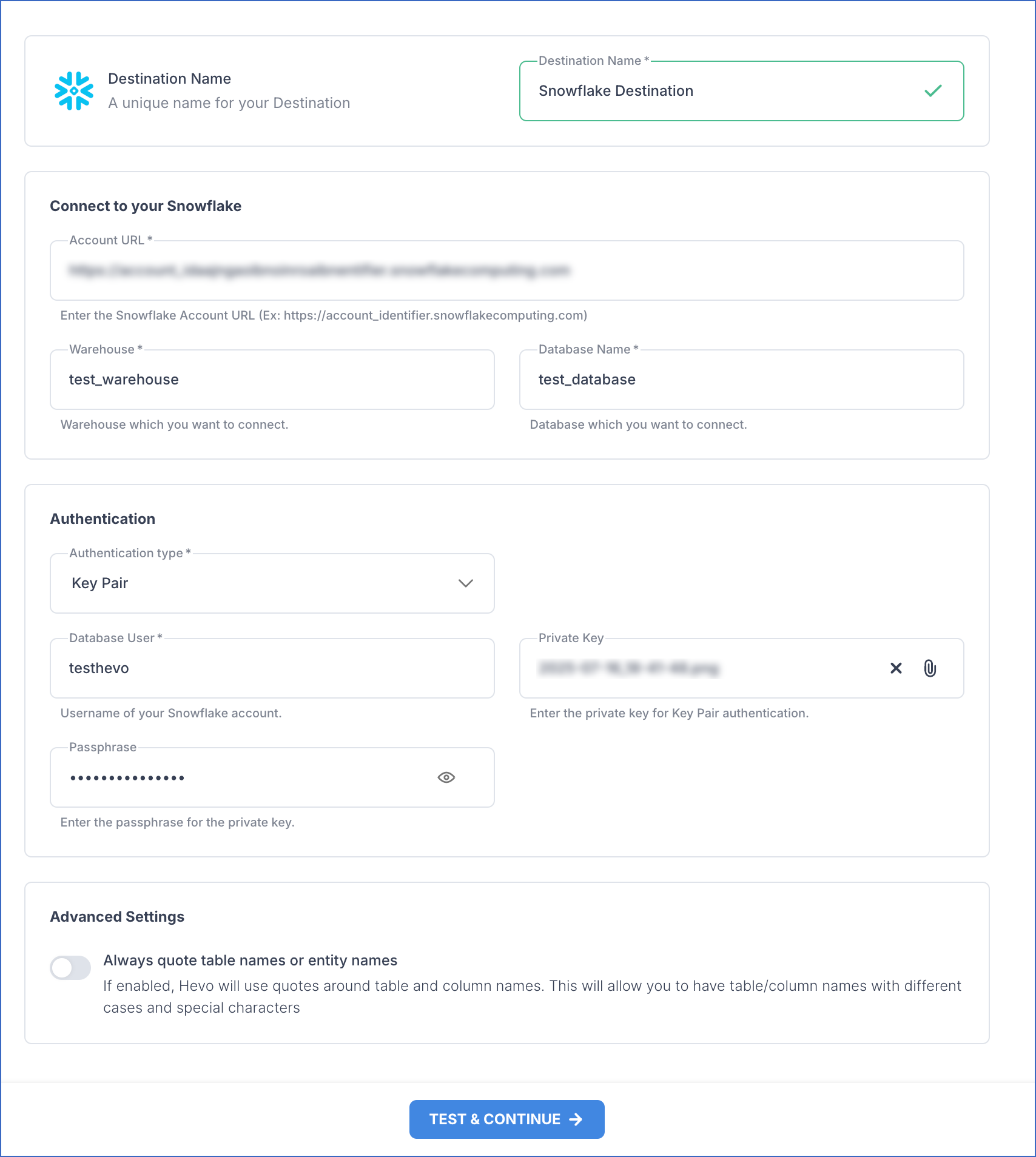

Select your Destination type. Here, we are selecting Snowflake.

-

In the Snowflake screen, specify the following:

-

Destination Name: A unique name for your Destination, not exceeding 255 characters. For example, Snowflake Destination.

-

In the Connect to your Snowflake section:

-

Account URL: The URL for connecting to the Snowflake data warehouse. For example, https://xy12345.ap-southeast-1.snowflakecomputing.com.

-

Warehouse: The name of the Snowflake warehouse where Hevo will run the SQL queries and perform DML operations for data replication. For example, SNOWFLAKE20.

-

Database Name: The name of the database in the Destination warehouse where the data is to be loaded. For example, HEVO_20.

Note: All the field values are case-sensitive.

-

In the Authentication section, select an Authentication type from the drop-down menu. Here, we have chosen Key Pair.

-

Key Pair: Connect to your Snowflake warehouse using a public and private key pair.

-

Database User: A user with a non-administrative role created in the Snowflake. This user must be the one to whom you have assigned the public key associated with your private key.

-

Private Key: A cryptographic password used along with a public key to generate digital signatures. Click the attach (

) icon to upload your private key file.

) icon to upload your private key file. -

Passphrase: The password given while generating the encrypted private key. Leave this field blank if you have attached a non-encrypted private key.

-

-

-

Advanced Settings:

- Always quote table names or entity names: Enable this option to preserve the case of your Source table and column names in the Snowflake Destination.

-

-

-

-

-

Click TEST & CONTINUE to test the connection to your Snowflake Destination. Once the test is successful, you can provide your Pipeline-specific settings.
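The Always quote table names or entity names option matters because of how Snowflake resolves identifiers. Unquoted identifiers are stored and matched in uppercase, while double-quoted identifiers keep their exact case. The following sketch illustrates that rule only. The helper names are hypothetical, this is not how Hevo generates its SQL, and it assumes the default Snowflake setting `QUOTED_IDENTIFIERS_IGNORE_CASE = FALSE`.

```python
def quote_identifier(name: str) -> str:
    """Double-quote a Snowflake identifier, escaping embedded quotes by doubling them."""
    return '"' + name.replace('"', '""') + '"'


def stored_name(name: str, always_quote: bool) -> str:
    """Return the name Snowflake stores for a table or column (simplified).

    When not quoting, assumes `name` is a valid unquoted identifier.
    """
    if always_quote:
        return name  # quoted identifiers keep their exact case
    return name.upper()  # unquoted identifiers are stored in uppercase


print(quote_identifier("customerOrders"))  # "customerOrders"
print(stored_name("customerOrders", always_quote=False))  # CUSTOMERORDERS
```

With quoting enabled, a Source table named customerOrders stays customerOrders in Snowflake. Your queries must then also quote it, for example `SELECT * FROM "customerOrders"`.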

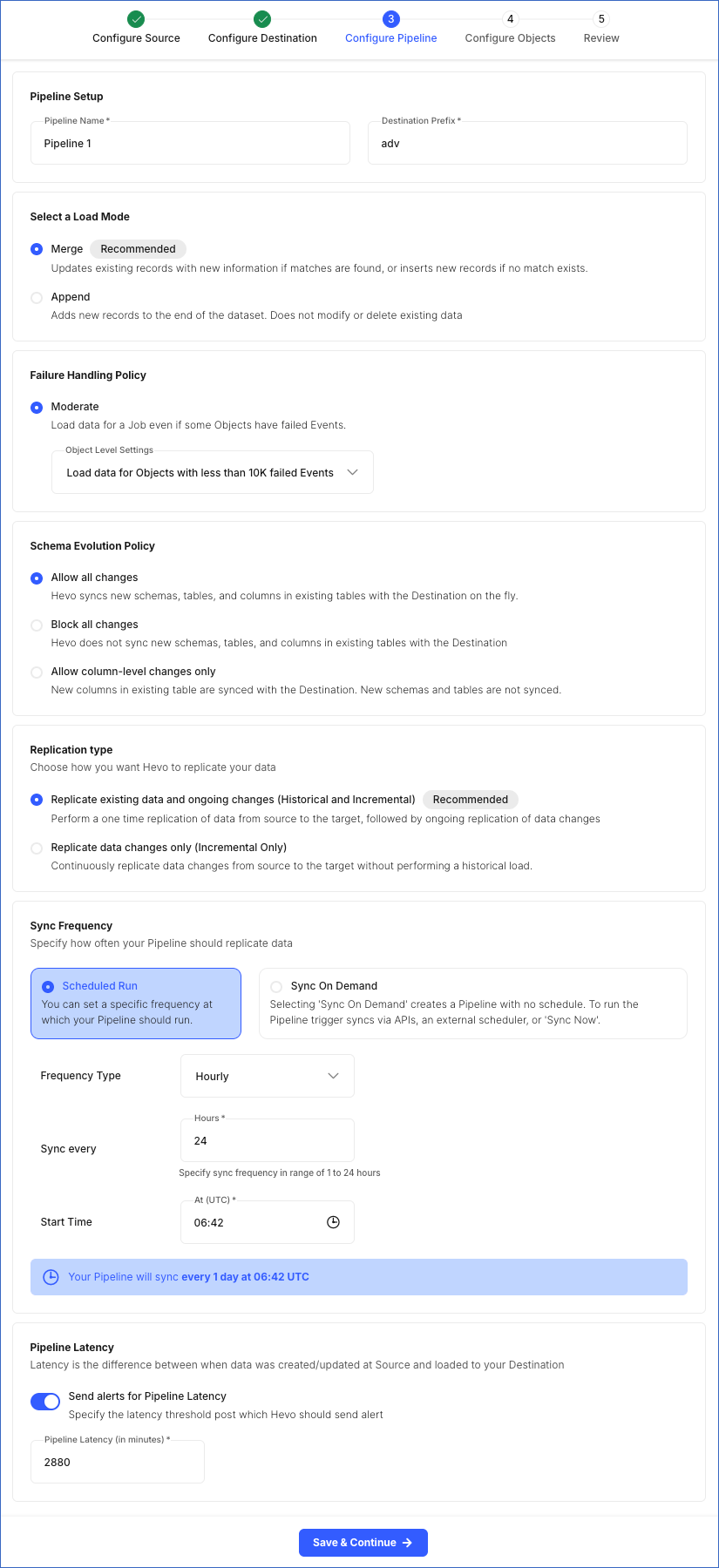

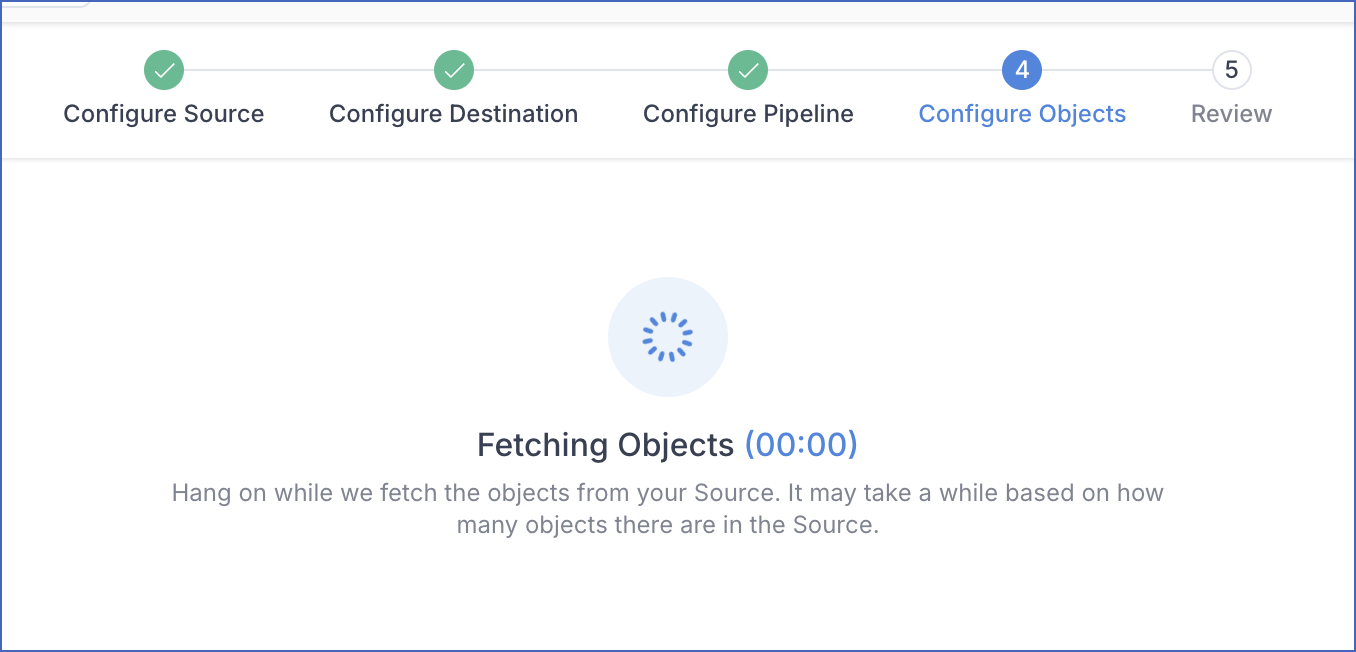

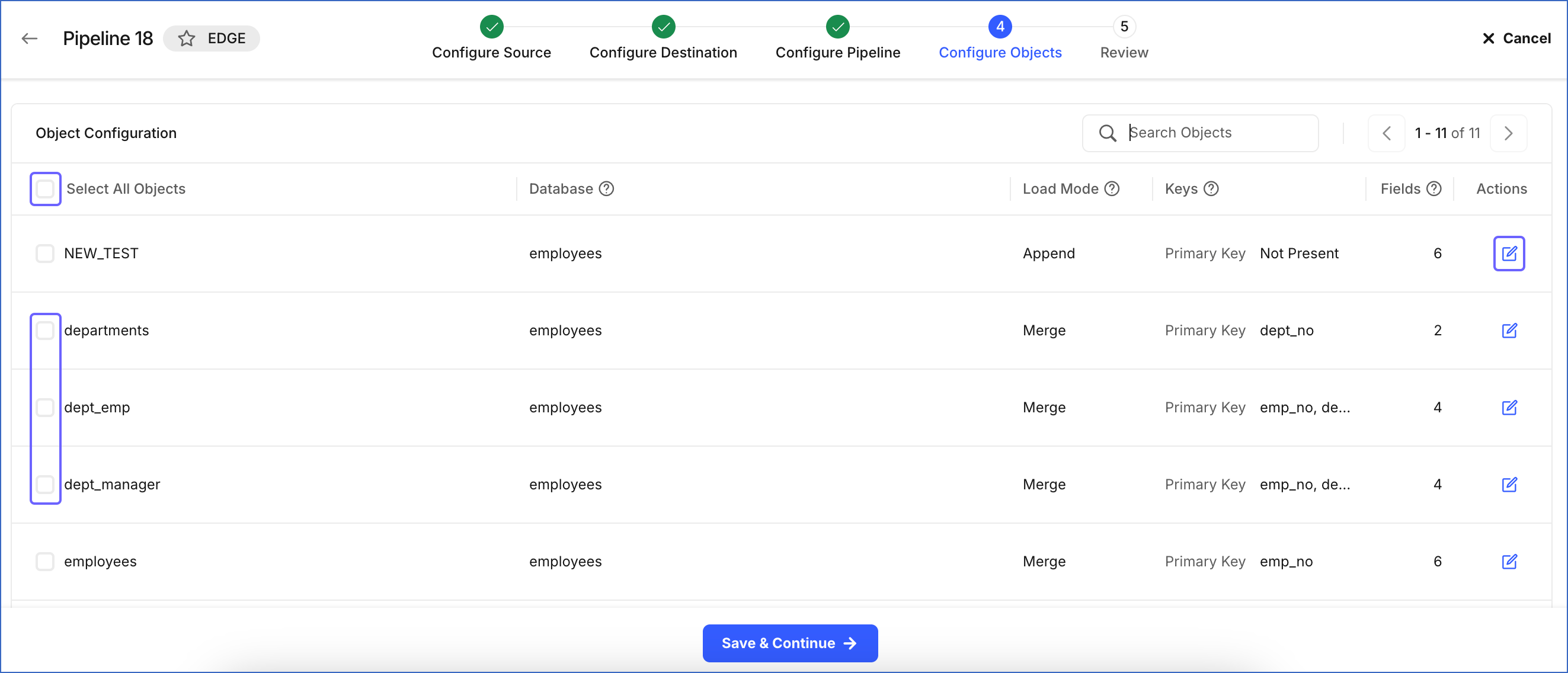

Select and Configure the Source Objects

Once Hevo fetches all the Source objects, you are directed to the Configure Objects page of your Pipeline. On this page, all the objects from the Source database are displayed.

Note: Hevo marks a Source object as Inaccessible if the publication specified in the Publication Key field while configuring the PostgreSQL Source does not include the object, or if the publication is not defined to publish insert, update, and delete operations.
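You can check the rule in this note against the publication's catalog metadata before configuring objects. The following sketch restates it in Python. The `Publication` class and `is_accessible` function are hypothetical. The `pg_publication` catalog (with its `pubinsert`, `pubupdate`, and `pubdelete` columns) and the `pg_publication_tables` view are standard PostgreSQL objects; the comments show them as one way to obtain the inputs. A publication created with `CREATE PUBLICATION ... FOR TABLE ...` and no `publish` option publishes inserts, updates, and deletes by default.

```python
from dataclasses import dataclass

# The inputs can be read from the PostgreSQL catalog, for example:
#   SELECT pubinsert, pubupdate, pubdelete FROM pg_publication WHERE pubname = '<name>';
#   SELECT schemaname, tablename FROM pg_publication_tables WHERE pubname = '<name>';


@dataclass(frozen=True)
class Publication:
    tables: frozenset[tuple[str, str]]  # (schema, table) pairs in the publication
    pubinsert: bool
    pubupdate: bool
    pubdelete: bool


def is_accessible(schema: str, table: str, pub: Publication) -> bool:
    """The object must be in the publication, and the publication must
    publish inserts, updates, and deletes."""
    publishes_dml = pub.pubinsert and pub.pubupdate and pub.pubdelete
    return publishes_dml and (schema, table) in pub.tables


pub = Publication(frozenset({("public", "film"), ("public", "actor")}), True, True, True)
print(is_accessible("public", "film", pub))  # True
print(is_accessible("public", "payment", pub))  # False
```

For a publication defined `FOR ALL TABLES`, `pg_publication_tables` lists every table, so the same membership check applies.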

On the Configure Objects page, perform the following steps to configure the Source objects for data ingestion:

- In the Object Configuration section, do one of the following:

  - Select the check box next to Select All Objects to ingest data from all objects included in the publication.

  - Select the check box next to each object whose data you want to replicate.

- (Optional) Click the Edit icon under Actions to do the following:

  - Select or deselect the Source object fields.

    Note: If data is to be loaded in the Merge mode, you cannot deselect a field that is the primary key of a Source object.

  - Define the primary keys for the selected Source objects if you want to load data to the Destination table in the Merge mode. This step is not required for the Append mode.

- Click Save & Continue. To proceed to the Review page, you must select at least one object for data ingestion.

- In the Summary screen, review all the configurations defined for the Pipeline.

- Click Save Pipeline.

You are automatically redirected to the Job History tab, which displays the jobs running in your Pipeline. You can click on a job to view its details.
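The selection rules on the Configure Objects page amount to a validation step. At least one object must be selected, and in Merge mode the primary key fields must stay selected. The sketch below is a hypothetical model of those rules, not Hevo's code. `ObjectSelection`, `validate_selection`, and the mode strings `"Merge"` and `"Append"` are illustrative. Requiring a defined primary key on every object in Merge mode is an assumption drawn from the primary key step above.

```python
from dataclasses import dataclass, field


@dataclass
class ObjectSelection:
    name: str
    selected_fields: set[str]
    primary_key: set[str] = field(default_factory=set)  # empty if none defined


def validate_selection(objects: list[ObjectSelection], load_mode: str) -> list[str]:
    """Return problems that would block Save & Continue (hypothetical checks)."""
    errors = []
    if not objects:
        errors.append("Select at least one object to proceed.")
    if load_mode == "Merge":
        for obj in objects:
            # Assumption: Merge mode needs a primary key on every selected object.
            if not obj.primary_key:
                errors.append(f"{obj.name}: define a primary key for Merge mode.")
            missing = obj.primary_key - obj.selected_fields
            if missing:
                errors.append(
                    f"{obj.name}: primary key field(s) {sorted(missing)} "
                    "cannot be deselected in Merge mode."
                )
    return errors


film = ObjectSelection("public.film", {"film_id", "title"}, {"film_id"})
print(validate_selection([film], "Merge"))  # []
```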

Revision History

Refer to the following table for the list of key updates made to this page:

| Date | Release | Description of Change |

|---|---|---|

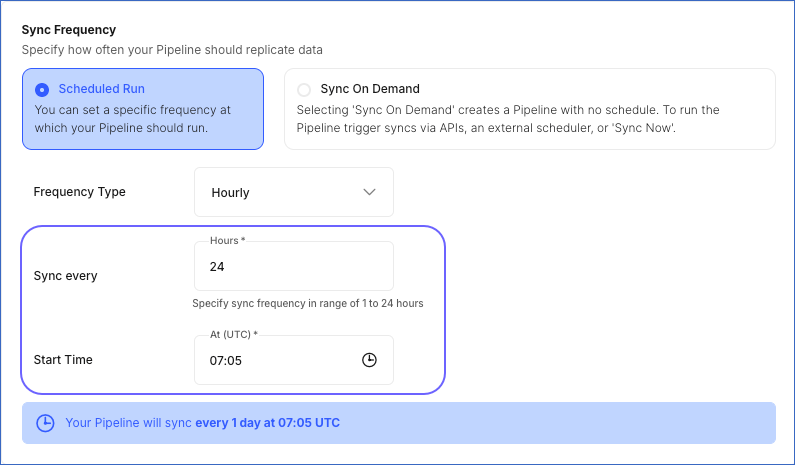

| Feb-04-2026 | NA | Updated section, Configure the Pipeline Settings to add content for syncing data at a specific start time in 24-hour-frequency Pipelines. |

| Jan-23-2026 | NA | Updated section, Configure the Pipeline Settings to clarify that the Destination prefix limitation applies only to Pipelines created with the BigQuery Destination. |

| Jan-20-2026 | NA | Updated section, Configure the Pipeline Settings to add notes for sections that are not displayed for Pipelines created with SaaS Sources. |

| Nov-21-2025 | NA | Added a process flow diagram. |