- Introduction

-

Getting Started

- Creating an Account in Hevo

- Subscribing to Hevo via AWS Marketplace

- Subscribing to Hevo via Snowflake Marketplace

- Connection Options

- Familiarizing with the UI

- Creating your First Pipeline

- Data Loss Prevention and Recovery

-

Data Ingestion

- Types of Data Synchronization

- Ingestion Modes and Query Modes for Database Sources

- Ingestion and Loading Frequency

- Data Ingestion Statuses

- Deferred Data Ingestion

- Handling of Primary Keys

- Handling of Updates

- Handling of Deletes

- Hevo-generated Metadata

- Best Practices to Avoid Reaching Source API Rate Limits

-

Edge

- Getting Started

- Data Ingestion

- Core Concepts

-

Pipelines

- Familiarizing with the Pipelines UI (Edge)

- Creating an Edge Pipeline

- Working with Edge Pipelines

- Object and Schema Management

- Pipeline Job History

- Sources

- Destinations

- Alerts

- Custom Connectors

-

Releases

- Edge Release Notes - February 18, 2026

- Edge Release Notes - February 10, 2026

- Edge Release Notes - February 03, 2026

- Edge Release Notes - January 20, 2026

- Edge Release Notes - December 08, 2025

- Edge Release Notes - December 01, 2025

- Edge Release Notes - November 05, 2025

- Edge Release Notes - October 30, 2025

- Edge Release Notes - September 22, 2025

- Edge Release Notes - August 11, 2025

- Edge Release Notes - July 09, 2025

- Edge Release Notes - November 21, 2024

-

Data Loading

- Loading Data in a Database Destination

- Loading Data to a Data Warehouse

- Optimizing Data Loading for a Destination Warehouse

- Deduplicating Data in a Data Warehouse Destination

- Manually Triggering the Loading of Events

- Scheduling Data Load for a Destination

- Loading Events in Batches

- Data Loading Statuses

- Data Spike Alerts

- Name Sanitization

- Table and Column Name Compression

- Parsing Nested JSON Fields in Events

-

Pipelines

- Data Flow in a Pipeline

- Familiarizing with the Pipelines UI

- Working with Pipelines

- Managing Objects in Pipelines

- Pipeline Jobs

-

Transformations

-

Python Code-Based Transformations

- Supported Python Modules and Functions

-

Transformation Methods in the Event Class

- Create an Event

- Retrieve the Event Name

- Rename an Event

- Retrieve the Properties of an Event

- Modify the Properties for an Event

- Fetch the Primary Keys of an Event

- Modify the Primary Keys of an Event

- Fetch the Data Type of a Field

- Check if the Field is a String

- Check if the Field is a Number

- Check if the Field is Boolean

- Check if the Field is a Date

- Check if the Field is a Time Value

- Check if the Field is a Timestamp

-

TimeUtils

- Convert Date String to Required Format

- Convert Date to Required Format

- Convert Datetime String to Required Format

- Convert Epoch Time to a Date

- Convert Epoch Time to a Datetime

- Convert Epoch to Required Format

- Convert Epoch to a Time

- Get Time Difference

- Parse Date String to Date

- Parse Date String to Datetime Format

- Parse Date String to Time

- Utils

- Examples of Python Code-based Transformations

-

Drag and Drop Transformations

- Special Keywords

-

Transformation Blocks and Properties

- Add a Field

- Change Datetime Field Values

- Change Field Values

- Drop Events

- Drop Fields

- Find & Replace

- Flatten JSON

- Format Date to String

- Format Number to String

- Hash Fields

- If-Else

- Mask Fields

- Modify Text Casing

- Parse Date from String

- Parse JSON from String

- Parse Number from String

- Rename Events

- Rename Fields

- Round-off Decimal Fields

- Split Fields

- Examples of Drag and Drop Transformations

- Effect of Transformations on the Destination Table Structure

- Transformation Reference

- Transformation FAQs

-

Python Code-Based Transformations

-

Schema Mapper

- Using Schema Mapper

- Mapping Statuses

- Auto Mapping Event Types

- Manually Mapping Event Types

- Modifying Schema Mapping for Event Types

- Schema Mapper Actions

- Fixing Unmapped Fields

- Resolving Incompatible Schema Mappings

- Resizing String Columns in the Destination

- Changing the Data Type of a Destination Table Column

- Schema Mapper Compatibility Table

- Limits on the Number of Destination Columns

- File Log

- Troubleshooting Failed Events in a Pipeline

- Mismatch in Events Count in Source and Destination

- Audit Tables

- Activity Log

-

Pipeline FAQs

- Can multiple Sources connect to one Destination?

- What happens if I re-create a deleted Pipeline?

- Why is there a delay in my Pipeline?

- Can I change the Destination post-Pipeline creation?

- Why is my billable Events high with Delta Timestamp mode?

- Can I drop multiple Destination tables in a Pipeline at once?

- How does Run Now affect scheduled ingestion frequency?

- Will pausing some objects increase the ingestion speed?

- Can I see the historical load progress?

- Why is my Historical Load Progress still at 0%?

- Why is historical data not getting ingested?

- How do I set a field as a primary key?

- How do I ensure that records are loaded only once?

- Events Usage

-

Sources

- Free Sources

-

Databases and File Systems

- Data Warehouses

-

Databases

- Connecting to a Local Database

- Amazon DocumentDB

- Amazon DynamoDB

- Elasticsearch

-

MongoDB

- Generic MongoDB

- MongoDB Atlas

- Support for Multiple Data Types for the _id Field

- Example - Merge Collections Feature

-

Troubleshooting MongoDB

-

Errors During Pipeline Creation

- Error 1001 - Incorrect credentials

- Error 1005 - Connection timeout

- Error 1006 - Invalid database hostname

- Error 1007 - SSH connection failed

- Error 1008 - Database unreachable

- Error 1011 - Insufficient access

- Error 1028 - Primary/Master host needed for OpLog

- Error 1029 - Version not supported for Change Streams

- SSL 1009 - SSL Connection Failure

- Troubleshooting MongoDB Change Streams Connection

- Troubleshooting MongoDB OpLog Connection

-

Errors During Pipeline Creation

- SQL Server

-

MySQL

- Amazon Aurora MySQL

- Amazon RDS MySQL

- Azure MySQL

- Generic MySQL

- Google Cloud MySQL

- MariaDB MySQL

-

Troubleshooting MySQL

-

Errors During Pipeline Creation

- Error 1003 - Connection to host failed

- Error 1006 - Connection to host failed

- Error 1007 - SSH connection failed

- Error 1011 - Access denied

- Error 1012 - Replication access denied

- Error 1017 - Connection to host failed

- Error 1026 - Failed to connect to database

- Error 1027 - Unsupported BinLog format

- Failed to determine binlog filename/position

- Schema 'xyz' is not tracked via bin logs

- Errors Post-Pipeline Creation

-

Errors During Pipeline Creation

- MySQL FAQs

- Oracle

-

PostgreSQL

- Amazon Aurora PostgreSQL

- Amazon RDS PostgreSQL

- Azure PostgreSQL

- Generic PostgreSQL

- Google Cloud PostgreSQL

- Heroku PostgreSQL

-

Troubleshooting PostgreSQL

-

Errors during Pipeline creation

- Error 1003 - Authentication failure

- Error 1006 - Connection settings errors

- Error 1011 - Access role issue for logical replication

- Error 1012 - Access role issue for logical replication

- Error 1014 - Database does not exist

- Error 1017 - Connection settings errors

- Error 1023 - No pg_hba.conf entry

- Error 1024 - Number of requested standby connections

- Errors Post-Pipeline Creation

-

Errors during Pipeline creation

-

PostgreSQL FAQs

- Can I track updates to existing records in PostgreSQL?

- How can I migrate a Pipeline created with one PostgreSQL Source variant to another variant?

- How can I prevent data loss when migrating or upgrading my PostgreSQL database?

- Why do FLOAT4 and FLOAT8 values in PostgreSQL show additional decimal places when loaded to BigQuery?

- Why is data not being ingested from PostgreSQL Source objects?

- Troubleshooting Database Sources

- Database Source FAQs

- File Storage

- Engineering Analytics

- Finance & Accounting Analytics

-

Marketing Analytics

- ActiveCampaign

- AdRoll

- Amazon Ads

- Apple Search Ads

- AppsFlyer

- CleverTap

- Criteo

- Drip

- Facebook Ads

- Facebook Page Insights

- Firebase Analytics

- Freshsales

- Google Ads

- Google Analytics 4

- Google Analytics 360

- Google Play Console

- Google Search Console

- HubSpot

- Instagram Business

- Klaviyo v2

- Lemlist

- LinkedIn Ads

- Mailchimp

- Mailshake

- Marketo

- Microsoft Ads

- Onfleet

- Outbrain

- Pardot

- Pinterest Ads

- Pipedrive

- Recharge

- Segment

- SendGrid Webhook

- SendGrid

- Salesforce Marketing Cloud

- Snapchat Ads

- SurveyMonkey

- Taboola

- TikTok Ads

- Twitter Ads

- Typeform

- YouTube Analytics

- Product Analytics

- Sales & Support Analytics

- Source FAQs

-

Destinations

- Familiarizing with the Destinations UI

- Cloud Storage-Based

- Databases

-

Data Warehouses

- Amazon Redshift

- Amazon Redshift Serverless

- Azure Synapse Analytics

- Databricks

- Google BigQuery

- Hevo Managed Google BigQuery

- Snowflake

- Troubleshooting Data Warehouse Destinations

-

Destination FAQs

- Can I change the primary key in my Destination table?

- Can I change the Destination table name after creating the Pipeline?

- How can I change or delete the Destination table prefix?

- Why does my Destination have deleted Source records?

- How do I filter deleted Events from the Destination?

- Does a data load regenerate deleted Hevo metadata columns?

- How do I filter out specific fields before loading data?

- Transform

- Alerts

- Account Management

- Activate

- Glossary

-

Releases- Release 2.45.2 (Feb 16-23, 2026)

- Release 2.45.1 (Feb 09-16, 2026)

- Release 2.45 (Jan 12-Feb 09, 2026)

-

2025 Releases

- Release 2.44 (Dec 01, 2025-Jan 12, 2026)

- Release 2.43 (Nov 03-Dec 01, 2025)

- Release 2.42 (Oct 06-Nov 03, 2025)

- Release 2.41 (Sep 08-Oct 06, 2025)

- Release 2.40 (Aug 11-Sep 08, 2025)

- Release 2.39 (Jul 07-Aug 11, 2025)

- Release 2.38 (Jun 09-Jul 07, 2025)

- Release 2.37 (May 12-Jun 09, 2025)

- Release 2.36 (Apr 14-May 12, 2025)

- Release 2.35 (Mar 17-Apr 14, 2025)

- Release 2.34 (Feb 17-Mar 17, 2025)

- Release 2.33 (Jan 20-Feb 17, 2025)

-

2024 Releases

- Release 2.32 (Dec 16 2024-Jan 20, 2025)

- Release 2.31 (Nov 18-Dec 16, 2024)

- Release 2.30 (Oct 21-Nov 18, 2024)

- Release 2.29 (Sep 30-Oct 22, 2024)

- Release 2.28 (Sep 02-30, 2024)

- Release 2.27 (Aug 05-Sep 02, 2024)

- Release 2.26 (Jul 08-Aug 05, 2024)

- Release 2.25 (Jun 10-Jul 08, 2024)

- Release 2.24 (May 06-Jun 10, 2024)

- Release 2.23 (Apr 08-May 06, 2024)

- Release 2.22 (Mar 11-Apr 08, 2024)

- Release 2.21 (Feb 12-Mar 11, 2024)

- Release 2.20 (Jan 15-Feb 12, 2024)

-

2023 Releases

- Release 2.19 (Dec 04, 2023-Jan 15, 2024)

- Release Version 2.18

- Release Version 2.17

- Release Version 2.16 (with breaking changes)

- Release Version 2.15 (with breaking changes)

- Release Version 2.14

- Release Version 2.13

- Release Version 2.12

- Release Version 2.11

- Release Version 2.10

- Release Version 2.09

- Release Version 2.08

- Release Version 2.07

- Release Version 2.06

-

2022 Releases

- Release Version 2.05

- Release Version 2.04

- Release Version 2.03

- Release Version 2.02

- Release Version 2.01

- Release Version 2.00

- Release Version 1.99

- Release Version 1.98

- Release Version 1.97

- Release Version 1.96

- Release Version 1.95

- Release Version 1.93 & 1.94

- Release Version 1.92

- Release Version 1.91

- Release Version 1.90

- Release Version 1.89

- Release Version 1.88

- Release Version 1.87

- Release Version 1.86

- Release Version 1.84 & 1.85

- Release Version 1.83

- Release Version 1.82

- Release Version 1.81

- Release Version 1.80 (Jan-24-2022)

- Release Version 1.79 (Jan-03-2022)

-

2021 Releases

- Release Version 1.78 (Dec-20-2021)

- Release Version 1.77 (Dec-06-2021)

- Release Version 1.76 (Nov-22-2021)

- Release Version 1.75 (Nov-09-2021)

- Release Version 1.74 (Oct-25-2021)

- Release Version 1.73 (Oct-04-2021)

- Release Version 1.72 (Sep-20-2021)

- Release Version 1.71 (Sep-09-2021)

- Release Version 1.70 (Aug-23-2021)

- Release Version 1.69 (Aug-09-2021)

- Release Version 1.68 (Jul-26-2021)

- Release Version 1.67 (Jul-12-2021)

- Release Version 1.66 (Jun-28-2021)

- Release Version 1.65 (Jun-14-2021)

- Release Version 1.64 (Jun-01-2021)

- Release Version 1.63 (May-19-2021)

- Release Version 1.62 (May-05-2021)

- Release Version 1.61 (Apr-20-2021)

- Release Version 1.60 (Apr-06-2021)

- Release Version 1.59 (Mar-23-2021)

- Release Version 1.58 (Mar-09-2021)

- Release Version 1.57 (Feb-22-2021)

- Release Version 1.56 (Feb-09-2021)

- Release Version 1.55 (Jan-25-2021)

- Release Version 1.54 (Jan-12-2021)

-

2020 Releases

- Release Version 1.53 (Dec-22-2020)

- Release Version 1.52 (Dec-03-2020)

- Release Version 1.51 (Nov-10-2020)

- Release Version 1.50 (Oct-19-2020)

- Release Version 1.49 (Sep-28-2020)

- Release Version 1.48 (Sep-01-2020)

- Release Version 1.47 (Aug-06-2020)

- Release Version 1.46 (Jul-21-2020)

- Release Version 1.45 (Jul-02-2020)

- Release Version 1.44 (Jun-11-2020)

- Release Version 1.43 (May-15-2020)

- Release Version 1.42 (Apr-30-2020)

- Release Version 1.41 (Apr-2020)

- Release Version 1.40 (Mar-2020)

- Release Version 1.39 (Feb-2020)

- Release Version 1.38 (Jan-2020)

- Early Access New

Amazon DynamoDB

Amazon DynamoDB is a fully managed, multi-master, a multi-region non-relational database that offers built-in in-memory caching to deliver reliable performance at any scale.

Hevo uses DynamoDB’s data streams to support change data capture (CDC). Data streams are time-ordered sequences of item-level changes in the DynamoDB tables. All data in DynamoDB streams are subject to a 24-hour lifetime and are automatically removed after this time. We suggest that you keep the ingestion frequency accordingly.

To facilitate incremental data loads to a Destination, Hevo needs to keep track of the data that has been read so far from the data stream. Hevo supports two ways of replicating data to manage the ingestion information:

Refer to the table below to know the differences between the two methods.

| Kinesis Data Streams | DynamoDB Streams |

|---|---|

| Recommended method. | Default method, if DynamoDB user does not have dynamodb:CreateTable permissions. |

User permissions needed on the DynamoDB Source: - Read-only - dynamodb:CreateTable

|

User permissions needed on the DynamoDB Source: - Read-only

|

| Uses the Kinesis Client Library (KCL) to ingest the changed data from the database. | Uses the DynamoDB library. |

| Guarantees real-time data ingestion | Data might be ingested with a delay |

The Kenesis driver maintains the context. KCL creates an additional table (with prefix hevo_kcl) per table in the Source system, to store the last processed state for a table. |

Hevo keeps the entire context of data replication as metadata, including positions to indicate the last record ingested. |

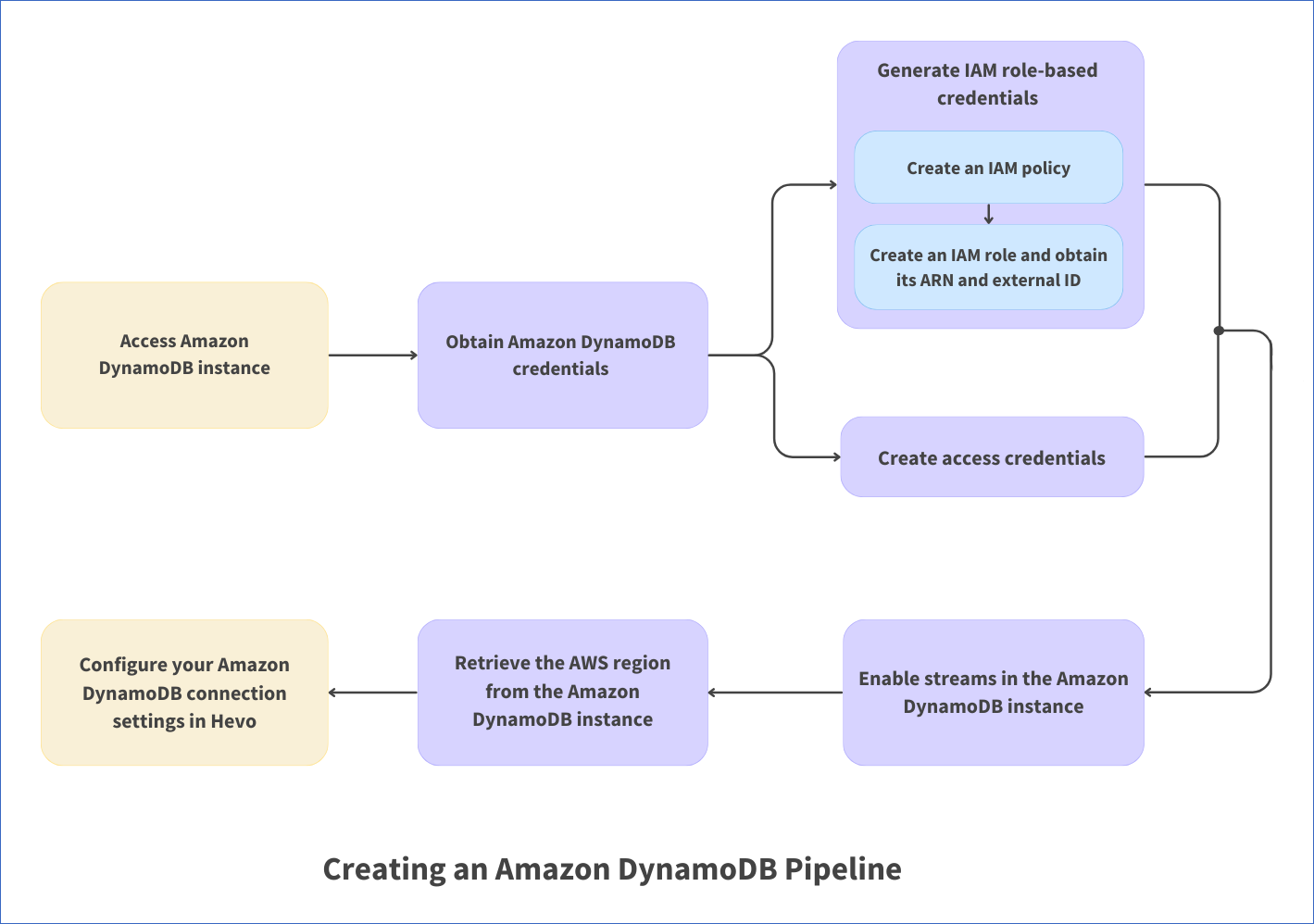

The following image illustrates the key steps that you need to complete to configure Amazon DynamoDB as a Source in Hevo:

Prerequisites

-

An active Amazon Web Services (AWS) account is available.

-

If you are logged in as an IAM user, you have permission to:

-

Create the access credentials or generate IAM role-based credentials.

-

Obtain or create the IAM policies

-

-

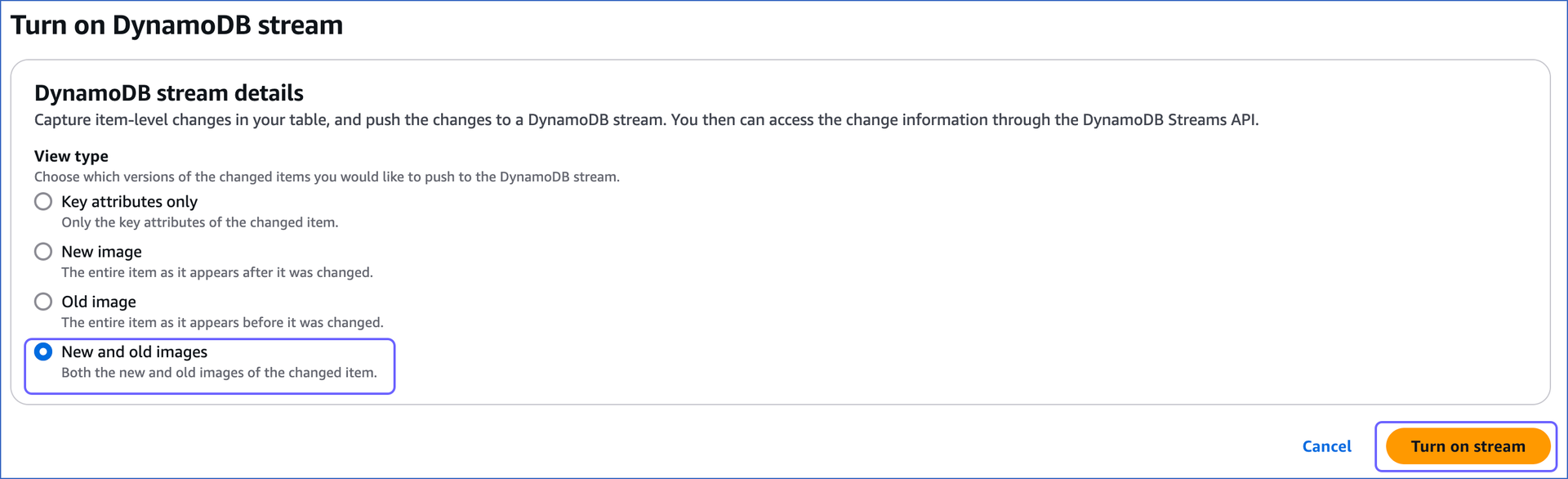

Streams are enabled on the DynamoDB tables to be replicated and the value of the StreamViewType parameter is set to New and old images (NEW_AND_OLD_IMAGES in the CLI). Without this configuration, while the historical data is successfully ingested, the incremental data ingestion fails.

Note: Hevo does not modify any data in the Source tables. The permissions are used solely to store the last processed state for a table by the Kinesis Client Library (KCL).

-

You are assigned the Team Administrator, Team Collaborator, or Pipeline Administrator role in Hevo, to create the Pipeline.

Obtain Amazon DynamoDB Credentials

You must either Create access credentials or Generate IAM role based credentials to allow Hevo to connect to your Amazon DynamoDB account and ingest data from it.

Create access credentials

You need the access key and secret access key from your Amazon DynamoDB account to allow Hevo to access the data from it.

-

Log in to the AWS IAM Console.

-

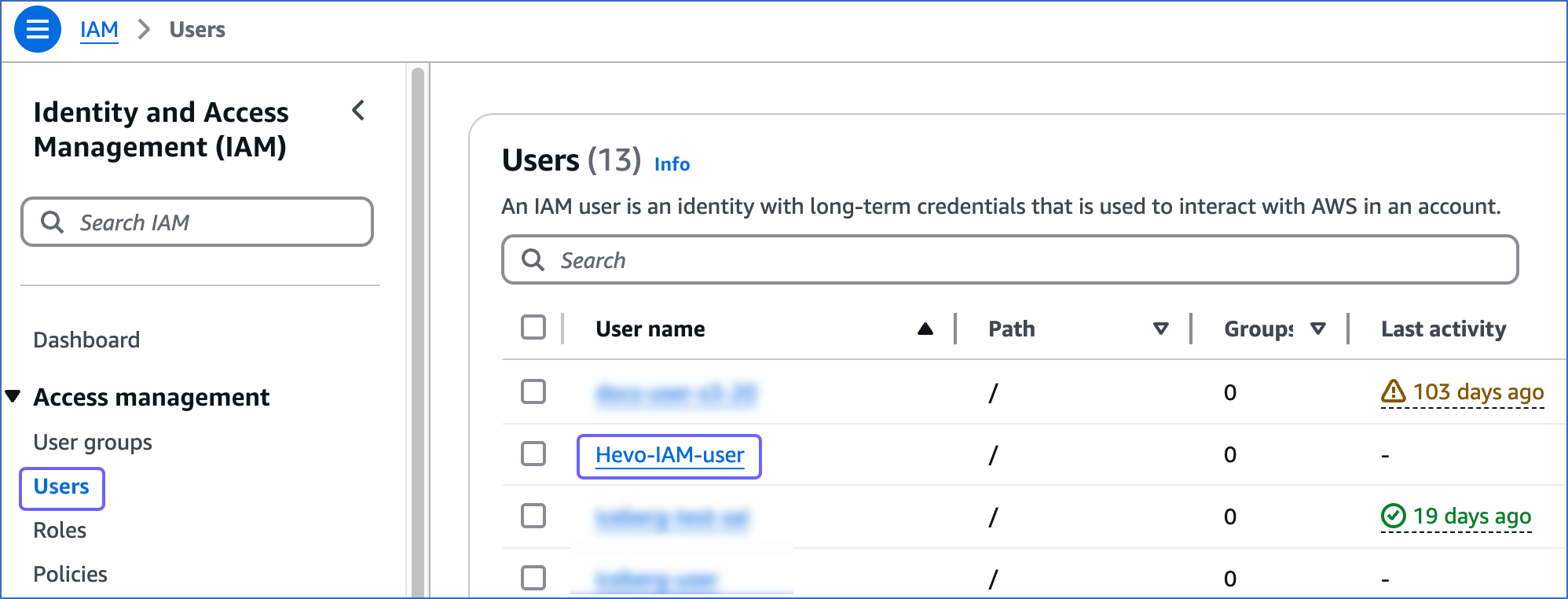

In the left navigation bar, under Access management, click Users. Then, in the right pane, click the User name for which you want to create an access key.

-

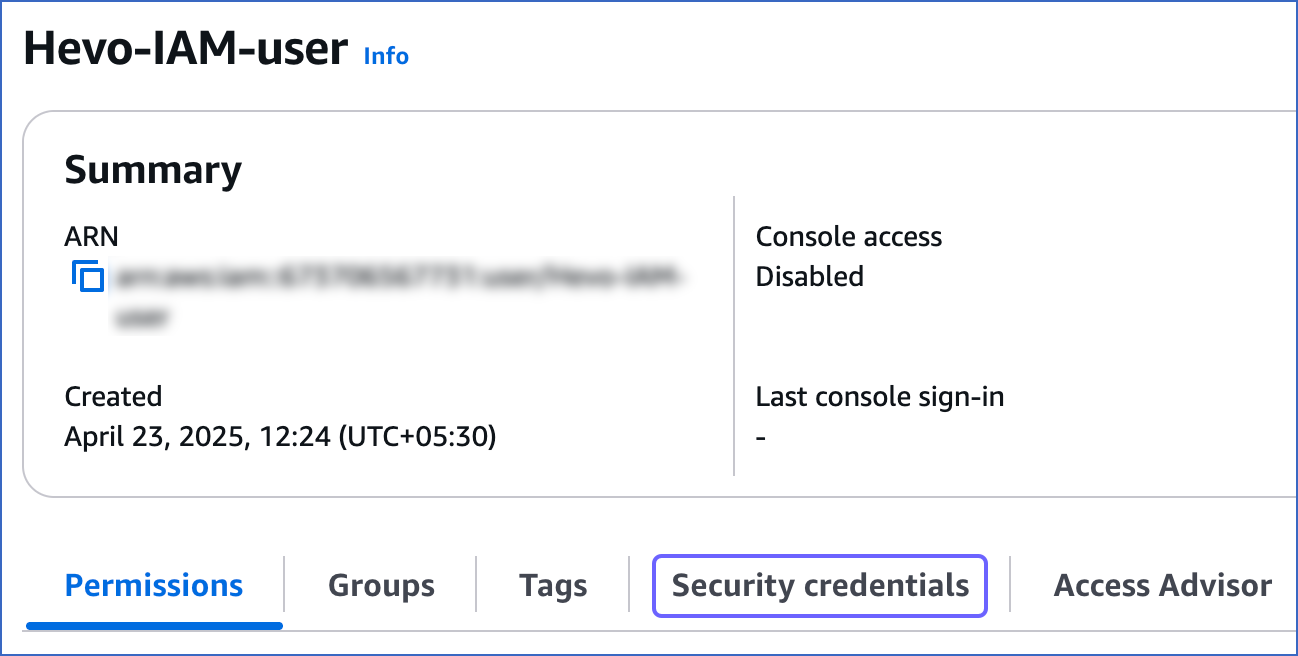

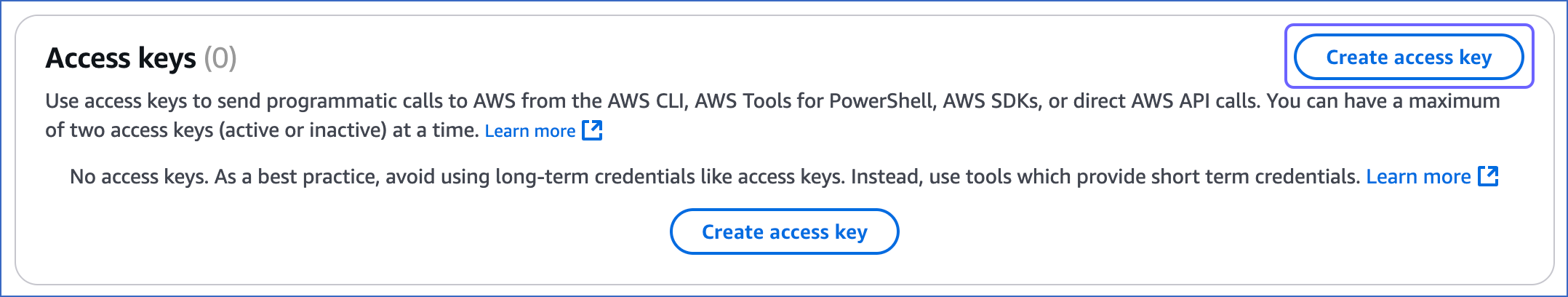

On the <User name> page, click the Security credentials tab and scroll down to the Access keys section.

-

Click Create access key.

-

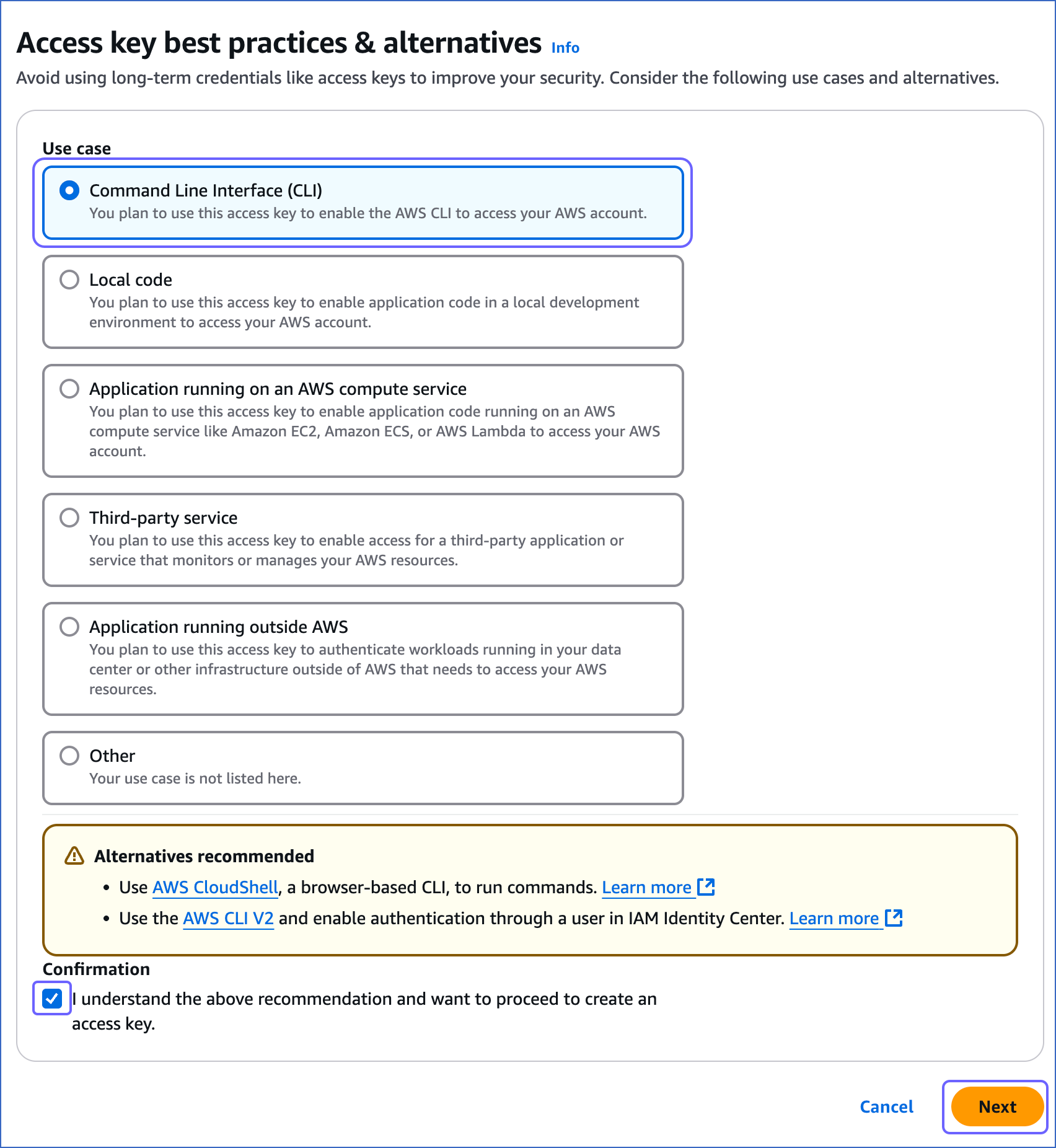

On the Access key best practices & alternatives page, do the following:

-

Select one of the following recommended methods. For example, in the image above, Command Line Interface (CLI) is selected.

-

Select the I understand the above recommendation… check box, to proceed with creating the access key.

-

Click Next.

-

-

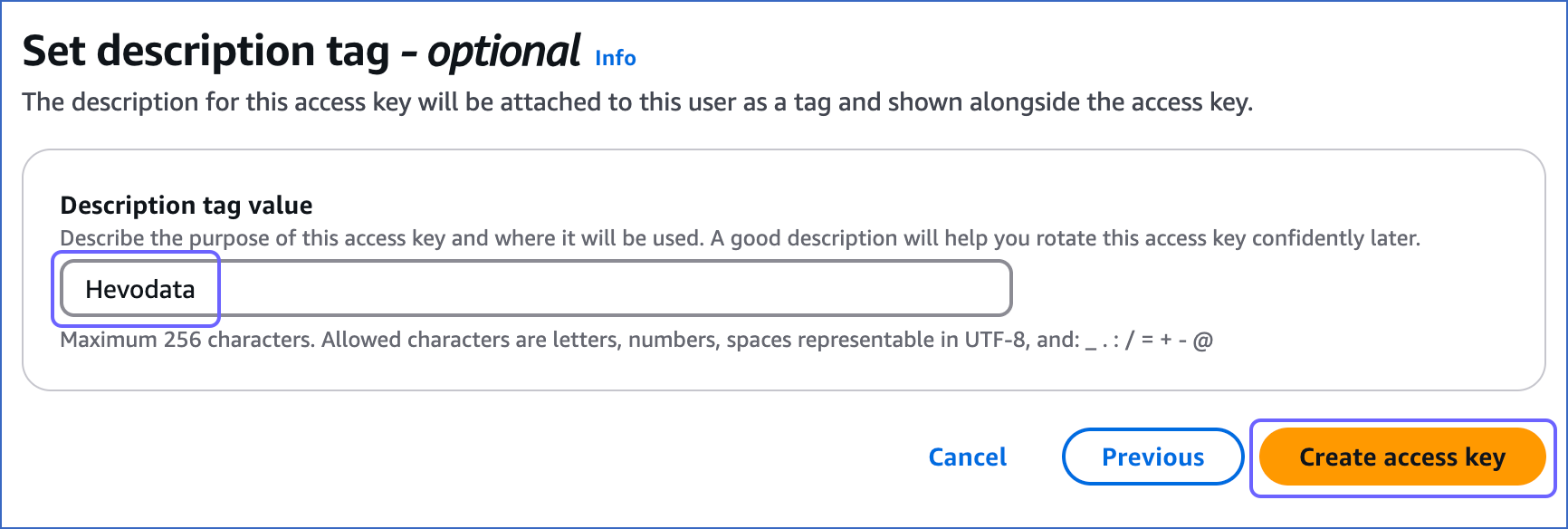

On the Set description tag - optional page, do the following:

-

(Optional) Specify a description for the access key in the Description tag value field.

-

Click Create access key.

-

-

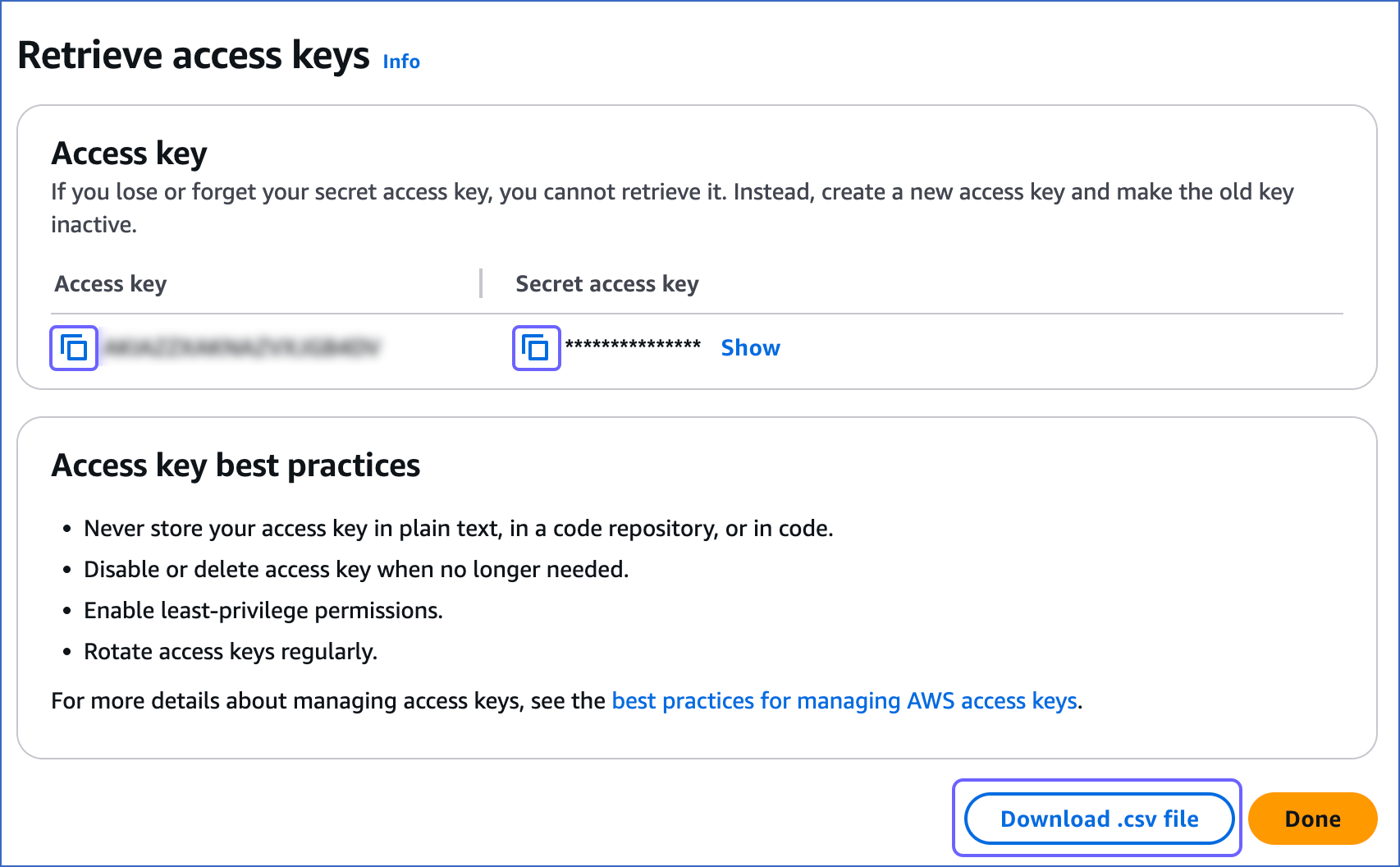

On the Retrieve access keys page, do the following:

-

Click the copy icon in the Access key field and save the key securely like any other password.

-

Click the copy icon in the Secret access key field and save the key securely like any other password.

Note: Once you exit this page, you cannot view these keys again.

-

(Optional) Click Download .csv file to save the access key and the secret access key on your local machine.

-

Generate IAM role-based credentials

To generate your IAM role-based credentials, you need to:

-

Create an IAM policy with the permission to allow Hevo to access data from your DynamoDB database.

-

Create an IAM role for Hevo, as the Amazon Resource Name (ARN) and the external ID from this role are required to configure Amazon DynamoDB as a Source in Hevo.

These steps are explained in details below:

1. Create an IAM policy

-

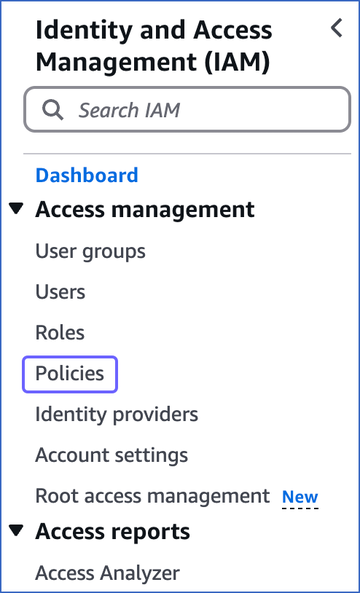

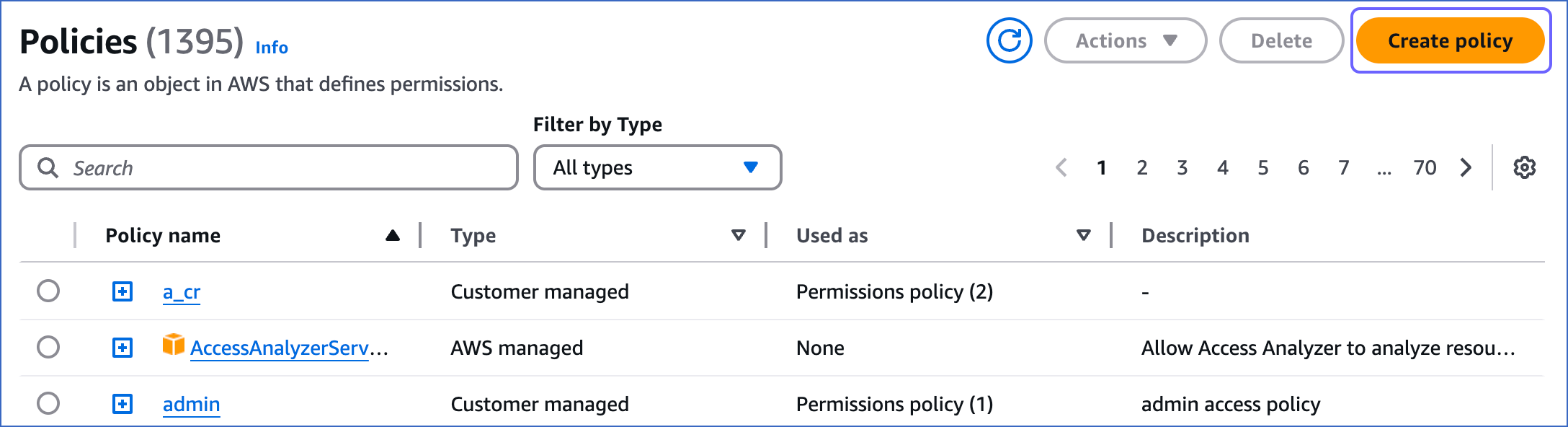

Log in to the AWS IAM Console.

-

In the left navigation bar, under Access management, click Policies.

-

On the Policies page, click Create policy.

-

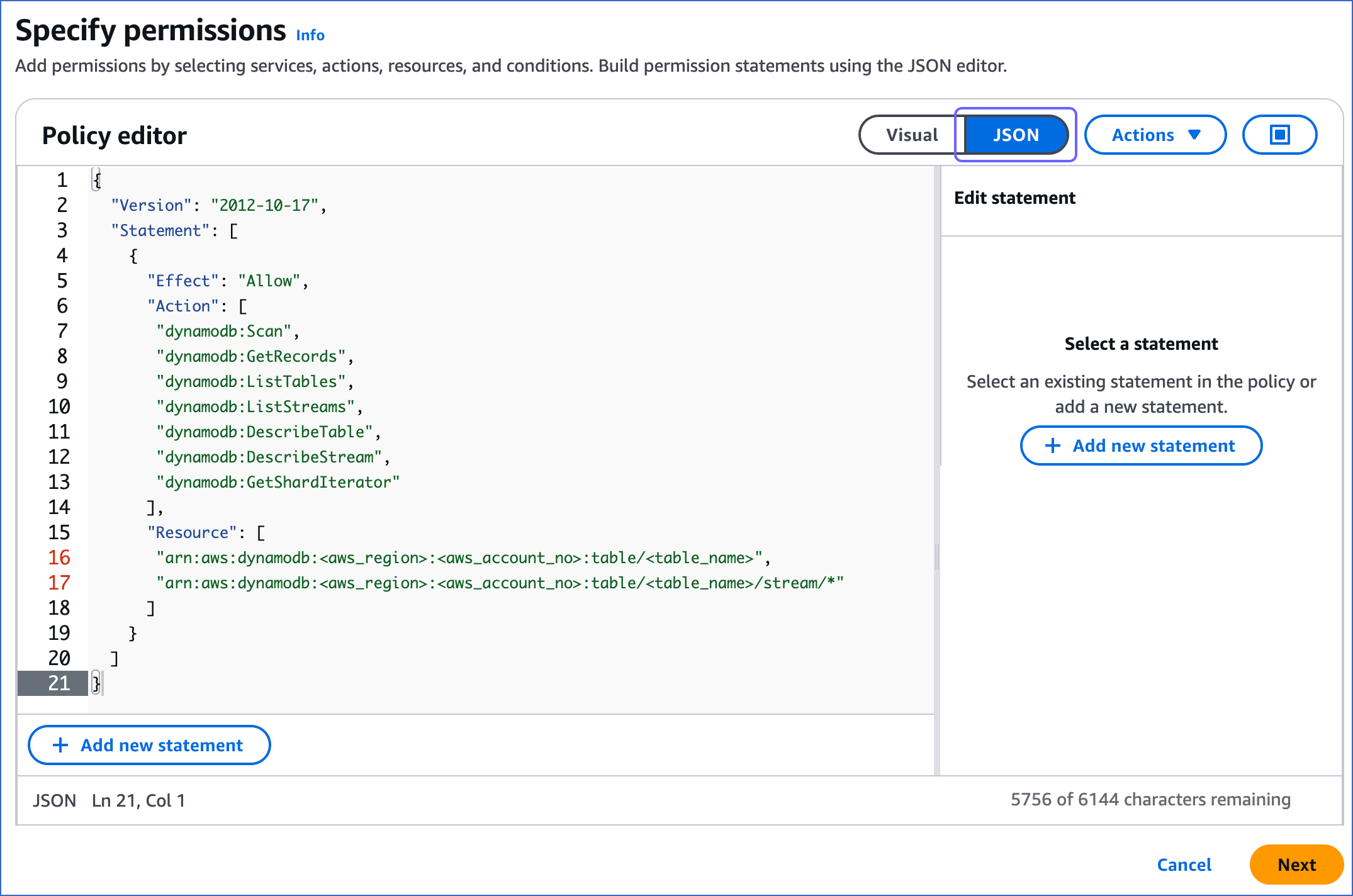

On the Specify permissions page, click the JSON tab and paste the following policy in the editor. The JSON statements list the permissions the policy would assign to Hevo.

Note:

-

Replace the placeholder values in the commands below with your own. For example, <aws_region> with us-east-1.

-

You can replace the <table_name> in the command below with

*to ingest data from all the tables in your database.

{ "Version": "2012-10-17", "Statement": [ { "Effect": "Allow", "Action": [ "dynamodb:Scan", "dynamodb:GetRecords", "dynamodb:ListTables", "dynamodb:ListStreams", "dynamodb:DescribeTable", "dynamodb:DescribeStream", "dynamodb:GetShardIterator" ], "Resource": [ "arn:aws:dynamodb:<aws_region>:<aws_account_no>:table/<table_name>", "arn:aws:dynamodb:<aws_region>:<aws_account_no>:table/<table_name>/stream/*" ] } ] }Note: Hevo does not modify any data in the Source tables. The permissions are used solely to store the last processed state for a table by the KCL.

-

-

Click Next.

-

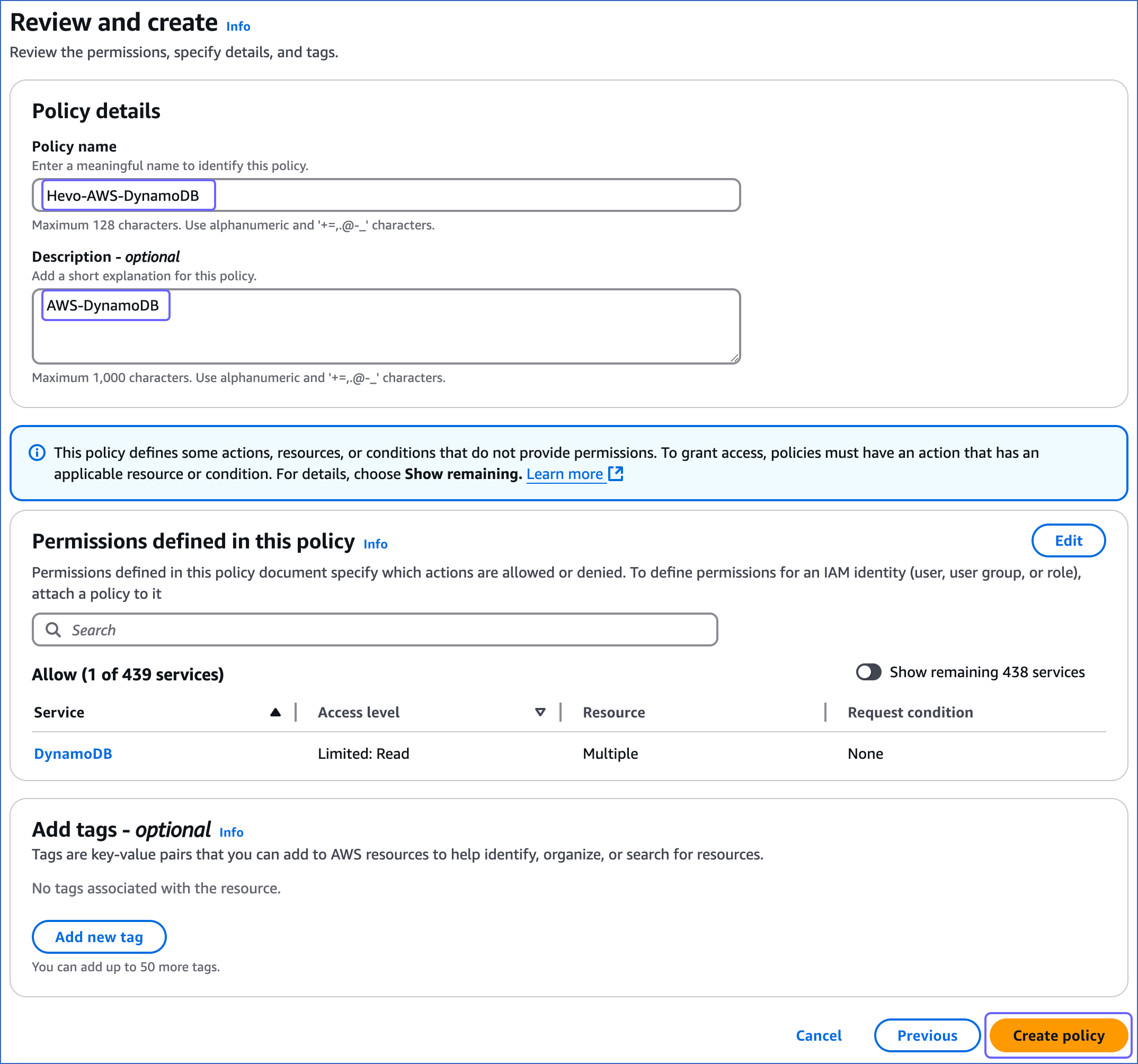

On the Review and create page, specify a Policy name and Description for your policy and click Create policy.

You are redirected to the Policies page, where you can see the policy that you created.

2. Create an IAM role and obtain its ARN and External ID

Perform the following steps to create an IAM role:

-

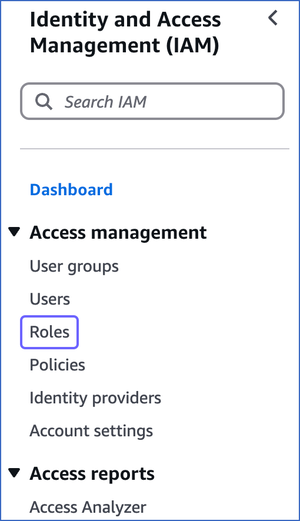

Log in to the AWS IAM Console.

-

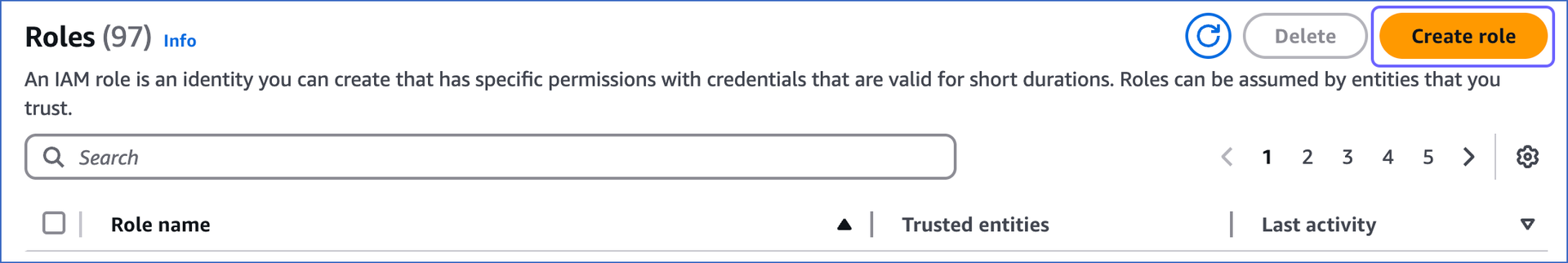

In the left navigation bar, under Access management, click Roles.

-

On the Roles page, click Create role.

-

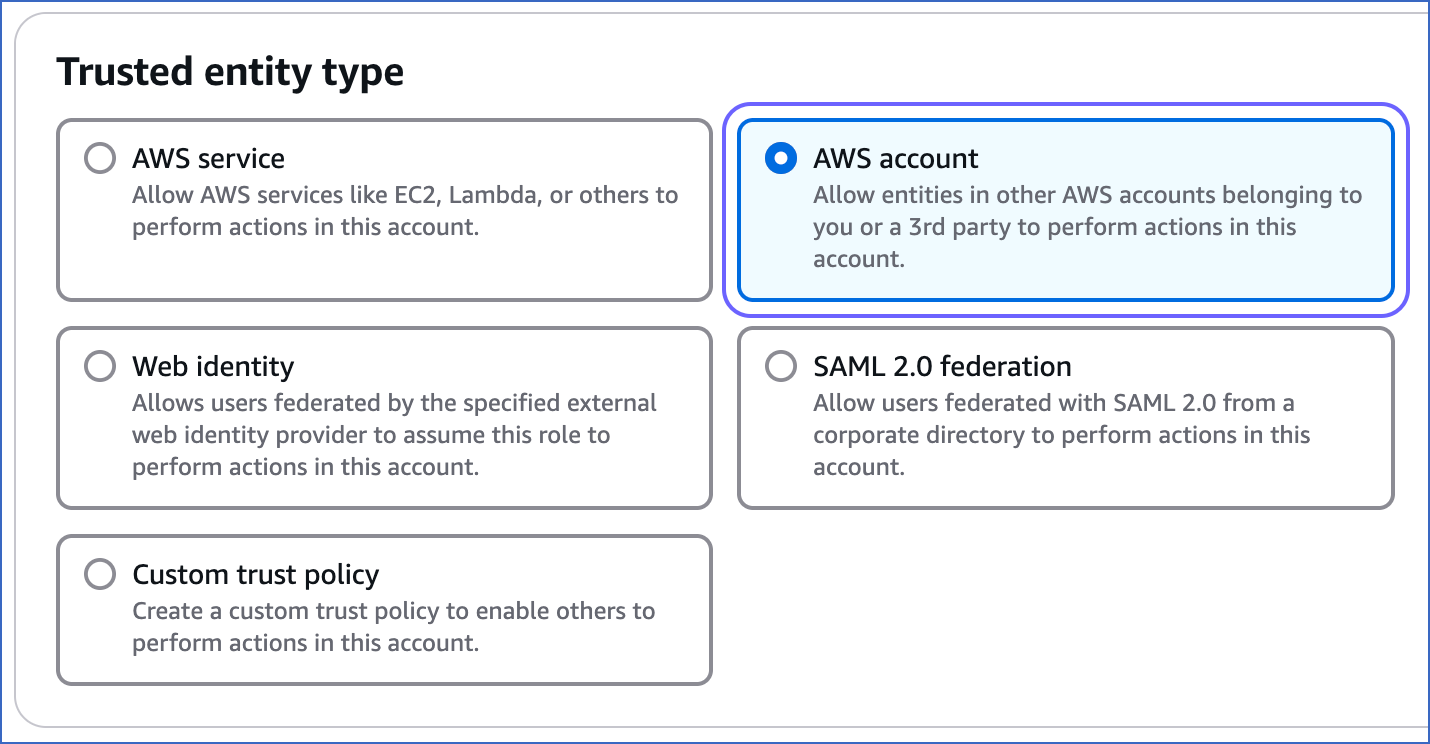

On the Select trusted entity page:

-

In the Trusted entity type section, select AWS account.

-

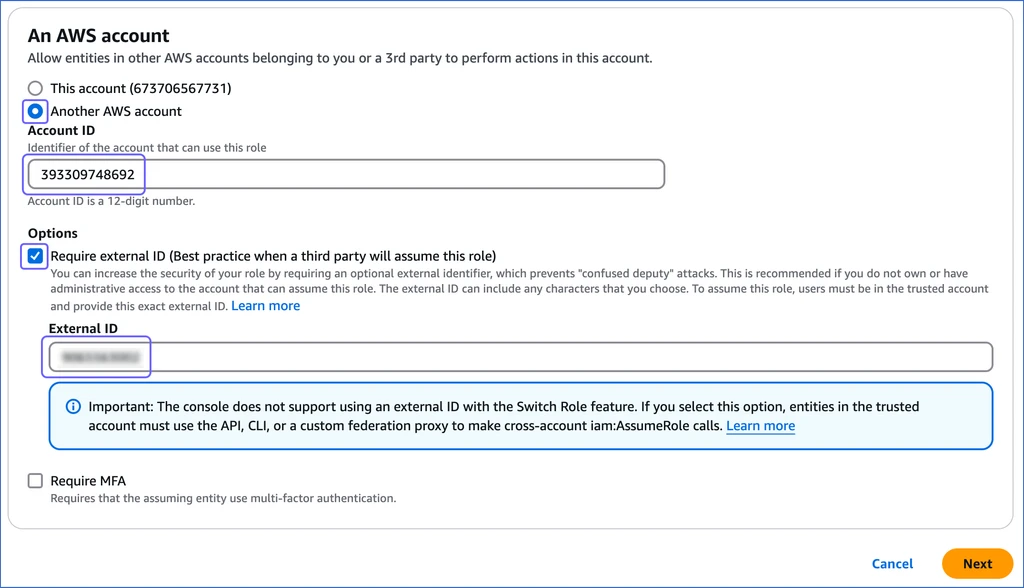

In the An AWS account section, do the following:

-

Select the Another AWS account option, and specify Hevo’s Account ID, 393309748692.

-

In the Options section, select the Require external ID… check box, and specify an External ID of your choice.

Note: You must save this external ID in a secure location like any other password. This is required while setting up a Pipeline in Hevo.

-

-

-

Click Next.

-

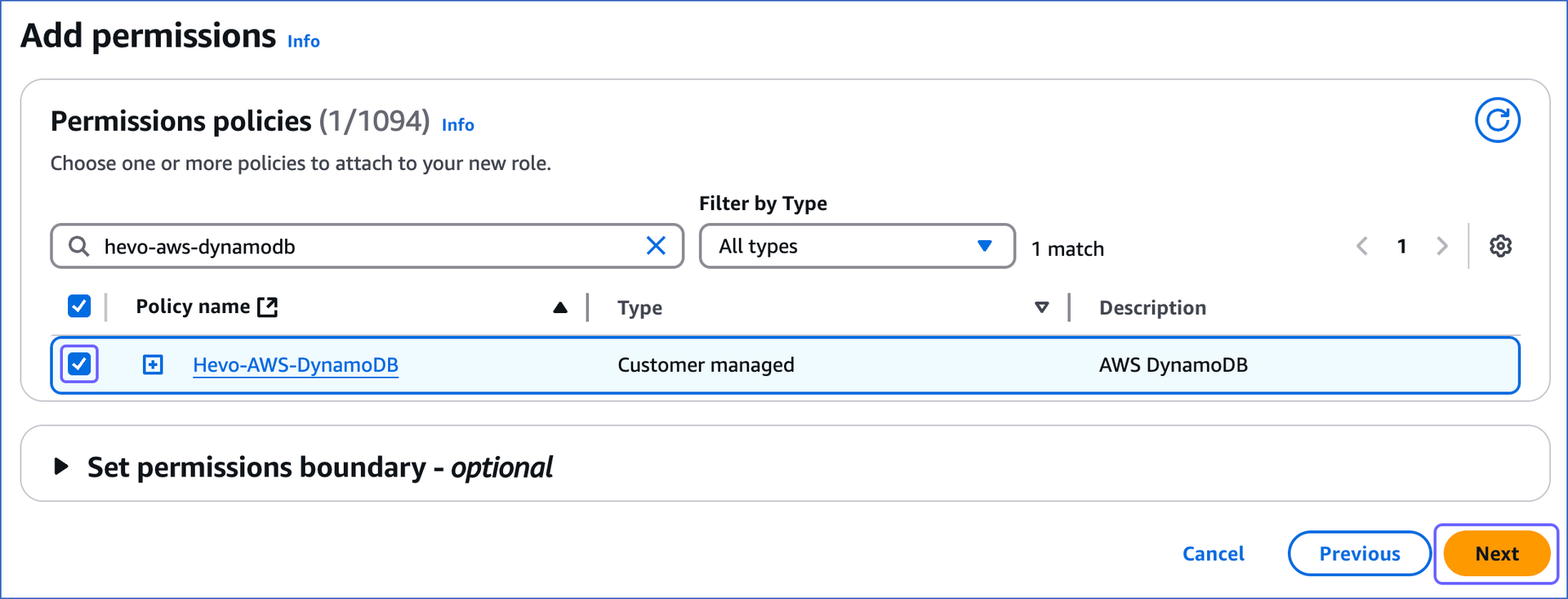

In the Permissions policies section, search and select the check box corresponding to the policy that you created in the create an IAM policy section above, and then click Next at the bottom of the page.

-

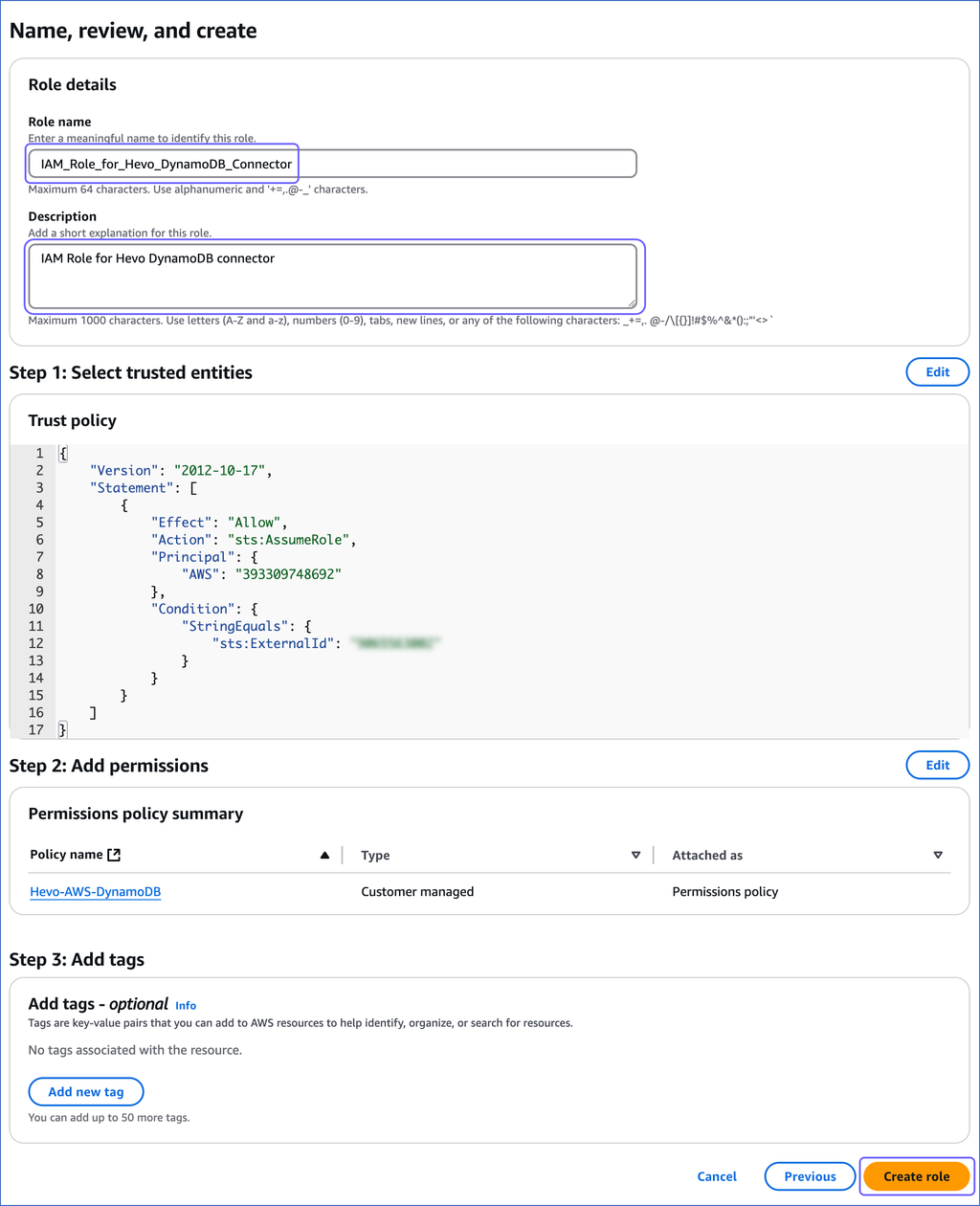

On the Name, review, and create page, specify the Role name and Description, and then click Create role.

-

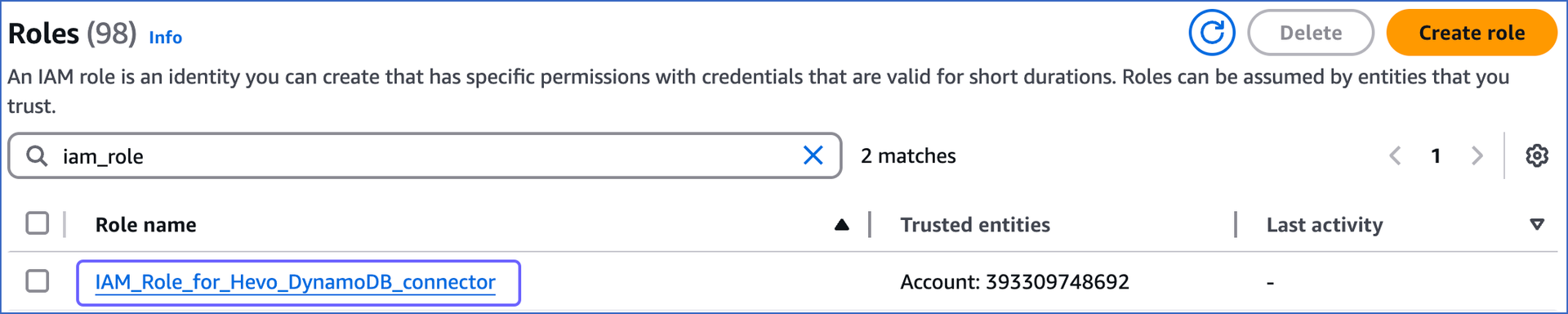

On the Roles page, search and click the role that you created above.

-

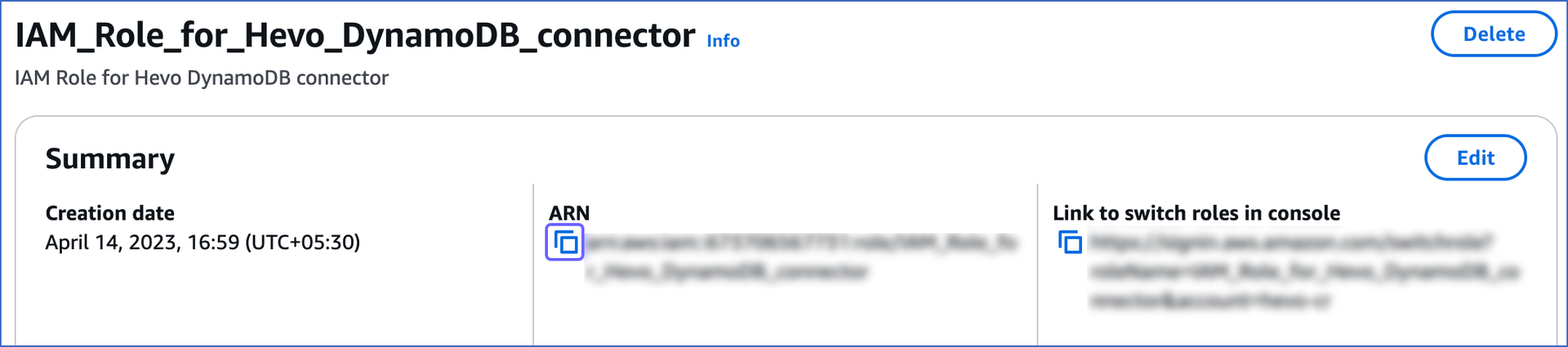

On the <Role name> page, in the Summary section, click the copy icon to copy the ARN and save it securely like any other password. Use this ARN while configuring Amazon DynamoDB as a Source in Hevo.

Enable Streams

You need to enable streams for all DynamoDB tables you want to sync through Hevo. To do this:

-

Sign in to the AWS DynamoDB Console.

-

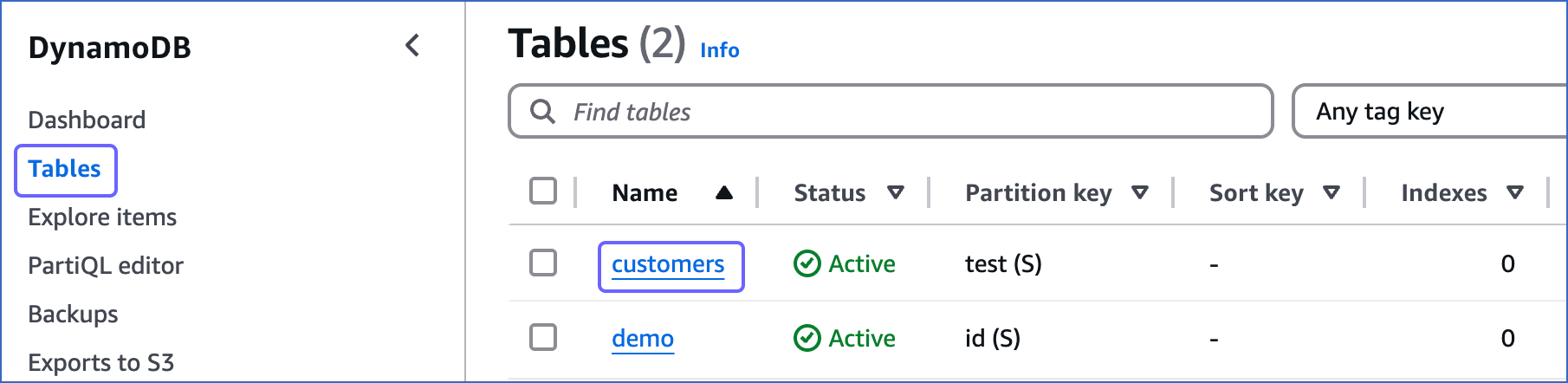

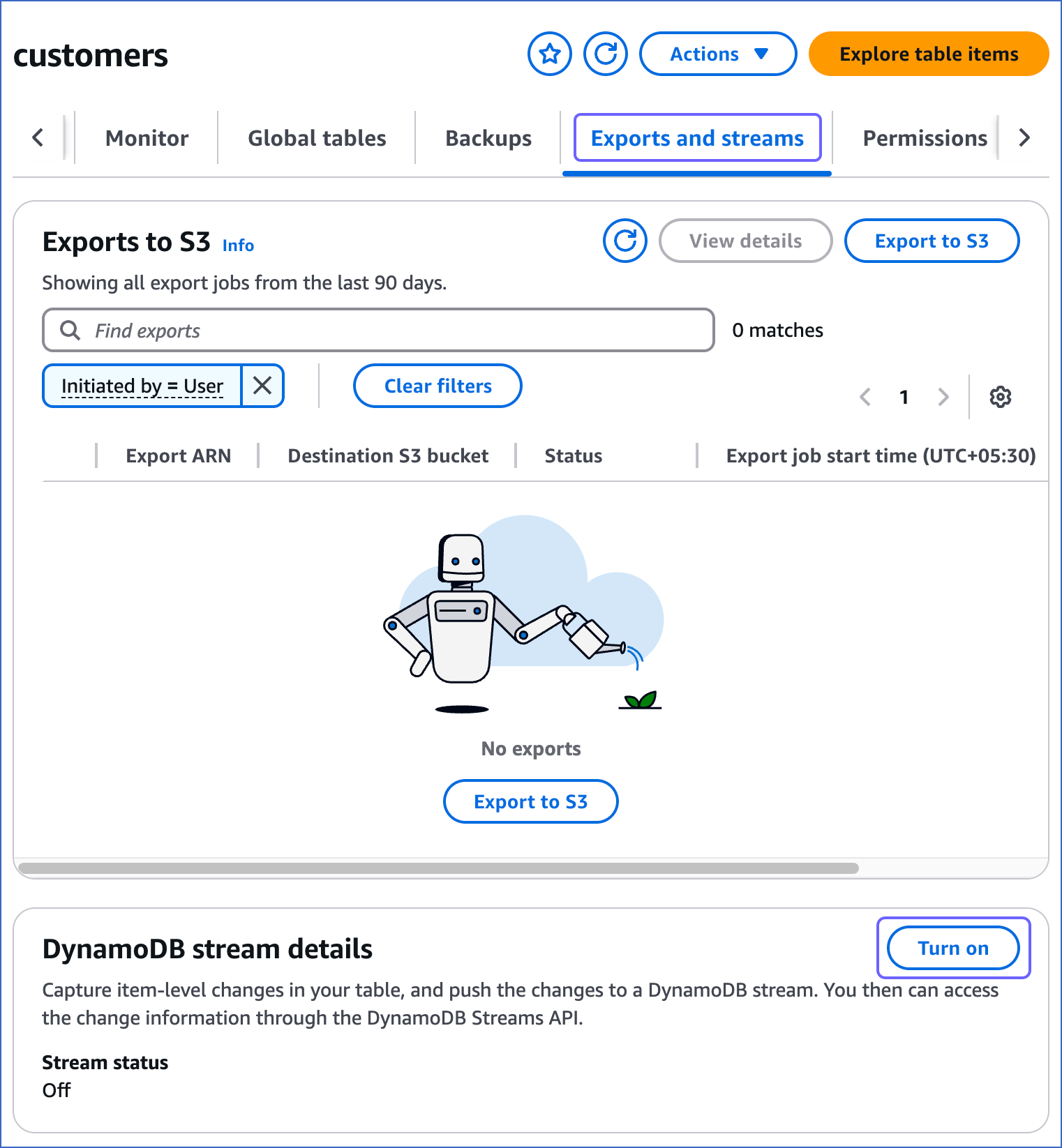

In the left navigation bar, click Tables, and then click the table for which you want to enable streams. For example, customers in the image below.

-

On the <Table name> page, click the Exports and streams tab, scroll down to the DynamoDB stream details section and click Turn on.

-

On the Turn on DynamoDB stream page, select New and old images and click Turn on stream.

-

Repeat steps 2-4 for all the tables you want to synchronize.

Note: Tables without DynamoDB streams enabled cannot be ingested. Hevo displays a warning for such tables on the Select Objects page during Pipeline creation, indicating that streams are not enabled.

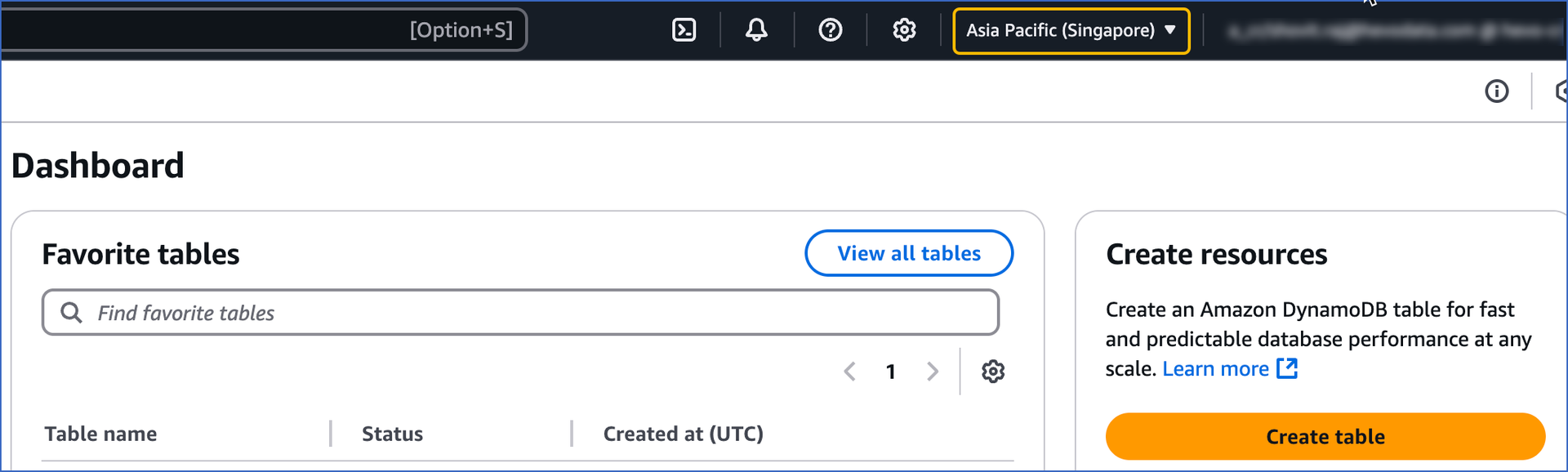

Retrieve the AWS Region

To configure Amazon DynamoDB as a Source in Hevo you need to provide the AWS region where your DynamoDB instance is running.

To retrieve your AWS region:

-

Log in to your Amazon DynamoDB Console, and in the top-right corner of the page, locate the AWS region.

Note: Your Amazon DynamoDB instance must be created in one of the AWS regions supported by Hevo.

Configure Amazon DynamoDB Connection Settings

Perform the following steps to configure DynamoDB as a Source in Hevo:

-

Click PIPELINES in the Navigation Bar.

-

Click + Create Pipeline in the Pipelines List View.

-

On the Select Source Type page, select DynamoDB.

-

On the Select Destination Type page, select the type of Destination you want to use.

-

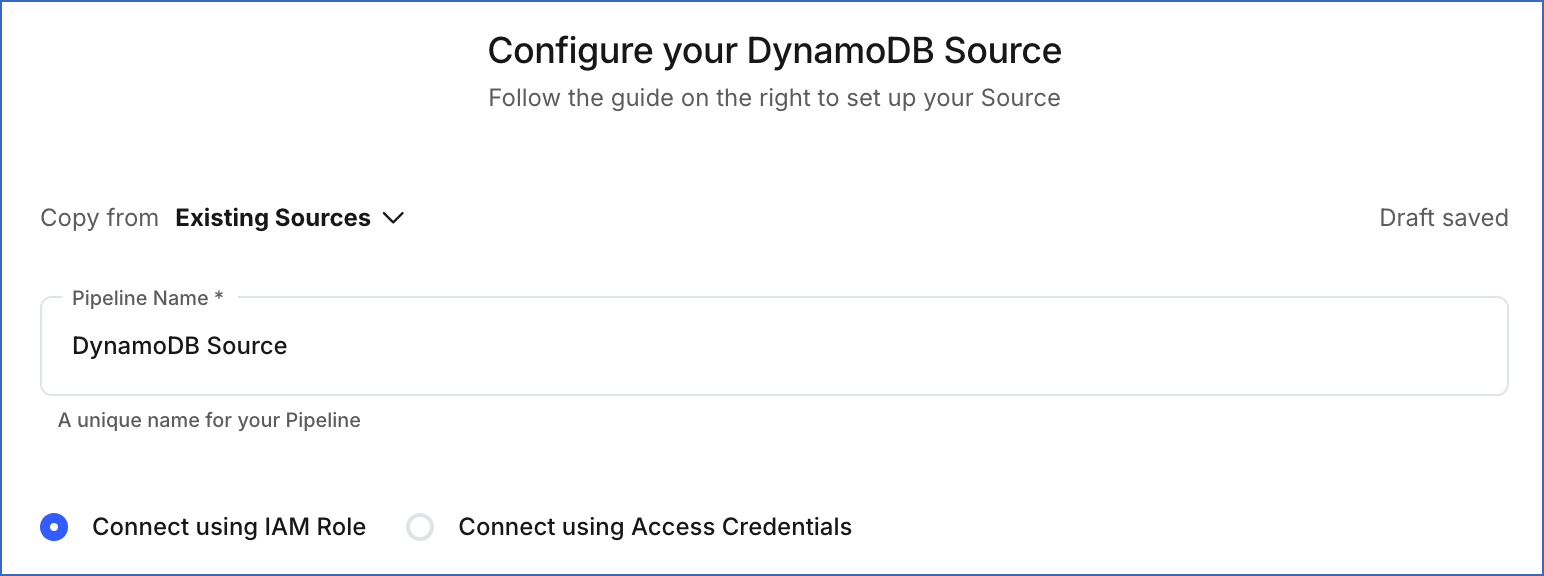

On the Configure your DynamoDB Source page, specify the following:

-

Pipeline Name: A unique name for the Pipeline, not exceeding 255 characters.

-

Select one of the methods to connect to your Amazon DynamoDB database:

-

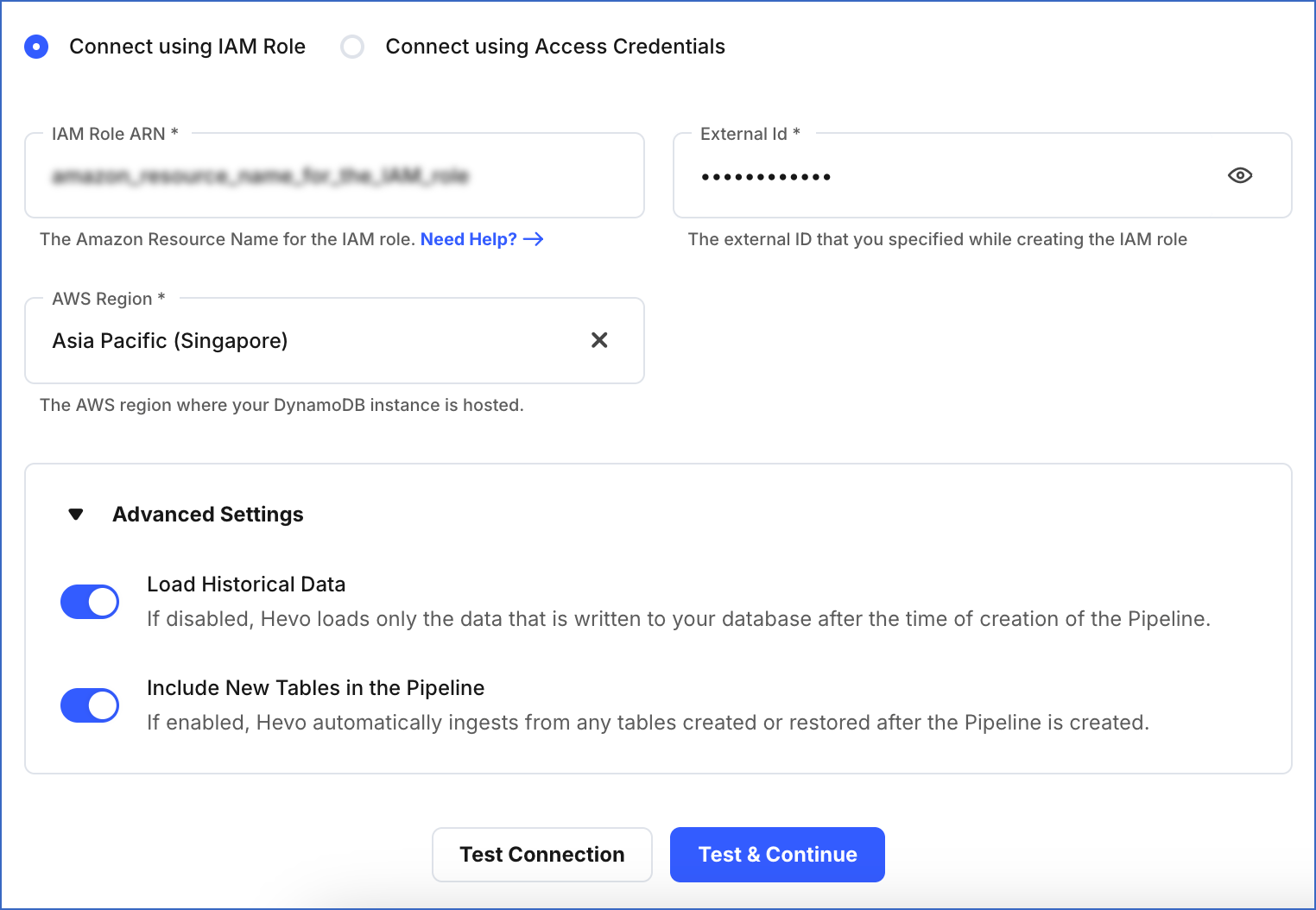

Connect using IAM Role:

-

IAM Role ARN: The Amazon Resource Name (ARN) of the IAM role that you retrieved in the section above.

-

External Id: The external ID that you specified above.

-

AWS Region: The AWS region where your DynamoDB instance is running.

-

-

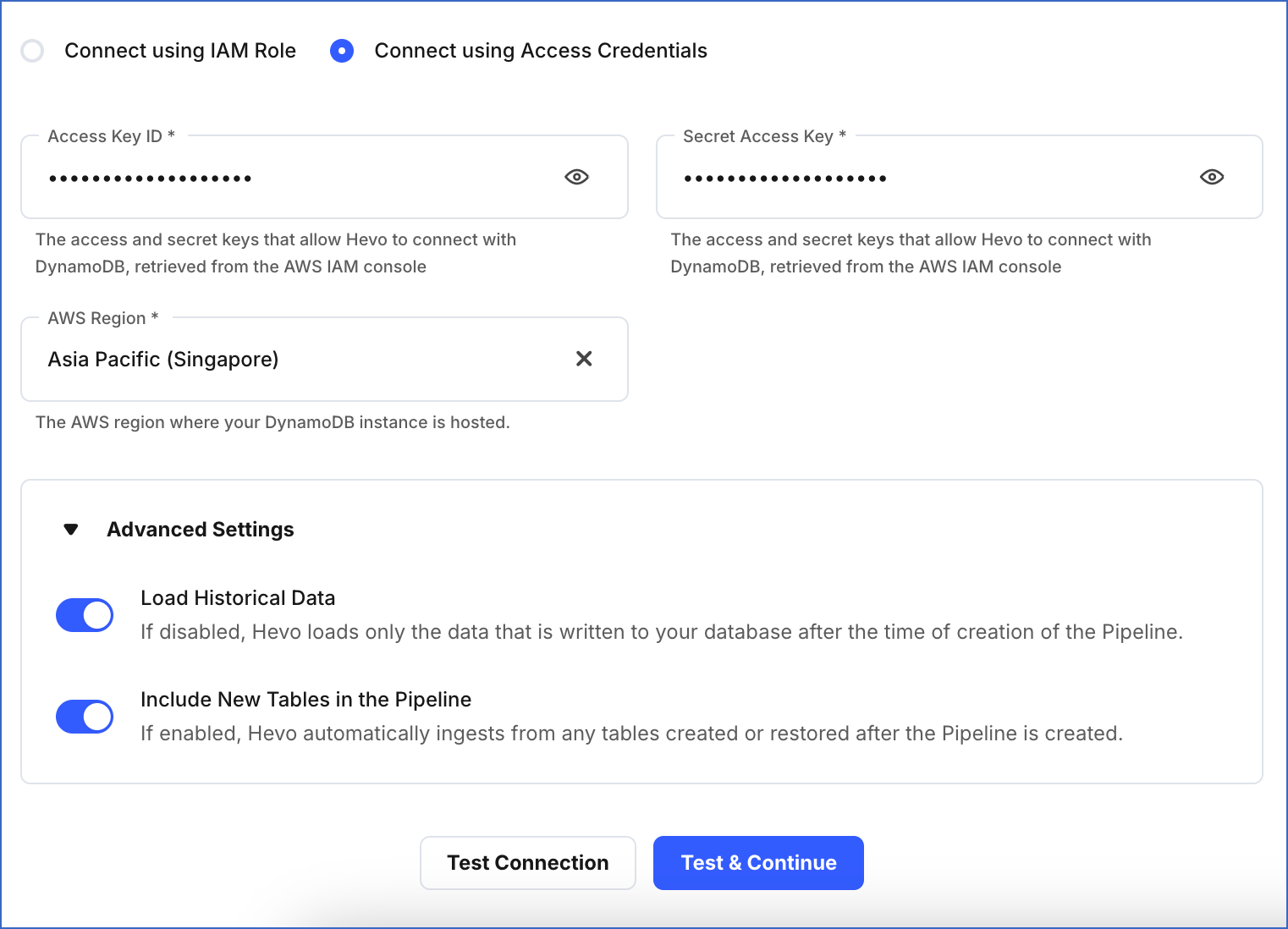

Connect using Access Credentials:

-

Access Key ID: The AWS access key that you retrieved in the Create access credentials section above.

-

Secret Access Key: The AWS secret access key for the access key ID that you retrieved in the Create access credentials section above.

-

AWS Region: The AWS region where your DynamoDB instance is running.

-

-

-

Advanced Settings:

-

Load Historical Data: If this option is enabled, the entire table data is fetched during the first run of the Pipeline. If disabled, Hevo loads only the data that was written in your database after the time of creation of the Pipeline.

-

Include New Tables in the Pipeline: Applicable for all ingestion modes except Custom SQL.

If enabled, Hevo automatically ingests data from tables created in the Source after the Pipeline has been built. These may include completely new tables or previously deleted tables that have been re-created in the Source. All data for these tables is ingested using database logs, making it incremental and therefore billable.

You can change this setting later.

-

-

-

Click Test Connection to check connectivity with the Amazon DynamoDB database.

-

Click Test & Continue.

-

Proceed to configuring the data ingestion and setting up the Destination.

Hevo defers the data ingestion for a pre-determined time in the following scenarios:

- If your DynamoDB Source does not contain any new Events to be ingested.

- If you are using DynamoDB Streams and the required permissions are not granted to Hevo.

Hevo re-attempts to fetch the data only after the deferment period elapses.

Data Replication

| For Teams Created | Default Ingestion Frequency | Minimum Ingestion Frequency | Maximum Ingestion Frequency | Custom Frequency Range (in Hrs) |

|---|---|---|---|---|

| Before Release 2.21 | 5 Mins | 5 Mins | 1 Hr | NA |

| After Release 2.21 | 6 Hrs | 30 Mins | 24 Hrs | 1-24 |

Note: The custom frequency must be set in hours as an integer value. For example, 1, 2, or 3, but not 1.5 or 1.75.

-

Historical Data: In the first run of the Pipeline, Hevo ingests all the data available in your Amazon DynamoDB database.

-

Incremental Data: Once the historical load is complete, all new and updated data is synchronized with your Destination as per the ingestion frequency.

Additional Information

Read the detailed Hevo documentation for the following related topics:

Schema and Type Mapping

Hevo replicates the schema of the tables from the Source DynamoDB as-is to your Destination database or data warehouse. In rare cases, we skip some columns with an unsupported Source data type while transforming and mapping.

The following table shows how your DynamoDB data types get transformed to a warehouse type.

| DynamoDB Data Type | Warehouse Data Type |

|---|---|

| String | VARCHAR |

| Binary | Bytes |

| Number | Decimal/Long |

| STRINGSET | JSON |

| NUMBERSET | JSON |

| BINARYSET | JSON |

| Map | JSON |

| List | JSON |

| Boolean | Boolean |

| NULL | - |

Limitations

-

On every Pipeline run, Hevo ingests the entire data present in the Dynamo DB data streams that you are using for ingestion. Data streams can store data for a maximum of 24 hrs. So, depending on your Pipeline frequency, some events might get re-ingested in the Pipeline and consume your Events quota. Read Pipeline Frequency to know how it affects your Events quota consumption.

-

Hevo does not load data from a column into the Destination table if its size exceeds 16 MB, and skips the Event if it exceeds 40 MB. If the Event contains a column larger than 16 MB, Hevo attempts to load the Event after dropping that column’s data. However, if the Event size still exceeds 40 MB, then the Event is also dropped. As a result, you may see discrepancies between your Source and Destination data. To avoid such a scenario, ensure that each Event contains less than 40 MB of data.

See Also

Revision History

Refer to the following table for the list of key updates made to this page:

| Date | Release | Description of Change |

|---|---|---|

| Feb-09-2026 | 2.45 | Updated section, Enable Streams to add a note about tables without DynamoDB streams enabled. |

| Nov-06-2025 | NA | Updated the document as per the latest Hevo UI. |

| Sep-18-2025 | NA | Updated section, Configure Amazon DynamoDB Connection Settings as per the latest UI. |

| Aug-1-2025 | NA | Added clarification that data ingested from new and re-created tables is billable. |

| Jul-07-2025 | NA | Updated the Limitations section to inform about the max record and column size in an Event. |

| Apr-28-2025 | NA | Updated sections, Obtain Amazon DynamoDB Credentials, Enable Streams, and Retrieve the AWS Region as per the latest Amazon DynamoDB UI. |

| Apr-21-2025 | NA | Updated the Create an IAM policy sub-section to revise the IAM policy JSON and reflect the latest Amazon DynamoDB UI. |

| Jan-07-2025 | NA | Updated the Limitations section to add information on Event size. |

| Oct-29-2024 | NA | Updated sections, Enable Streams and Retrieve the AWS Region as per the latest Amazon DynamoDB UI. |

| May-27-2024 | 2.23.4 | Added the Data Replication section. |

| Apr-25-2023 | 2.12 | - Added section, Obtain Amazon DynamoDB Credentials. - Renamed and updated section, (Optional) Obtain your Access Key ID and Secret Access Key to Create access credentials. - Updated section, Configure Amazon DynamoDB Connection Settings to add information about connecting to Amazon DynamoDB database using IAM role. |

| Nov-08-2022 | NA | Added section, Limitations. |

| Oct-17-2022 | 1.99 | Updated section, Configure Amazon DynamoDB Connection Settings to add information about deferment of data ingestion if required permissions are not granted. |

| Oct-04-2021 | 1.73 | - Updated the section, Prerequisites to inform users about setting the value of the StreamViewType parameter to NEW_AND_OLD_IMAGES. - Updated the section, Enable Streams to reflect the latest changes in the DynamoDB console. |

| Aug-8-2021 | NA | Added a note in the Source Considerations section about Hevo deferring data ingestion in Pipelines created with this Source. |

| Jul-12-2021 | 1.67 | Added the field Include New Tables in the Pipeline under Source configuration settings. |

| Feb-22-2021 | 1.57 | Added sections: - Create the AWS Access Key and the AWS Secret Key - Retrieve the AWS Region |