Snowflake

On This Page

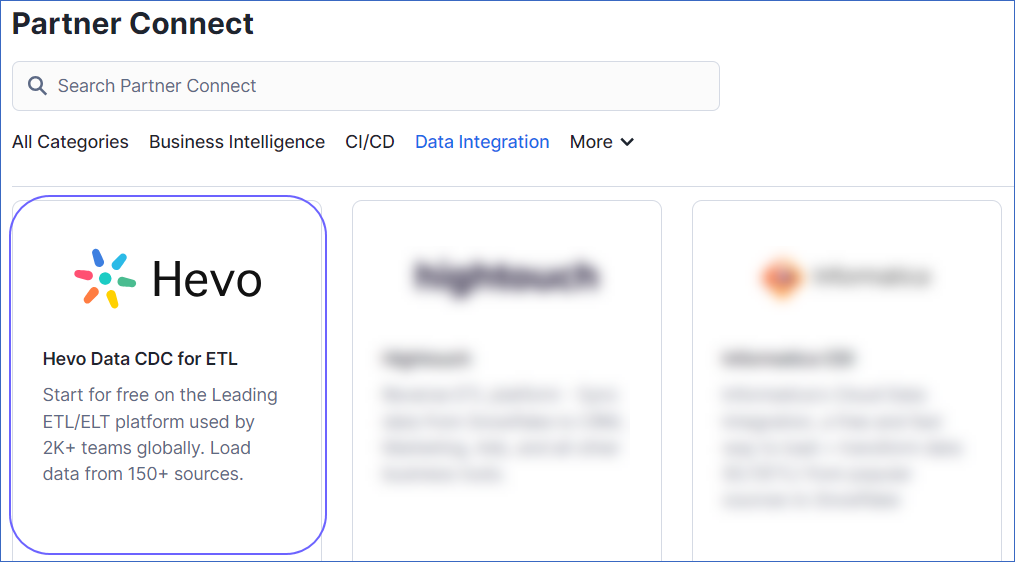

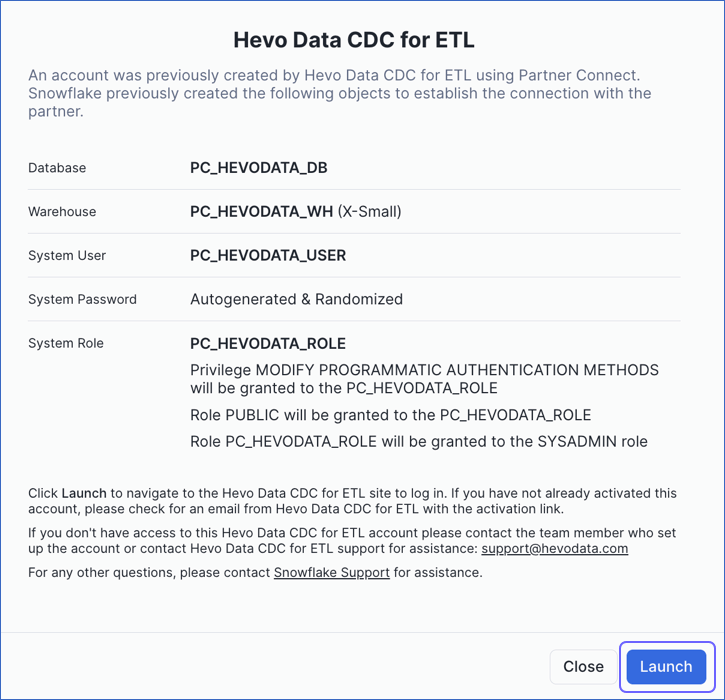

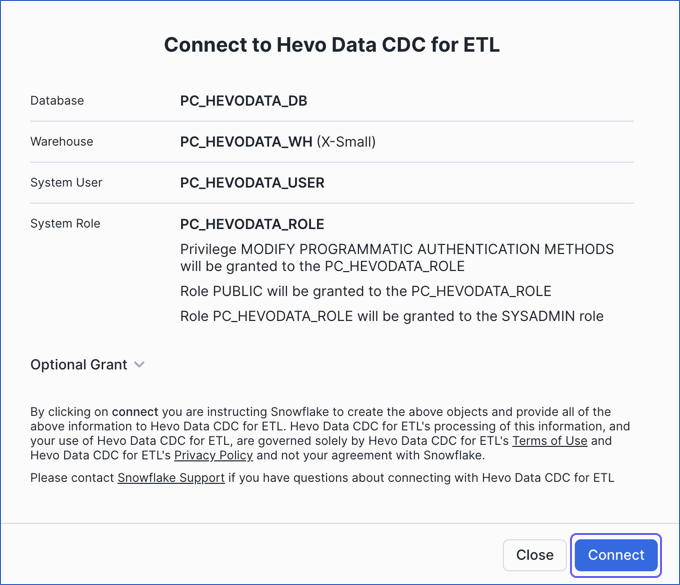

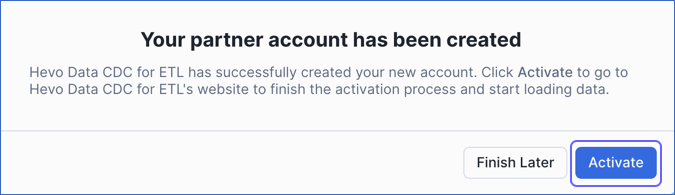

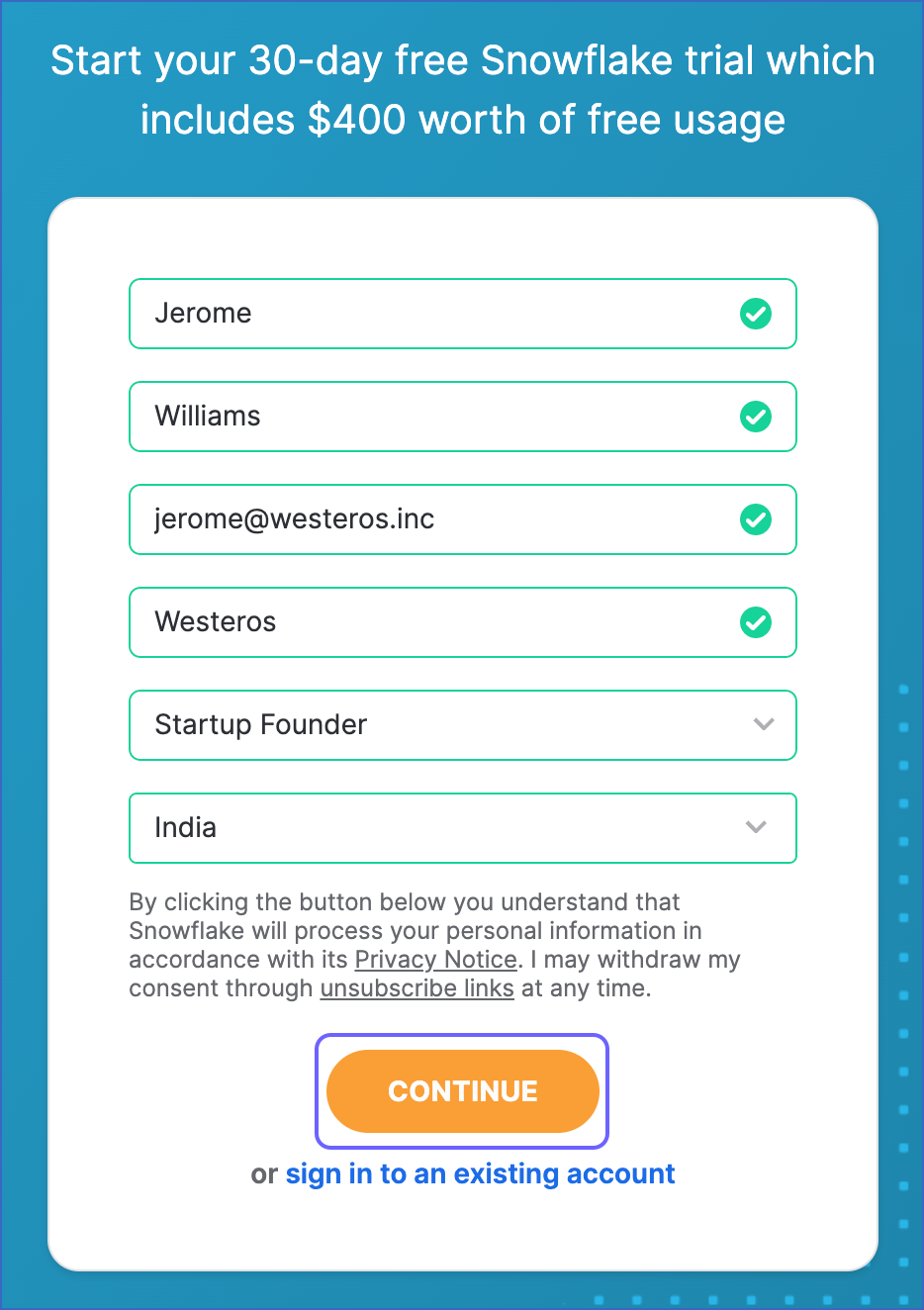

Collaborated with Snowflake to provide support for configuring Snowflake in Hevo using the Snowflake Partner Connect feature. Hevo can now be accessed from within the Snowflake platform, through which you can seamlessly set up an account and create data Pipelines with Snowflake as your Destination.

Snowflake offers a cloud-based data storage and analytics service, generally termed as data warehouse-as-a-service. Companies can use it to store and analyze data using cloud-based hardware and software.

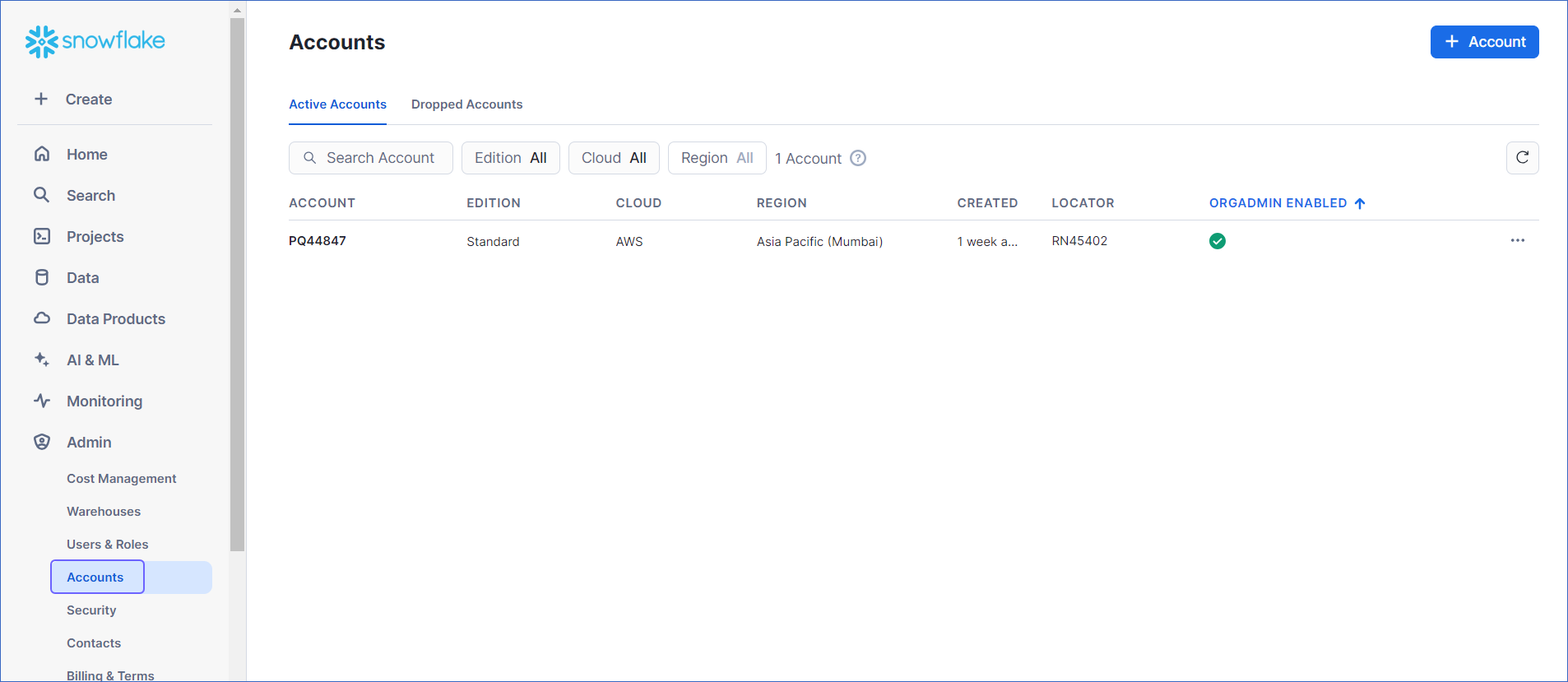

In Snowflake, you can create both data warehouses and databases to store your data. Each data warehouse can further have one or more databases, although this is not mandatory. Snowflake provides you with one data warehouse automatically when you create an account.

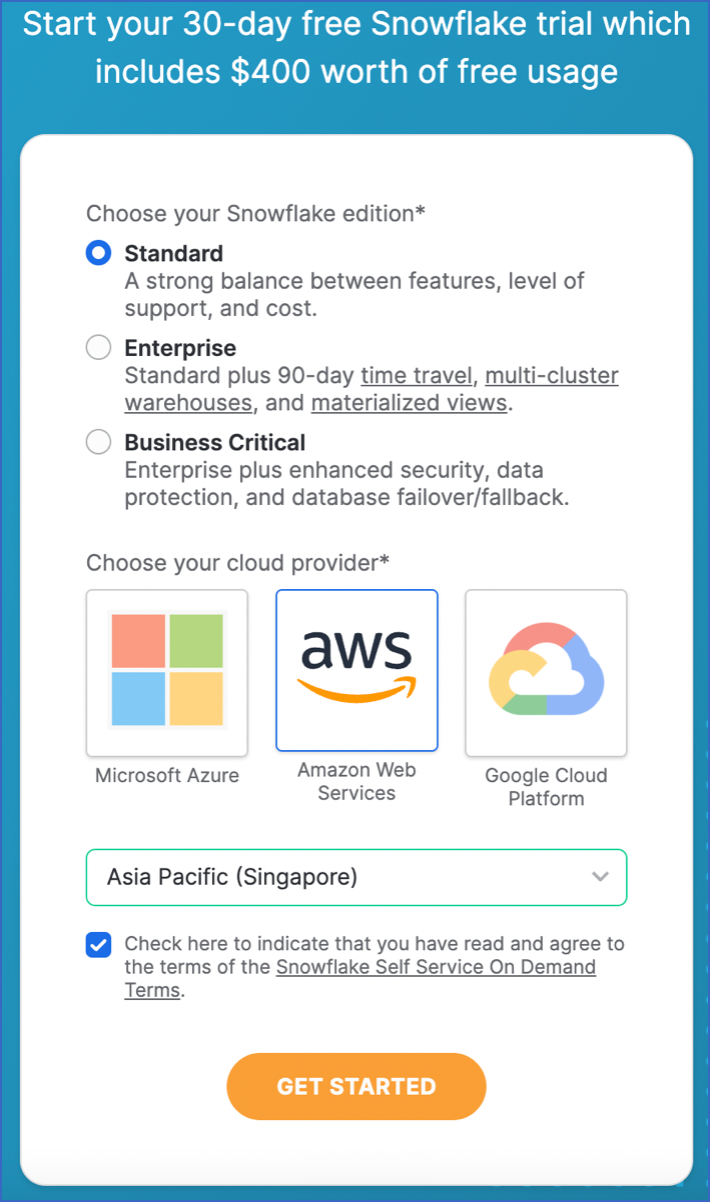

The Snowflake data warehouse may be hosted on any of the following Cloud providers:

-

Amazon Web Services (AWS)

-

Google Cloud Platform (GCP)

-

Microsoft Azure (Azure)

For Hevo to access your data, you must assign the required permissions. Snowflake uses Roles to assign permissions to users. You need ACCOUNTADMIN, SECURITYADMIN, or SYSADMIN privileges to create the required roles for Hevo. Read more about Roles in Snowflake.

The data from your Pipeline is staged in Hevo’s S3 bucket before being finally loaded to your Snowflake warehouse.

To connect your Snowflake instance to Hevo, you can either use a private link which directly connects to your Cloud provider through Virtual Private Cloud (VPC) or connect via a public network using your Snowflake account URL.

A private link enables communication and network traffic to remain exclusively within the cloud provider’s private network while maintaining direct and secure access across VPCs. It allows you to transfer data to Snowflake without going through the public internet or using proxies to connect Snowflake to your network. Note that even with a private link, the public endpoint is still accessible and Hevo uses that to connect to your database cluster.

Note: The private link is supported only for the Hevo platform regions.

Please reach out to Hevo Support to retrieve the private link for your cloud provider.

Modifying Snowflake Destination Configuration

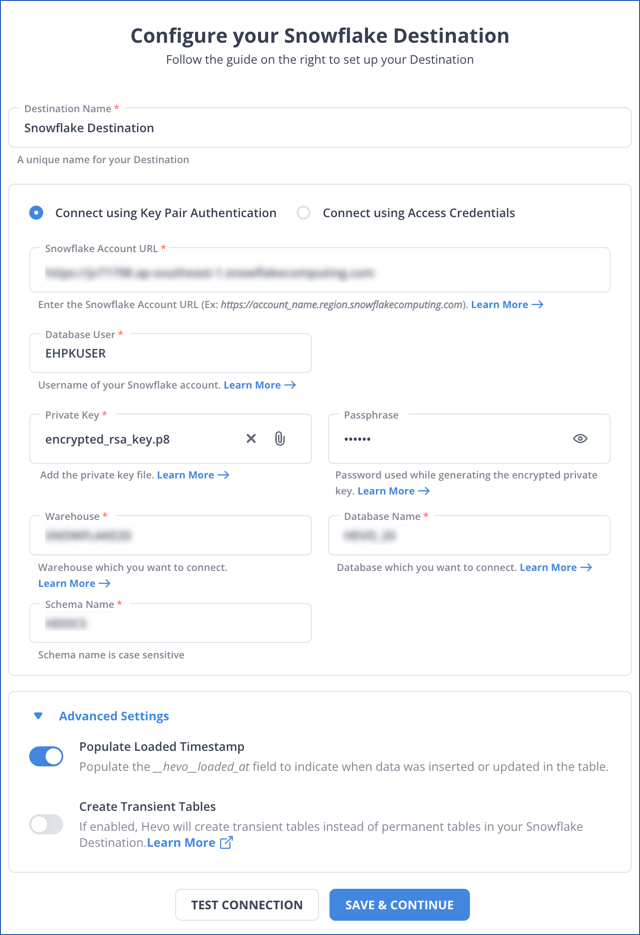

You can modify some settings after creating a Snowflake Destination. However, any configuration changes will affect all the Pipelines using this Destination. To learn about the effects, refer to section, Potential impacts of modifying the configuration settings.

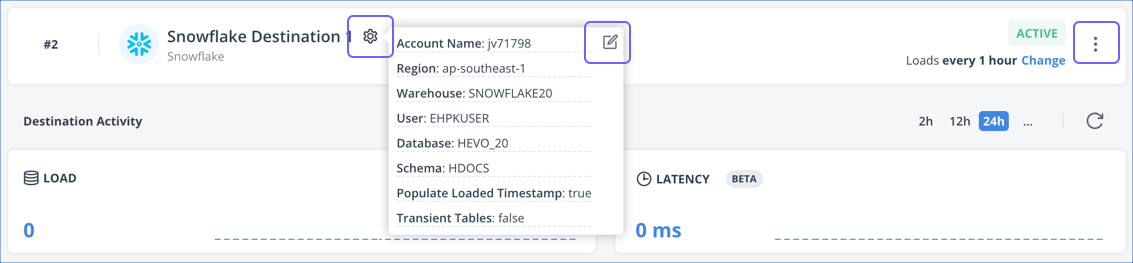

To modify the Snowflake Destination configuration:

-

Navigate to the Destinations Detailed View.

-

Do one of the following:

-

Click the Settings icon next to the Destination name, and then click the Edit icon.

-

Click the Kebab menu icon on the right, and then click Edit.

-

-

On the Edit Snowflake Destination connection settings page, you can modify the following settings:

-

Authentication method

-

Destination name

-

Account URL

-

Database User

-

Private Key or Database Password, depending on the authentication method

-

Passphrase

Note: You must change the passphrase if you have modified your encrypted private key.

-

Warehouse name

-

Database name

-

Schema name

-

Populate Loaded Timestamp

-

-

Click SAVE & CONTINUE. Optionally, click TEST CONNECTION to check the connection to your Snowflake Destination, and then save the modified configuration.

Potential impacts of modifying the configuration settings

-

Changing any configuration setting affects all Pipelines using the Destination.

-

Changing any data warehouse or database-related settings may lead to inconsistency in the data stored in your Destination tables.

(Optional) Configuring Key Pair Rotation

You can rotate and replace the private and public keys used for authentication based on your set expiration schedule. Snowflake supports using multiple active keys, and currently, you can associate two public keys with a single user using the RSA_PUBLIC_KEY and RSA_PUBLIC_KEY_2 parameters.

Perform the following steps to rotate your keys:

-

Assign the newly generated public key to your Snowflake user. Use the parameter key that is currently not assigned to the user. For example, if

RSA_PUBLIC_KEYis presently associated with the user, then assign the new public key toRSA_PUBLIC_KEY_2and vice versa. To do this, you can run the following command in your Snowflake account:ALTER USER <your_snowflake_user> SET RSA_PUBLIC_KEY_2='<public key>'; -

Edit your Snowflake Destination configuration and replace the private key with the one you generated in Step 1.

-

Remove the old public key assigned to your Snowflake user. Assuming that your old public key is set in

RSA_PUBLIC_KEY, run the following command in your Snowflake account to remove the assigned key:ALTER USER <your_snowflake_user> UNSET RSA_PUBLIC_KEY;

Note: Replace the placeholder values in the commands above with your own. For example, <your_snowflake_user> with HARRY_POTTER.

Handling Source Data with Different Data Types

For teams created in or after Hevo Release 1.58, Hevo automatically modifies the data type of a Snowflake table column to accommodate Source data with a different data type. Data type promotion is performed on tables that are less than 50GB in size. Read Handling Different Data Types in Source Data.

Note: Your Hevo release version is mentioned at the bottom of the Navigation Bar.

Destination Considerations

- In Snowflake, when you use the conversion functions TO_VARCHAR() and TO_DATE() on a high-precision timestamp column, it returns the same output values for similar inputs. For example, both functions return the same output for the timestamp values 2023-01-01 12:00:00.000001 and 2023-01-01 12:00:00.000002. As a result, when you run a query containing any one of these conversion functions, you may see duplicate records in your Destination table.

Limitations

-

For Snowflake Destinations, Hevo supports column values of up to 16 MB for most data types, including VARCHAR, VARIANT, ARRAY, and OBJECT data types. For BINARY, GEOGRAPHY, and GEOMETRY data types, the limit is 8 MB per column. If any Source objects have columns with values that exceed these limits, Hevo skips those Events and does not load their data to the Destination.

-

Hevo replicates a maximum of 4096 columns to each Snowflake table, of which six are Hevo-reserved metadata columns used during data replication. Therefore, your Pipeline can replicate up to 4090 (4096-6) columns for each table. Read Limits on the Number of Destination Columns.

See Also

Revision History

Refer to the following table for the list of key updates made to this page:

| Date | Release | Description of Change |

|---|---|---|

| Jul-07-2025 | NA | Updated the limitation about data type column size limits. |

| Nov-11-2024 | NA | Updated section, Limitations to add a limitation on data ingestion from Source objects with column values exceeding 16 MB. |

| Oct-08-2024 | 2.28.2 | Updated the page to make key pair authentication the recommended method and add information about service users. |

| Sep-23-2024 | NA | Added clarification about the users who can use Snowflake Partner Connect. |

| Sep-16-2024 | 2.27.3 | - Added sections Obtain a Private and Public Key Pair, Configuring Key Pair Rotation, and Modifying Snowflake Destination Configuration for information on key pair authentication and editing the Destination configuration. - Updated section Configure Snowflake as a Destination to provide description of the key pair authentication fields. |

| Aug-05-2024 | NA | Updated sections, Connect Using Snowflake Partner Connect and Connect Using the Snowflake Credentials as per the latest Snowflake UI. |

| Apr-18-2024 | 2.22.2 | Added sections: - Connect Using Snowflake Partner Connect. - Connect Using the Snowflake Credentials |

| Apr-11-2024 | NA | Added section, Destination Considerations. |

| Oct-03-2023 | NA | Updated sections, Create a Snowflake Account and Create and Configure your Snowflake Warehouse as per the latest Snowflake UI. |

| Aug-11-2023 | NA | Fixed broken links. |

| Apr-25-2023 | 2.12 | Updated section, Configure Snowflake as a Destination to add information that you must specify all fields to create a Pipeline. |

| Dec-19-2022 | 2.04 | Updated section, Configure Snowflake as a Destination to reflect the latest Hevo UI. |

| Dec-19-2022 | 2.04 | Updated the page overview to add information about Hevo supporting private links for Snowflake. |

| Nov-24-2022 | NA | Added a step in section, Create and Configure your Snowflake Warehouse. |

| Oct-10-2022 | NA | Added the section (Optional) Create a Snowflake Account. |

| Jun-16-2022 | NA | Modified section, Prerequisites to update the permissions required by Hevo to access data on your schema. |

| Jun-09-2022 | NA | Updated the page to provide a script containing all the user commands for creating a Snowflake warehouse. |

| Mar-31-2022 | NA | Updated the screenshots to reflect the latest Snowflake UI. |

| Feb-07-2022 | 1.81 | Updated the page to add the step, Create and Configure Your Snowflake Warehouse, and other permission related content. |

| Mar-09-2021 | 1.58 | Added section, Handling Source Data with Different Data Types. |

| Feb-22-2021 | NA | - Updated the page overview to state that the Pipeline stages the ingested data in Hevo’s S3 bucket, from where it is finally loaded to the Destination. - Formatting-related edits. |