MongoDB


MongoDB is a document-oriented NoSQL database that supports high-volume data storage. MongoDB uses collections and documents instead of tables and rows to organize data. Collections, the equivalent of tables in an RDBMS, hold sets of documents. Documents, or objects, are similar to records in an RDBMS. You can perform insert, update, and delete operations on a collection in MongoDB.

Hevo supports two ways of configuring MongoDB:

- MongoDB Atlas: This option is applicable if your MongoDB database is hosted on MongoDB Atlas.

- Generic MongoDB: This option is applicable for all deployments of MongoDB other than MongoDB Atlas.

Hevo connects to your MongoDB database using password authentication.

The following table lists the differences between the two:

| Generic MongoDB | MongoDB Atlas |
|---|---|
| Managed by the user or a third party other than MongoDB Atlas. | Fully managed database service from MongoDB. |

Refer to the required MongoDB variant for the steps to configure it as a Source in your Hevo Pipeline and start ingesting data.

MongoDB Configurations

MongoDB supports three configurations:

- Standalone without replicas: Includes a single instance of MongoDB.

  Note: Hevo currently does not support this configuration.

- Standalone with replicas: Includes one primary instance of MongoDB. Secondary instances follow the primary by replicating data from it. While configuring MongoDB as a Source, you must provide a comma-separated list of all the instances in the Database Host field.

- Clustered: Includes three components: a shard router (mongos), a config server (mongod), and a data shard (mongod). Each data shard can have its own replicas for redundancy. While configuring MongoDB as a Source, you must provide a comma-separated list of all instances of the MongoDB routers in the Database Host field.
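As a rough illustration of the comma-separated list described above, the following sketch joins instance addresses into a single string. The helper name and the host names are hypothetical placeholders, and the exact format that each entry should take in the Database Host field is an assumption, not something stated on this page.

```javascript
// Hypothetical helper: joins instance addresses into one comma-separated string.
function buildDatabaseHostValue(instances) {
  const cleaned = instances
    .map((entry) => entry.trim())
    .filter((entry) => entry.length > 0);
  if (cleaned.length === 0) {
    throw new Error("At least one instance is required");
  }
  // Drop duplicates while keeping the original order.
  return [...new Set(cleaned)].join(",");
}

// Standalone with replicas: list every instance (placeholder host names).
const replicaSetHosts = buildDatabaseHostValue([
  "mongo-primary.example.com",
  "mongo-secondary-1.example.com",
  "mongo-secondary-2.example.com",
]);

// Clustered: list only the MongoDB routers (mongos), not the shards.
const clusterHosts = buildDatabaseHostValue([
  "mongos-1.example.com",
  " mongos-2.example.com ",
]);

console.log(replicaSetHosts);
console.log(clusterHosts);
```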

Prerequisites

- The MongoDB version is 3.4 or higher.

- The MongoDB version is 4.0 or higher, if the Ingestion mode is Change Streams. The OpLog ingestion mode is compatible with all supported versions of MongoDB.

  Use the following command to find the MongoDB version on Ubuntu:

  `mongod --version`

- A MongoDB user with `read` access to the database that is to be replicated and to the `local` database.

- A retention period of at least 72 hours in the OpLog. Read OpLog Retention Period.
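The version and user prerequisites above can be sketched as follows. This is a minimal illustration, not Hevo's own validation logic: the function names, the mode keys (`oplog`, `changeStreams`), and the user name, password, and database name are all hypothetical. The object returned by `buildReadUserSpec` has the shape that the MongoDB shell method `db.createUser()` accepts.

```javascript
// Parse a bare version string such as "4.0.28" (or "v4.0.28") into [4, 0, 28].
function parseVersion(version) {
  return version
    .replace(/^v/, "")
    .split(".")
    .map((part) => Number.parseInt(part, 10) || 0);
}

// True if `version` is greater than or equal to `minimum`.
function isAtLeast(version, minimum) {
  const a = parseVersion(version);
  const b = parseVersion(minimum);
  for (let i = 0; i < Math.max(a.length, b.length); i++) {
    const left = a[i] || 0;
    const right = b[i] || 0;
    if (left !== right) return left > right;
  }
  return true;
}

// Minimum versions taken from the prerequisites; the keys are hypothetical.
const MINIMUM_VERSION = { oplog: "3.4", changeStreams: "4.0" };

function supportsIngestionMode(version, mode) {
  const minimum = MINIMUM_VERSION[mode];
  if (!minimum) throw new Error(`Unknown ingestion mode: ${mode}`);
  return isAtLeast(version, minimum);
}

// Builds a user definition with read access to the replicated database
// and to the local database.
function buildReadUserSpec(user, pwd, databaseName) {
  return {
    user,
    pwd,
    roles: [
      { role: "read", db: databaseName },
      { role: "read", db: "local" },
    ],
  };
}

console.log(supportsIngestionMode("3.6.23", "changeStreams"));
console.log(buildReadUserSpec("hevo_reader", "<password>", "sales"));
```

In the MongoDB shell, such a definition would typically be passed to `db.createUser()` while connected to the authentication database you use for that user.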

OpLog Retention Period

The OpLog Retention Period is the duration for which Events are held in the OpLog. If an Event is not read within that period, then it is lost.

This may happen if:

- The OpLog is full, and the database has started discarding the older Event entries to write the newer ones.

- The timestamp of the Event is older than the OpLog retention time.

The OpLog Retention Period directly impacts the OpLog size that you must maintain to hold the entries.

To enable Hevo to read the Events without losing them, you need to maintain an adequate OpLog size and retention period. If either of these is insufficient, Hevo alerts you. For more information, read OpLog Alerts.
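To make the relationship between retention period and OpLog size concrete, here is a back-of-the-envelope sketch. It assumes that the OpLog grows at a roughly steady rate, which real workloads often do not. The function name, the growth rate of 200 MB per hour, and the headroom factor are illustrative assumptions, not values from Hevo or MongoDB.

```javascript
// Rough estimate: size needed = hourly OpLog growth x retention hours x headroom.
// All inputs are assumptions you would measure or choose for your own deployment.
function estimateOplogSizeMB(growthMBPerHour, retentionHours, headroomFactor = 1) {
  if (growthMBPerHour < 0 || retentionHours <= 0 || headroomFactor < 1) {
    throw new RangeError("Growth must be >= 0, retention > 0, headroom >= 1");
  }
  return Math.ceil(growthMBPerHour * retentionHours * headroomFactor);
}

// Hypothetical workload writing about 200 MB of OpLog entries per hour.
const baselineMB = estimateOplogSizeMB(200, 72);
// Same workload with 25% headroom for sudden bursts of Events.
const withHeadroomMB = estimateOplogSizeMB(200, 72, 1.25);

console.log(baselineMB, withHeadroomMB);
```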

OpLog Alerts

If the OpLog retention period is set to less than 24 hours, and if the OpLog is almost full, Hevo displays a warning as shown below:

The OpLog window is calculated as the difference between the timestamps of the first and last entries in the OpLog. To reduce the likelihood of Events not being read from the OpLog, you must keep the OpLog window at 24 hours or more.

We recommend having enough space to retain the OpLog for 72 hours. This is to avoid disruption in replication due to a sudden influx of Events from the OpLog.
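The window calculation described above can be sketched as follows. The function names and status labels are hypothetical, and the classification only mirrors the 24-hour and 72-hour figures on this page; it is not Hevo's actual alerting logic, which also considers how full the OpLog is. In the MongoDB shell, `rs.printReplicationInfo()` reports the first and last OpLog entry times that such a calculation would start from.

```javascript
const MINIMUM_WINDOW_HOURS = 24;     // below this, Events are at risk of being missed
const RECOMMENDED_WINDOW_HOURS = 72; // retention recommended on this page

// Window = time of last OpLog entry minus time of first OpLog entry, in hours.
function oplogWindowHours(firstEntryTime, lastEntryTime) {
  const elapsedMs = lastEntryTime.getTime() - firstEntryTime.getTime();
  if (Number.isNaN(elapsedMs) || elapsedMs < 0) {
    throw new RangeError("lastEntryTime must be a valid date at or after firstEntryTime");
  }
  return elapsedMs / (60 * 60 * 1000);
}

// Hypothetical labels for comparing a window against the two thresholds.
function classifyOplogWindow(hours) {
  if (hours < MINIMUM_WINDOW_HOURS) return "below-minimum";
  if (hours < RECOMMENDED_WINDOW_HOURS) return "below-recommended";
  return "ok";
}

const windowHours = oplogWindowHours(
  new Date("2024-01-01T00:00:00Z"),
  new Date("2024-01-01T18:00:00Z")
);
console.log(windowHours, classifyOplogWindow(windowHours));
```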

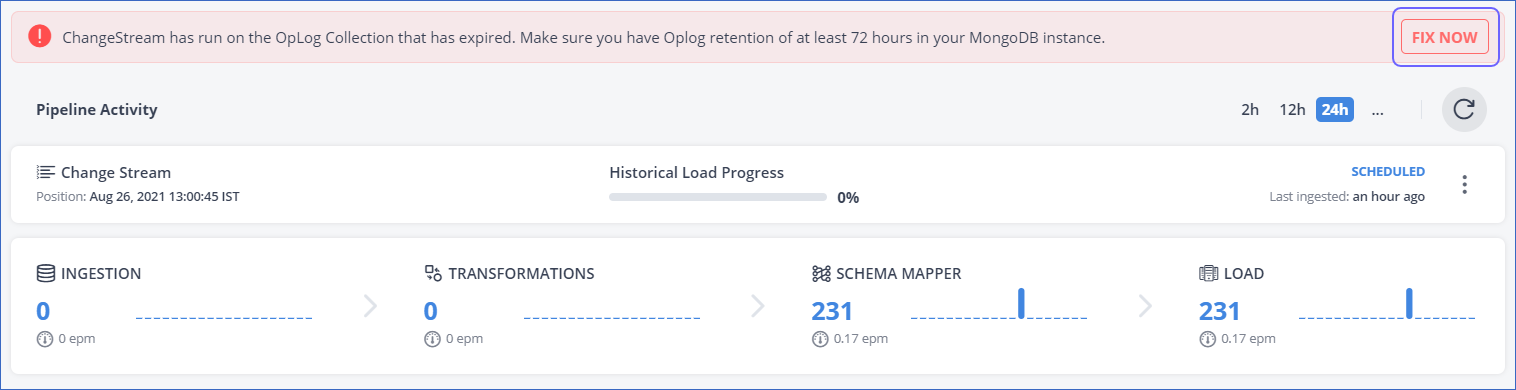

If the OpLog expires, Hevo displays the error message as shown below:

To resolve this error, see the section Resolving Pipeline Failure due to OpLog Expiry below.

Resolving Pipeline Failure due to OpLog Expiry

In case of Pipeline failures due to OpLog expiry, Hevo displays the following error in the Pipeline Detailed View:

To resolve the error:

-

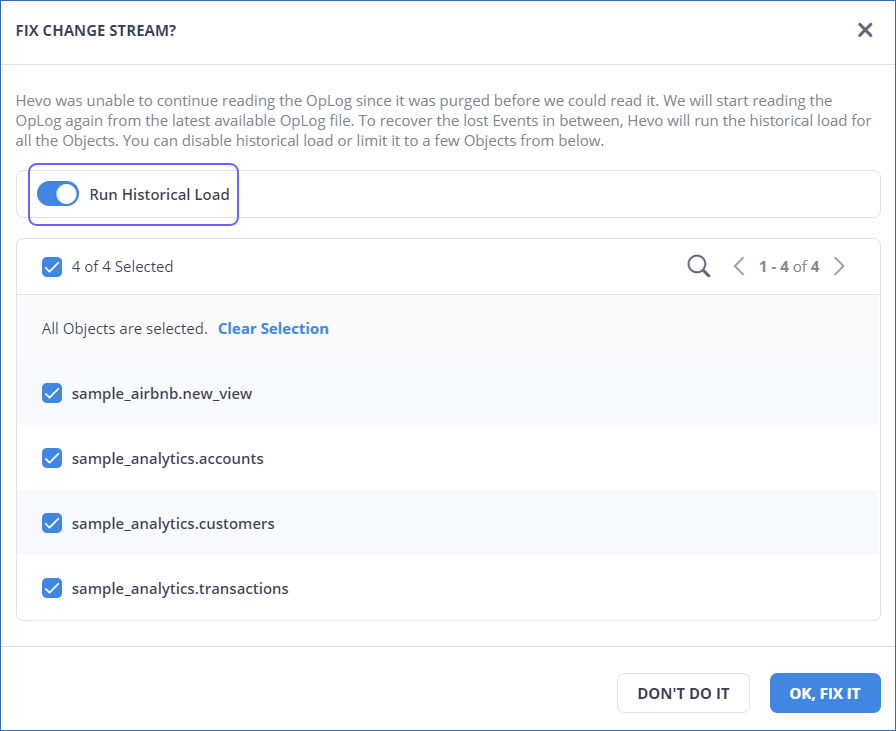

In the error banner, click FIX NOW and do one of the following:

-

Enable the Run Historical Load option and select the objects you want to restart the historical load for and read the OpLog from the latest available log files. This allows you to recover all the Events lost due to the expired BinLog as Hevo ingests them as historical data. Once all the historical data is read, Hevo starts reading the latest available log files.

-

Disable the Run Historical Load option and read the OpLog from the latest available log files. Choosing this option means all the Events lost due to the expired log files are not recovered. Hevo skips those Events and starts reading from the latest available log files.

-

-

Click OK, FIX IT.

Hevo recommends extending the OpLog retention period to avoid such failures in the future.

Extending the OpLog Retention Period

Perform the following steps to extend the OpLog retention period. We recommend a retention period of 72 hours.

Note: To extend the OpLog Retention Period, you must have access to the admin database.

1. Do one of the following:

2. In the Pipeline Detailed View, click the kebab menu in the Summary Bar.

3. Click Change Position.

4. Update the position to the current date and time.

5. Click UPDATE.

6. In the Objects list, click the kebab menu, and then click Restart Historical Load for the required objects.

   Alternatively, select all the objects and restart the historical load for them.

Once the historical load completes, all the data up to the position (date and time) that you set in Step 4 above should become available.
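On the MongoDB side, one way to extend the retention is the `replSetResizeOplog` administrative command, which is run against the `admin` database on a replica set member. The sketch below only builds the command document; the helper name and the example size of 16000 MB are assumptions, and this is not presented as the exact procedure that Hevo prescribes. The `size` value is in megabytes, MongoDB requires it to be at least 990, and the optional `minRetentionHours` field is available only in MongoDB 4.4 and later.

```javascript
// Hypothetical helper that builds a replSetResizeOplog command document.
// sizeMB: new OpLog size in megabytes (MongoDB enforces a minimum of 990).
// minRetentionHours: optional; only understood by MongoDB 4.4 and later.
function buildResizeOplogCommand(sizeMB, minRetentionHours) {
  if (!Number.isFinite(sizeMB) || sizeMB < 990) {
    throw new RangeError("OpLog size must be at least 990 MB");
  }
  const command = { replSetResizeOplog: 1, size: sizeMB };
  if (minRetentionHours !== undefined) {
    if (!Number.isFinite(minRetentionHours) || minRetentionHours <= 0) {
      throw new RangeError("minRetentionHours must be a positive number");
    }
    command.minRetentionHours = minRetentionHours;
  }
  return command;
}

// Example: a 16000 MB OpLog that keeps at least 72 hours of entries.
const resizeCommand = buildResizeOplogCommand(16000, 72);
console.log(JSON.stringify(resizeCommand));
```

In the MongoDB shell, a document like this would be passed to `db.adminCommand()`. Check the current size first and repeat the command on each member of the replica set, since the resize applies per member.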

Schema Mapper and Primary Keys

Hevo uses the following primary keys to upload the records in the Destination:

Source Considerations

- MongoDB does not support null values for the `_id` field.

  The `_id` field in a MongoDB document serves as its primary key. Therefore, commands that use `_id` as a parameter, such as commands to fetch, sort, or update data, do not run successfully if you provide a null value in the `_id` field.

  For example, when you run the following command in MongoDB to select and sort data according to their `_id` values, you get a Null Pointer exception while fetching the document if the `_id` field does not hold a value:

  `db.collection.aggregate([{ $group: { _id: { $type: "$_id" }, type: { $min: "$_id" } } }])`

- MongoDB does not support BSON documents larger than 16 MB in Change Streams.

  The Change Streams response documents must adhere to the 16 MB limit for BSON documents. If a document exceeds 16 MB, the Pipeline is paused and an error is displayed stating, Failed to read documents from the Change Stream. Documents larger than 16 MB are not supported. This applies to scenarios such as when the update operation in Change Streams is configured to return the full updated document, or when an insert or replace operation is performed with a document that is at or just below the size limit.

- Views in MongoDB do not support OpLog or Change Streams. As a result, Hevo is unable to ingest incremental data for Views.

  For Pipelines created before Release 2.08, you can ingest the data as historical data by restarting the object each time.

  Note: The Events ingested during the historical load for this object count towards your Events quota consumption.

  For Pipelines created after Release 2.08, Hevo does not ingest data related to Views.
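The first two considerations above lend themselves to simple pre-checks. The following sketch works on plain JavaScript objects and byte counts that you supply; the function names are hypothetical, and it does not compute BSON sizes itself. It assumes the 16 MB limit means 16 x 1024 x 1024 bytes, which is MongoDB's maximum BSON document size.

```javascript
const MAX_BSON_DOCUMENT_BYTES = 16 * 1024 * 1024; // 16,777,216 bytes

// True if the document carries an _id that is neither null nor missing.
function hasUsableId(doc) {
  return doc._id !== null && doc._id !== undefined;
}

// Splits documents into those with a usable _id and those without one.
function partitionByUsableId(docs) {
  const usable = [];
  const unusable = [];
  for (const doc of docs) {
    (hasUsableId(doc) ? usable : unusable).push(doc);
  }
  return { usable, unusable };
}

// True if a document of the given size (in bytes) is over the limit.
// The caller supplies the size; this sketch does not measure BSON itself.
function exceedsChangeStreamLimit(sizeInBytes) {
  return sizeInBytes > MAX_BSON_DOCUMENT_BYTES;
}

const sample = [{ _id: 1, name: "a" }, { _id: null, name: "b" }, { name: "c" }];
const { usable, unusable } = partitionByUsableId(sample);
console.log(usable.length, unusable.length);
console.log(exceedsChangeStreamLimit(17 * 1024 * 1024));
```

In the MongoDB shell, a query such as `db.collection.find({ _id: null })` is one way to look for documents whose `_id` is null or missing in your own collection.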


Revision History

Refer to the following table for the list of key updates made to this page:

| Date | Release | Description of Change |
|---|---|---|
| Oct-09-2024 | NA | Updated the Prerequisites section to reflect that Hevo only supports MongoDB version 3.4 and higher. |
| Apr-29-2024 | NA | Removed sections, Select the Source Type, Select the MongoDB Variant, and Specify MongoDB Connection Settings. |
| Mar-05-2024 | NA | Updated links. |
| Feb-20-2023 | 2.08 | Updated section, Source Considerations to inform users about Hevo not ingesting the Views object as it does not have support for OpLog or Change Streams. |
| Apr-21-2022 | 1.86 | Removed section, Select the Pipeline Mode. |
| Jan-24-2022 | NA | Added information about how data is read in OpLog and Change Stream mode in the Select the Pipeline Mode section. |
| Dec-06-2021 | NA | Updated section, Source Considerations to include the size limit for BSON documents in Change Streams. |
| Nov-09-2021 | 1.75 | Added the section, Source Considerations to explain that MongoDB does not support null values for the _id field. |
| Sep-09-2021 | 1.71 | - Renamed and updated the section, Resolving Pipeline failure due to OpLog Expiry. - Updated the section, OpLog Alerts with a new error message. |
| Aug-06-2021 | 1.69 | - Added sections, OpLog Retention Period and Resolving OpLog Expiry Failures. - Updated section, OpLog Alerts with the new warning message. - Added a note in section, Extending OpLog Retention Period that access to the admin database is needed to extend the OpLog retention period. |
| Jun-28-2021 | 1.66 | Added section, Schema Mapper and Primary Keys. |
| May-19-2021 | NA | - Added section, Extending the OpLog Retention Period. - Updated section, OpLog Alerts. |