Apache Kafka

Apache Kafka is an open-source distributed event streaming platform.

You can use Hevo Pipelines to replicate the data from your Apache Kafka Source to the Destination system.

Prerequisites

- The bootstrap server information is available in Apache Kafka.

- The Certificate Authority (CA) file, the client certificate, and the client key are available if Secure Sockets Layer (SSL) encryption is used.

- You are assigned the Team Administrator, Team Collaborator, or Pipeline Administrator role in Hevo to create the Pipeline.

Note: Hevo recommends using Apache Kafka version 0.10.0.1 or higher.

Perform the following steps to configure your Apache Kafka Source:

Locate the Bootstrap Server Information

To locate the bootstrap server information:

- Open the `server.properties` file.

  Note: The location of the `server.properties` file varies depending on the operating system being used. Contact your server administrator if you cannot locate this file.

- Copy the entire line after `bootstrap.servers:` that contains the list of bootstrap servers and ports.

  For example, copy `hostname1:9092, hostname2:9092` from the line below:

  `bootstrap.servers: hostname1:9092, hostname2:9092`

  You can use this information while configuring your Hevo Pipeline.
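The copied value can be checked before it is pasted into Hevo. The following is a minimal sketch, not part of Hevo or Kafka: the helper name `parse_bootstrap_servers` is hypothetical, and it only verifies that each entry has the `host:port` shape shown in the example above.

```python
def parse_bootstrap_servers(line):
    """Split a 'bootstrap.servers' line into (host, port) pairs."""
    key = "bootstrap.servers"
    stripped = line.strip()
    if not stripped.startswith(key):
        raise ValueError(f"not a {key} line: {line!r}")
    # Java properties files accept either ':' or '=' as the key/value separator.
    value = stripped[len(key):].lstrip(" :=")
    servers = []
    for entry in value.split(","):
        entry = entry.strip()
        if not entry:
            continue
        # Split on the last ':' so the port is always the final component.
        host, _, port = entry.rpartition(":")
        if not host or not port.isdigit():
            raise ValueError(f"expected host:port, got {entry!r}")
        servers.append((host, int(port)))
    return servers


print(parse_bootstrap_servers("bootstrap.servers: hostname1:9092, hostname2:9092"))
```

A `ValueError` here usually means that part of the line was missed while copying, for example a port number.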

Configure Secure Sockets Layer (SSL) (Optional)

In the context of SSL/TLS:

- A KeyStore is a secure collection of identity certificates and private keys. By default, the KeyStore is in Java KeyStore (.jks) format.

- CN represents the common name of the server protected by the SSL certificate.

- FQDN represents the most complete domain name that identifies a host or server.

Hevo requires the CA file, the client certificate, and the client key to configure Apache Kafka as a Source if SSL encryption is enabled in Hevo. To generate these files, log in to the Kafka server folder where the Kafka TrustStore and KeyStore are located and perform the following steps:

1. Generate Client Certificate and Client Key

- Enter the following command on the Kafka server command line:

  `keytool -keystore {key_store_name}.jks -alias {key_store_name_alias} -keyalg RSA -validity {validity} -genkey`

  Note: The alias is just a shorter name for the key store. The same alias needs to be reused throughout the steps.

  You need to remember the passwords for each KeyStore or TrustStore to use later.

- Provide the answers to questions that are displayed on the interactive prompt.

  For the question `What is your first and last name?`, enter the CN for your certificate.

  Note: The Common Name (CN) must match the fully qualified domain name (FQDN) of the server to ensure that Hevo connects to the correct server. Refer to this page to find the FQDN based on the type of the server.

2. Create your Certificate Authority

Note: This step is optional if you already have a CA to sign the certificates.

A Certificate Authority (CA) is responsible for signing certificates for each server in the cluster to prevent unauthorized access.

Run the following command on the Kafka server command line to create your own certificate authority:

openssl req -new -x509 -keyout ca-key -out ca-cert -days {validity}

3. Add the Certificate Authority to a TrustStore

A TrustStore is a secure collection of CA certificates that the broker can trust.

Run the following command on the Kafka server command line to add the CA to the broker’s truststore:

keytool -keystore {broker_trust_store}.jks -alias CARoot -importcert -file ca-cert

4. Sign the certificate with the CA file

Enter the following commands on the Kafka server command line to sign the certificates with the CA file:

- Export the certificate from the KeyStore:

  `keytool -keystore {key_store_name}.jks -alias {key_store_name_alias} -certreq -file cert-file`

- Sign the certificate with the CA:

  `openssl x509 -req -CA ca-cert -CAkey ca-key -in cert-file -out cert-signed -days {validity} -CAcreateserial -passin pass:{ca-password}`

- Add the certificates back to the keystore:

  `keytool -keystore {key_store_name}.jks -alias CARoot -importcert -file ca-cert`

  `keytool -keystore {key_store_name}.jks -alias {key_store_name_alias} -importcert -file cert-signed`

5. Extract the Client Certificate key from KeyStore

Before extracting the client certificate key from the KeyStore, you must convert the KeyStore file from its existing .jks format to the PKCS12 (.p12) format for interoperability.

Enter the following commands on the Kafka server command line:

- Convert the keystore from .jks format to .p12 format:

  `keytool -v -importkeystore -srckeystore {key_store_name}.jks -srcalias {key_store_name_alias} -destkeystore {key_store_name}.p12 -deststoretype PKCS12`

- Extract the client certificate key into a .pem file:

  `openssl pkcs12 -in {key_store_name}.p12 -nocerts -nodes > cert-key.pem`

  This is the format in which Hevo understands the certificate keys.

You can now find the following files in the Kafka server folder where the Kafka TrustStore and KeyStore are located and upload them to Hevo to configure Apache Kafka as a Source.

- Client Certificate: `cert-signed`

- Client Key: `cert-key.pem`

- CA File: `ca-cert`
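Before uploading the three files, it can help to confirm that they load and that the client key belongs to the client certificate. The following is a rough sketch using Python's standard `ssl` module. The function name `check_client_files` is hypothetical, and the check assumes the files are PEM-encoded and the key is unencrypted, as produced by the `-nodes` option above. It is a local sanity check only and does not reproduce whatever validation Hevo performs.

```python
import ssl


def check_client_files(ca_file, client_cert, client_key):
    """Return None if the files load cleanly, otherwise an error message."""
    context = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    try:
        # Fails if the CA file is missing or is not a valid certificate file.
        context.load_verify_locations(cafile=ca_file)
        # Fails if the certificate or key is missing or malformed,
        # or if the private key does not match the certificate.
        context.load_cert_chain(certfile=client_cert, keyfile=client_key)
    except OSError as exc:  # ssl.SSLError is a subclass of OSError
        return f"{type(exc).__name__}: {exc}"
    return None


# File names as generated in the steps above; run from the folder containing them.
problem = check_client_files("ca-cert", "cert-signed", "cert-key.pem")
print(problem or "All three files loaded successfully.")
```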

Whitelist Hevo’s IP Addresses

You need to whitelist the Hevo IP address for your region to enable Hevo to connect to your Kafka server. To do this:

- Open the Kafka server configuration file:

  `sudo nano /usr/local/etc/config/server.properties`

  Note: Depending on how Kafka was installed, this file may be in a different location.

- In the file, scroll to the `listeners` section. If it does not exist, add it on a new line.

- Add the following under `listeners`:

  `<protocol>://0.0.0.0:<port>`

  Or

  `<protocol>://<hevo_ip>:<port>`

  Note: The `<protocol>` can be PLAINTEXT or SSL, and the `<port>` can be the same port used in your bootstrap server. The `<hevo_ip>` is the Hevo IP address for your region. If you are adding these to existing values, use a comma to separate the entries.

- Save the file.

Configure Apache Kafka Connection Settings

Perform the following steps to configure Apache Kafka as the Source in your Pipeline:

-

Click PIPELINES in the Navigation Bar.

-

Click + CREATE PIPELINE in the Pipelines List View.

-

On the Select Source Type page, select Kafka.

-

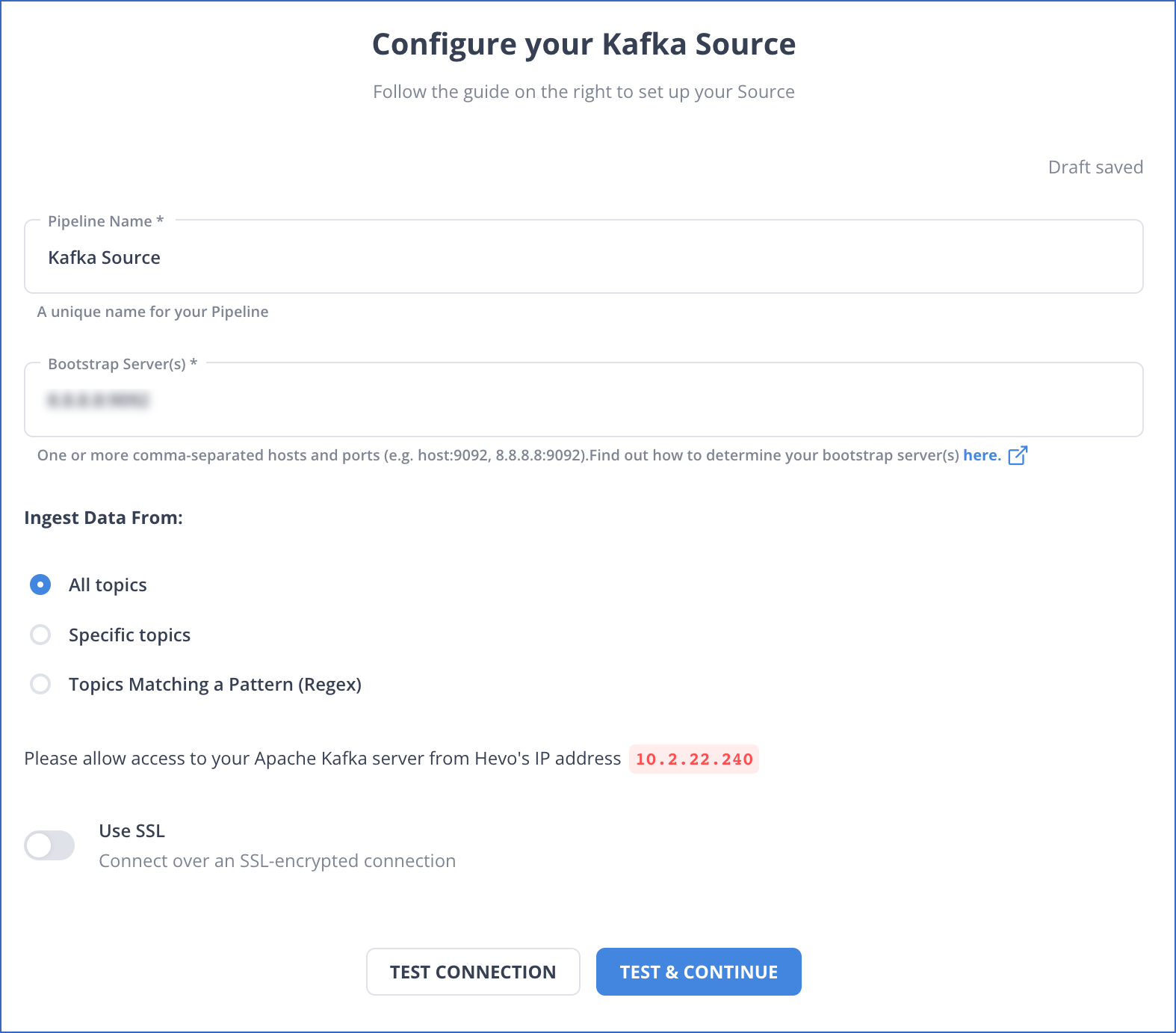

On the Configure your Kafka Source page, specify the following:

-

Pipeline Name: A unique name for your Pipeline, not exceeding 255 characters.

-

Bootstrap Server(s): The bootstrap server(s) extracted from Apache Kafka.

-

Ingest Data From

-

All Topics: Select this option to ingest data from all topics. Any new topics that are created are automatically included.

-

Specific Topics: Select this option to manually specify a comma-separated list of topics. New topics are not automatically added in this option.

-

Topics Matching a Pattern (Regex): Select this option to specify a Regular Expression (regex) to match and select the topic names. This option also fetches data for new topics that match the pattern dynamically. You can test your regex patterns here.

-

-

Use SSL: Enable this option to use an SSL-encrypted connection. Specify the following:

-

CA File: The file containing the SSL server certificate authority (CA).

-

Load all CA Certificates: If selected, Hevo loads all CA certificates (up to 50) from the uploaded CA file, else it loads only the first certificate.

Note: Select this check box if you have more than one certificate in your CA file.

-

-

Client Certificate: The client public key certificate file.

-

Client Key: The client private key file.

-

-

-

Click TEST & CONTINUE.

-

Proceed to configuring the data ingestion and setting up the Destination.
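For the Topics Matching a Pattern (Regex) option, a pattern can be previewed locally against known topic names before it is entered in Hevo. The sketch below is illustrative only. It uses Python's `re` module and assumes the pattern must match the whole topic name. The regex flavor and matching rules that Hevo applies are not stated on this page, so results may differ. The topic names are made up.

```python
import re


def select_topics(pattern, topics):
    """Return the topic names that the pattern matches in full."""
    compiled = re.compile(pattern)
    # Assumption: the whole topic name must match, not just a substring.
    return [topic for topic in topics if compiled.fullmatch(topic)]


# Hypothetical topic names for illustration.
topics = ["orders.v1", "orders.v2", "payments.v1", "orders-archive"]
print(select_topics(r"orders\.v\d+", topics))
```

Escaping the dot (`\.`) matters here: an unescaped `.` matches any character, which can pull in topics that were not intended.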

Data Replication

| For Teams Created | Default Ingestion Frequency | Minimum Ingestion Frequency | Maximum Ingestion Frequency | Custom Frequency Range (in Hrs) |
|---|---|---|---|---|
| Before Release 2.21 | 5 Mins | 5 Mins | 1 Hr | NA |
| After Release 2.21 | 6 Hrs | 30 Mins | 24 Hrs | 1-24 |

Note: The custom frequency must be set in hours as an integer value. For example, 1, 2, or 3, but not 1.5 or 1.75.
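The custom frequency rule can be expressed as a small check. This is a minimal sketch with a hypothetical function name. The 1-24 range is taken from the table above for teams created after Release 2.21 and is not an API that Hevo exposes.

```python
def validate_custom_frequency(hours, minimum=1, maximum=24):
    """Return hours if it is a whole number of hours within the allowed range."""
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(hours, bool) or not isinstance(hours, int):
        raise ValueError(f"frequency must be an integer number of hours, got {hours!r}")
    if not minimum <= hours <= maximum:
        raise ValueError(f"frequency must be between {minimum} and {maximum} hours, got {hours}")
    return hours


print(validate_custom_frequency(3))
```

Values such as `1.5` or `25` raise a `ValueError` under this check.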

- Incremental Data: Once the Pipeline is created, all new and updated records are synchronized with your Destination as per the ingestion frequency.

If you restart an object via the Pipeline UI, Hevo ingests all the data available at that time in the Source.

For records that are structured as a list of records, Hevo ingests each record as an individual record. Each child record contains a common field called ref_id which is used to indicate a common parent record.
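The handling of list-structured records can be pictured with the following sketch. It is an illustration under stated assumptions, not Hevo's implementation. The page does not say how the `ref_id` value is generated, so a random UUID stands in for it here, and the function name `split_list_record` is hypothetical.

```python
import uuid


def split_list_record(records, ref_id=None):
    """Turn one list of records into individual records sharing a ref_id."""
    # Assumption: any value shared by all children works as the ref_id.
    shared_ref_id = ref_id or uuid.uuid4().hex
    return [{**record, "ref_id": shared_ref_id} for record in records]


# A single Kafka message whose value is a list of two records (made-up data).
message = [{"order_id": 1, "status": "new"}, {"order_id": 2, "status": "paid"}]
for child in split_list_record(message):
    print(child)
```

Grouping the Destination rows by `ref_id` then recovers which records arrived together in the same parent record.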

Additional Information

Read the detailed Hevo documentation for the following related topics:

Limitations

-

Hevo only supports SSL/TLS-encrypted and plain text data in Apache Kafka.

-

Hevo only supports JSON data format in Apache Kafka.

-

Hevo does not load data from a column into the Destination table if its size exceeds 16 MB, and skips the Event if it exceeds 40 MB. If the Event contains a column larger than 16 MB, Hevo attempts to load the Event after dropping that column’s data. However, if the Event size still exceeds 40 MB, then the Event is also dropped. As a result, you may see discrepancies between your Source and Destination data. To avoid such a scenario, ensure that each Event contains less than 40 MB of data.
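The size rule in the last limitation can be sketched as follows. This is a simplified model with several assumptions that the page does not confirm: sizes are measured on the UTF-8 JSON encoding of the data, 1 MB is taken as 1024 × 1024 bytes, and an oversized column's data is replaced with `None`. The function names are hypothetical.

```python
import json

MB = 1024 * 1024  # assumption: the page does not define MB precisely


def encoded_size(value):
    """Size in bytes of the value's JSON encoding (an assumed measure)."""
    return len(json.dumps(value).encode("utf-8"))


def apply_size_limits(event, column_limit=16 * MB, event_limit=40 * MB):
    """Drop oversized column data; return None if the Event must be skipped."""
    trimmed = {
        name: (value if encoded_size(value) <= column_limit else None)
        for name, value in event.items()
    }
    # Even after dropping large columns, the Event as a whole may be too big.
    if encoded_size(trimmed) > event_limit:
        return None
    return trimmed


# Small limits are used here so that the example runs quickly.
event = {"id": 7, "payload": "x" * 100}
print(apply_size_limits(event, column_limit=50, event_limit=200))
```

Running a check of this kind on the producer side is one way to spot Events that would otherwise show up as Source and Destination discrepancies.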

Revision History

Refer to the following table for the list of key updates made to this page:

| Date | Release | Description of Change |
|---|---|---|
| Jul-07-2025 | NA | Updated the Limitations section to inform about the max record and column size in an Event. |
| Jan-07-2025 | NA | Updated the Limitations section to add information on Event size. |
| Mar-18-2024 | 2.21.2 | Updated section, Configure Apache Kafka Connection Settings to add information about the Load all CA certificates option. |
| Mar-05-2024 | 2.21 | Updated the ingestion frequency table in the Data Replication section. |
| Dec-07-2022 | NA | Updated section, Data Replication to reorganize the content for better understanding and coherence. |
| Sep-19-2022 | NA | Added section, Data Replication. |