Starting Release 2.19, Hevo has stopped supporting XMIN as a query mode for all variants of the PostgreSQL Source. As a result, you will not be able to create new Pipelines using this query mode. This change does not affect existing Pipelines. However, you will not be able to change the query mode to XMIN for any objects currently ingesting data using other query modes.

Google Cloud PostgreSQL is a fully-managed database service that helps you set up, maintain, manage, and administer your PostgreSQL relational databases on the Google Cloud platform.

You can ingest data from your Google Cloud PostgreSQL database using Hevo Pipelines and replicate it to a Destination of your choice.

Prerequisites

Perform the following steps to configure your Google Cloud PostgreSQL Source:

Set up Log-based Incremental Replication (Optional)

Note: If you are not using Logical Replication, skip this step.

PostgreSQL (version 9.4 and above) supports logical replication by writing additional information to its Write Ahead Logs (WALs).

To configure logical replication:

-

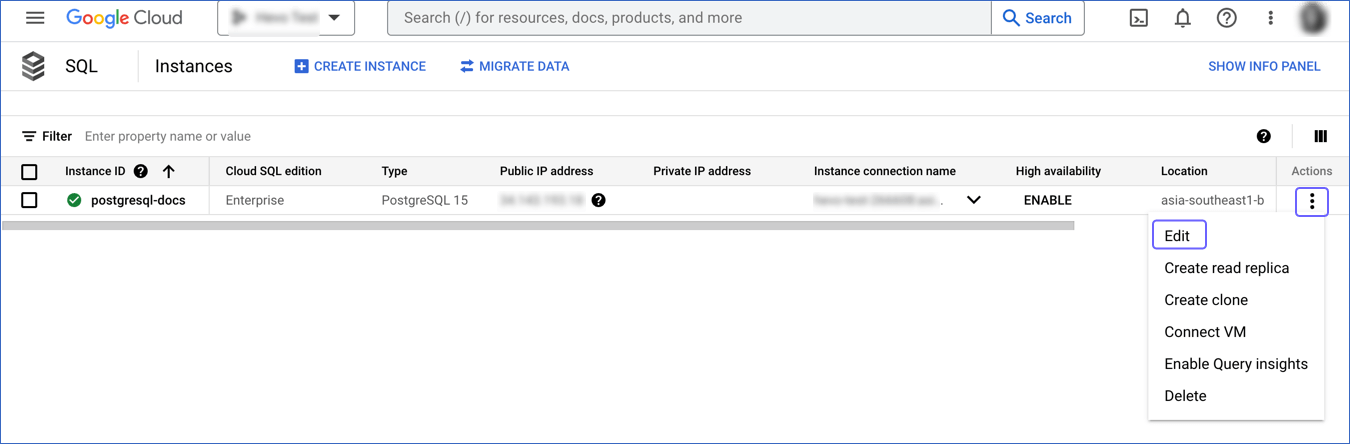

Log in to Google Cloud SQL to access your database instance.

-

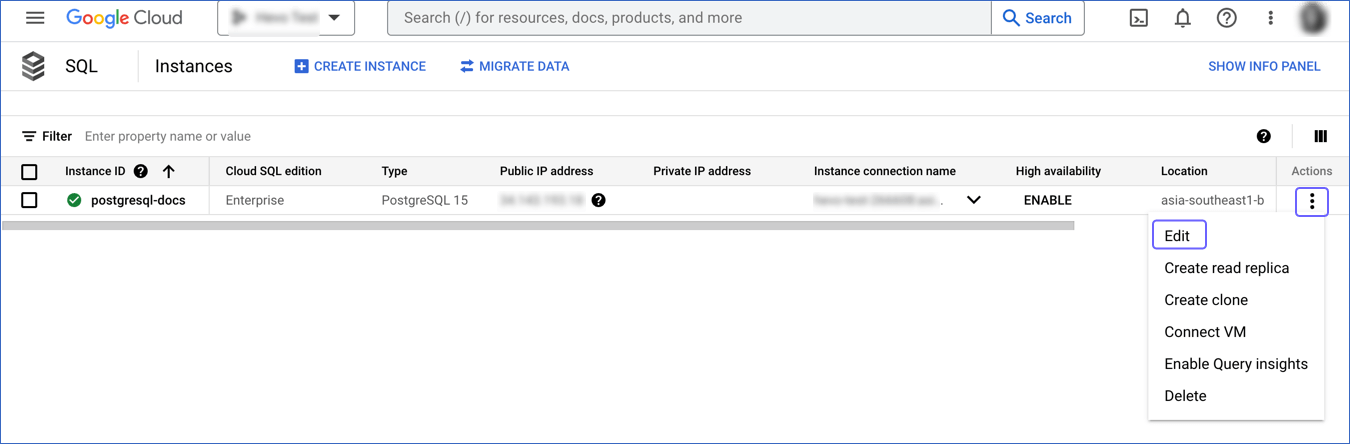

Click the More ( ) icon next to the PostgreSQL instance and click Edit.

) icon next to the PostgreSQL instance and click Edit.

-

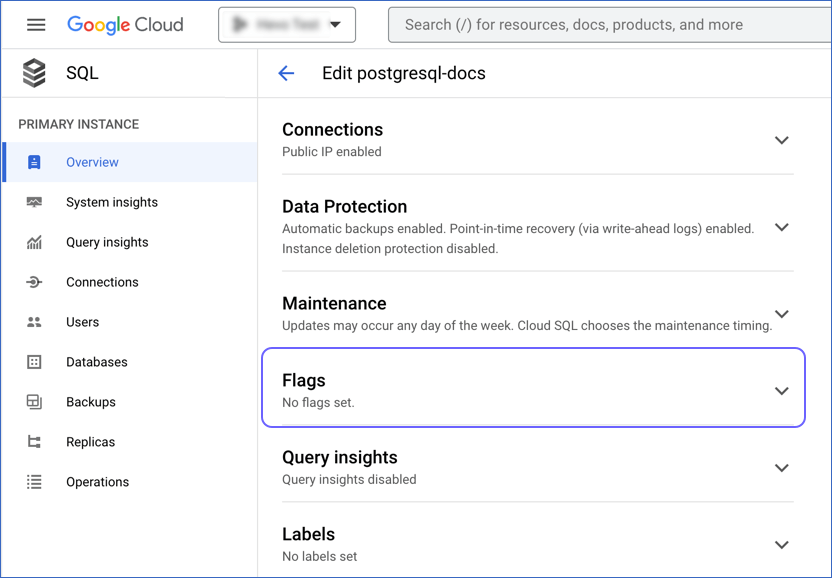

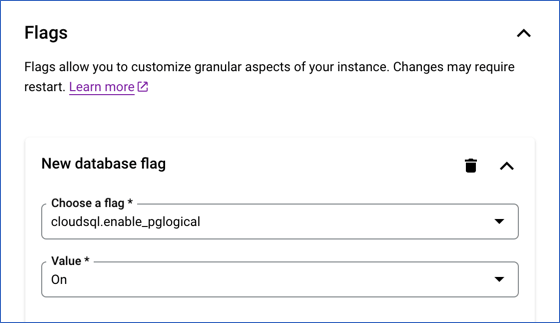

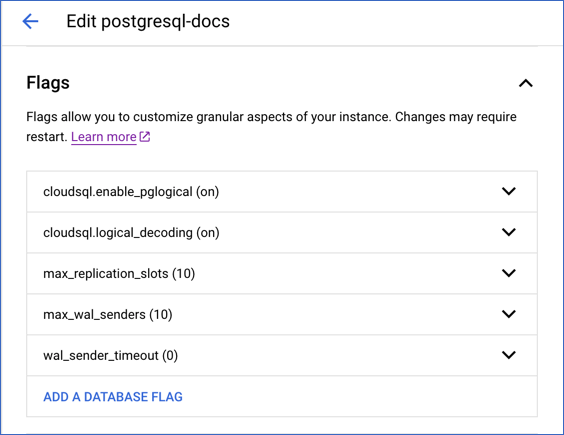

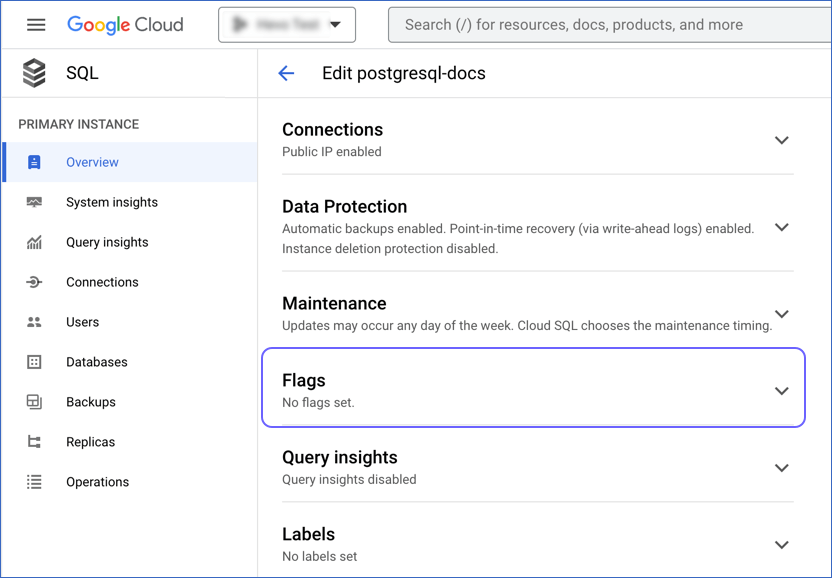

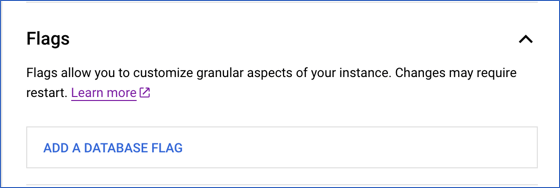

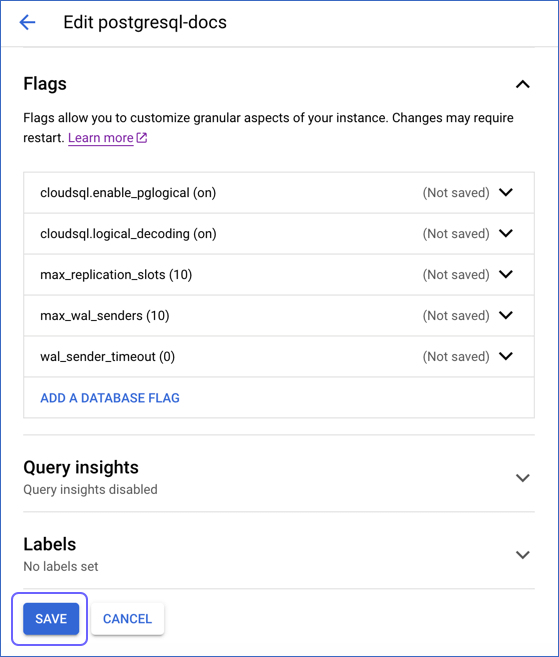

Scroll down to the Flags section.

-

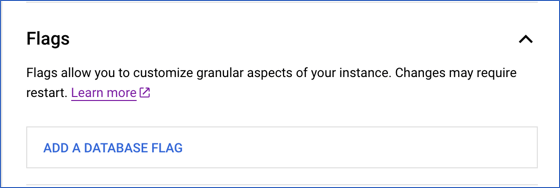

Click the drop-down next to Flags and click ADD A DATABASE FLAG.

-

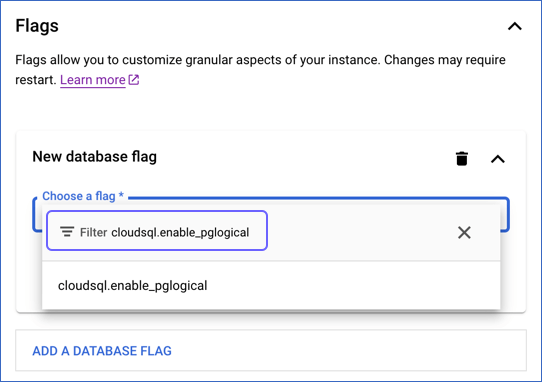

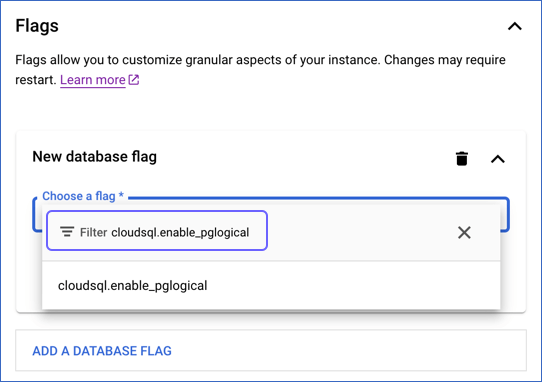

In the New database flag dialog window, click the arrow in the Choose a flag bar and type the flag name in the Filter bar.

-

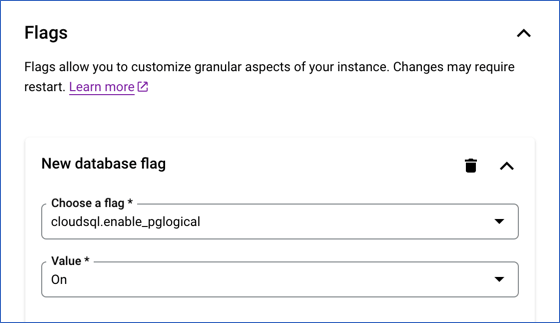

Click the flag name to select it. Select an appropriate value for the flag from the drop-down or by typing it.

-

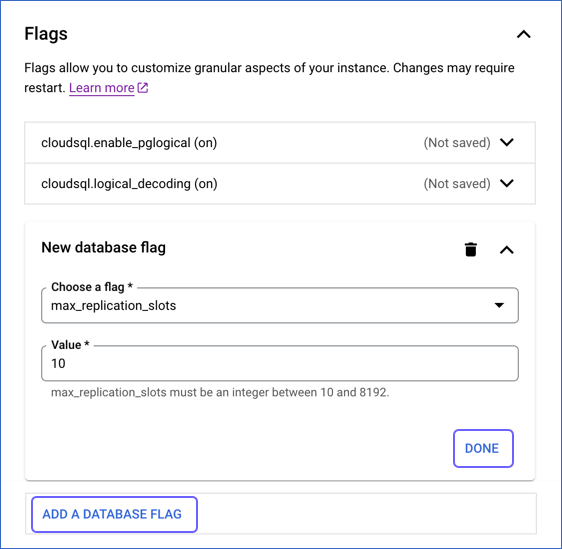

Click DONE and proceed to the next step to add all the required flags. Skip to step 9 if you have finished adding the flags.

-

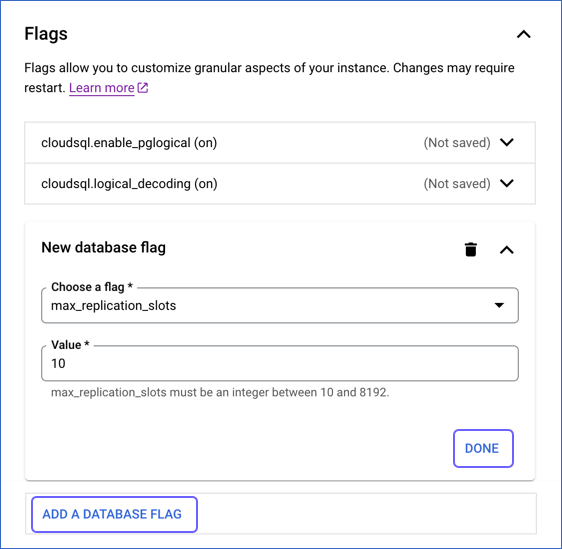

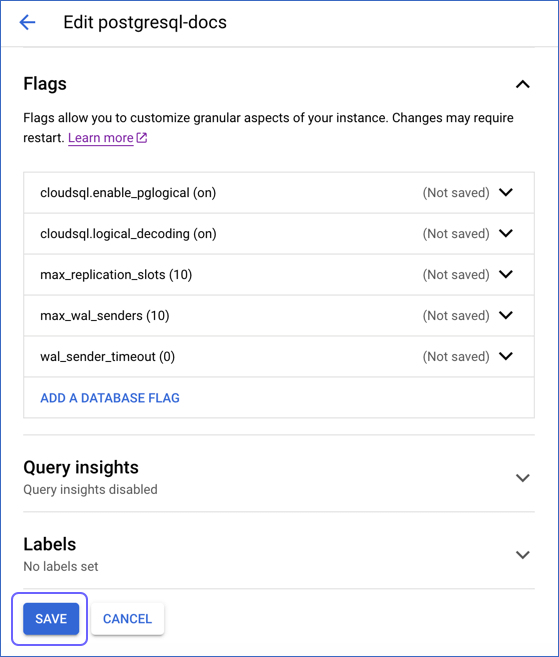

Click ADD A DATABASE FLAG, and then, repeat steps 5-7 to add the following flags and the specified value:

| Flag Name |

Value |

Description |

cloudsql.enable_pglogical |

On |

The setting to enable or turn off the pglogical extension. Default value: On. |

cloudsql.logical_decoding |

On |

The setting to enable or turn off logical replication. Default value: On. |

max_replication_slots |

10 |

The number of clients that can connect to the server. |

max_wal_senders |

10 |

The number of processes that can simultaneously transmit the WAL log. |

wal_sender_timeout |

0 |

The time, in seconds, after which PostgreSQL terminates the replication connections due to inactivity. Default value: 60 seconds.

You must set the value to 0 so that the connections are never terminated and your Pipeline does not fail. |

-

Click SAVE.

-

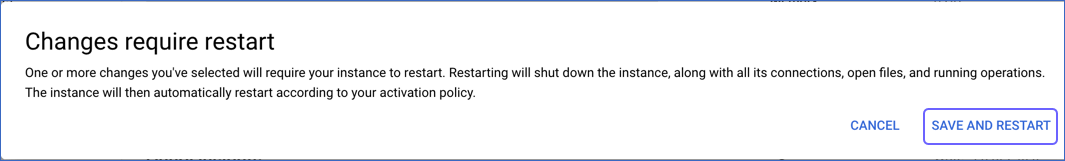

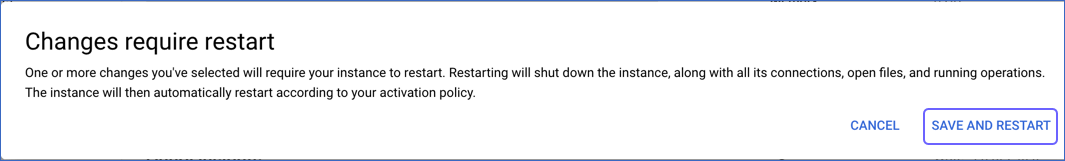

In the confirmation dialog, click SAVE AND RESTART.

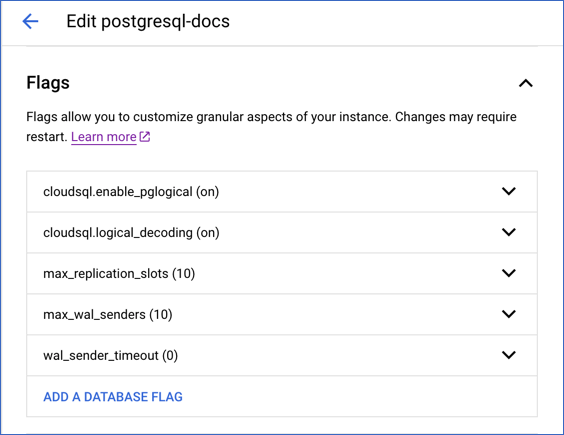

Once the instance restarts, you can view the configured settings under the Flags section.

Whitelist Hevo’s IP Addresses

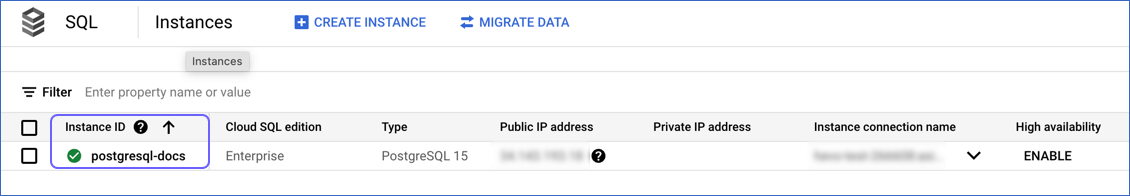

You need to whitelist the Hevo IP addresses for your region to enable Hevo to connect to your PostgreSQL database. To do this:

-

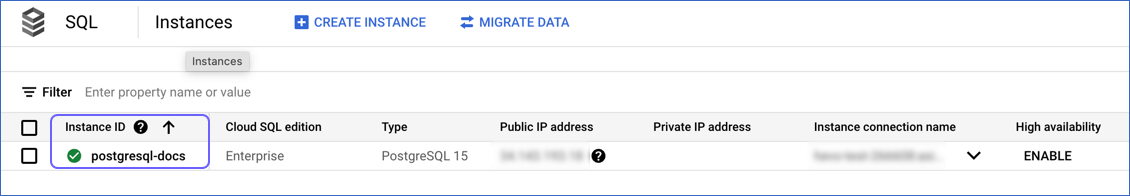

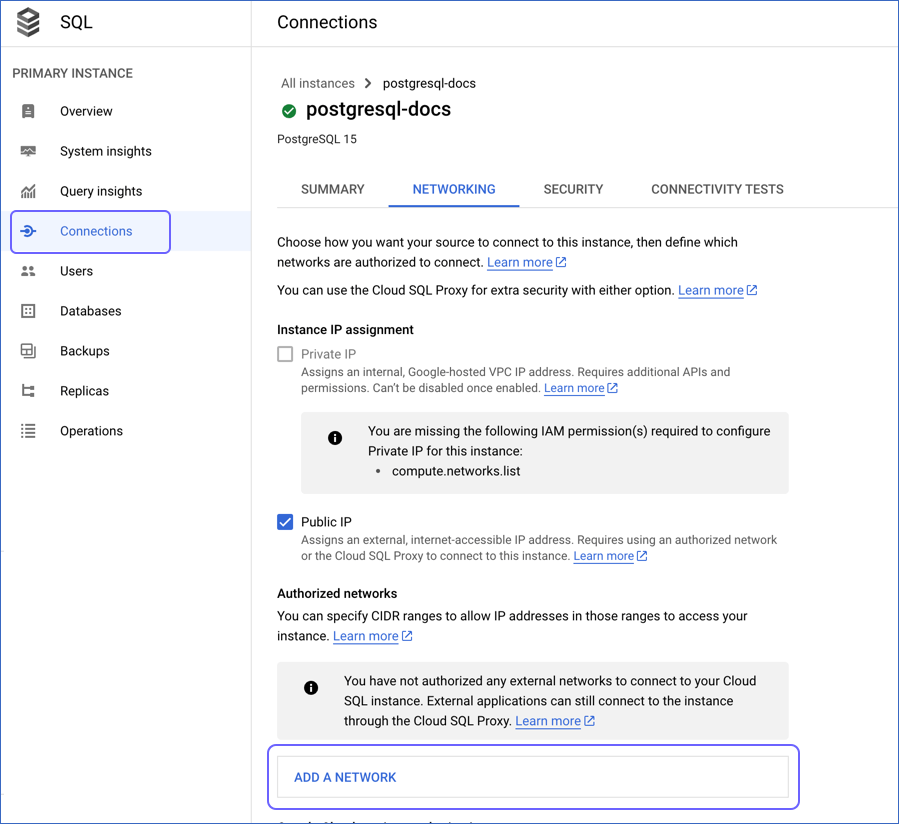

Log in to the Google Cloud SQL Console and click on your PostgreSQL instance ID.

-

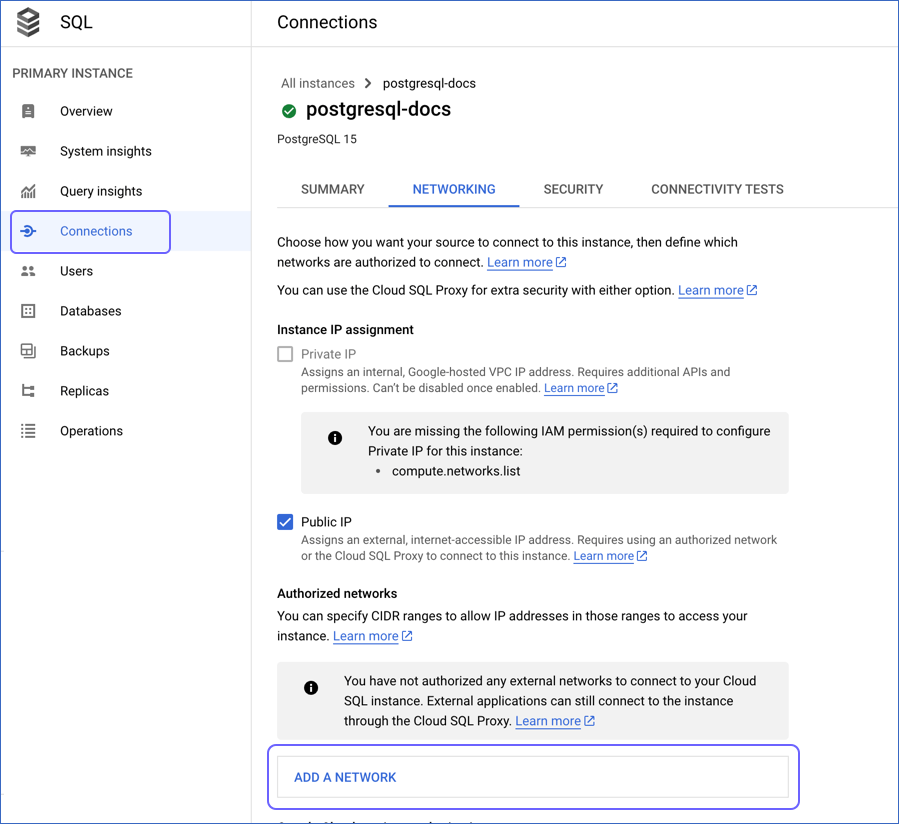

In the left navigation pane of the <PostgreSQL Instance ID> page, click Connections.

-

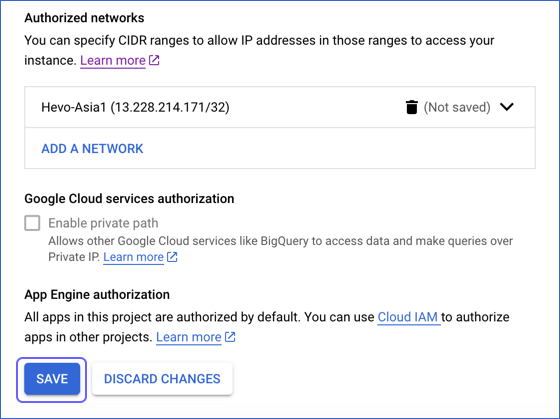

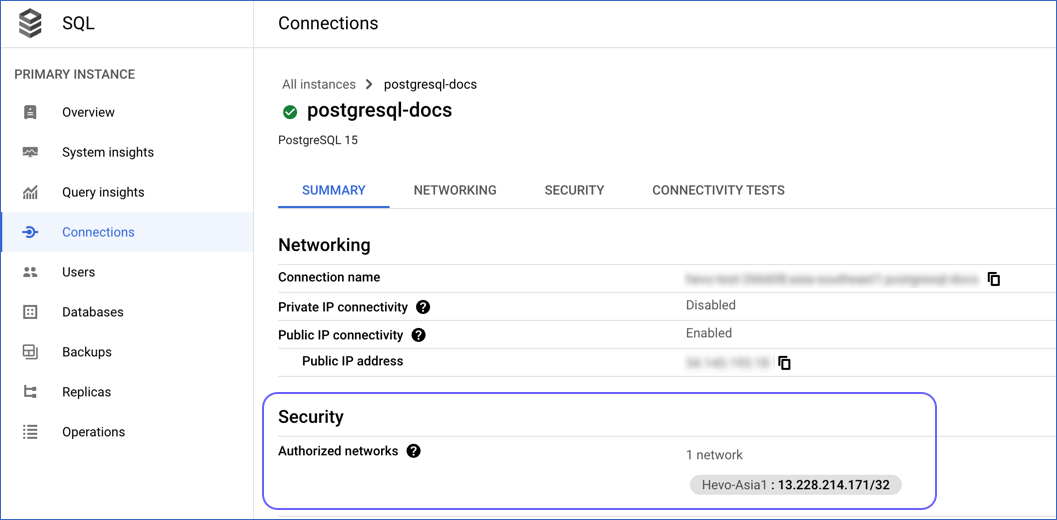

In the Connections pane, click the NETWORKING tab, and scroll down to the Authorized networks section.

-

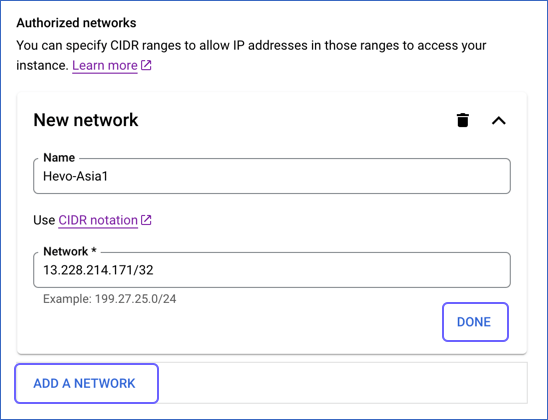

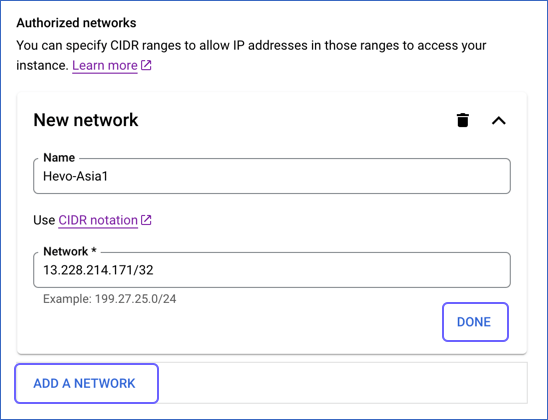

Click ADD A NETWORK, and in New Network, specify the following:

-

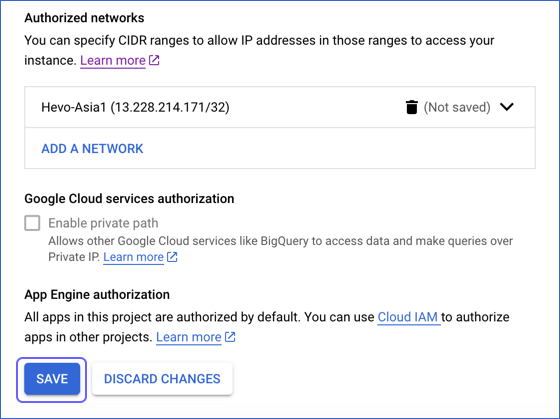

Click DONE, and then, repeat the step above for all the network addresses you want to add.

-

Click Save.

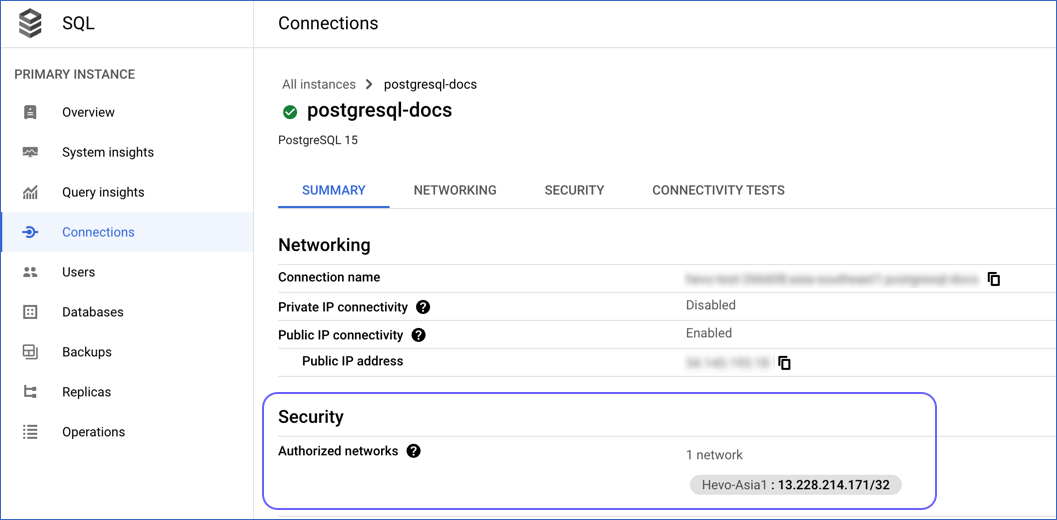

You can view the networks that you have authorized in the Summary tab under Security.

Create a Replication User and Grant Privileges

While using logical replication in Google Cloud PostgreSQL, the user must have the cloudsqlsuperuser role. This role is needed to run the CREATE EXTENSION command.

Create a PostgreSQL user with REPLICATION privileges as follows:

-

Connect to your PostgreSQL database instance as a super admin using any SQL client, such as DataGrip, and run the following commands:

CREATE USER <database_username> WITH REPLICATION

IN ROLE cloudsqlsuperuser LOGIN PASSWORD 'password';

Alternatively, set this attribute for an existing user as follows:

ALTER USER <existing_user> WITH REPLICATION;

-

Run the following commands to grant privileges to the database user:

GRANT CONNECT ON DATABASE <database_name> TO <database_username>;

GRANT USAGE ON SCHEMA <schema_name> TO <database_username>;

GRANT SELECT ON ALL TABLES IN SCHEMA <schema_name> TO <database_username>;

-

Alter the schema’s default privileges to grant SELECT privileges on tables created in the future to the database user:

ALTER DEFAULT PRIVILEGES IN SCHEMA <schema_name> GRANT SELECT ON TABLES TO <database_username>;

Note: Replace the placeholder values in the commands above with your own. For example, <database_username> with hevouser.

Specify Google Cloud PostgreSQL Connection Settings

Perform the following steps to configure Google Cloud PostgreSQL as a Source in Hevo:

-

Click PIPELINES in the Navigation Bar.

-

Click + CREATE PIPELINE in the Pipelines List View.

-

In the Select Source Type page, select Google Cloud PostgreSQL.

-

In the Configure your Google Cloud PostgreSQL Source page, specify the following:

-

Pipeline Name: A unique name for your Pipeline, not exceeding 255 characters.

-

Database Host: The Google Cloud PostgreSQL host’s IP address or DNS. For example, 35.220.150.0.

-

Database Port: The port on which your Google Cloud PostgreSQL server listens for connections. Default value: 5432.

-

Database User: The read-only user who has the permission to read tables in your database.

-

Database Password: The password for the read-only user.

-

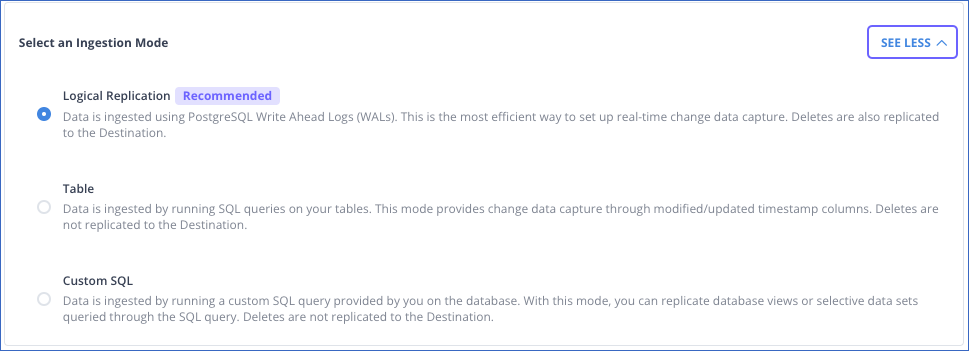

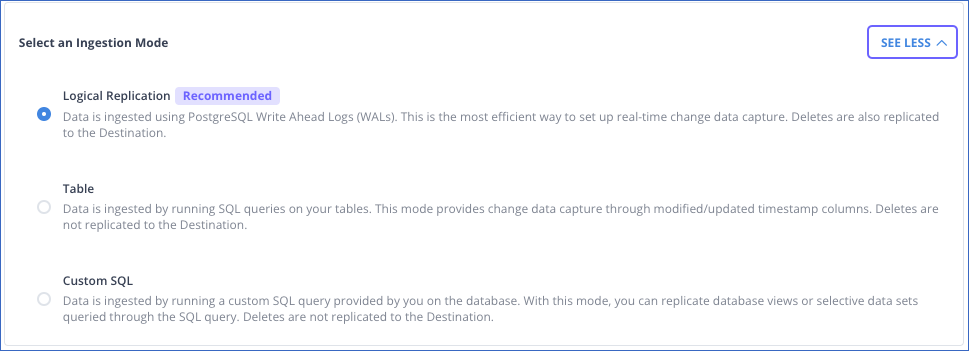

Select an Ingestion Mode: The desired mode by which you want to ingest data from the Source. This section is expanded by default. However, you can collapse the section by clicking SEE LESS. Default value: Logical Replication.

The available ingestion modes are Logical Replication, Table, and Custom SQL. Additionally, the XMIN ingestion mode is available for Early Access. Logical Replication is the recommended ingestion mode and is selected by default.

Depending on the ingestion mode you select, you must configure the objects to be replicated. Refer to section, Object and Query Mode Settings for the steps to do this.

Note: For the Custom SQL ingestion mode, all Events loaded to the Destination are billable.

-

Database Name: The name of an existing database that you wish to replicate.

-

Schema Name (Optional): The schema in your database that holds the tables to be replicated. Default value: public.

Note: The Schema Name field is displayed only for Table and Custom SQL ingestion modes.

-

Connection Settings:

-

Connect through SSH: Enable this option to connect to Hevo using an SSH tunnel, instead of directly connecting your PostgreSQL database host to Hevo. This provides an additional level of security to your database by not exposing your PostgreSQL setup to the public. Read Connecting Through SSH.

If this option is turned off, you must whitelist Hevo’s IP addresses.

-

Use SSL: Enable this option to use an SSL-encrypted connection. Specify the following:

-

CA File: The file containing the SSL server certificate authority (CA).

-

Load all CA Certificates: If selected, Hevo loads all CA certificates (up to 50) from the uploaded CA file, else it loads only the first certificate.

Note: Select this check box if you have more than one certificate in your CA file.

-

Client Certificate: The client’s public key certificate file.

-

Client Key: The client’s private key file.

-

Advanced Settings

-

Load Historical Data: Applicable for Pipelines with Logical Replication mode.

If enabled, the entire table data is fetched during the first run of the Pipeline.

If disabled, Hevo loads only the data that was written in your database after the time of creation of the Pipeline.

-

Merge Tables: Applicable for Pipelines with Logical Replication mode.

If enabled, Hevo merges tables with the same name from different databases while loading the data to the warehouse. Hevo loads the Database Name field with each record.

If disabled, the database name is prefixed to each table name. Read How does the Merge Tables feature work?

-

Include New Tables in the Pipeline: Applicable for all ingestion modes except Custom SQL.

If enabled, Hevo automatically ingests data from tables created in the Source after the Pipeline has been built. These may include completely new tables or previously deleted tables that have been re-created in the Source.

If disabled, new and re-created tables are not ingested automatically. They are added in SKIPPED state in the objects list, in the Pipeline Overview page. You can include these objects post-Pipeline creation to ingest data.

You can change this setting later.

-

Click TEST CONNECTION. This button is enabled once you specify all the mandatory fields. Hevo’s underlying connectivity checker validates the connection settings you provide.

-

Click TEST & CONTINUE to proceed for setting up the Destination. This button is enabled once you specify all the mandatory fields.

Object and Query Mode Settings

Once you have specified the Source connection settings in Step 4 above, do one of the following:

-

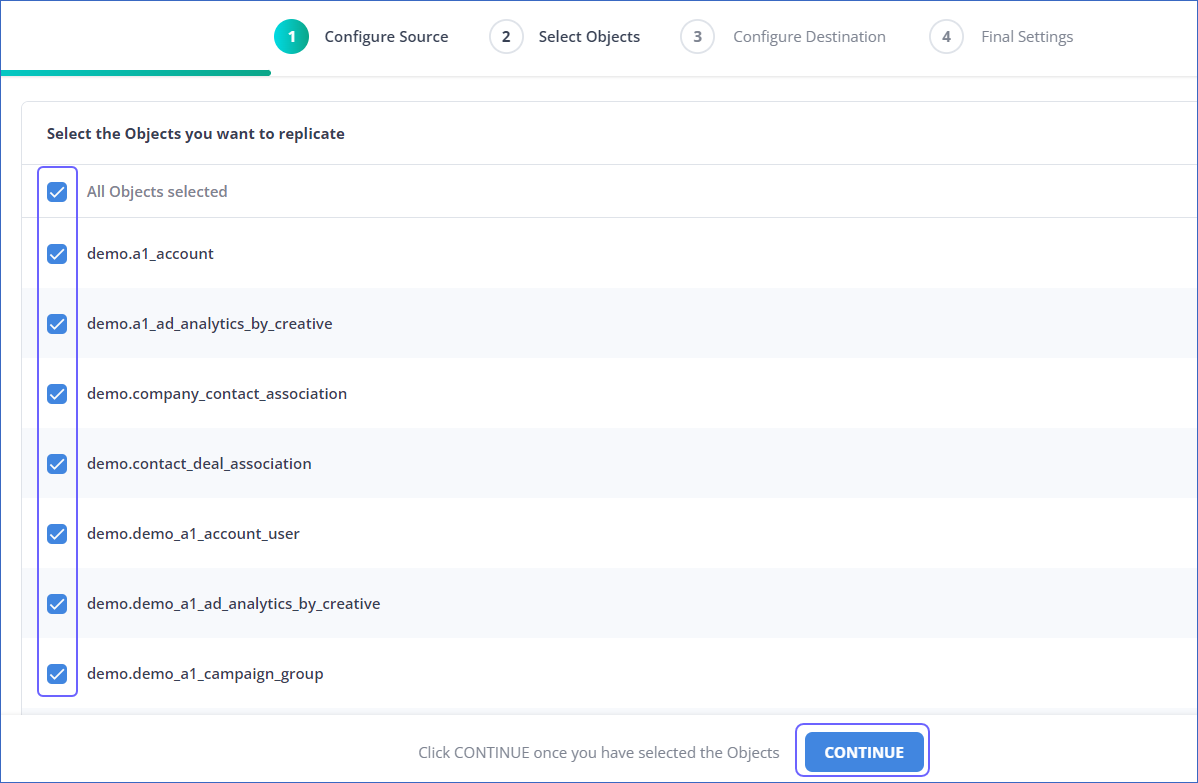

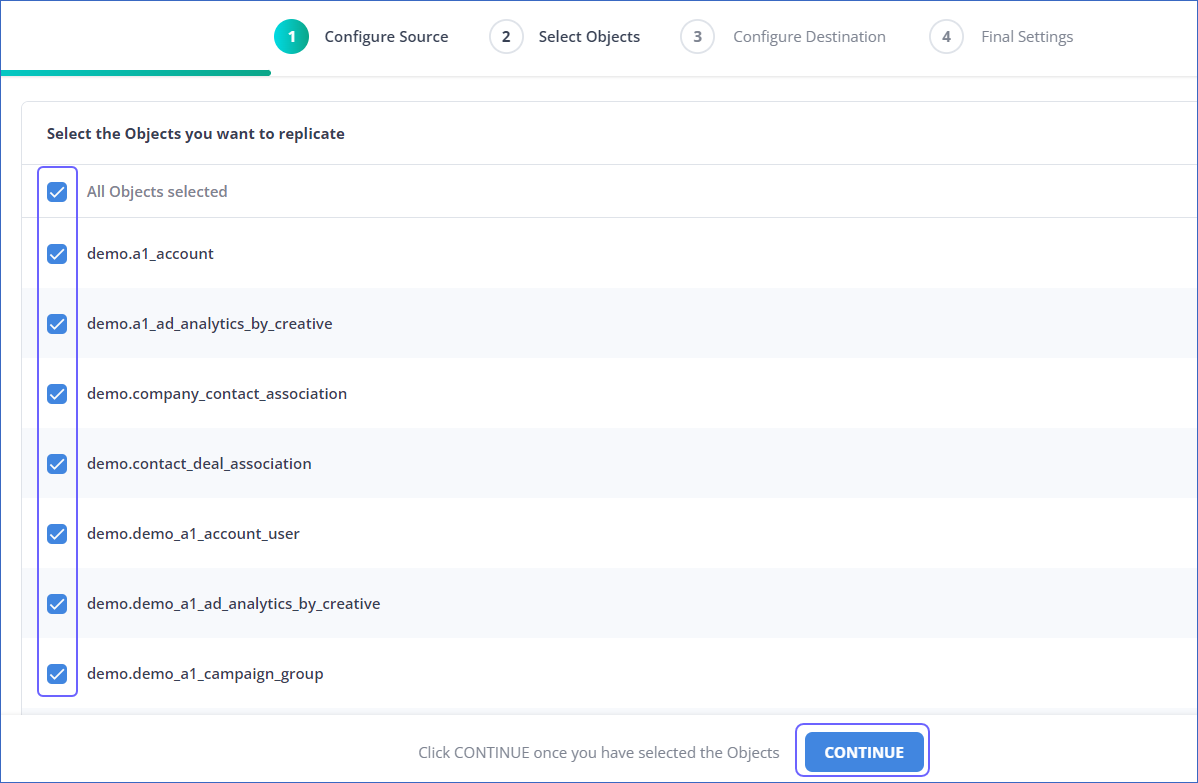

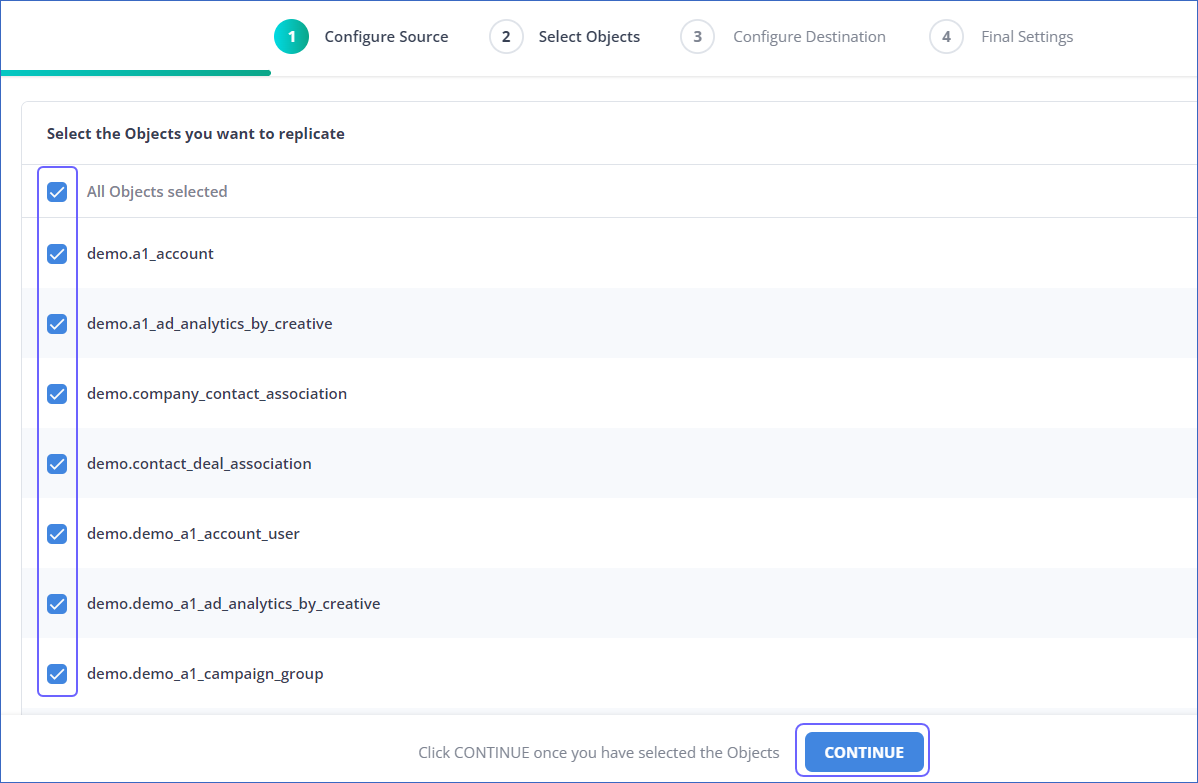

For Pipelines configured with the Table or Logical Replication mode:

-

In the Select Objects page, select the objects you want to replicate and click CONTINUE.

Note:

-

Each object represents a table in your database.

-

From Release 2.19 onwards, for log-based Pipelines, you can keep the objects listed in the Select Objects page deselected by default. In this case, when you skip object selection, all objects are skipped for ingestion, and the Pipeline is created in the Active state. You can include the required objects post-Pipeline creation. Contact Hevo Support to enable this option for your team.

-

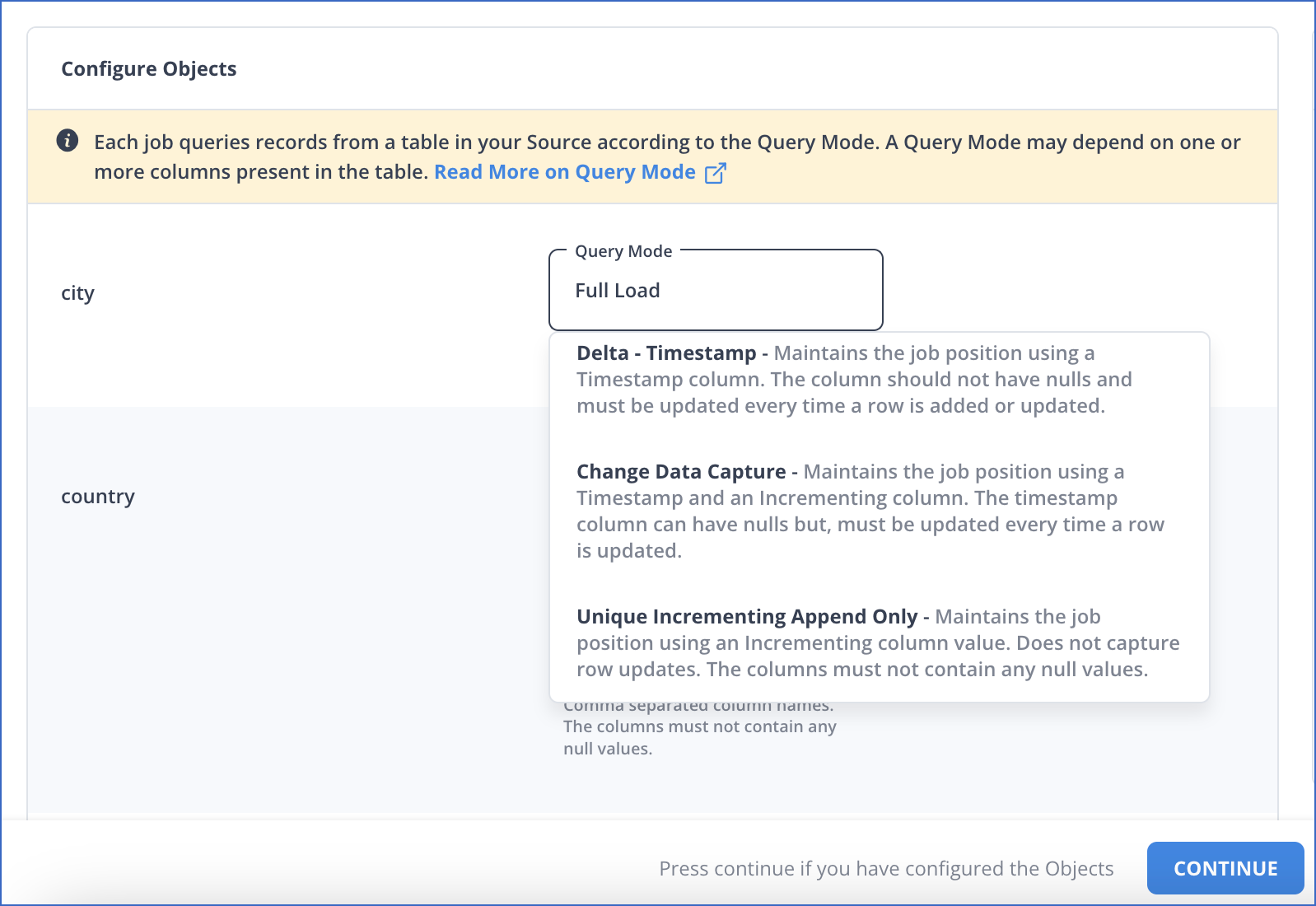

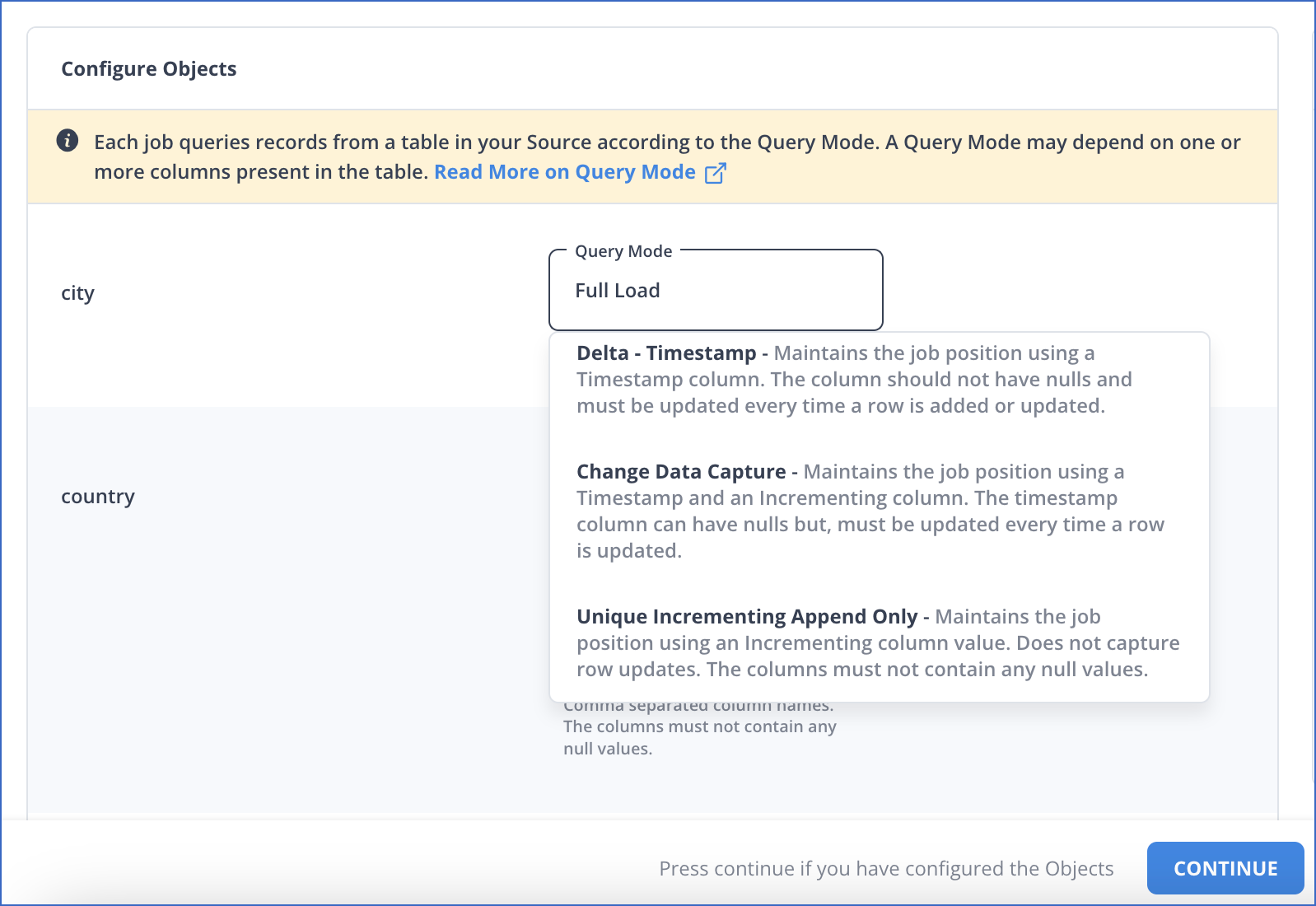

In the Configure Objects page, specify the query mode you want to use for each selected object.

Note: In Full Load mode, Hevo attempts to replicate the full table in a single run of the Pipeline, with an ingestion limit of 25 Million rows.

-

For Pipelines configured with the XMIN mode:

-

In the Select Objects page, select the objects you want to replicate.

Note:

-

Each object represents a table in your database.

-

For the selected objects, only new and updated records are ingested using the XMIN column.

-

The Edit Config option is unavailable for the objects selected for XMIN-based ingestion. You cannot change the ingestion mode for these objects post-Pipeline creation.

-

Click CONTINUE.

-

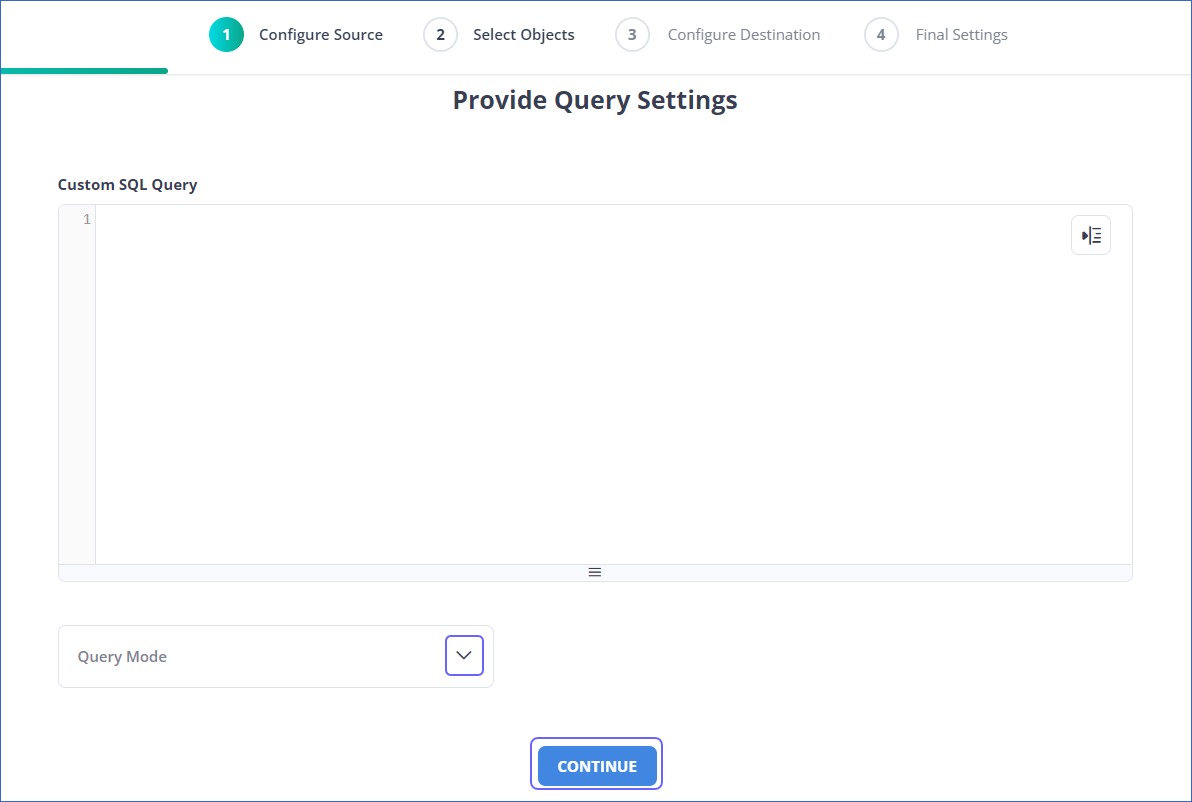

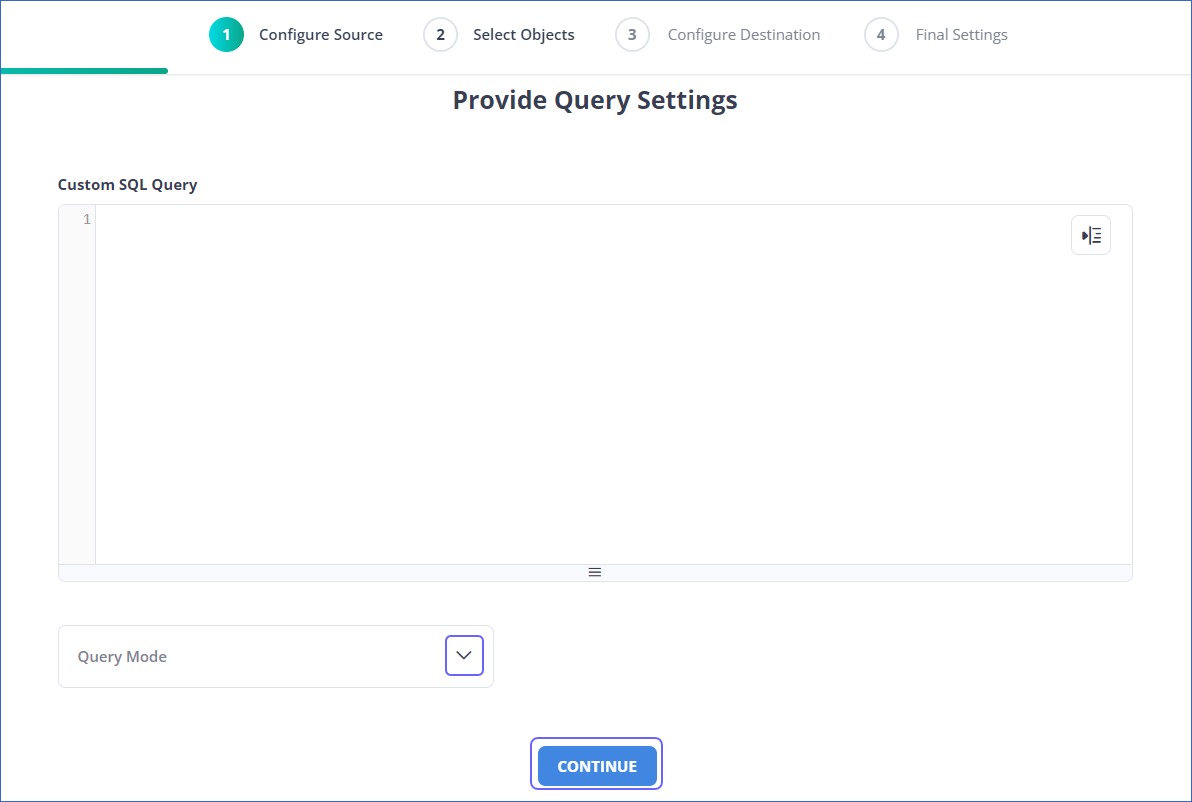

For Pipelines configured with the Custom SQL mode:

-

In the Provide Query Settings page, enter the custom SQL query to fetch data from the Source.

-

In the Query Mode drop-down, select the query mode, and click CONTINUE.

Data Replication

| For Teams Created |

Ingestion Mode |

Default Ingestion Frequency |

Minimum Ingestion Frequency |

Maximum Ingestion Frequency |

Custom Frequency Range (in Hrs) |

| Before Release 2.21 |

Table |

15 Mins |

15 Mins |

24 Hrs |

1-24 |

| |

Log-based |

5 Mins |

5 Mins |

1 Hr |

NA |

| After Release 2.21 |

Table |

6 Hrs |

30 Mins |

24 Hrs |

1-24 |

| |

Log-based |

30 Mins |

30 Mins |

12 Hrs |

1-24 |

Note: The custom frequency must be set in hours as an integer value. For example, 1, 2, or 3 but not 1.5 or 1.75.

-

Historical Data: In the first run of the Pipeline, Hevo ingests all available data for the selected objects from your Source database.

-

Incremental Data: Once the historical load is complete, data is ingested as per the ingestion frequency.

Read the detailed Hevo documentation for the following related topics:

Source Considerations

-

If you add a column with a default value to a table in PostgreSQL, entries with it are created in the WAL only for the rows that are added or updated after the column is added. As a result, in the case of log-based Pipelines, Hevo cannot capture the column value for the unchanged rows. To capture those values, you need to:

-

PostgreSQL versions 15.7 and below do not support logical replication on read replicas. This feature is available starting from version 16.

-

When you delete a row in the Source table, its XMIN value is deleted as well. As a result, for Pipelines created with the XMIN ingestion mode, Hevo cannot track deletes in the Source object(s). To capture deletes, you need to restart the historical load for the respective object.

-

XMIN is a system-generated column in PostgreSQL, and it cannot be indexed. Hence, to identify the updated rows in Pipelines created with the XMIN ingestion mode, Hevo scans the entire table. This action may lead to slower data ingestion and increased processing overheads on your PostgreSQL database host. Due to this, Hevo recommends that you create the Pipeline in the Logical Replication mode.

Note: The XMIN limitations specified above are applicable only to Pipelines created using the XMIN ingestion mode, which is currently available for Early Access.

- PostgreSQL retains Write-Ahead Logs (WALs) until all replication clients, such as Hevo or other consumers, have acknowledged the receipt of relevant changes. In other words, PostgreSQL waits for each client to catch up to a certain point in the WAL stream before it can safely discard older logs. As a result, the WAL size may grow even though Hevo consistently ingests new changes without lag and has no backlog. This growth can be due to long-running transactions, inactive or lagging replicas, or delays in PostgreSQL’s internal cleanup. If the WAL size exceeds the retention threshold, PostgreSQL may drop the replication slot, causing Pipeline failure. To prevent this, monitor long-running transactions and ensure other replication clients are progressing as expected. Read Viewing Statistics in PostgreSQL.

Limitations

-

The data type Array in the Source is automatically mapped to Varchar at the Destination. No other mapping is currently supported.

-

Hevo does not support data replication from foreign tables, temporary tables, and views.

-

If your Source data has indexes (indices) and constraints, you must recreate them in your Destination table, as Hevo does not replicate them from the Source. It only creates the existing primary keys.

-

Hevo does not set the __hevo_marked_deleted field to True for data deleted from the Source table using the TRUNCATE command. This could result in a data mismatch between the Source and Destination tables.

-

Hevo supports only RSA keys for establishing SSL connections. Hence, you must ensure that your SSL certificates and keys are generated using the RSA encryption algorithm.

-

Hevo does not load data from a column into the Destination table if its size exceeds 16 MB, and skips the Event if it exceeds 40 MB. If the Event contains a column larger than 16 MB, Hevo attempts to load the Event after dropping that column’s data. However, if the Event size still exceeds 40 MB, then the Event is also dropped. As a result, you may see discrepancies between your Source and Destination data. To avoid such a scenario, ensure that each Event contains less than 40 MB of data.

-

For log-based Pipelines, you may see duplicate data in your Destination tables for interleaved transactions. This occurs because Hevo may replicate data from the WAL multiple times for the nested transactions.

See Also

Revision History

Refer to the following table for the list of key updates made to this page:

| Date |

Release |

Description of Change |

| Jul-08-2025 |

NA |

Updated section, Prerequisites to add information about the maximum supported version of PostgreSQL. |

| Jul-07-2025 |

NA |

Updated the Limitations section to inform about the max record and column size in an Event. |

| Jun-23-2025 |

NA |

Updated section, Source Considerations to add information about WAL size growth issue in PostgreSQL. |

| Jun-02-2025 |

NA |

Added a limitation about duplicate data for interleaved transactions. |

| Jan-07-2025 |

NA |

Updated the Limitations section to add information on Event size. |

| Sep-30-2024 |

NA |

Updated sections, Prerequisites and Source Considerations to add information about the logical replication support for read replicas. |

| Jun-27-2024 |

NA |

Updated section, Limitations to add information about Hevo supporting only RSA-based keys. |

| May-30-2024 |

NA |

Updated section, Create a Replication User and Grant Privileges to add all the necessary permissions. |

| Apr-29-2024 |

NA |

Updated section, Specify Google Cloud PostgreSQL Connection Settings to include more detailed steps. |

| Mar-18-2024 |

2.21.2 |

Updated section, Specify Google Cloud PostgreSQL Connection Settings to add information about the Load all CA certificates option. |

| Mar-05-2024 |

2.21 |

Added the Data Replication section. |

| Feb-05-2024 |

NA |

Updated sections, Specify Google Cloud PostgreSQL Connection Settings and Object and Query Mode Settings to add information about the XMIN ingestion mode. |

| Jan-22-2024 |

2.19.2 |

Updated section, Object and Query Mode Settings to add a note about the enhanced object selection flow available for log-based Pipelines. |

| Jan-10-2024 |

NA |

- Updated section, Source Considerations to add information about limitations of XMIN query mode.

- Removed mentions of XMIN as a query mode. |

| Nov-03-2023 |

NA |

Renamed section, Object Settings to Object and Query Mode Settings. |

| Oct-03-2023 |

NA |

Updated sections:

- Set up Log-based Incremental Replication and Whitelist Hevo’s IP Addresses to reflect the changed Google Cloud SQL UI,

- Specify Google Cloud PostgreSQL Connection Settings to describe the schema name displayed in Table and Custom SQL ingestion modes,

- Source Considerations to add information about logical replication not supported on read replicas, and

- Limitations to add limitations about data replicated by Hevo. |

| Sep-19-2023 |

NA |

Updated section, Limitations to add information about Hevo not supporting data replication from certain tables. |

| Jun-26-2023 |

NA |

Added section, Source Considerations. |

| Apr-21-2023 |

NA |

Updated section, Specify Google Cloud PostgreSQL Connection Settings to add a note to inform users that all loaded Events are billable for Custom SQL mode-based Pipelines. |

| Mar-09-2023 |

2.09 |

Updated section, Specify Google Cloud PostgreSQL Connection Settings to mention about SEE MORE in the Select an Ingestion Mode section. |

| Dec-19-2022 |

2.04 |

Updated section, Specify Google Cloud PostgreSQL Connection Settings to add information that you must specify all fields to create a Pipeline. |

| Dec-07-2022 |

2.03 |

Updated section, Specify Google Cloud PostgreSQL Connection Settings to mention about including skipped objects post-Pipeline creation. |

| Dec-07-2022 |

2.03 |

Updated section, Specify Google Cloud PostgreSQL Connection Settings to mention about the connectivity checker. |

| Jul-04-2022 |

NA |

- Added sections, Specify Google Cloud PostgreSQL Connection Settings and Object Settings. |

| Mar-07-2022 |

1.83 |

Updated the section, Prerequisites with a note about the logical replication. |

| Jan-24-2022 |

1.80 |

Removed from Limitations that Hevo does not support UUID datatype as primary key. |

| Dec-20-2021 |

1.78 |

Updated section, Set up Log-based Incremental Replication. |

| Sep-09-2021 |

1.71 |

- Updated the section, Limitations to include information about columns with the UUID data type not being supported as a primary key.

- Added WAL replication mode in the Prerequisites section.

- Replaced the section Grant Privileges to the User with Create a Replication User and Grant Privileges. |

| Jun-14-2021 |

1.65 |

Updated the Grant Privileges to the User section to include latest commands. |