Managing Objects in Pipelines


Objects represent the entity that Hevo ingests from your Source system. Depending on the type of the Source, the entity can be a table, collection, report, or Event Type.

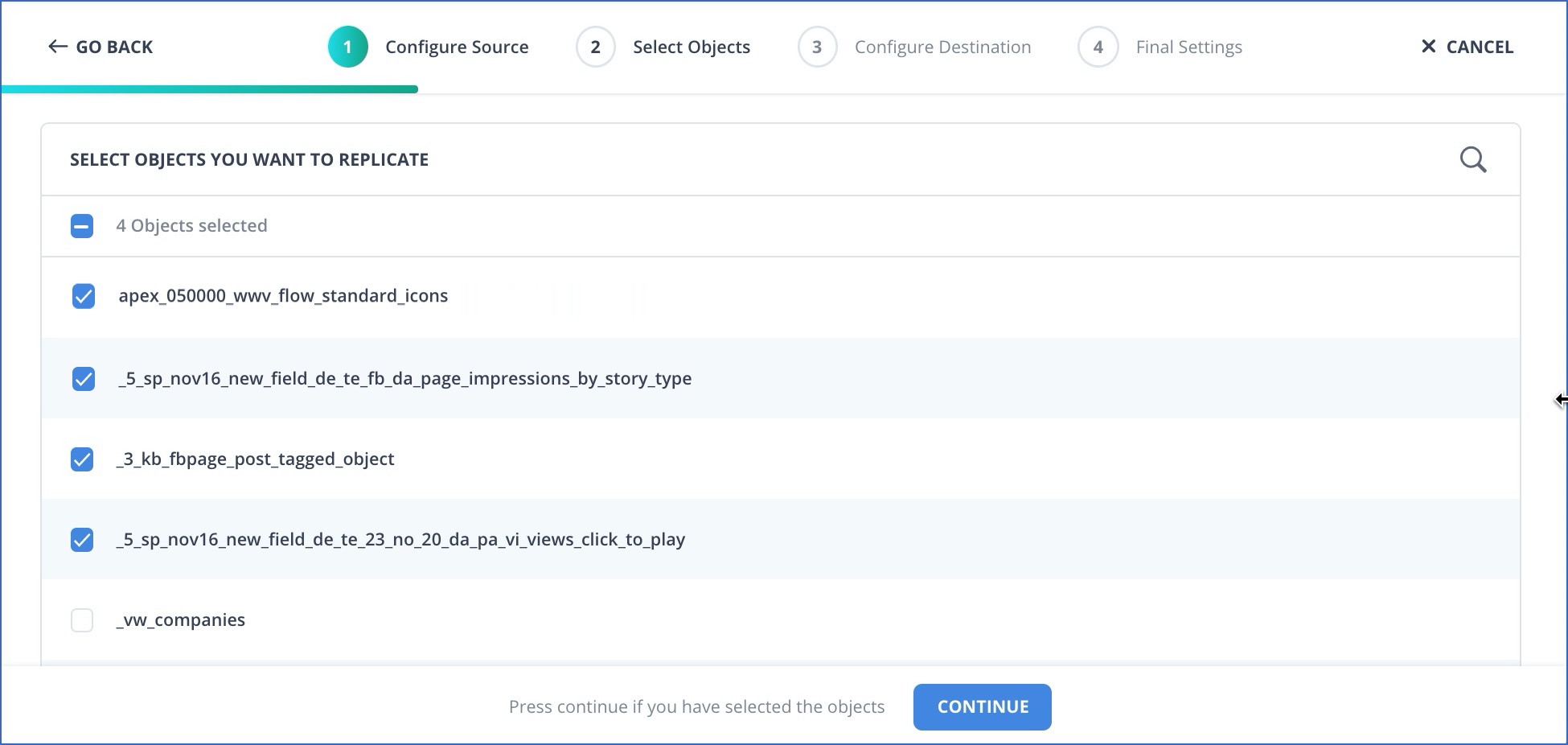

Once you complete the Source configuration while creating the Pipeline, the Select Objects page displays the objects in your Source. Only parent-level objects are displayed for selection. For example, if your Source contains the objects Order and Order_items, only Order is available for selection, because Hevo identifies Order_items as a child of Order. In the case of a Google Sheets Source, each tab of a worksheet is listed and can be selected individually, and each selected tab is ingested as a separate object.

By default, all objects are selected for ingestion. At this stage, you can deselect the objects from which you do not want to ingest data; these objects are marked as SKIPPED in the Pipeline. You can also change your selection after the Pipeline is created. If you skip an object post-Pipeline creation, any Events already ingested from it are loaded to the Destination, and the object is then marked as SKIPPED.

Note: Currently, each Pipeline supports a maximum of 25,000 objects. If you need to increase the object limit for your Pipeline, contact Hevo Support.

If the object listing page does not load properly and you choose to skip object selection, the Pipeline is created with all objects included for ingestion. However, from Release 2.19 onwards, for log-based Pipelines created with any variant of PostgreSQL or Oracle, Hevo can keep the objects on the Select Objects page deselected by default. Contact Hevo Support to enable this option for your team. With this option enabled, if you skip object selection, all objects are skipped for ingestion and the Pipeline is created in the Active state. You can then include the required objects post-Pipeline creation.
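The selection defaults described above can be summarized as a small decision function. This is a minimal sketch, not a Hevo API: `PipelineSetup`, `included_objects`, `DESELECT_ELIGIBLE_FAMILIES`, and the `source_family` labels are hypothetical names used only to model the documented behavior. It also assumes that skipping object selection keeps whatever the Select Objects page defaulted to.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence

# Hypothetical: Source families for which the "deselected by default" option applies.
DESELECT_ELIGIBLE_FAMILIES = {"postgresql", "oracle"}


@dataclass
class PipelineSetup:
    source_family: str             # hypothetical label, e.g. "postgresql" for any PostgreSQL variant
    log_based: bool                # True if the Pipeline uses log-based ingestion
    deselect_option_enabled: bool  # True if Hevo Support enabled the option for the team


def included_objects(
    all_objects: Sequence[str],
    setup: PipelineSetup,
    user_selection: Optional[Sequence[str]] = None,
) -> List[str]:
    """Return the objects included for ingestion when the Pipeline is created.

    user_selection is None when object selection is skipped.
    """
    if user_selection is not None:
        chosen = set(user_selection)
        # Objects left out of the selection would be marked as SKIPPED.
        return [obj for obj in all_objects if obj in chosen]

    starts_deselected = (
        setup.deselect_option_enabled
        and setup.log_based
        and setup.source_family in DESELECT_ELIGIBLE_FAMILIES
    )
    # Skipping selection keeps the page defaults: nothing selected, or everything.
    return [] if starts_deselected else list(all_objects)


objects = ["public.orders", "public.customers"]
print(included_objects(objects, PipelineSetup("postgresql", True, True)))  # []
print(included_objects(objects, PipelineSetup("mysql", True, True)))  # ['public.orders', 'public.customers']
```

In this model, the option changes only what happens when selection is skipped; an explicit selection always wins.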

For RDBMS Source objects in Pipelines created with the Log-based or Table ingestion mode, Hevo tries to identify the optimal query mode for ingesting the data. If it cannot do so for an object, you must configure the query mode for that object yourself. In that case, you are directed to the Configure Objects page, which displays the objects pending query mode identification. Here, you can set the query mode and select the required column for it. If you skip this step, those objects are queried in Full Load mode.
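The query mode fallback can be sketched as follows. This is an illustrative model only, not Hevo's implementation. `resolve_query_modes` is a hypothetical function, and the `QueryMode` values other than Full Load are assumed mode names. Column selection is omitted for brevity.

```python
from enum import Enum
from typing import Dict, Optional


class QueryMode(Enum):
    FULL_LOAD = "Full Load"
    # Assumed mode names, included only for illustration.
    DELTA_TIMESTAMP = "Delta-Timestamp"
    CHANGE_DATA_CAPTURE = "Change Data Capture"


def resolve_query_modes(
    detected: Dict[str, Optional[QueryMode]],
    user_configured: Dict[str, QueryMode],
) -> Dict[str, QueryMode]:
    """Return the effective query mode for each object.

    detected: the mode identified for each object, or None if none was found.
    user_configured: modes set on the Configure Objects page.
    """
    resolved: Dict[str, QueryMode] = {}
    for obj, mode in detected.items():
        if mode is not None:
            resolved[obj] = mode  # optimal mode identified automatically
        elif obj in user_configured:
            resolved[obj] = user_configured[obj]  # configured by the user
        else:
            resolved[obj] = QueryMode.FULL_LOAD  # configuration skipped: Full Load
    return resolved


modes = resolve_query_modes(
    {"orders": QueryMode.CHANGE_DATA_CAPTURE, "audit_log": None, "events": None},
    {"audit_log": QueryMode.DELTA_TIMESTAMP},
)
print({obj: mode.value for obj, mode in modes.items()})
# {'orders': 'Change Data Capture', 'audit_log': 'Delta-Timestamp', 'events': 'Full Load'}
```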

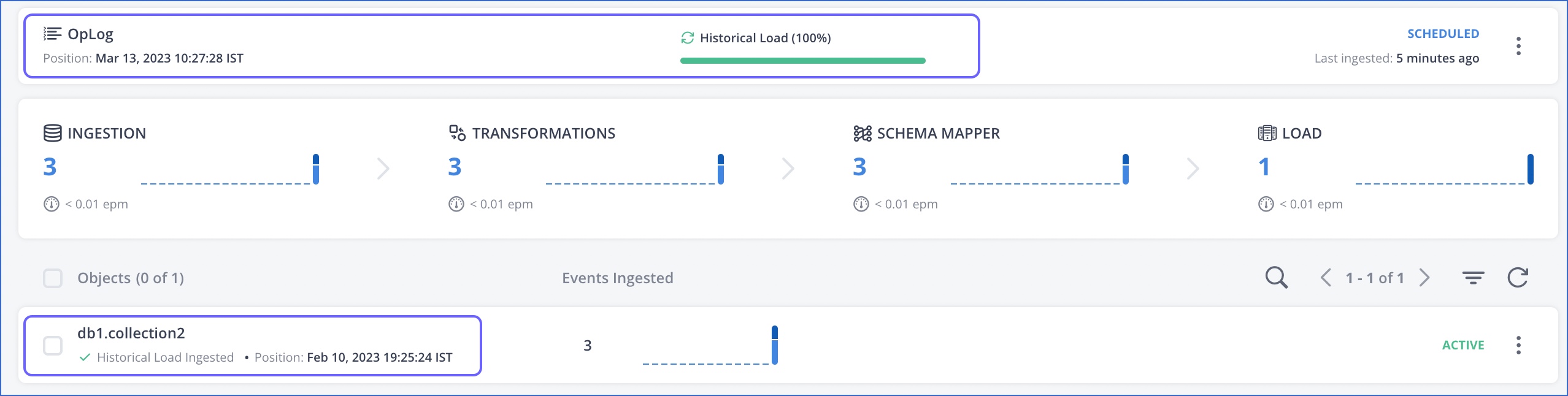

You can see the objects that you selected in the Objects section in the Pipeline Detailed View. The information displayed for each object is based on the ingestion mode and the Source configuration you specified while creating the Pipeline. For example, the following image shows the object list for a Source where the historical data ingestion option was selected for some objects and some objects were skipped.

Note: Objects are not listed for Sources that rely on webhooks to receive data.

The following information is typically provided for a Pipeline object:

![]()

- The name of the object

- The number of Events ingested

- A trend-line graph showing the activity in the last 24 hours

- Hover text on the trend-line graph that displays the billable and historical Events count

- The object ingestion status, such as Active, Paused, or Skipped

- The time when the Events for this object were last ingested

- The More icon, which opens the Actions menu for the object

Pipelines created with database Sources may display additional details, such as the log position up to which data has been ingested (for log-based Pipelines) and the historical load status (if the Load Historical Data option is selected), as shown in the image below.

In the image above, the OpLog information indicates the record position up to which incremental data has been ingested from a MongoDB Source. The historical data ingestion status is displayed both for the Pipeline as a whole and for each object.

Click the More icon to open the Actions menu, which lists the actions available for an object. You can use these actions to control the data that you ingest for each object. For more information, read SaaS Objects and Actions and Database Objects and Actions.


Revision History

Refer to the following table for the list of key updates made to this page:

| Date | Release | Description of Change |
|---|---|---|
| Mar-10-2025 | NA | Updated the page to add information about the object limit in Pipelines. |
| Apr-18-2024 | NA | Added information about ingested Events being loaded to the Destination for objects skipped post-Pipeline creation. |
| Jan-22-2024 | 2.19.2 | Updated the page to add information about the enhanced object selection flow available for PostgreSQL and Oracle Sources. |
| Apr-07-2023 | NA | Reorganized the content around Pipeline objects for clarity. |
| Dec-07-2022 | 2.03 | Added section, Including Skipped Objects Post-Pipeline Creation; renamed section, Post-Pipeline Query Mode Optimization, to Optimizing Query Mode Post-Pipeline Creation. |
| Oct-17-2022 | 1.99 | Updated the page contents to reflect the latest UI changes in the Pipeline Overview page. |