Google Cloud Storage (GCS)

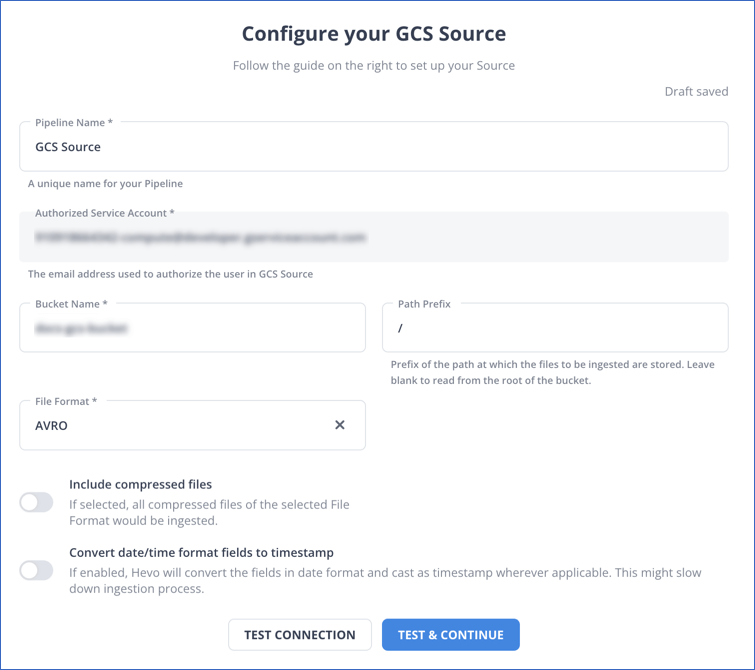

Google Cloud Storage (GCS) is a fast, cost-effective, and secure storage platform for storing unstructured data (objects) of any file format in buckets. You can use this data in multiple processes, such as creating a BigQuery data warehouse and running analytical processes. Hevo supports replication of data from your GCS bucket in the following file formats: AVRO, CSV, JSON, and XML.

As of Release 1.66, Hevo adds the __hevo_source_modified_at field to the Destination as a metadata field. For existing Pipelines that have this field:

- If this field is displayed in the Schema Mapper, you must ignore it and not try to map it to a Destination table column; otherwise, the Pipeline displays an error.

- Hevo automatically loads the information for the __hevo_source_modified_at column, which is already present in the Destination table.

You can, however, continue to use __hevo_source_modified_at to create transformations using the function event.getSourceModifiedAt(). Read Metadata Column __hevo_source_modified_at.
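The following is a minimal sketch of such a transformation, not Hevo's reference implementation. Only event.getSourceModifiedAt() is taken from this page. The getProperties() accessor, the StubEvent class, and the file_modified_at field name are assumptions made so that the sketch can run locally; in a Pipeline, Hevo supplies the Event object itself.

```python
class StubEvent:
    """Local stand-in (hypothetical) for the Event object passed to transform()."""

    def __init__(self, properties, source_modified_at):
        self._properties = properties
        self._source_modified_at = source_modified_at

    def getProperties(self):
        # Assumed accessor that returns the Event's fields as a dict.
        return self._properties

    def getSourceModifiedAt(self):
        # Function named on this page; the value's type is not specified there.
        return self._source_modified_at


def transform(event):
    properties = event.getProperties()
    # Copy the metadata value into a regular field (hypothetical field name).
    properties["file_modified_at"] = event.getSourceModifiedAt()
    return event
```

Copying the value into a separately named field leaves the __hevo_source_modified_at metadata column itself unmapped, in line with the guidance above.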

Example: Automatic Column Header Creation for CSV Tables

Consider the following data in CSV format, which has no column headers.

CLAY COUNTY,32003,11973623

CLAY COUNTY,32003,46448094

CLAY COUNTY,32003,55206893

CLAY COUNTY,32003,15333743

SUWANNEE COUNTY,32060,85751490

SUWANNEE COUNTY,32062,50972562

ST JOHNS COUNTY,846636,32033,

NASSAU COUNTY,32025,88310177

NASSAU COUNTY,32041,34865452
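To illustrate what positional header generation means for rows like these, here is a small sketch using Python's standard csv module. It is not Hevo's implementation. The column_ prefix and the helper name are hypothetical placeholders, since this page does not state the names Hevo generates.

```python
import csv
import io

# Three of the sample rows above, including the one with a trailing empty field.
SAMPLE = """CLAY COUNTY,32003,11973623
ST JOHNS COUNTY,846636,32033,
NASSAU COUNTY,32025,88310177
"""


def rows_with_generated_headers(text, prefix="column_"):
    """Name columns by position and return (headers, records)."""
    rows = list(csv.reader(io.StringIO(text)))
    width = max(len(row) for row in rows)
    headers = [f"{prefix}{i}" for i in range(1, width + 1)]
    # Pad shorter rows with None so every record has the same keys.
    records = [dict(zip(headers, row + [None] * (width - len(row)))) for row in rows]
    return headers, records


headers, records = rows_with_generated_headers(SAMPLE)
```

Because the ST JOHNS COUNTY row ends with a delimiter, it parses to four fields, so this sketch produces four headers and pads the three-field rows.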

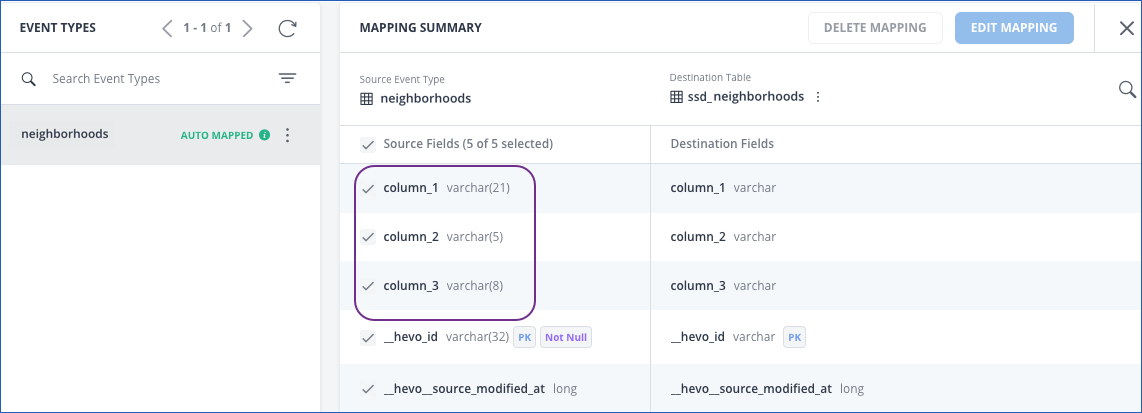

If you disable the Treat first row as column headers option, Hevo auto-generates the column headers, as seen in the schema map below:

The record in the Destination appears as follows:

Source Consideration

- By default, all new GCS buckets have soft delete enabled. However, it does not impact Hevo’s ingestion behavior. This means that if a file is deleted before ingestion begins, Hevo does not fetch it. If a table already exists in the Destination from a previous ingestion but the corresponding file is deleted before the next ingestion, the table is populated with NULL values. Deleting a file after ingestion does not affect the Destination table values, as the data is already processed.

Limitations

- Hevo always treats the character used as a delimiter in CSV files as a field separator, never as data. This is true even if you place an escape character (“\”) before the delimiter.

  For example, suppose the delimiter for your CSV file is a “;”, and the data record in your GCS Source is “A\;B”. Hevo ingests this data as two separate fields, as it does not identify the semicolon as a part of the data.

- Hevo does not load data from a column into the Destination table if its size exceeds 16 MB, and skips the Event if it exceeds 40 MB. If the Event contains a column larger than 16 MB, Hevo attempts to load the Event after dropping that column’s data. However, if the Event size still exceeds 40 MB, then the Event is also dropped. As a result, you may see discrepancies between your Source and Destination data. To avoid such a scenario, ensure that each Event contains less than 40 MB of data.

- Hevo currently supports only the ISO 8859-1 character set. If the file name or content includes unsupported characters, such as emojis or special symbols, the Pipeline may fail. If your file uses a different character set, contact Hevo Support.

- If you initially configure the Source to ingest data from a specific path prefix, such as a folder or directory path, and later update it to a different prefix, Hevo treats the new prefix as a separate data root. This triggers a new historical load for all files under the updated path, even if those files were previously ingested through the earlier configuration.

  To avoid duplicate ingestion, you can skip the historical load and configure the child folders to resume from a specific point using the Change Position option. Any data ingested using the Change Position action is billable.
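The first three limitations above can be checked before a CSV file is uploaded to the bucket. The following is a rough pre-flight sketch, not a Hevo tool. It assumes that each line of the file becomes one Event and that sizes are measured as the UTF-8 byte length of the raw text. Neither assumption is confirmed on this page, and the function name and problem codes are hypothetical.

```python
MAX_FIELD_BYTES = 16 * 1024 * 1024  # column size limit stated above
MAX_EVENT_BYTES = 40 * 1024 * 1024  # Event size limit stated above


def preflight(text, delimiter=",", escape="\\",
              max_field=MAX_FIELD_BYTES, max_event=MAX_EVENT_BYTES):
    """Return (line_number, problem_code) tuples for one CSV document."""
    problems = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        # An escaped delimiter is still treated as a field separator.
        if escape + delimiter in line:
            problems.append((line_no, "escaped_delimiter"))
        # Only characters that ISO 8859-1 can represent are supported.
        try:
            line.encode("iso-8859-1")
        except UnicodeEncodeError:
            problems.append((line_no, "not_iso_8859_1"))
        # Size checks; byte counting is an assumption (see the lead-in).
        fields = line.split(delimiter)
        if any(len(field.encode("utf-8")) > max_field for field in fields):
            problems.append((line_no, "field_over_limit"))
        if len(line.encode("utf-8")) > max_event:
            problems.append((line_no, "event_over_limit"))
    return problems
```

For example, calling preflight on the record “A\;B” with delimiter=";" reports an escaped_delimiter problem on line 1. The limits are parameters so that the checks can be exercised with small values.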

Revision History

Refer to the following table for the list of key updates made to this page:

| Date | Release | Description of Change |

|---|---|---|

| Jul-07-2025 | NA | Updated the Limitations section to inform about the max record and column size in an Event. |

| Jun-02-2025 | NA | Updated section, Limitations to add a point about new historical loads when changing the path prefix. |

| May-08-2025 | NA | Updated section, Limitations to add a point about Hevo supporting only ISO-8859-1 encoding format. |

| Mar-20-2025 | NA | Added section, Source Consideration. |

| Jan-07-2025 | NA | Updated the Limitations section to add information on Event size. |

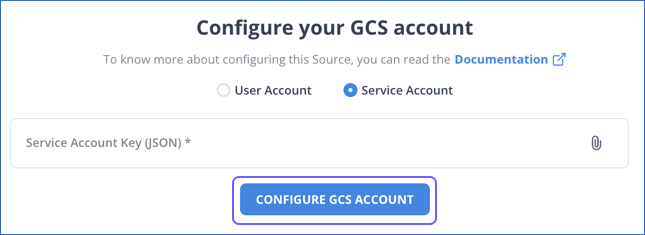

| May-27-2024 | 2.23.4 | Added information about connecting to GCS with service accounts in the Configuring Google Cloud Storage as a Source section. |

| Mar-05-2024 | 2.21 | Updated the ingestion frequency table in the Data Replication section. |

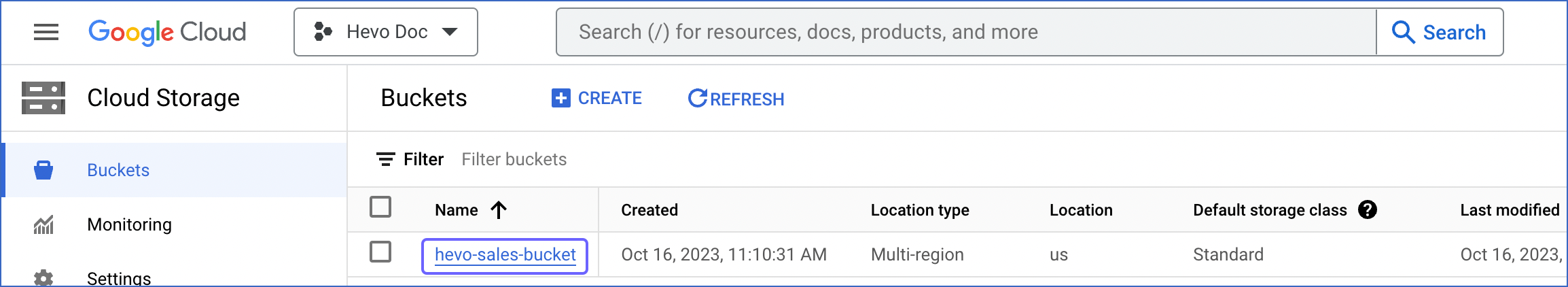

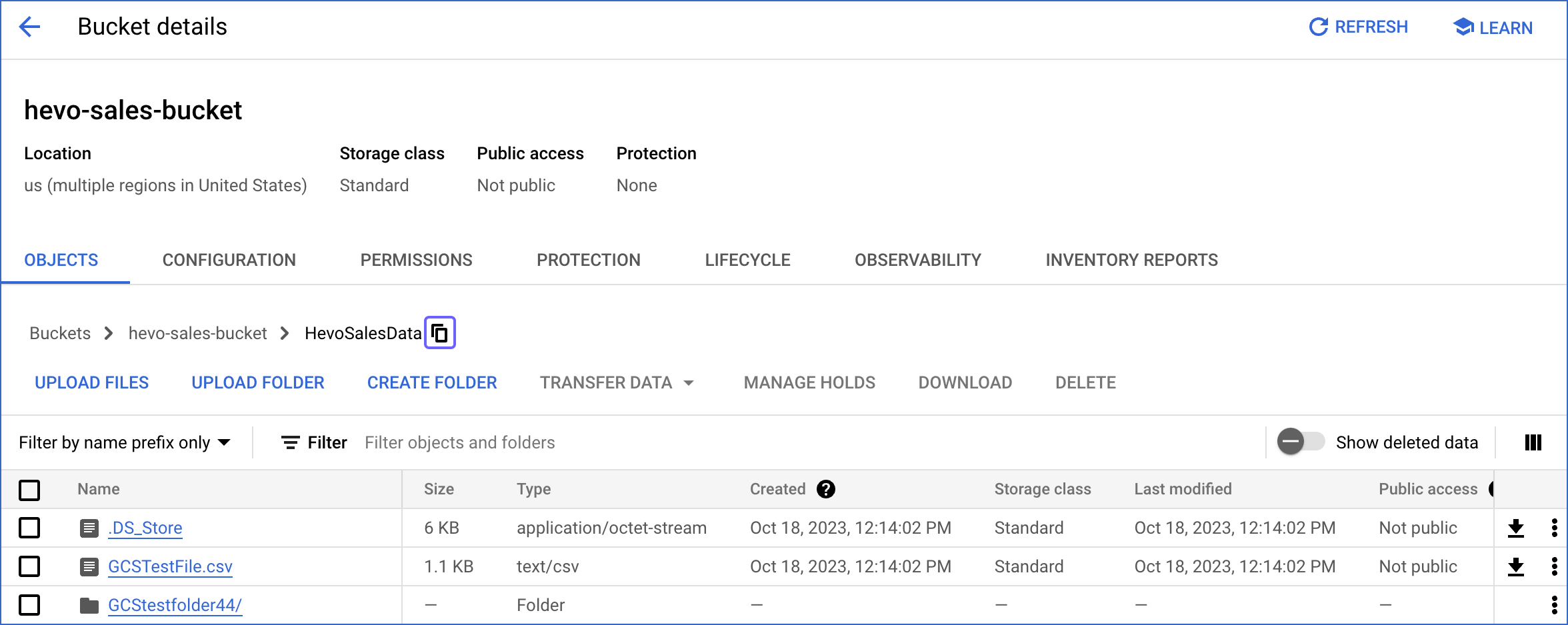

| Oct-30-2023 | NA | Added section, Obtaining the GCS Bucket Name and Folder Path. |

| Jul-25-2023 | NA | Added limitation about Hevo not handling escape characters in CSV files. |

| Jan-10-2023 | NA | Updated the page for consistent information structure. |

| Dec-20-2022 | NA | Added section, Limitations. |

| Nov-08-2022 | NA | Updated section, Configuring Google Cloud Storage as a Source to add information about the Convert date/time format fields to timestamp option. |

| Sep-21-2022 | NA | Added a note in section, Configuring Google Cloud Storage as a Source. |

| Mar-21-2022 | 1.85 | Removed section, Limitations as Hevo now supports UTF-16 encoding format for CSV files. |

| Oct-25-2021 | NA | Added section, Data Replication. |

| Jun-28-2021 | 1.66 | Updated the page overview with information about __hevo_source_modified_at being uploaded as a metadata field from Release 1.66 onwards. |

| Feb-22-2021 | NA | Added the limitation about Hevo not supporting UTF-16 encoding format for CSV data. |