Ordergroove (Edge)

Edge Pipeline is now available for Public Review. You can explore and evaluate its features and share your feedback.

Ordergroove is a subscription management platform that helps businesses manage recurring purchases, subscription lifecycles, and billing for their online stores. It enables merchants to create and manage subscription programs, apply discounts and promotions, and support ongoing customer purchases.

Hevo uses Ordergroove’s REST APIs to replicate data from your account into the Destination of your choice. This allows you to analyze your data using analytics and reporting tools. To ingest data, you must provide Hevo with an API key to authenticate to your Ordergroove account.

Ordergroove Environments

Ordergroove provides separate environments for testing purposes and production use:

- Staging: This environment is used for pre-production testing and validation with data models that closely resemble production. A staging environment is accessed using URLs that include identifiers such as stg or staging. For example, https://rc3.stg.ordergroove.com/.

- Production: This environment is used for live business operations and contains real customer data, which is typically required for reporting and analysis. A production environment is accessed using standard Ordergroove URLs without staging identifiers. For example, https://rc3.ordergroove.com/.

Hevo supports replicating data from both staging and production environments.
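The URL convention described above can be illustrated with a minimal Python sketch that classifies a base URL as staging or production. The function name `detect_environment` and the choice to match whole hostname labels are assumptions made for this example; they are not part of Hevo or Ordergroove.

```python
from urllib.parse import urlparse

# Identifiers that, per the description above, mark a staging URL.
STAGING_IDENTIFIERS = {"stg", "staging"}


def detect_environment(url: str) -> str:
    """Return "Staging" if any hostname label is a staging identifier, else "Production"."""
    hostname = urlparse(url).hostname or ""
    labels = hostname.lower().split(".")
    if any(label in STAGING_IDENTIFIERS for label in labels):
        return "Staging"
    return "Production"


print(detect_environment("https://rc3.stg.ordergroove.com/"))  # Staging
print(detect_environment("https://rc3.ordergroove.com/"))      # Production
```

A check like this can help confirm that the API key you plan to use was created in the same environment that you select while configuring the Source.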

Supported Features

| Feature Name | Supported |
|---|---|
| Capture deletes | Yes |
| Data blocking (skip objects and fields) | Yes |
| Resync (objects and Pipelines) | Yes |
| API configurable | Yes |
| Authorization via API | Yes |

Prerequisites

- An active Ordergroove account exists in the Production or Staging environment from which data is to be ingested.

- An API key with bulk data access permissions is available to provide Hevo access to your Ordergroove account data.

Obtain the API Key

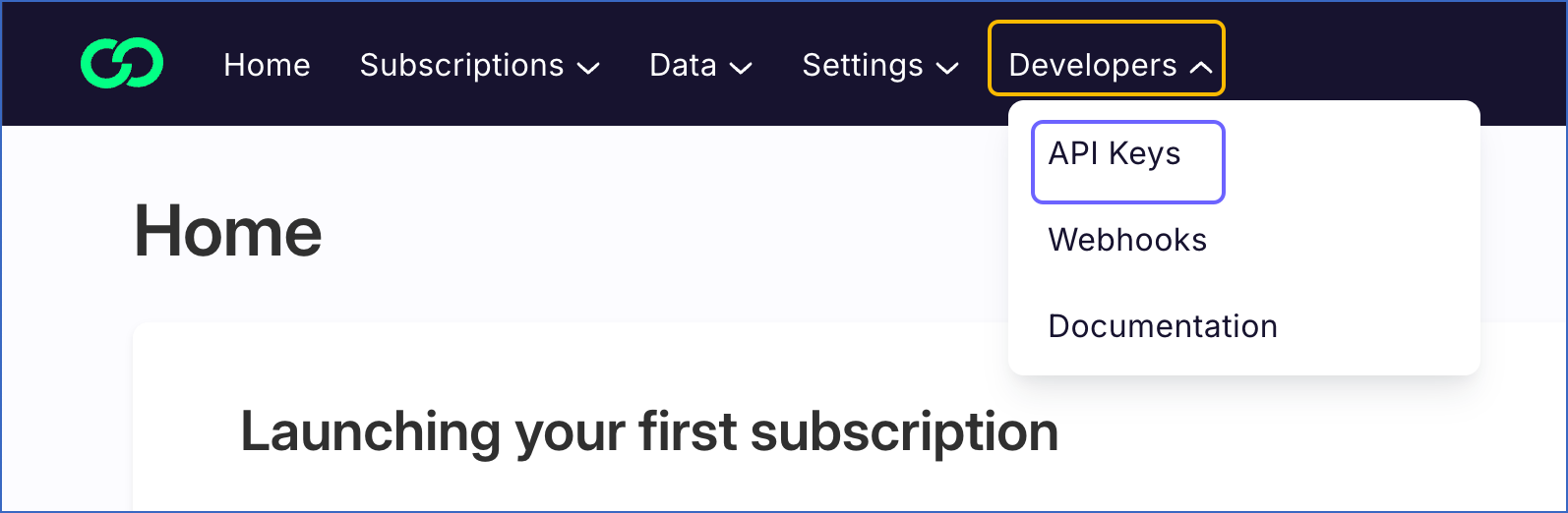

To connect Hevo to your Ordergroove account, you must provide an API key. You can either use an existing API key or create one if required.

If you want to ingest staging data, the API key must be created in the Staging environment. Similarly, to ingest production data, the key must be created in the Production environment. Ordergroove API keys do not expire and can be reused across all your Pipelines.

Perform the following steps to obtain the API key:

-

Log in to your Ordergroove account as a user with permission to create API keys, such as an Account Manager Admin.

-

In the top navigation bar, hover over Developers and click API Keys.

-

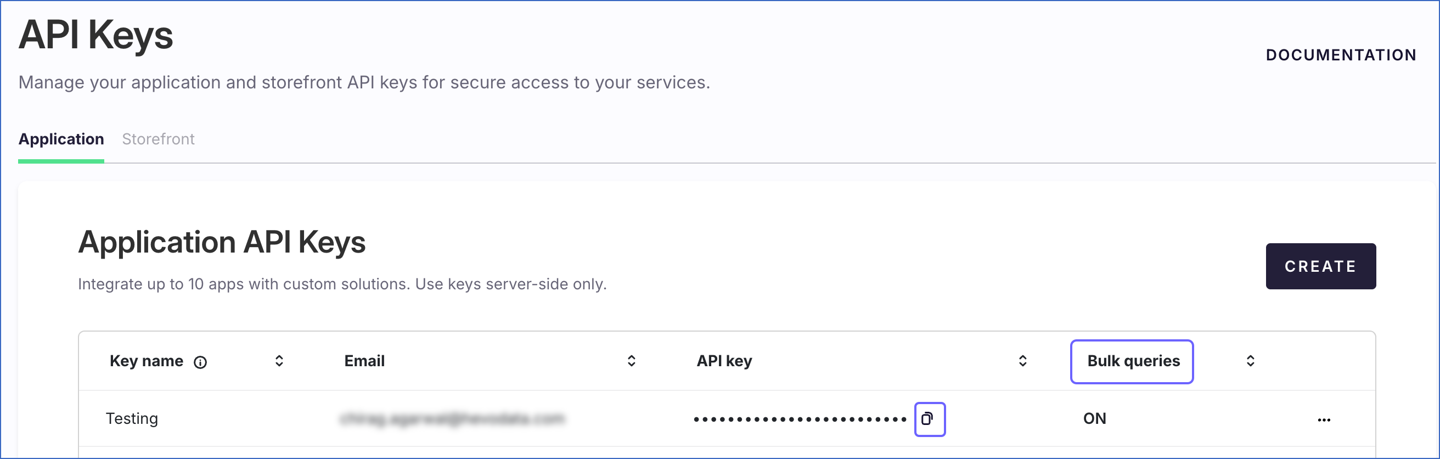

On the API Keys page, do one of the following:

-

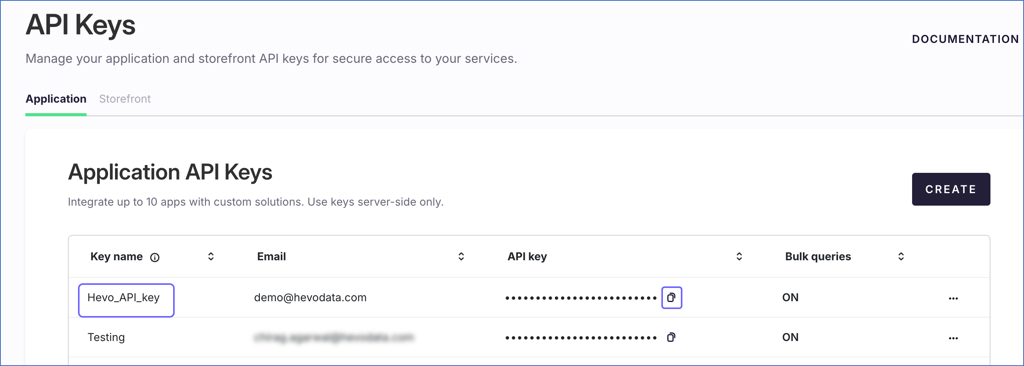

If you have an existing API key with the Bulk queries setting enabled, click the copy (

) icon to copy the key. Use this key while configuring your Ordergroove Source in the Hevo Pipeline.

) icon to copy the key. Use this key while configuring your Ordergroove Source in the Hevo Pipeline.

-

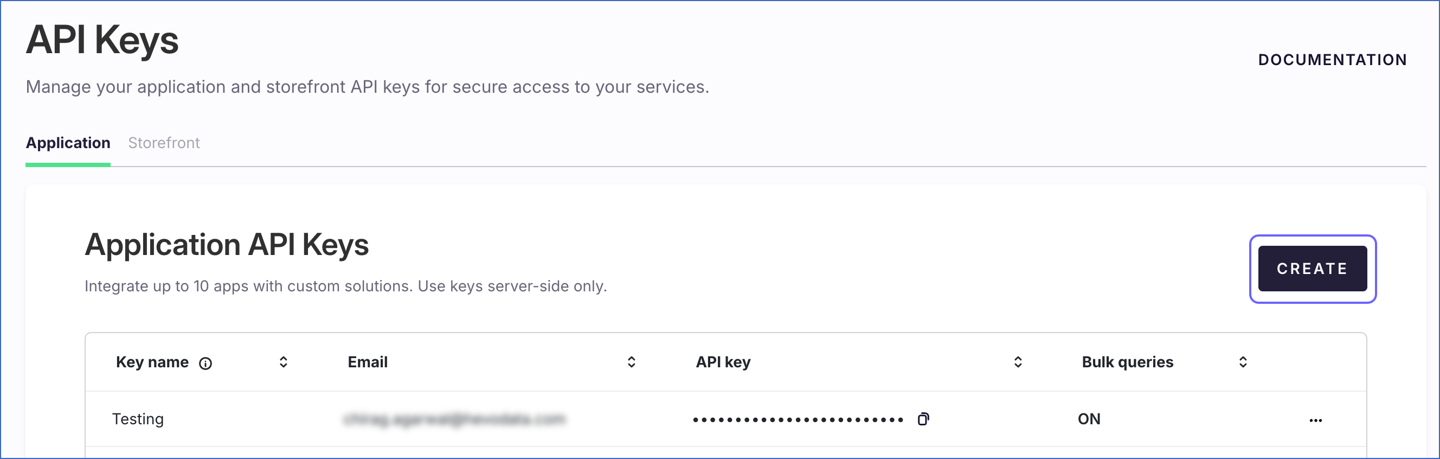

To create an API key, perform the following steps:

Note: You can create up to 10 API keys per account.

-

Click CREATE.

-

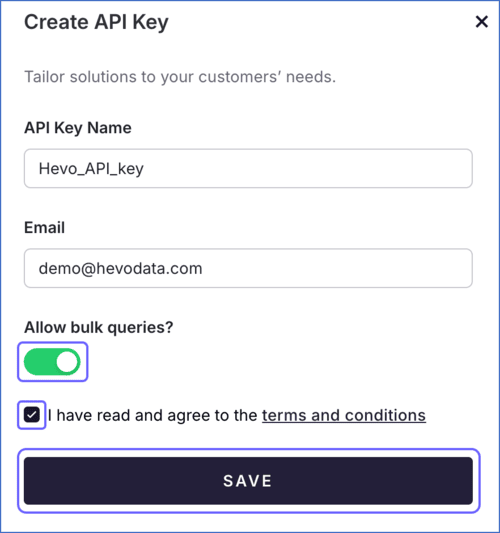

On the Create API Key slide-in page, do the following:

-

Specify the following:

-

API Key Name: A unique name to identify the API key.

-

Email: The email address you want to associate with the API key.

-

-

Enable the Allow bulk queries? setting.

-

Select the acknowledgment check box, and then click SAVE.

-

You can now view the newly created API key on the API Keys page. Click the copy (

) icon to copy the key, and use it while configuring your Ordergroove Source in the Hevo Pipeline.

) icon to copy the key, and use it while configuring your Ordergroove Source in the Hevo Pipeline.

-

-

If the API key configured in the Pipeline is revoked manually from your Ordergroove account, Hevo cannot authenticate with the Source. As a result, all active jobs for the Pipeline fail, and no data is replicated. To resume data replication, modify the Source configuration in the Pipeline with a valid API key. Once the updated key is saved, Hevo re-authenticates the Source, and data ingestion resumes from the last saved offset.

Configure Ordergroove as a Source in your Pipeline

Perform the following steps to configure your Ordergroove Source:

-

Click Pipelines in the Navigation Bar.

-

Click + Create Pipeline in the Pipelines List View.

-

On the Select Source Type page, select Ordergroove.

-

On the Select Destination Type page, select the type of Destination you want to use.

-

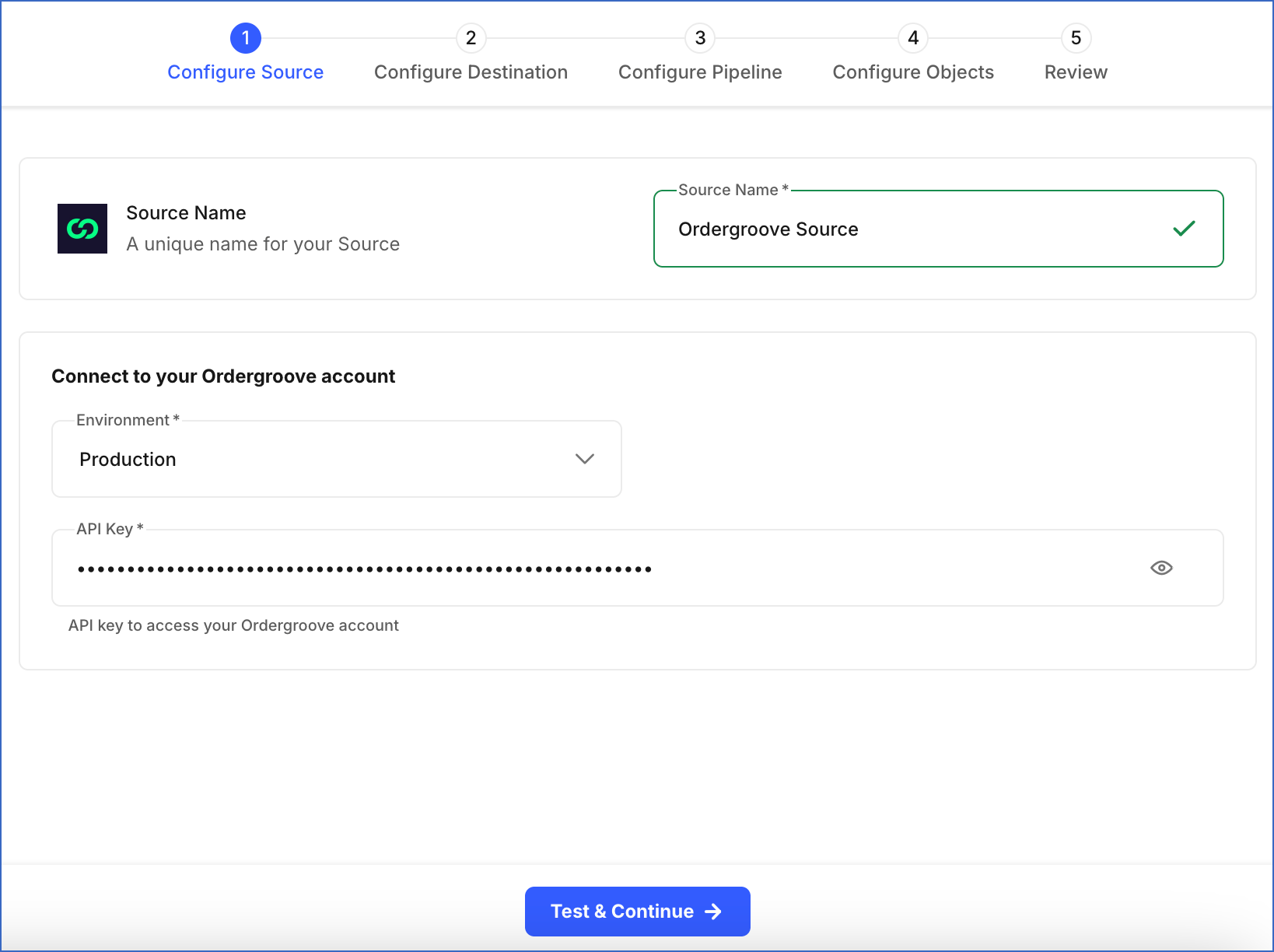

In the Configure Source screen, specify the following:

-

Source Name: A unique name for your Source, not exceeding 255 characters. For example, Ordergroove Source.

-

In the Connect to your Ordergroove account section, from the drop-down, select the Environment from which you want Hevo to ingest data. This can be Production or Staging.

-

API Key: The API key that you obtained from your Ordergroove account.

-

-

Click Test & Continue to test the connection to your Ordergroove Source. Once the test is successful, you can proceed to set up your Destination.

Data Replication

Hevo replicates data for all the objects selected on the Configure Objects page during Pipeline creation. By default, all supported objects and their available fields are selected. However, you can modify this selection while creating or editing the Pipeline.

Selecting a parent object automatically includes all its associated child objects for replication. Child objects cannot be selected or deselected individually.

Hevo ingests the following types of data from your Source objects:

- Historical Data: The first run of the Pipeline ingests all available historical data for the selected objects and loads it into the Destination.

- Incremental Data: Once the historical load is complete, new and updated records for objects are ingested as per the sync frequency.

For the following objects, Hevo ingests only the incremental data in subsequent Pipeline runs. Incremental changes are detected using the timestamp fields listed against each object:

- Orders and Subscription: updated

- Item: order_updated_start_date and order_updated_end_date

For all other objects, Hevo ingests the entire data during each Pipeline run.
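To make the timestamp-based detection described above concrete, here is a minimal Python sketch of cursor-style incremental selection. It is an illustration under stated assumptions, not Hevo's implementation: the function `select_incremental`, the in-memory record list, and the sample values are hypothetical. Only the field name `updated` comes from the list above. The date-window fields used for Item are not modeled here.

```python
from datetime import datetime, timezone


def select_incremental(records, last_offset, cursor_field="updated"):
    """Return records changed after last_offset, plus the new offset to save."""
    changed = [r for r in records if r[cursor_field] > last_offset]
    # If nothing changed, the saved offset stays where it was.
    new_offset = max((r[cursor_field] for r in changed), default=last_offset)
    return changed, new_offset


# Hypothetical sample data standing in for records fetched from the Source.
orders = [
    {"id": "o1", "updated": datetime(2026, 1, 10, tzinfo=timezone.utc)},
    {"id": "o2", "updated": datetime(2026, 1, 18, tzinfo=timezone.utc)},
    {"id": "o3", "updated": datetime(2026, 1, 21, tzinfo=timezone.utc)},
]
last_offset = datetime(2026, 1, 15, tzinfo=timezone.utc)

changed, last_offset = select_incremental(orders, last_offset)
print([r["id"] for r in changed])  # ['o2', 'o3']
print(last_offset.isoformat())     # 2026-01-21T00:00:00+00:00
```

Running the same selection again with the saved offset returns no records, which is why subsequent runs for these objects pick up only new and updated data.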

Ordergroove currently enforces a rate limit of 6000 API requests per IP per minute. If this limit is exceeded, a rate limit exception occurs. To understand how Hevo handles such scenarios, read Handling Rate Limit Exceptions.
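As a rough illustration of what a limit of 6000 requests per minute means for a client, the following Python sketch tracks request timestamps in a sliding one-minute window. It is not how Hevo handles rate limit exceptions; the class `SlidingWindowLimiter`, its methods, and the injectable `clock` parameter are assumptions introduced for this example.

```python
from collections import deque
import time


class SlidingWindowLimiter:
    """Allow at most max_requests calls within any window_seconds span."""

    def __init__(self, max_requests=6000, window_seconds=60.0, clock=time.monotonic):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.clock = clock
        self._sent = deque()  # timestamps of requests inside the current window

    def seconds_until_allowed(self) -> float:
        """Return 0.0 if a request may be sent now, else the wait time in seconds."""
        now = self.clock()
        # Drop timestamps that have left the window.
        while self._sent and now - self._sent[0] >= self.window_seconds:
            self._sent.popleft()
        if len(self._sent) < self.max_requests:
            return 0.0
        return self.window_seconds - (now - self._sent[0])

    def record_request(self) -> None:
        """Record that a request was sent at the current time."""
        self._sent.append(self.clock())


# Demonstration with a small limit and a fake clock so the result is deterministic.
fake_now = [0.0]
limiter = SlidingWindowLimiter(max_requests=3, window_seconds=60.0, clock=lambda: fake_now[0])
for _ in range(3):
    limiter.record_request()
print(limiter.seconds_until_allowed())  # 60.0
fake_now[0] = 60.0
print(limiter.seconds_until_allowed())  # 0.0
```

Because the limit applies per IP, any other tools calling the Ordergroove APIs from the same IP address would share the same budget.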

Note: You can create a Pipeline with this Source only using the Merge load mode. The Append mode is not supported for this Source.

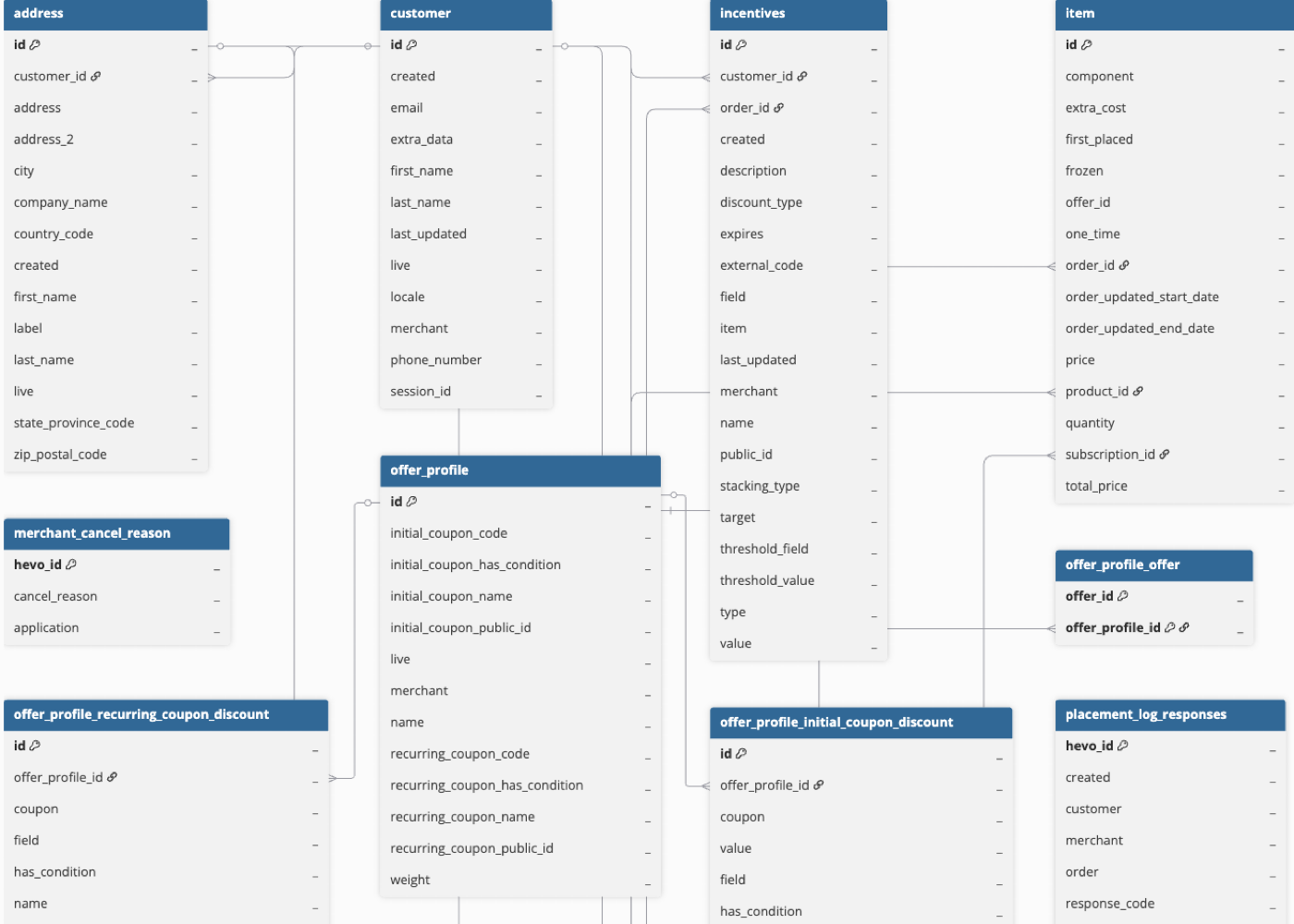

Schema and Primary Keys

Hevo uses the following schema to load the records into the Destination. For a detailed view of the objects, fields, and relationships, click the ERD.

Data Model

The following is the list of tables (objects) that are created at the Destination when you run the Pipeline:

| Object | Description |
|---|---|
| Address | Contains customer address information, such as street, city, state or province, country, and postal code. These addresses are used for delivering orders and subscription-based items. |
| Customer | Contains details of customers, including name, contact information, and account status. Each record represents an individual associated with subscriptions and orders. |
| Incentives | Contains details about discounts and promotional offers applied to customers, subscriptions, or orders. It includes the incentive type, values, conditions, and validity period. |
| Item | Contains details of individual items within an order, including product information, quantity, pricing, and discounts. |
| Merchant Cancel Reason | Contains the list of cancellation reasons defined by the merchant. |
| Offer Profile | Represents how one or more discounts are grouped and applied to subscriptions or orders. This includes information about initial and recurring discounts, along with their conditions and applicability. This object has the following child objects: Offer Profile Initial Coupon Discount, Offer Profile Offer, and Offer Profile Recurring Coupon Discount. |
| Offer Profile Initial Coupon Discount | Contains details of coupon-based discounts applied on the first purchase through an offer profile. This includes coupon information, along with discount values and conditions. |
| Offer Profile Offer | Represents the relationship between an offer profile and the offers associated with it. |
| Offer Profile Recurring Coupon Discount | Contains details of coupon-based discounts applied to recurring purchases through an offer profile. This includes coupon information, along with discount values and conditions. |
| Orders | Contains information about orders, including status, pricing breakdowns, applied discounts, taxes, shipping information, and associated customer details. Each order represents a single purchase transaction and may include one or more items. |
| Payment | Contains payment-related information for a customer, including billing information, payment method, card details, and payment status. These records are used to process and manage payments for subscriptions and orders. |
| Placement Log Responses | Contains responses generated during order placement attempts, including response codes, messages, and associated order and customer details. These records help track and analyze order placement outcomes and errors. |
| Product | Contains details of products available for purchase, including pricing information, availability status, and configuration settings used for subscriptions and orders. This object has the following child objects: Product Group, Product Relationships, Product Selection Rule, and Product Selection Rule Element. |
| Product Group | Represents groups of products categorized together for specific purposes, such as eligibility or incentives. |
| Product Relationships | Contains details of the relationship between two linked products. |
| Product Selection Rule | Contains details of the rules used to select or group products for subscriptions. |
| Product Selection Rule Element | Contains details of individual rule elements that control product selection behavior, along with the date from which the rule applies. |
| Resources | Contains information about resources defined for a merchant, including their name, description, and timestamps indicating when the resource was created or last updated. |
| Subscription | Contains details of customer subscriptions, including subscribed products, pricing, payment and shipping information, and the current subscription status. This object has the following child objects: Subscription Component and Subscription Discount. |
| Subscription Component | Contains details of the products included in a subscription along with the quantity of each product. |
| Subscription Discount | Contains details of discounts applied to a subscription, including the discount name and the subscription ID. |

Configure Webhooks for Capturing Delete Events

For Item and Orders objects, Hevo captures delete events through webhooks. To enable this, you must configure webhooks in your Ordergroove account using the webhook URL generated for your Pipeline. Once configured, whenever records for these objects are deleted, Hevo sets the metadata column __hevo__marked_deleted to true for the corresponding records in the Destination.

Perform the following steps to configure webhooks for capturing delete events:

Obtain the Webhook URL for your Pipeline

Perform the following steps to obtain the webhook URL generated by Hevo for your Pipeline:

-

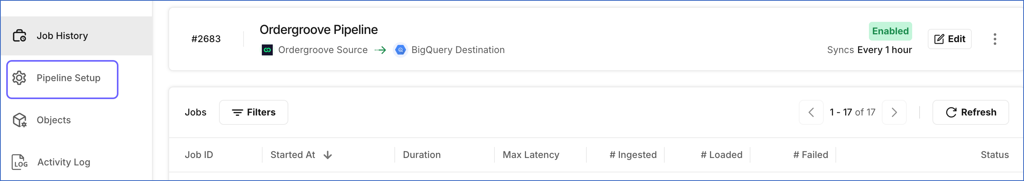

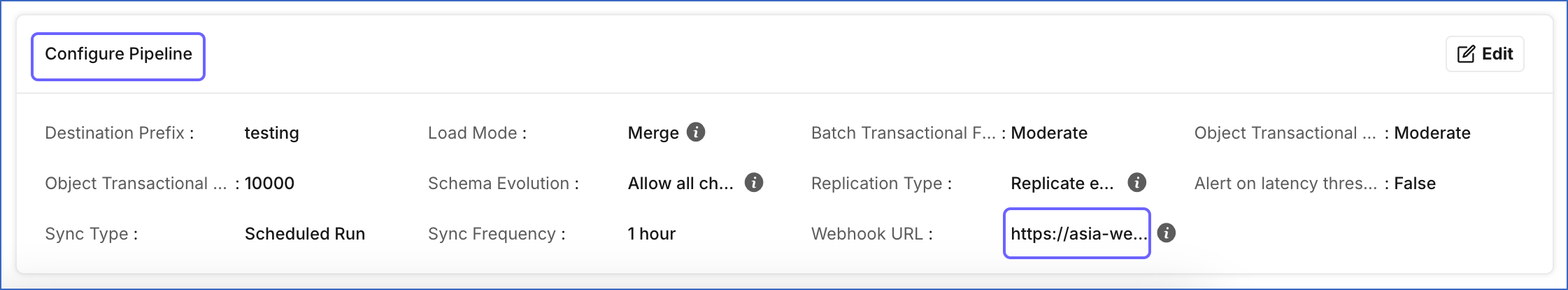

In the Pipeline’s toolbar, click Pipeline Setup.

-

Scroll down to the Configure Pipeline section and copy the URL displayed in the Webhook URL field.

Create a Webhook in your Ordergroove account

Perform the following steps to create a webhook in your Ordergroove account to capture delete events for Item and Orders objects:

-

Log in to your Ordergroove account as a user with permission to create webhooks, such as an Account Manager Admin.

-

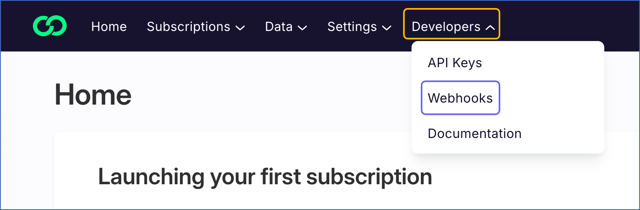

In the top navigation bar, hover over Developers, and then click Webhooks.

-

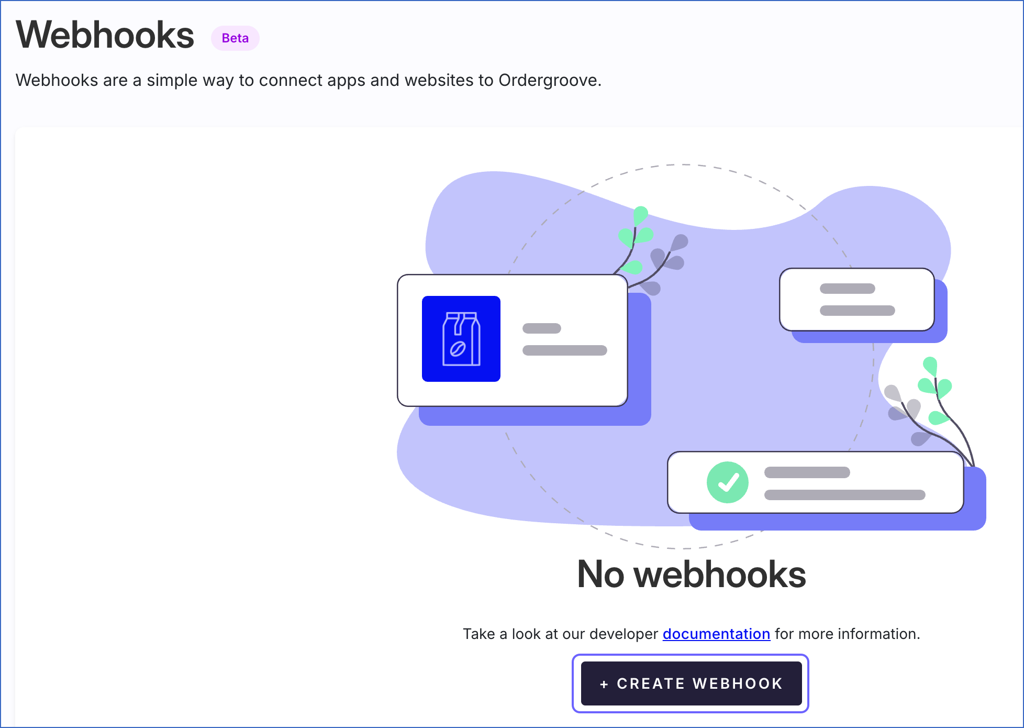

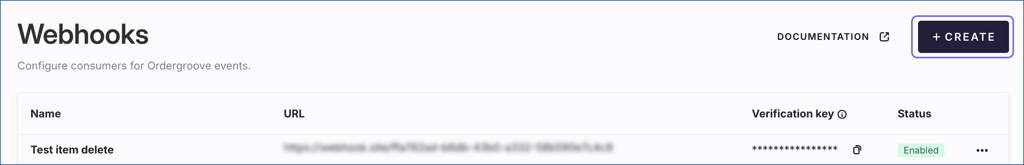

On the Webhooks page, do one of the following:

-

If no webhooks are currently configured in your Ordergroove account, click + CREATE WEBHOOK to create one.

-

If webhooks are already configured, click + CREATE to create one.

-

-

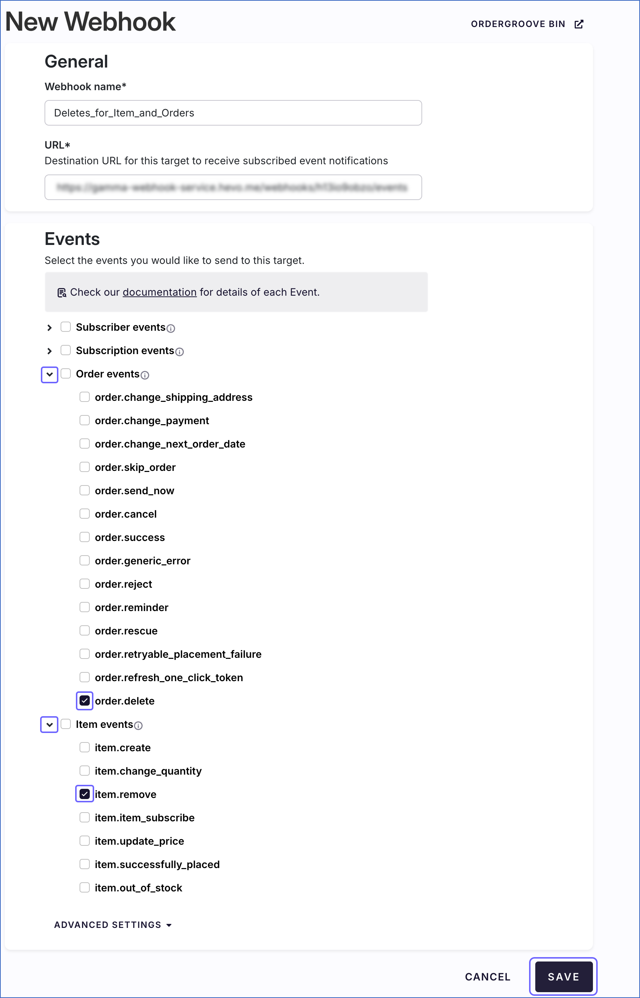

On the New Webhook page, do the following:

-

In the General section, specify the following:

-

Webhook name: A unique name to identify the webhook.

-

URL: The webhook URL that you obtained from your Pipeline.

-

-

In the Events section, do the following:

-

Click the expand icon next to Order events, and select the check box for order.delete event.

-

Click the expand icon next to Item events, and select the check box for item.remove event.

Note: If you select any additional events, Ordergroove may send notifications for those events to the configured webhook URL. However, Hevo ignores these notifications, and they do not affect data ingestion.

-

-

Click SAVE to create the webhook.

-

You can now view the newly created webhook on the Webhooks page.
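The effect of these webhook notifications on the Destination can be sketched in Python as follows. This is a simplified illustration, not Hevo's implementation. The event names order.delete and item.remove and the column `__hevo__marked_deleted` come from this page; the payload keys `type` and `data.public_id`, the in-memory `destination` dictionary, and the function `apply_webhook_event` are hypothetical and do not describe Ordergroove's actual payload format.

```python
# Event names from the webhook configuration above, mapped to hypothetical
# Destination table names.
DELETE_EVENTS = {"order.delete": "orders", "item.remove": "item"}


def apply_webhook_event(destination, payload):
    """Mark the referenced record as deleted; return True if the event was handled."""
    table = DELETE_EVENTS.get(payload.get("type"))
    if table is None:
        return False  # notifications for any other event are ignored
    record = destination[table].get(payload["data"]["public_id"])
    if record is None:
        return False  # record is not in the Destination; nothing to mark
    record["__hevo__marked_deleted"] = True
    return True


# Hypothetical Destination state: table name -> record ID -> row.
destination = {
    "orders": {"ord-1": {"__hevo__marked_deleted": False}},
    "item": {"itm-1": {"__hevo__marked_deleted": False}},
}

print(apply_webhook_event(destination, {"type": "order.delete", "data": {"public_id": "ord-1"}}))      # True
print(apply_webhook_event(destination, {"type": "some.other_event", "data": {"public_id": "ord-1"}}))  # False
print(destination["orders"]["ord-1"]["__hevo__marked_deleted"])                                         # True
```

The second call shows why selecting additional events in Ordergroove does not affect ingestion: notifications for other events are received but not acted upon.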

Handling of Deletes

Ordergroove does not explicitly mark records as deleted in its REST API responses. When a record is deleted, it is no longer returned in subsequent API responses.

Hevo uses a full data refresh approach to capture delete actions for parent objects. During each Pipeline run, Hevo compares the data fetched from the Source object with the data present in the Destination table. If a record exists in the Destination but is no longer returned by the Source, the record is marked as deleted by setting the value of the metadata column __hevo__marked_deleted to true. This applies only to the following objects, for which the complete data is ingested during each sync:

- Address

- Customer

- Incentives

- Merchant Cancel Reason

- Offer Profile

- Payment

- Placement Log Responses

- Product

- Resources
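The comparison described above for these objects can be sketched in Python as a set difference on record keys. This is a minimal illustration under assumptions, not Hevo's implementation: the function `mark_missing_as_deleted`, the key name `id`, and the sample rows are hypothetical. Only the column name `__hevo__marked_deleted` comes from this page.

```python
def mark_missing_as_deleted(source_records, destination_rows, key="id"):
    """Flag destination rows whose key was not returned by the latest full fetch."""
    source_keys = {record[key] for record in source_records}
    newly_deleted = []
    for row in destination_rows:
        if row[key] not in source_keys and not row.get("__hevo__marked_deleted"):
            row["__hevo__marked_deleted"] = True
            newly_deleted.append(row[key])
    return newly_deleted


# Hypothetical data: record 2 was deleted in the Source, so the full fetch
# no longer returns it.
source = [{"id": 1}, {"id": 3}]
destination = [
    {"id": 1, "__hevo__marked_deleted": False},
    {"id": 2, "__hevo__marked_deleted": False},
    {"id": 3, "__hevo__marked_deleted": False},
]
print(mark_missing_as_deleted(source, destination))  # [2]
```

This approach works only when the complete data is fetched in every run, which is why it is limited to the objects listed above.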

For Item and Orders objects, where only new and updated records are ingested after the first Pipeline run, Hevo captures delete events through webhooks. To enable this, you must configure webhooks in your Ordergroove account. Once configured, Hevo receives a notification when records for such objects are deleted and marks them as deleted in the Destination table.

For child objects, during each sync, Hevo removes the existing data from the corresponding Destination tables and loads them with the latest data ingested from the Source. As a result, if any records are deleted for child objects, those records are not present in the Destination after the next Pipeline run.

Revision History

Refer to the following table for the list of key updates made to this page:

| Date | Release | Description of Change |
|---|---|---|
| Jan-20-2026 | NA | New document. |