Google Drive


User account-based authentication is no longer supported for the Google Drive Source. We recommend that you migrate your existing Pipelines to a service account for uninterrupted data replication. Read Migrating User Account-Based Pipelines to Service Account for the steps to do this.

You can load data from files in your Google Drive into a Destination database or data warehouse using Hevo Pipelines. Hevo can ingest data from Excel (.xlsx), Google Sheets, CSV, and TSV files. By default, Hevo ingests data from all supported files present in the selected folders.

As of Release 1.66, __hevo_source_modified_at is uploaded to the Destination as a metadata field. For existing Pipelines that have this field:

- If this field is displayed in the Schema Mapper, ignore it and do not map it to a Destination table column; otherwise, the Pipeline displays an error.
- Hevo automatically loads this information into the __hevo_source_modified_at column, which is already present in the Destination table.

You can, however, continue to use __hevo_source_modified_at to create transformations using the function event.getSourceModifiedAt(). Read Metadata Column __hevo_source_modified_at.

Existing Pipelines that do not have this field are not impacted.
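As a minimal sketch of reading this value in a Python transformation: the `transform(event)` entry point and `event.getProperties()` are assumed to follow Hevo's Python transformation interface, and `event.getSourceModifiedAt()` is the function named above. `StubEvent` and the `file_modified_at` column are hypothetical, included only so the sketch runs outside Hevo. This page does not state the type of the returned value, so the sketch copies it unchanged.

```python
class StubEvent:
    """Hypothetical stand-in for the Event object that Hevo passes to transform()."""

    def __init__(self, event_name, properties, source_modified_at):
        self._event_name = event_name
        self._properties = properties
        self._source_modified_at = source_modified_at

    def getProperties(self):
        return self._properties

    def getSourceModifiedAt(self):
        return self._source_modified_at


def transform(event):
    # Read the Source file's modification time through the documented function.
    modified_at = event.getSourceModifiedAt()
    if modified_at is not None:
        # Copy it into a regular column (hypothetical name), for example to use
        # it in downstream logic, rather than mapping the metadata field itself.
        event.getProperties()["file_modified_at"] = modified_at
    return event


# Usage with the stub; the timestamp value is illustrative only.
event = transform(StubEvent("sales_q1", {"region": "EU"}, 1719532800000))
print(event.getProperties())
```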

If your Google Drive account does not contain any new Events to be ingested, Hevo defers the data ingestion for a pre-determined time. Hevo re-attempts to fetch the data only after the deferment period elapses. Read Deferred Data Ingestion.

User Authentication

You can connect to the Google Drive Source only via service accounts. One service account can be mapped to your entire team. Read Google Account Authentication Methods to learn how to set up a service account if you do not already have one.

Treatment of Duplicate Column Headers

To identify each column in the Source uniquely during ingestion, Hevo renames any duplicate column headers that it finds, as follows:

- Columns with the same header: The column header is changed to lowercase and the column letter is suffixed to it. For example, if columns G, H, and K all have the header test, they are renamed to test_g, test_h, and test_k. The same applies to headers that contain spaces. For example, if columns H, I, and J all have the header Software Used, they are renamed to software_used_h, software_used_i, and software_used_j.
- Columns with the same header but different case: All the column headers are changed to lowercase and assigned a sequential numeric suffix. For example, columns named test, Test, and tEst are renamed to test, test_1, and test_2 during the auto-mapping phase. The same applies to headers that contain spaces. For example, columns named Software Used and Software used are renamed to software_used and software_used_1.
- Columns with __Hevo_id as the header: The column header is changed to __hevo_id, and the __hevo_id column that Hevo adds to keep track of offsets during ingestion is named __hevo_id_1.

- Columns with __hevo_id_ as the header: Hevo suffixes the column letter to the column header. For example, if the __hevo_id_ column is column B, it is renamed to __hevo_id_b. If a __hevo_id_b column is also present in the Source column list, the original __hevo_id_ column is renamed to __hevo_id_b_b. This is done because Hevo adds the __hevo_id_ column as a primary key to keep track of offsets during ingestion, so any existing column with that name is renamed.
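The first two renaming rules above can be sketched in Python as follows. This is a minimal illustration, not Hevo's implementation, and `rename_duplicate_headers` is a hypothetical helper. It assumes the input is a list of (column letter, header) pairs in sheet order, normalizes headers by lowercasing them and replacing whitespace with underscores (matching the Software Used examples), and leaves headers without duplicates unchanged. It does not model the __hevo_id rules or sheets that mix identical and case-only duplicates of the same name, which the page does not describe.

```python
import re
from collections import Counter, defaultdict


def normalize(header: str) -> str:
    # Lowercase and replace whitespace runs with "_" ("Software Used" -> "software_used").
    return re.sub(r"\s+", "_", header.strip().lower())


def rename_duplicate_headers(columns: list[tuple[str, str]]) -> list[str]:
    """Hypothetical helper. columns holds (column_letter, header) pairs in sheet order."""
    exact_counts = Counter(header for _, header in columns)

    # Case-only variants of each normalized name, in first-seen order.
    case_variants = defaultdict(list)
    for _, header in columns:
        if exact_counts[header] == 1:
            case_variants[normalize(header)].append(header)

    renamed = []
    for letter, header in columns:
        key = normalize(header)
        if exact_counts[header] > 1:
            # Rule 1: identical headers get the lowercase column letter as a suffix.
            renamed.append(f"{key}_{letter.lower()}")
        elif len(case_variants[key]) > 1:
            # Rule 2: case-only variants get a sequential numeric suffix.
            position = case_variants[key].index(header)
            renamed.append(key if position == 0 else f"{key}_{position}")
        else:
            renamed.append(header)  # no duplicate: left as-is in this sketch
    return renamed


print(rename_duplicate_headers([("G", "test"), ("H", "test"), ("K", "test")]))
# ['test_g', 'test_h', 'test_k']
print(rename_duplicate_headers([("A", "test"), ("B", "Test"), ("C", "tEst")]))
# ['test', 'test_1', 'test_2']
```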

Source Considerations

- The Google Drive Files: export API cannot export Google Sheets larger than 10 MB. As a result, Hevo cannot ingest such sheets.


Limitations

- Hevo does not support the .xls format of Excel files.
- Hevo sets a limit of 50 MB for Excel sheets and 5 GB for CSV and TSV files. If you need to ingest larger CSV and TSV files, contact Hevo Support to get the limits increased for your team.
- If the name of a Drive folder is modified after the Pipeline is created, new Events from that folder are ingested with a different Event name, derived from the new folder name.
- Hevo does not load data from a column into the Destination table if the column's size exceeds 16 MB, and skips the Event if it exceeds 40 MB. If an Event contains a column larger than 16 MB, Hevo attempts to load the Event after dropping that column's data. However, if the Event size still exceeds 40 MB, the Event is dropped. As a result, you may see discrepancies between your Source and Destination data. To avoid this, ensure that each Event contains less than 40 MB of data.
- Hevo currently supports only the ISO 8859-1 character set. If the file name or content includes unsupported characters, such as emojis or special symbols, the Pipeline may fail. If your file uses a different character set, contact Hevo Support.
- If you initially select a parent folder for ingestion and later reconfigure the Source to select specific child folders within it, Hevo treats each newly selected child folder as a separate ingestion path. This triggers a new historical load for those folders, even if their data was previously ingested through the parent folder.

  To avoid duplicate ingestion, you can skip the historical load and configure the child folders to resume from a specific point using the Change Position option. Any data ingested using the Change Position action is billable.
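A local pre-flight check against the limits listed above can be sketched as follows. This is an illustrative Python sketch, not a Hevo tool, and all function names are hypothetical. It assumes that MB and GB are binary units, that the 50 MB Excel limit applies to the whole .xlsx file, and that an Event's size can be approximated as the sum of its column values; this page specifies none of these. It checks only file names for ISO 8859-1 characters, but `is_iso_8859_1` can be applied to decoded file content the same way. If Hevo Support has raised the CSV/TSV limit for your team, adjust the constants.

```python
import os
from typing import Dict, List, Optional

# Limits as listed on this page. Treating MB/GB as binary units is an assumption.
MB = 1024 * 1024
GB = 1024 * MB
FILE_SIZE_LIMITS = {".xlsx": (50 * MB, "50 MB"), ".csv": (5 * GB, "5 GB"), ".tsv": (5 * GB, "5 GB")}
COLUMN_LIMIT = 16 * MB
EVENT_LIMIT = 40 * MB


def is_iso_8859_1(text: str) -> bool:
    """Return True if every character in text exists in ISO 8859-1 (Latin-1)."""
    try:
        text.encode("iso-8859-1")
    except UnicodeEncodeError:
        return False
    return True


def check_file(name: str, size_bytes: int) -> List[str]:
    """Hypothetical helper: list the documented file-level limits a file would hit."""
    problems = []
    ext = os.path.splitext(name)[1].lower()
    if ext == ".xls":
        problems.append("the .xls format is not supported")
    if ext in FILE_SIZE_LIMITS:
        limit, label = FILE_SIZE_LIMITS[ext]
        if size_bytes > limit:
            problems.append(f"{ext} files larger than {label} are not ingested")
    if not is_iso_8859_1(name):
        problems.append("the file name contains characters outside ISO 8859-1")
    return problems


def apply_event_limits(event: Dict[str, bytes]) -> Optional[Dict[str, Optional[bytes]]]:
    """Hypothetical model of the per-Event rule: drop the data of columns over 16 MB
    (modeled here as None), then drop the whole Event (return None) if it still
    exceeds 40 MB."""
    trimmed = {col: (None if len(val) > COLUMN_LIMIT else val) for col, val in event.items()}
    size = sum(len(val) for val in trimmed.values() if val is not None)
    return None if size > EVENT_LIMIT else trimmed


print(check_file("report.xls", 2 * MB))
# ['the .xls format is not supported']
print(check_file("sales 📈.csv", 1 * MB))
# ['the file name contains characters outside ISO 8859-1']
```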


Revision History

Refer to the following table for the list of key updates made to this page:

| Date | Release | Description of Change |

|---|---|---|

| Jul-07-2025 | NA | Updated the Limitations section to inform about the max record and column size in an Event. |

| Jun-02-2025 | NA | Updated section, Limitations to add a point about new historical loads when selecting child folders for ingestion. |

| May-08-2025 | NA | Updated section, Limitations to add a point about Hevo supporting only ISO-8859-1 encoding format. |

| Jan-07-2025 | NA | Updated the Limitations section to add information on Event size. |

| Aug-19-2024 | NA | Updated section, Configuring Google Drive as a Source to remove mentions of user account-based authentication. |

| Aug-07-2023 | NA | - Updated section, Configuring Google Drive as a Source to add information about the supported file formats. - Updated section, Limitations to add information about Hevo not supporting the .xls file format. |

| Feb-21-2023 | NA | Updated sections, Source Considerations and Limitations to add information about file size limit. |

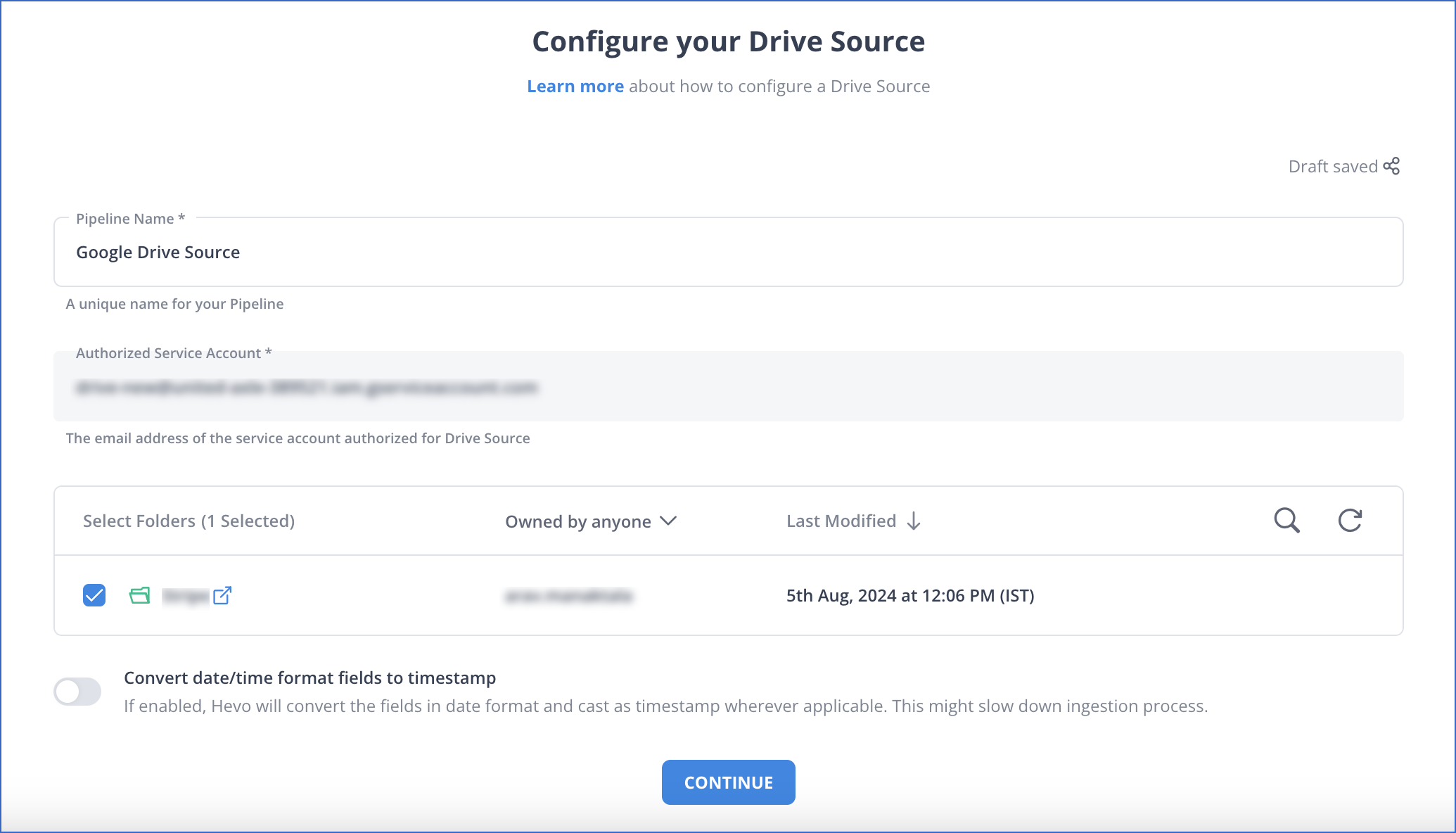

| Nov-08-2022 | NA | Updated section, Configuring Google Drive as a Source to add information about the Convert date/time format fields to timestamp option. |

| Sep-21-2022 | NA | Updated section, Schema and Data Model to add examples for the scenarios. |

| Apr-11-2022 | NA | Updated screenshot in the Configuring Google Drive as a Source section to reflect the latest UI. |

| Apr-11-2022 | 1.86 | Updated sections, Schema and Data Model and Limitations to reflect support for TSV file format. |

| Apr-11-2022 | 1.86 | Added section, Treatment of Duplicate Column Headers. |

| Mar-21-2022 | 1.85 | Updated section, Limitations to remove the point about Hevo not supporting UTF-16 encoding format for CSV files. |

| Mar-07-2022 | 1.83 | Added content in the section, Schema and Data Model, regarding worksheets with duplicate column headers. |

| Dec-06-2021 | 1.77 | Removed the limitation of not ingesting data from shared Drives, as Hevo supports it now. |

| Sep-09-2021 | NA | Added the limitation about Hevo not supporting ingestion of shared Drives. Read Limitations. |

| Aug-8-2021 | NA | Added a note in the Source Considerations section about Hevo deferring data ingestion in Pipelines created with this Source. |

| Jul-26-2021 | NA | Added a note in the Overview section about Hevo providing a fully-managed Google BigQuery Destination for Pipelines created with this Source. |

| Jun-28-2021 | 1.66 | Updated the page overview with information about __hevo_source_modified_at being uploaded as a metadata field from Release 1.66 onwards. |

| May-05-2021 | 1.62 | - Included steps to connect to Drive using a service account. - Updated the document as per the latest UI and functionality. |

| Feb-22-2021 | NA | Added the limitation about Hevo not supporting UTF-16 encoding format for CSV data. Read Limitations. |