
Databricks

Databricks is a data analytics platform that uses Delta Lake, an open-source storage layer, to let you operate a data lakehouse architecture. This architecture provides data warehousing performance at data lake costs. Databricks runs on top of your existing data lake and is fully compatible with Apache Spark APIs. Apache Spark is an open-source data analytics engine that can perform analytics and data processing on very large data sets. Read A Gentle Introduction to Apache Spark on Databricks.

Hevo can load data from any of your Sources into a Databricks data warehouse. You can set up the Databricks Destination on the fly, while creating the Pipeline, or independently from the Navigation bar. The ingested data is first staged in Hevo's S3 bucket before it is batched and loaded to the Databricks Destination.

Hevo supports Databricks on the AWS, Azure, and GCP platforms. You can connect your Databricks warehouse hosted on any of these platforms to Hevo using one of the following methods:

- Using the Databricks Partner Connect (Recommended Method)

- Using the Databricks credentials

The following image illustrates the key steps that you need to complete to configure Databricks as a Destination in Hevo:

Prerequisites

- If you want to connect to the workspace using the Databricks Partner Connect, your Databricks account is on the Premium plan or above.

- An active AWS, Azure, or GCP account is available.

- A Databricks workspace is created in your cloud service account (AWS, Azure, or GCP).

- If the IP access lists feature is enabled for your workspace, the workspace allows connections from the Hevo IP addresses of your region.

  Note: You must have Admin access to create an IP access list.

- The URL of your Databricks workspace is available. It is in the format https://<deployment name>.cloud.databricks.com. For example, if the deployment name is dbc-westeros, the workspace URL is https://dbc-westeros.cloud.databricks.com.

- If you want to connect to the workspace using your Databricks credentials, the following requirements are met:

  - The Databricks cluster or SQL warehouse is created.

  - The database hostname, port number, and HTTP Path are available.

  - The Personal Access Token (PAT) is available.

- You are assigned the Team Collaborator role, or any administrator role except the Billing Administrator role, in Hevo to create the Destination.
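Both the workspace URL and the REST API base path used later on this page are derived from the deployment name. The following is a minimal Python sketch, assuming the `https://<deployment name>.cloud.databricks.com` format described above; `workspace_url` and `api_base_path` are hypothetical helpers, not part of Hevo or Databricks.

```python
def workspace_url(deployment_name: str) -> str:
    """Build the workspace URL from a deployment name such as dbc-westeros."""
    # Strip surrounding whitespace so copy-pasted values still work.
    name = deployment_name.strip()
    if not name:
        raise ValueError("deployment name must not be empty")
    return f"https://{name}.cloud.databricks.com"


def api_base_path(deployment_name: str) -> str:
    """Build the REST API 2.0 base path for the same workspace."""
    return f"{workspace_url(deployment_name)}/api/2.0"


if __name__ == "__main__":
    print(workspace_url("dbc-westeros"))  # https://dbc-westeros.cloud.databricks.com
    print(api_base_path("dbc-westeros"))  # https://dbc-westeros.cloud.databricks.com/api/2.0
```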

Connect Databricks as a Destination using either of the following methods:

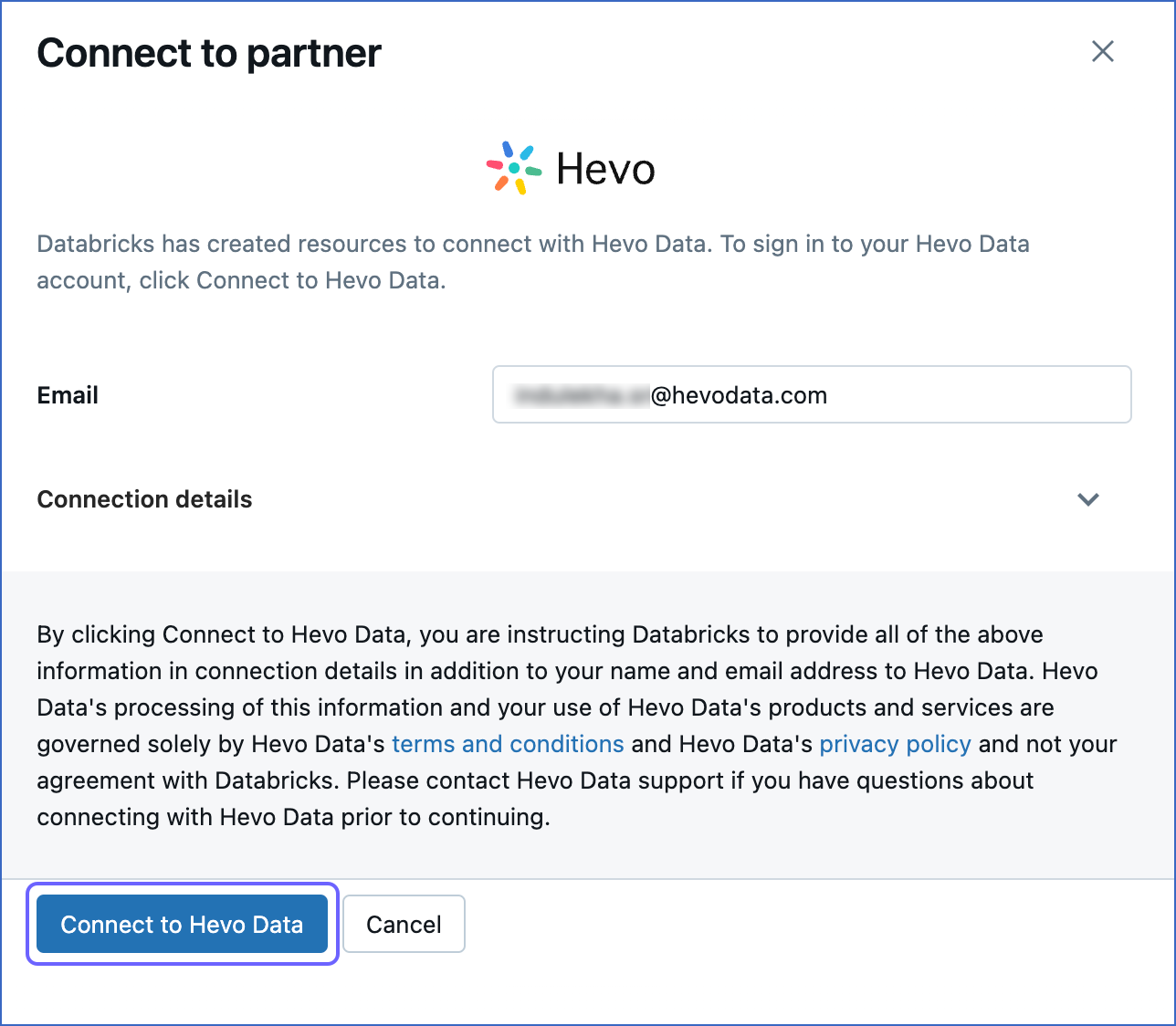

Connect Using the Databricks Partner Connect (Recommended Method)

Perform the following steps to configure Databricks as the Destination using the Databricks Partner Connect:

-

Log in to your Databricks account.

-

In the left navigation pane, click Partner Connect.

-

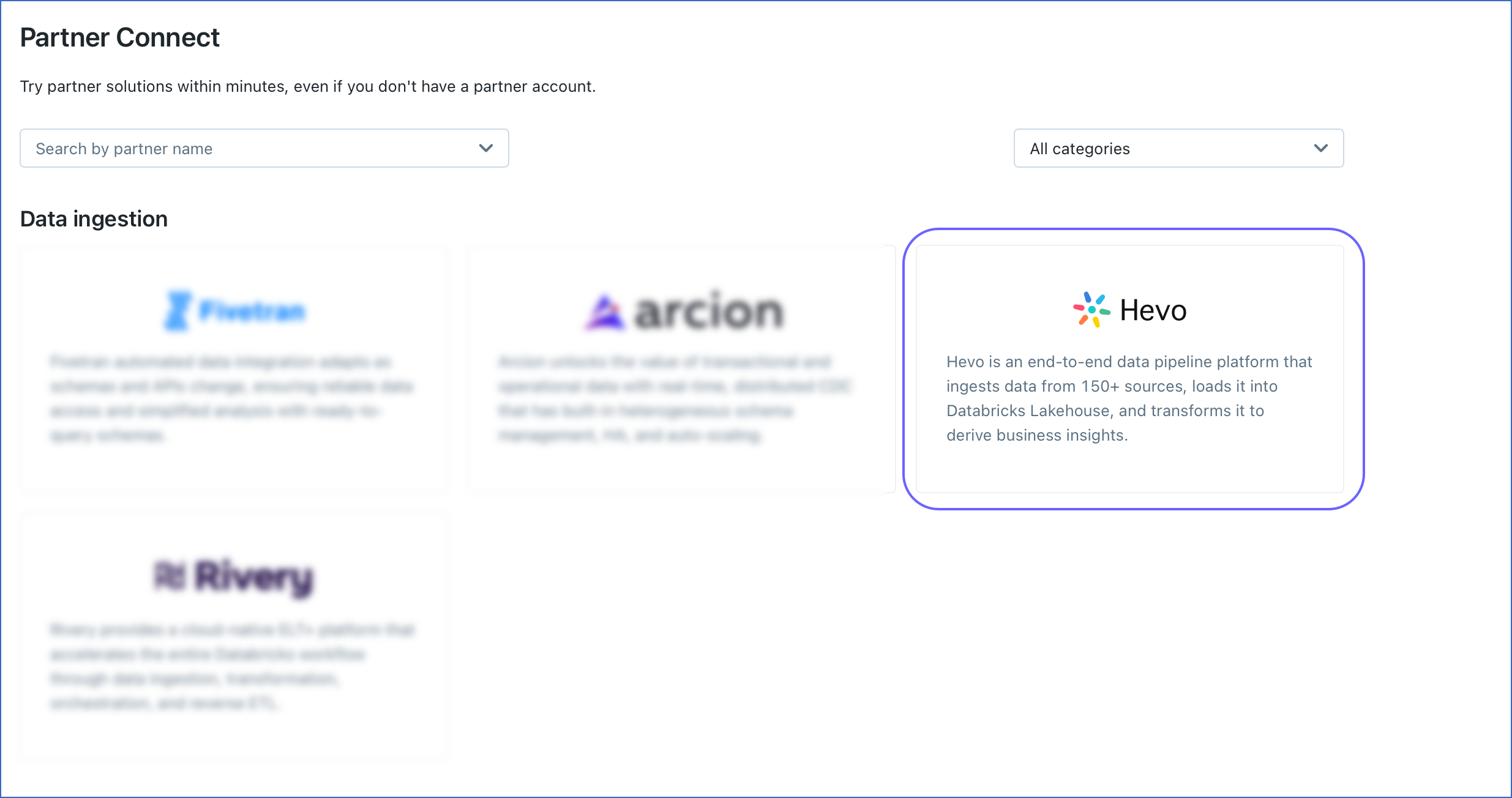

On the Partner Connect page, under Data Ingestion, click Hevo.

-

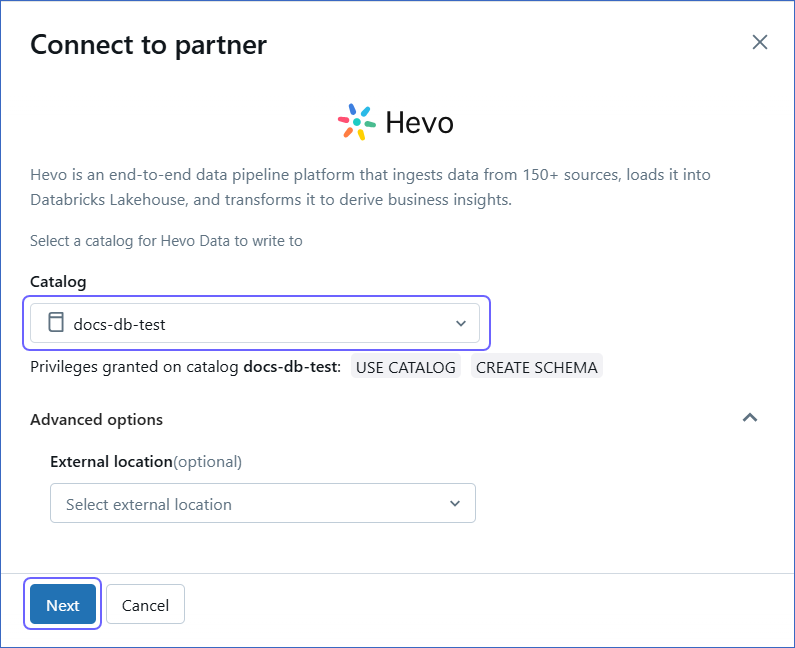

In the Connect to partner pop-up window, select the Catalog to which Hevo needs to write your data and click Next.

-

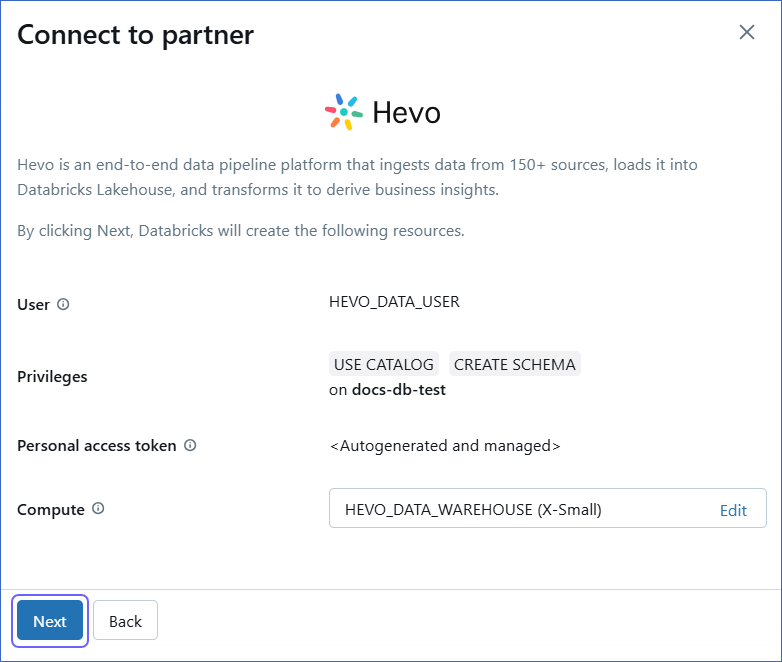

Review the settings of your Databricks resources. If required, edit the Compute field, and then click Next.

-

Click Connect to Hevo Data.

-

Sign up for Hevo or log in to your Hevo account. Post-login, you are redirected to the Configure your Databricks Destination page.

-

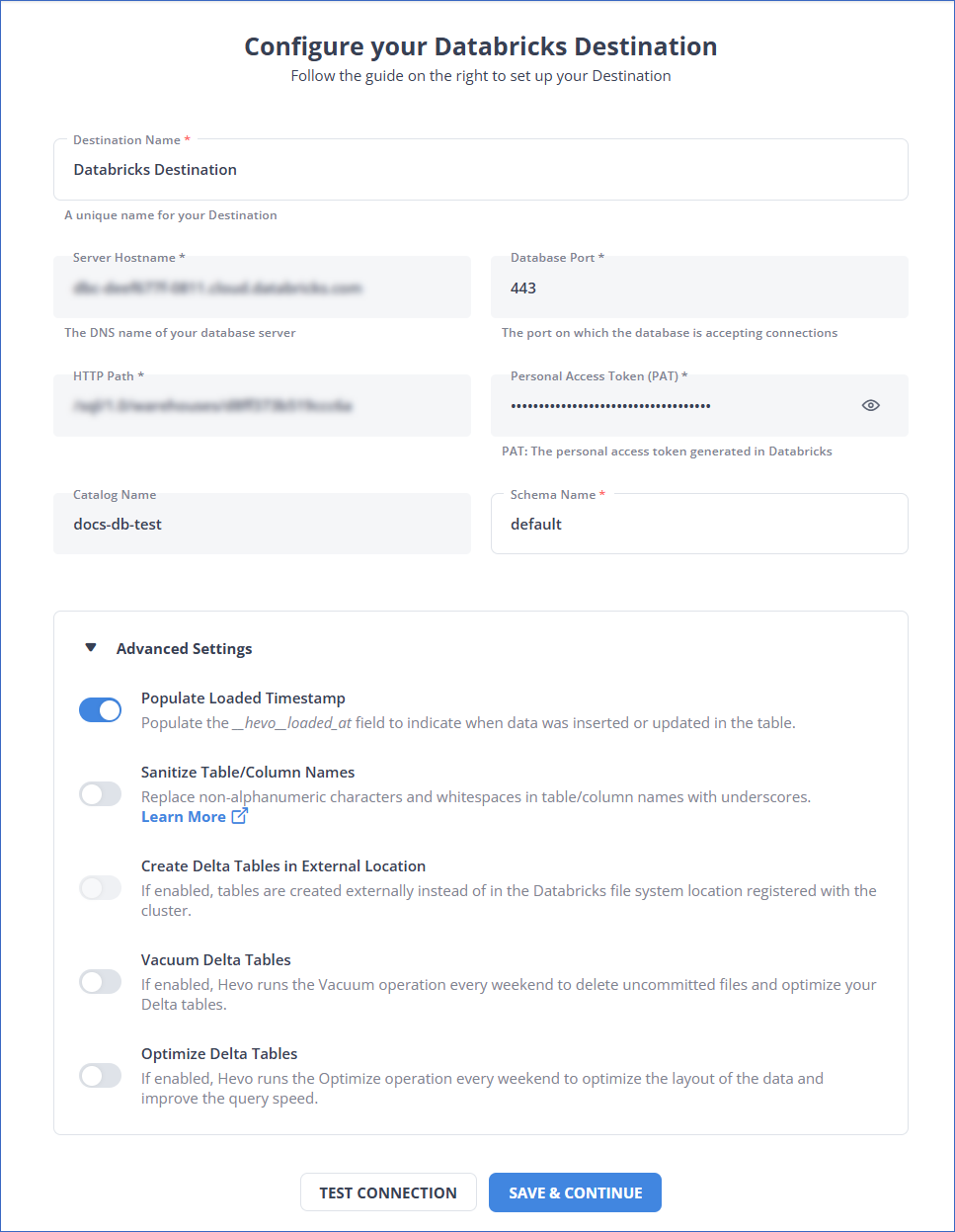

On the Configure your Databricks Destination page, specify the following:

Note: While establishing Partner Connect, the details of the Server Hostname, Database Port, HTTP Path, Personal Access Token (PAT), and Catalog Name fields are auto-populated, as Databricks creates these resources. Copy the PAT and save it securely like any other password. You may need to use it to allowlist Hevo’s IP address for your database region.

-

Destination Name: A unique name for the Destination, not exceeding 255 characters.

-

Schema Name: The name of the Destination database schema. Default value: default.

-

Advanced Settings:

-

Populate Loaded Timestamp: If enabled, Hevo appends the

___hevo_loaded_at_column to the Destination table to indicate the time when the Event was loaded. -

Sanitize Table/Column Names: If enabled, Hevo sanitizes the column names to remove all non-alphanumeric characters and spaces in the table and column names and replaces them with an underscore (_). Read Name Sanitization.

-

Create Delta Tables in External Location (Optional) (Non-editable): If enabled, Hevo creates the external Delta tables in the

/{schema}/{table}path, which is the default Databricks File System location registered with the cluster.Note: This option is disabled as Databricks automatically configures this option when connecting using the Databricks Partner Connect.

-

Vacuum Delta Tables: If enabled, Hevo runs the Vacuum operation every weekend to delete the uncommitted files and clean up your Delta tables. Databricks charges additional costs for these queries. Read Databricks Cloud Partners documentation to know more about vacuuming.

-

Optimize Delta Tables: If enabled, Hevo runs the Optimize queries every weekend to optimize the layout of the data and improve the query speed. Databricks charges additional costs for these queries. Read Databricks Cloud Partners documentation to know more about optimization.

-

-

-

Click TEST CONNECTION. This button is enabled once all the mandatory fields are specified.

Note: You must allow connections from the Hevo IP addresses of your region to your workspace if you have enabled the IP access lists feature for it.

-

Click SAVE & CONTINUE. This button is enabled once all the mandatory fields are specified.
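To illustrate what the Sanitize Table/Column Names option does, here is a minimal Python approximation of the rule as stated above: every character that is not an ASCII letter or digit, including spaces, is replaced with an underscore. This is only a sketch; Hevo's actual implementation may apply further rules, which are described in Name Sanitization. The function name `sanitize_name` is hypothetical.

```python
import re

# Assumption: "alphanumeric" means ASCII letters and digits only.
_NON_ALNUM = re.compile(r"[^A-Za-z0-9]")


def sanitize_name(name: str) -> str:
    """Replace each non-alphanumeric character in a table or column name with '_'."""
    return _NON_ALNUM.sub("_", name)


if __name__ == "__main__":
    for raw in ["order id", "price($)", "customer-email"]:
        print(f"{raw!r} -> {sanitize_name(raw)!r}")
```

Each offending character maps to its own underscore, so `price($)` becomes `price___` rather than a single underscore.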

Connect Using the Databricks Credentials

Perform the steps in this section to create a Databricks workspace, create a cluster or SQL warehouse, and obtain the Databricks credentials.

(Optional) Create a Databricks workspace

A workspace refers to your Databricks deployment in the cloud service account. Within the workspace, you can create clusters and SQL warehouses and generate the credentials for configuring Databricks as a Destination in Hevo.

To create a workspace:

- Log in to your Databricks account.

- Create a workspace for your cloud provider. Read Databricks Cloud Partners for the documentation related to each cloud partner (AWS, Azure, and GCP).

You are automatically assigned Admin access to the workspace that you create.

(Optional) Add Members to the Workspace

Once you have created the workspace, add your team members who can access the workspace and create and manage clusters in it.

- Log in to your Databricks account with Admin access.

- Add users to the workspace and assign them the privileges required to create clusters. Read Databricks Cloud Partners documentation to learn more.

Create a Databricks Cluster or Warehouse

You must create a cluster or SQL warehouse to allow Hevo to connect to your Databricks workspace and load data. To do so, perform the steps in one of the following sections:

Create a Databricks cluster

A cluster is a compute resource that you create within a Databricks workspace and use to load objects into that workspace. You can use an existing Databricks cluster or create a new one for loading data.

Note: Apache Spark jobs are available only for clusters.

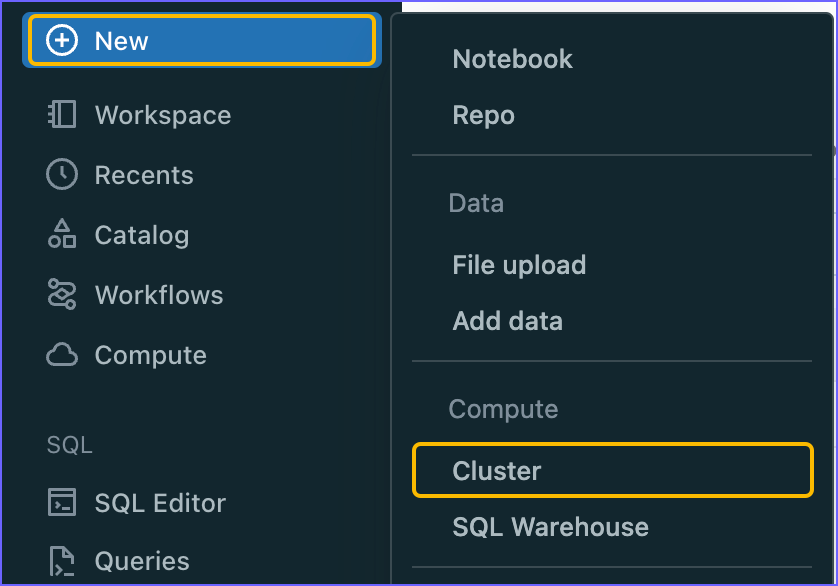

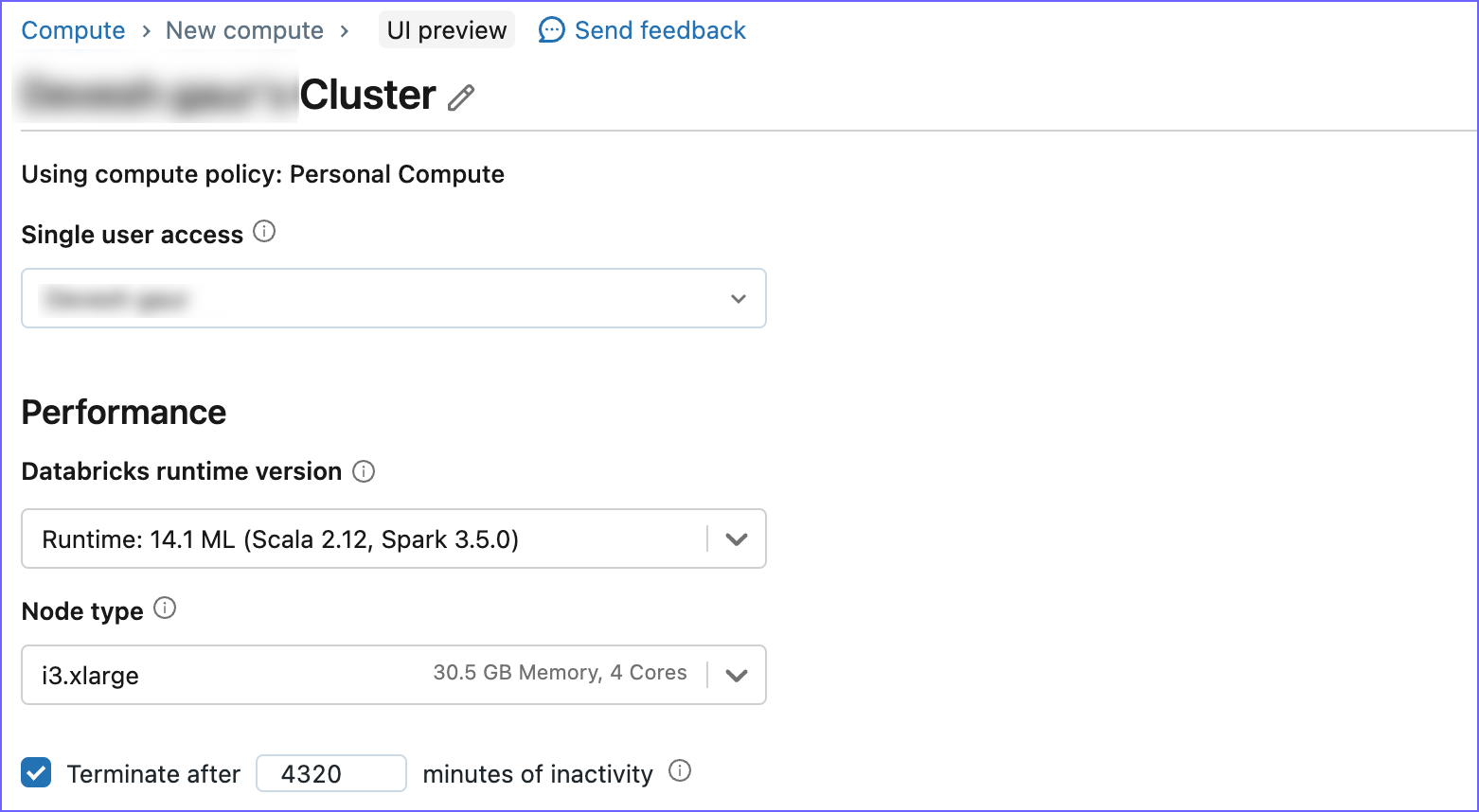

To create a Databricks cluster:

-

Log in to your Databricks workspace. URL: [https://<workspace-name><env>.databricks.com/]

-

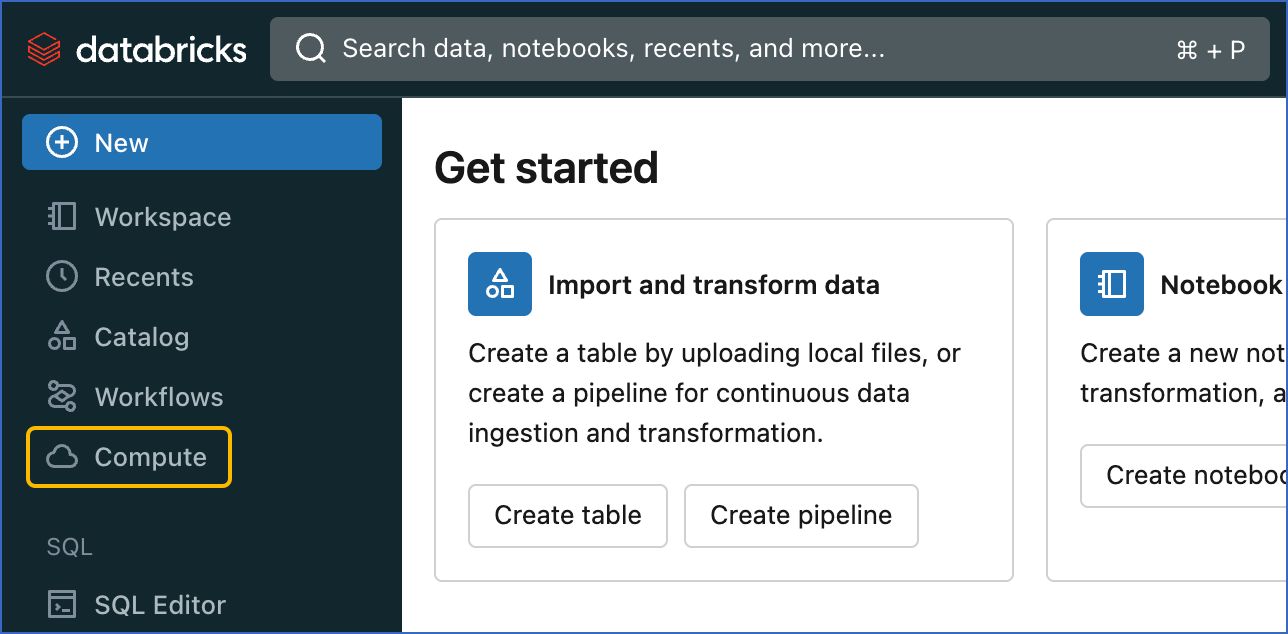

In the left navigation pane, click New, and then click Cluster.

-

Specify a Cluster name and select the required configuration, such as the Databricks runtime version and Node type.

-

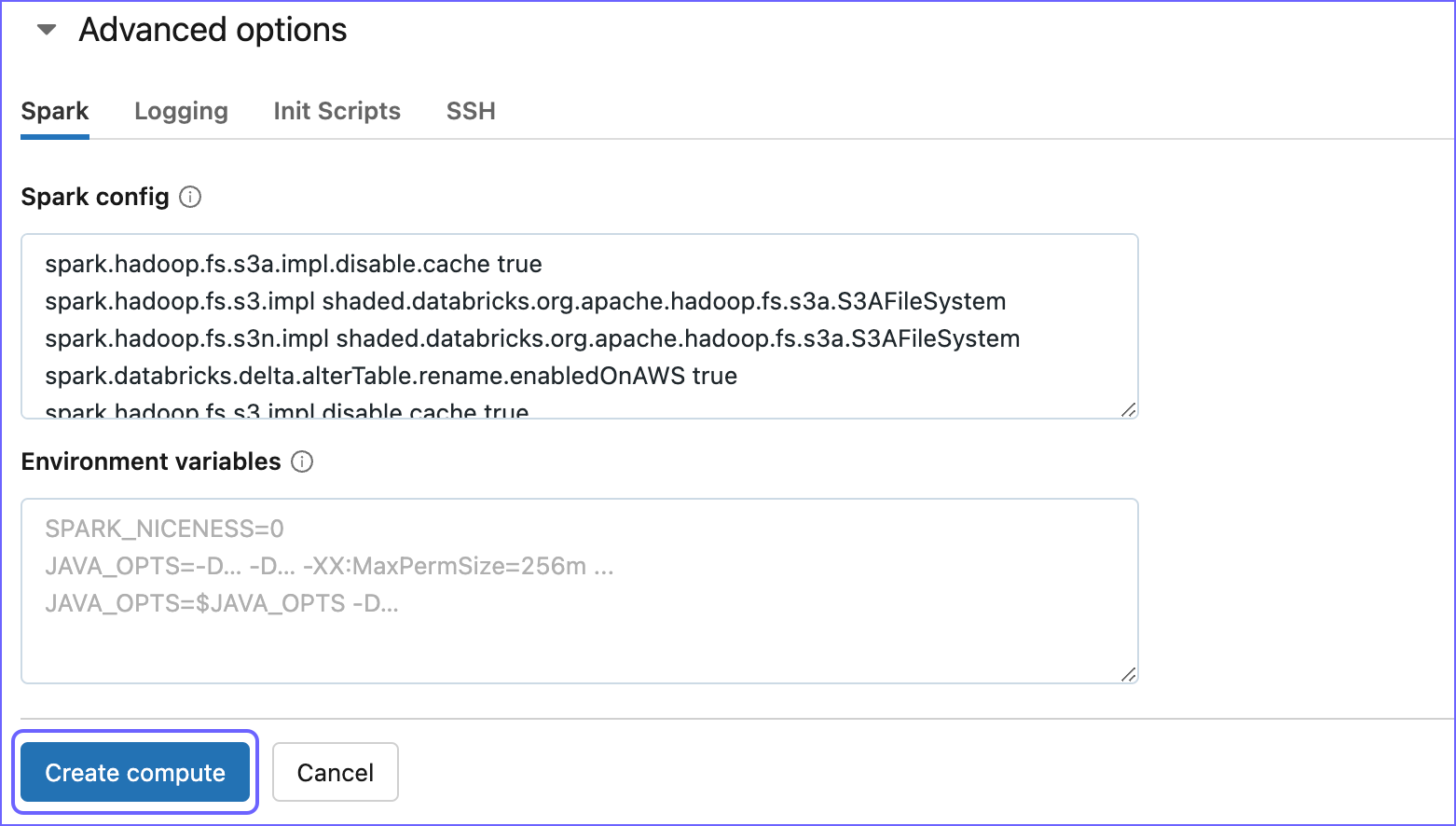

Expand the Advanced options section and click the Spark tab.

-

In the Spark Config box, paste the following code:

spark.databricks.delta.alterTable.rename.enabledOnAWS true spark.hadoop.fs.s3a.impl shaded.databricks.org.apache.hadoop.fs.s3a.S3AFileSystem spark.hadoop.fs.s3n.impl shaded.databricks.org.apache.hadoop.fs.s3a.S3AFileSystem spark.hadoop.fs.s3n.impl.disable.cache true spark.hadoop.fs.s3.impl.disable.cache true spark.hadoop.fs.s3a.impl.disable.cache true spark.hadoop.fs.s3.impl shaded.databricks.org.apache.hadoop.fs.s3a.S3AFileSystemThe above code snippet specifies the configurations required by Hevo to read the data from your AWS S3 account where the data is staged.

-

Click Create compute to create your cluster.
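If you create clusters programmatically rather than through the UI, the same Spark settings can be supplied as the `spark_conf` map of the Databricks Clusters API (`POST /api/2.0/clusters/create`). The Python sketch below only builds the request body and does not send it; the runtime version and node type passed in are placeholders you must replace, and `cluster_request_body` and `spark_config_text` are hypothetical helpers.

```python
import json
from typing import Dict

# The Spark settings from the Spark Config box above, one key-value pair per entry.
HEVO_SPARK_CONF: Dict[str, str] = {
    "spark.databricks.delta.alterTable.rename.enabledOnAWS": "true",
    "spark.hadoop.fs.s3a.impl": "shaded.databricks.org.apache.hadoop.fs.s3a.S3AFileSystem",
    "spark.hadoop.fs.s3n.impl": "shaded.databricks.org.apache.hadoop.fs.s3a.S3AFileSystem",
    "spark.hadoop.fs.s3n.impl.disable.cache": "true",
    "spark.hadoop.fs.s3.impl.disable.cache": "true",
    "spark.hadoop.fs.s3a.impl.disable.cache": "true",
    "spark.hadoop.fs.s3.impl": "shaded.databricks.org.apache.hadoop.fs.s3a.S3AFileSystem",
}


def cluster_request_body(name: str, spark_version: str, node_type_id: str,
                         num_workers: int = 1) -> dict:
    """Build a Clusters API create request that carries the Spark settings."""
    return {
        "cluster_name": name,
        "spark_version": spark_version,  # placeholder: a Databricks runtime version key
        "node_type_id": node_type_id,    # placeholder: a node type available in your cloud
        "num_workers": num_workers,
        "spark_conf": dict(HEVO_SPARK_CONF),  # copy so callers cannot mutate the constant
    }


def spark_config_text() -> str:
    """Render the settings in the 'key value' form used by the Spark Config box."""
    return "\n".join(f"{key} {value}" for key, value in HEVO_SPARK_CONF.items())


if __name__ == "__main__":
    body = cluster_request_body("hevo-loader", "<runtime-version>", "<node-type>")
    print(json.dumps(body, indent=2))
```

Keeping the settings in one dictionary means the UI text and the API payload cannot drift apart.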

Create a Databricks SQL warehouse

A SQL warehouse is a compute resource that lets you run only SQL commands on your data objects.

To create a Databricks SQL warehouse:

-

Log in to your Databricks account.

-

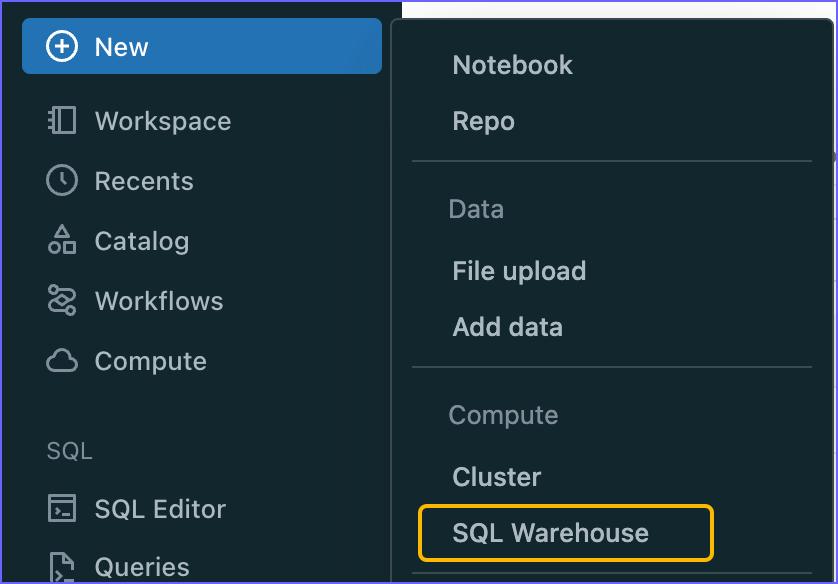

In the left navigation pane, click New, and then click SQL Warehouse.

-

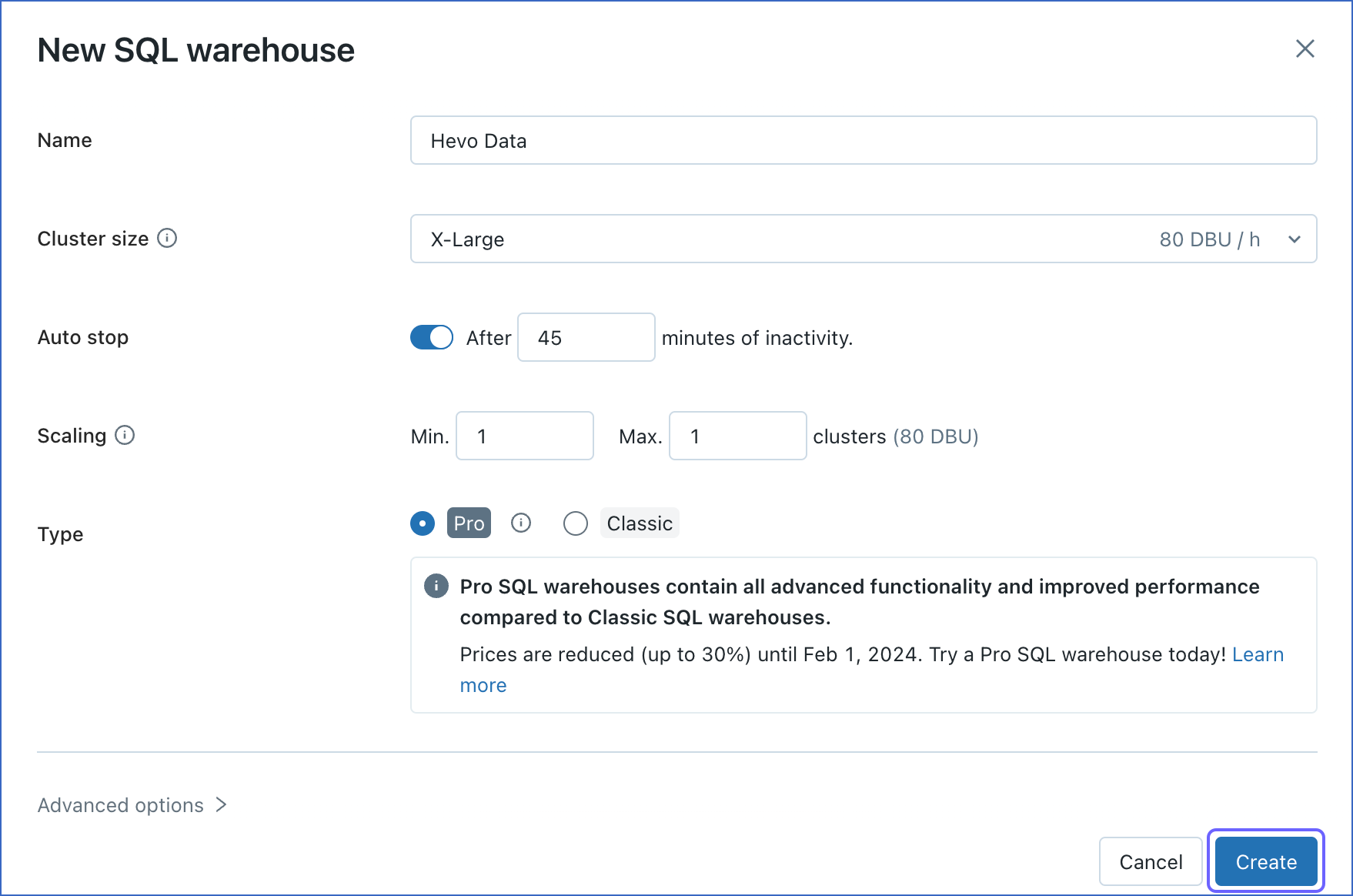

In the New SQL Warehouse window, do the following:

-

Specify a Name for the warehouse.

-

Select your Cluster Size.

-

Configure other warehouse options, as required.

-

Click Create.
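The same warehouse can also be described as a request body for the Databricks SQL Warehouses API (`POST /api/2.0/sql/warehouses`). This Python sketch builds that body without sending it. The list of accepted sizes reflects the API at the time of writing and is an assumption to check against the Databricks API reference; the defaults chosen here (`X-Small`, a 10-minute auto-stop) are illustrative, and `warehouse_request_body` is a hypothetical helper.

```python
from typing import Dict

# Assumption: sizes accepted by the SQL Warehouses API at the time of writing.
WAREHOUSE_SIZES = (
    "2X-Small", "X-Small", "Small", "Medium", "Large",
    "X-Large", "2X-Large", "3X-Large", "4X-Large",
)


def warehouse_request_body(name: str, cluster_size: str = "X-Small",
                           auto_stop_mins: int = 10) -> Dict[str, object]:
    """Build a request body for creating a SQL warehouse (not sent here)."""
    if cluster_size not in WAREHOUSE_SIZES:
        raise ValueError(f"unsupported cluster size: {cluster_size}")
    return {
        "name": name,
        "cluster_size": cluster_size,
        # A single-cluster warehouse; raise max_num_clusters to allow scaling out.
        "min_num_clusters": 1,
        "max_num_clusters": 1,
        # Idle minutes before the warehouse stops, which limits compute charges.
        "auto_stop_mins": auto_stop_mins,
    }


if __name__ == "__main__":
    print(warehouse_request_body("hevo-warehouse"))
```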


Obtain Databricks Credentials

Once you have created the cluster or warehouse for data loading, you must obtain its details for configuring Databricks in Hevo. Depending on the resource (cluster or warehouse) you created in the Create a Databricks Cluster or Warehouse section above, perform the steps in one of the following sections:

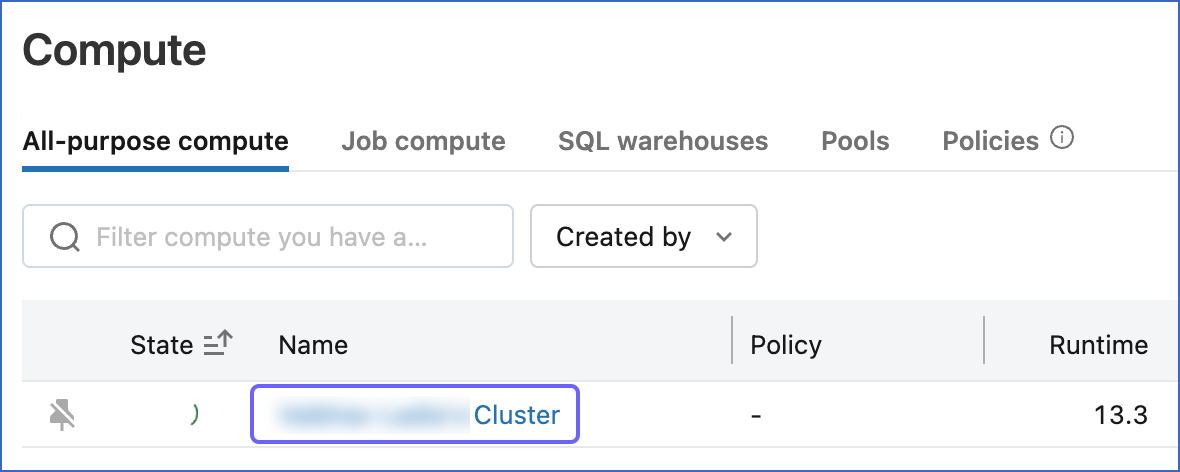

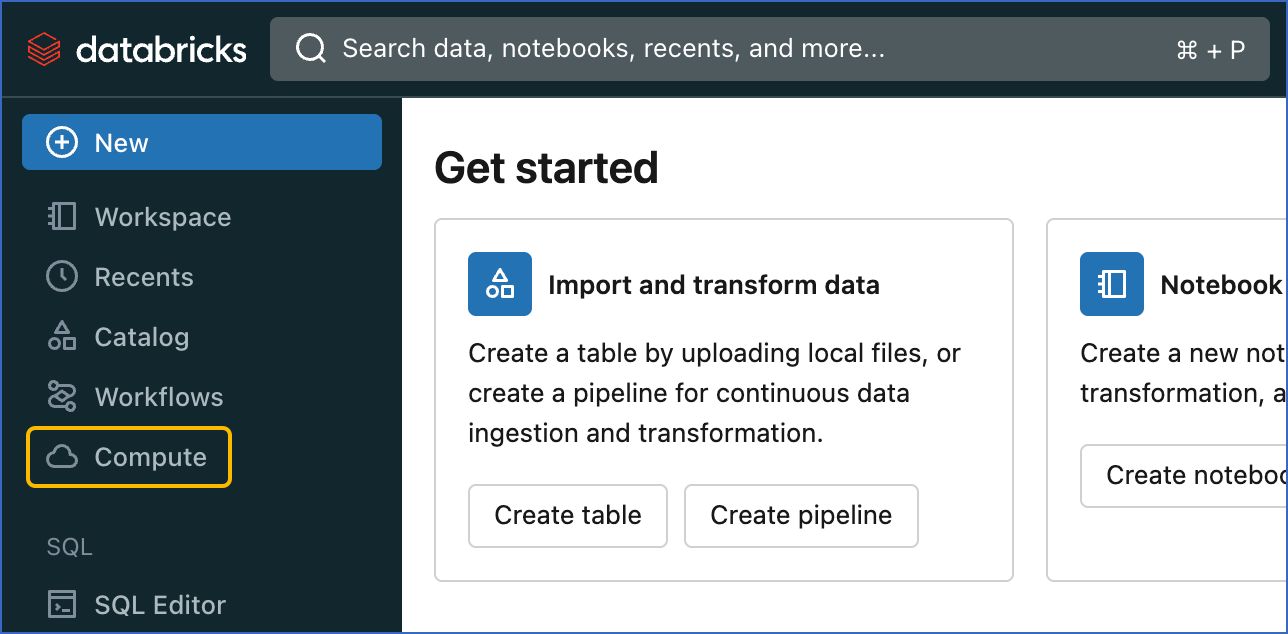

Obtain cluster details

-

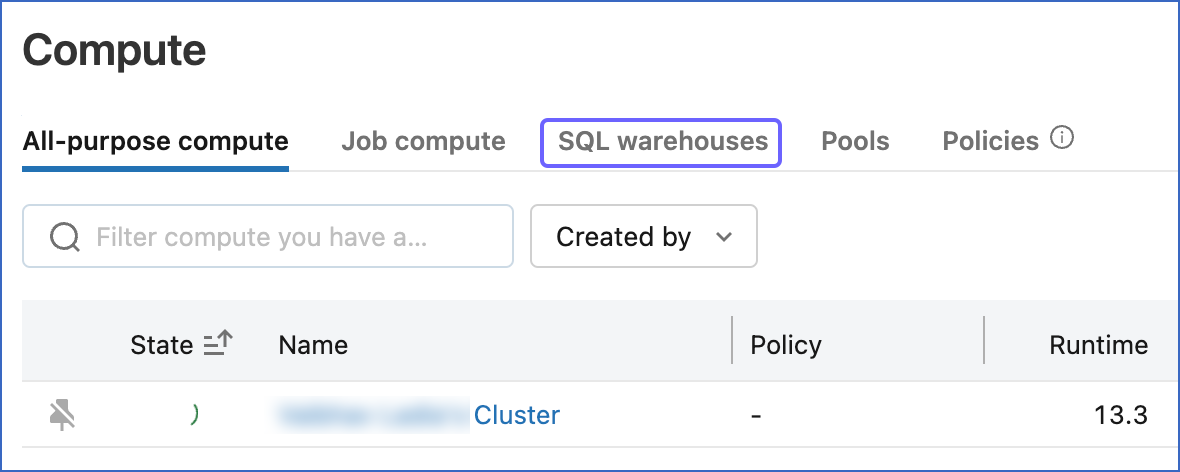

In the left navigation pane of the databricks console, click Compute.

-

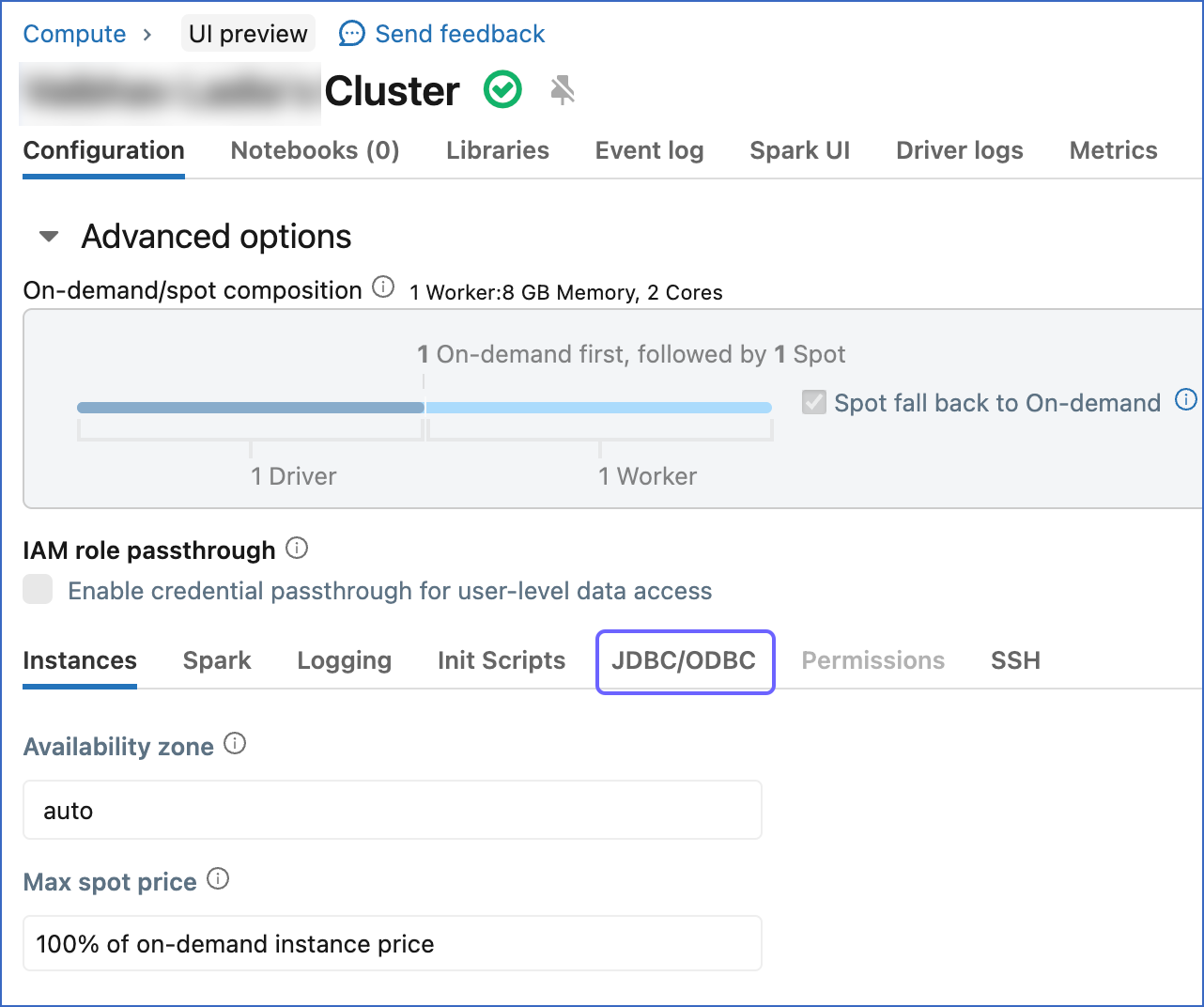

Click the cluster that you created above.

-

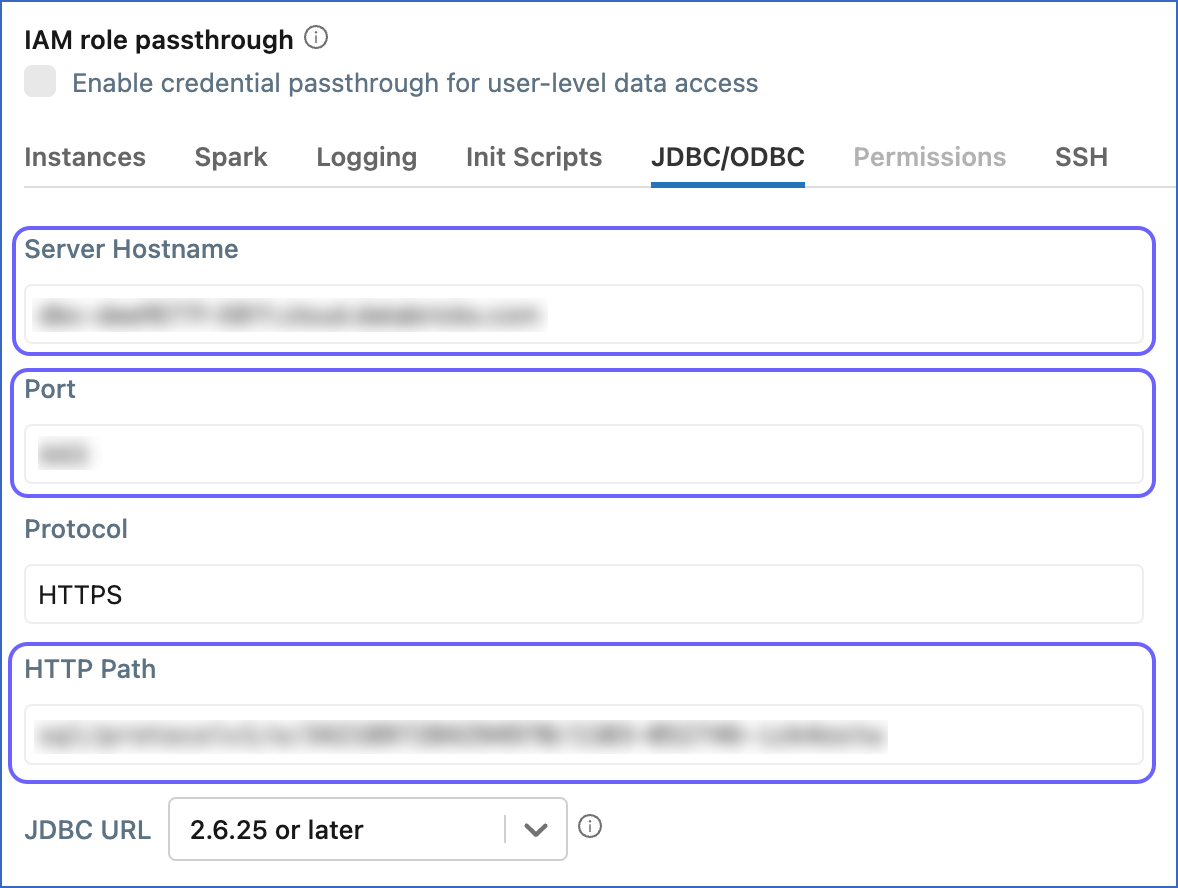

In the Configuration tab, scroll down to the Advanced Options section and click JDBC/ODBC.

-

Copy the Server Hostname, Port, and HTTP Path, and save them securely like any other password. You can use these credentials while configuring your Databricks Destination.

Obtain SQL warehouse details

-

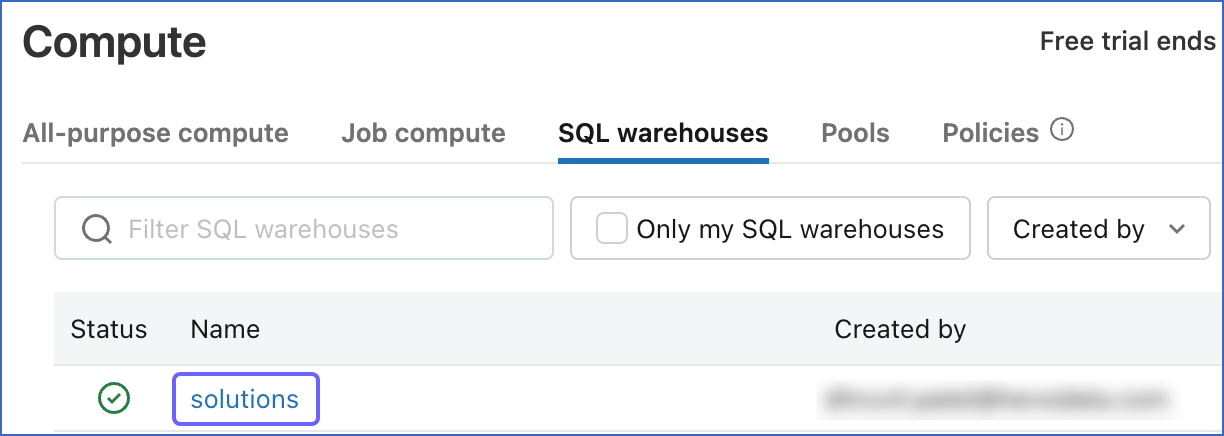

In the left navigation pane of the databricks console, click Compute.

-

On the Compute page, click SQL warehouses.

-

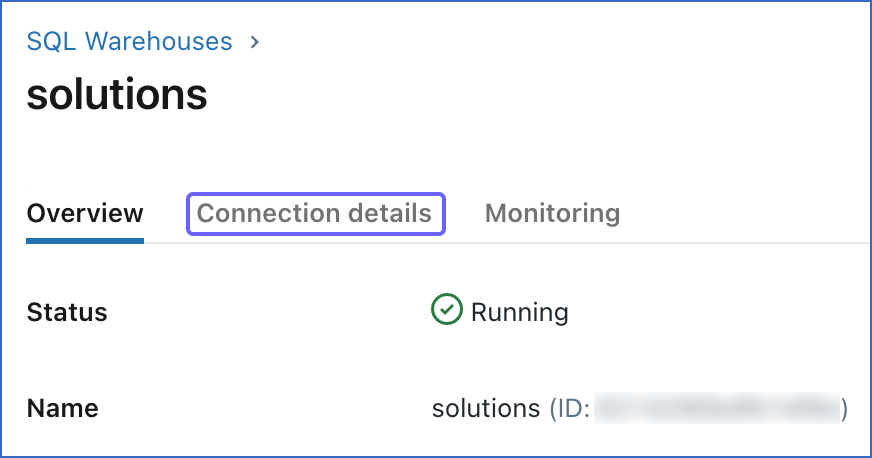

Click the SQL warehouse that you created above.

-

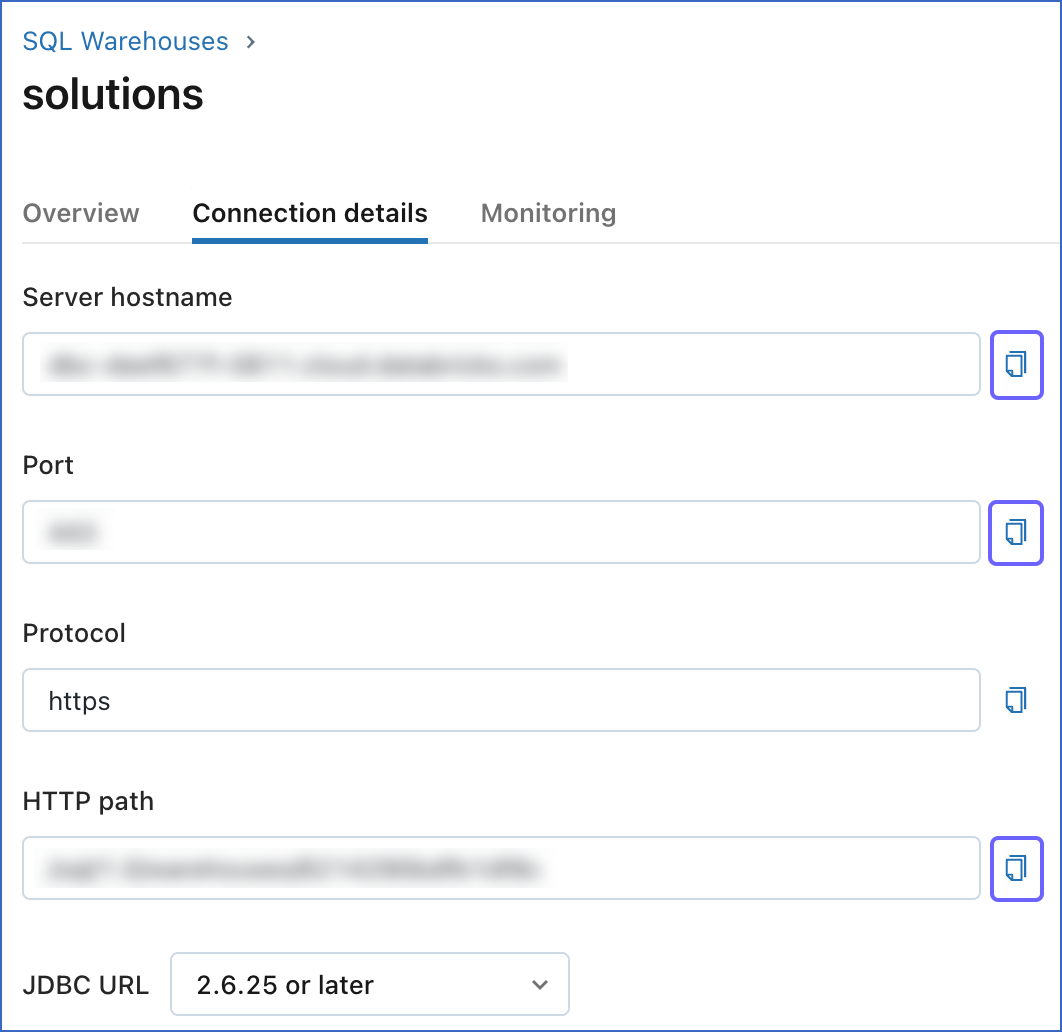

On the <SQL_Warehouse_Name> page, click Connection details.

-

Click the Copy icon corresponding to the Server hostname, Port, and HTTP path fields, to copy them and save them securely like any other password. You can use these credentials while configuring your Databricks Destination.
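The HTTP Path you copy looks different for a cluster and for a SQL warehouse. The Python sketch below tells the two apart, assuming the formats Databricks commonly displays: `/sql/1.0/warehouses/<warehouse-id>` for a SQL warehouse and `sql/protocolv1/o/<workspace-id>/<cluster-id>` for a cluster. Treat both patterns as assumptions and always rely on the value shown in your console; `http_path_kind` is a hypothetical helper.

```python
import re

# Assumed path shapes; the value displayed in your Databricks console is authoritative.
_WAREHOUSE = re.compile(r"^/?sql/1\.0/warehouses/[\w-]+$")
_CLUSTER = re.compile(r"^/?sql/protocolv1/o/\d+/[\w-]+$")


def http_path_kind(http_path: str) -> str:
    """Return 'warehouse', 'cluster', or 'unknown' for a copied HTTP Path."""
    path = http_path.strip()
    if _WAREHOUSE.match(path):
        return "warehouse"
    if _CLUSTER.match(path):
        return "cluster"
    return "unknown"
```

An `unknown` result usually means that part of the value was lost while copying.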

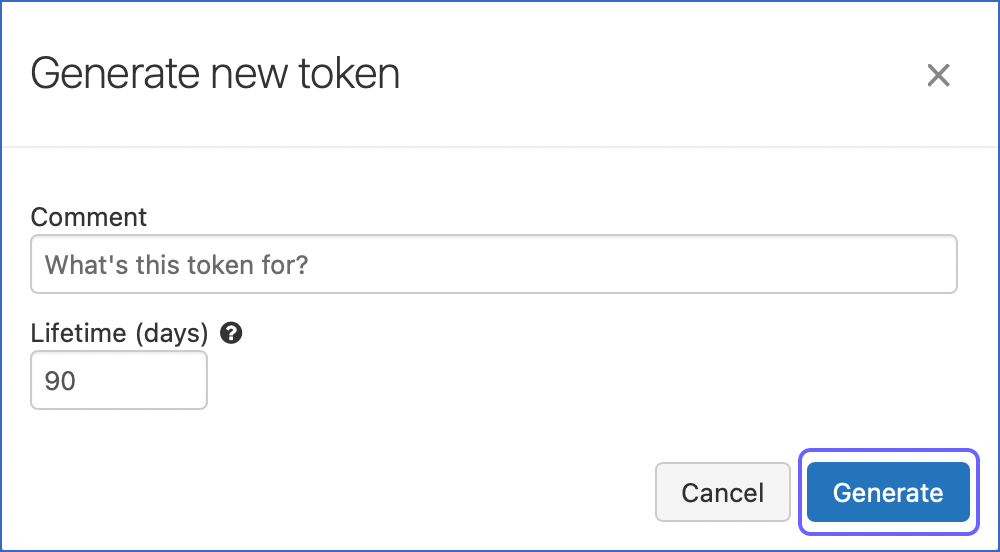

Create a Personal Access Token (PAT)

Hevo requires a Databricks Personal Access Token (PAT) to authenticate and connect to your Databricks instance and use the Databricks REST APIs.

To generate the PAT:

-

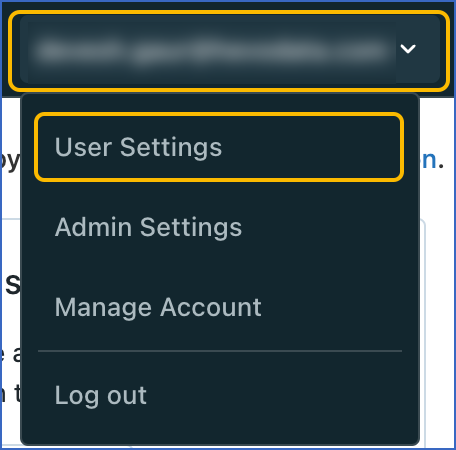

Click on the top right of your Databricks console, and in the drop-down, click User Settings.

-

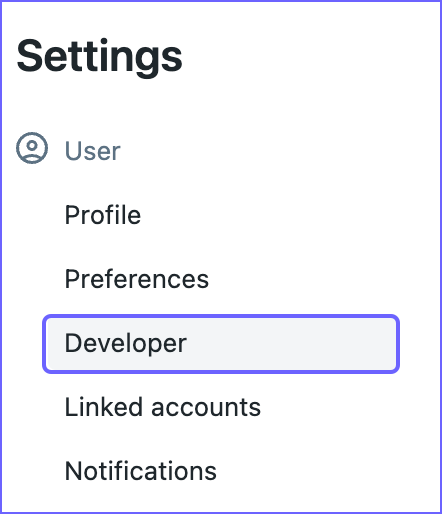

In the left navigation pane, under Settings, click Developer.

-

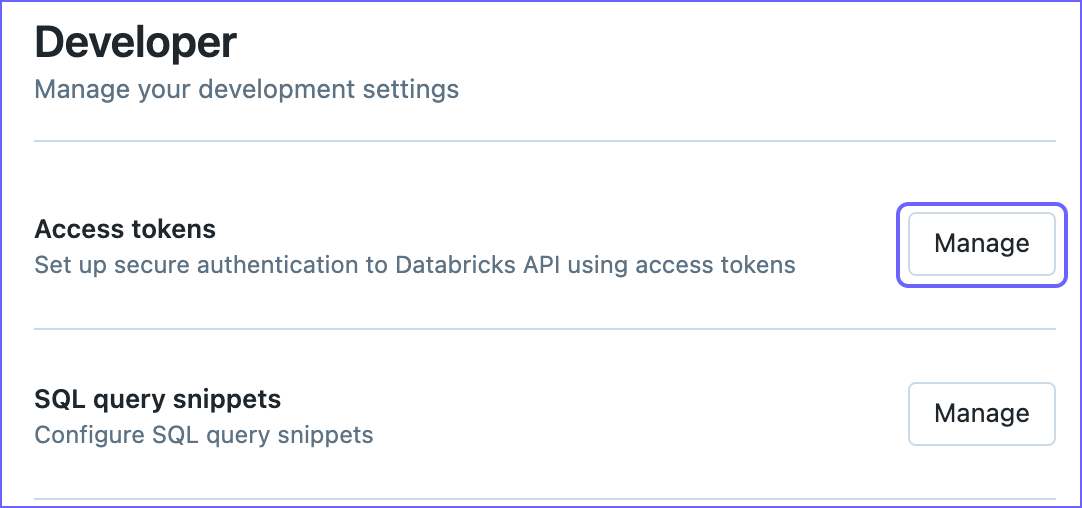

On the Developer page, click Manage corresponding to the Access tokens.

-

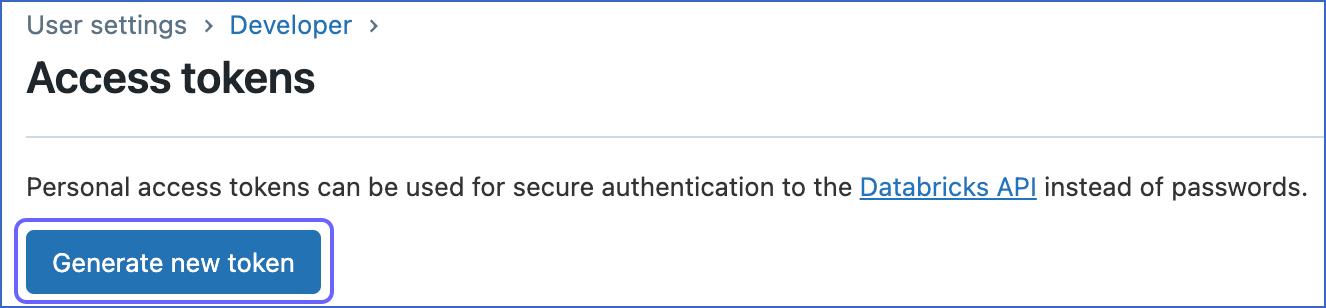

On the Access tokens page, click Generate new token.

-

Optionally, in the Generate new token dialog box, provide a description in the Comment field and specify the token Lifetime (expiration period).

-

Click Generate.

-

Copy the generated token and save it securely like any other password. Use this token to connect Databricks as a Destination in Hevo.

Note: PATs are similar to passwords; store these securely.
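If you manage tokens through the API instead of the UI, the Databricks Token API (`POST /api/2.0/token/create`) accepts the same Comment and Lifetime values, with the lifetime expressed in seconds. The Python sketch below only builds the request body, the Bearer header used in the IP access list call later on this page, and a masked form of the token that is safe to log. The 90-day default is an illustrative choice, and `token_create_body`, `bearer_header`, and `mask_token` are hypothetical helpers.

```python
from typing import Dict, Optional

SECONDS_PER_DAY = 24 * 60 * 60


def token_create_body(comment: str, lifetime_days: Optional[int] = 90) -> Dict[str, object]:
    """Build a Token API create body; pass lifetime_days=None to omit the lifetime."""
    body: Dict[str, object] = {"comment": comment}
    if lifetime_days is not None:
        if lifetime_days <= 0:
            raise ValueError("lifetime_days must be positive")
        body["lifetime_seconds"] = lifetime_days * SECONDS_PER_DAY
    return body


def bearer_header(token: str) -> Dict[str, str]:
    """Authorization header that carries the PAT as a Bearer token."""
    return {"Authorization": f"Bearer {token}"}


def mask_token(token: str) -> str:
    """Hide all but the last four characters so the PAT never appears in full in logs."""
    return "*" * max(len(token) - 4, 0) + token[-4:]
```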

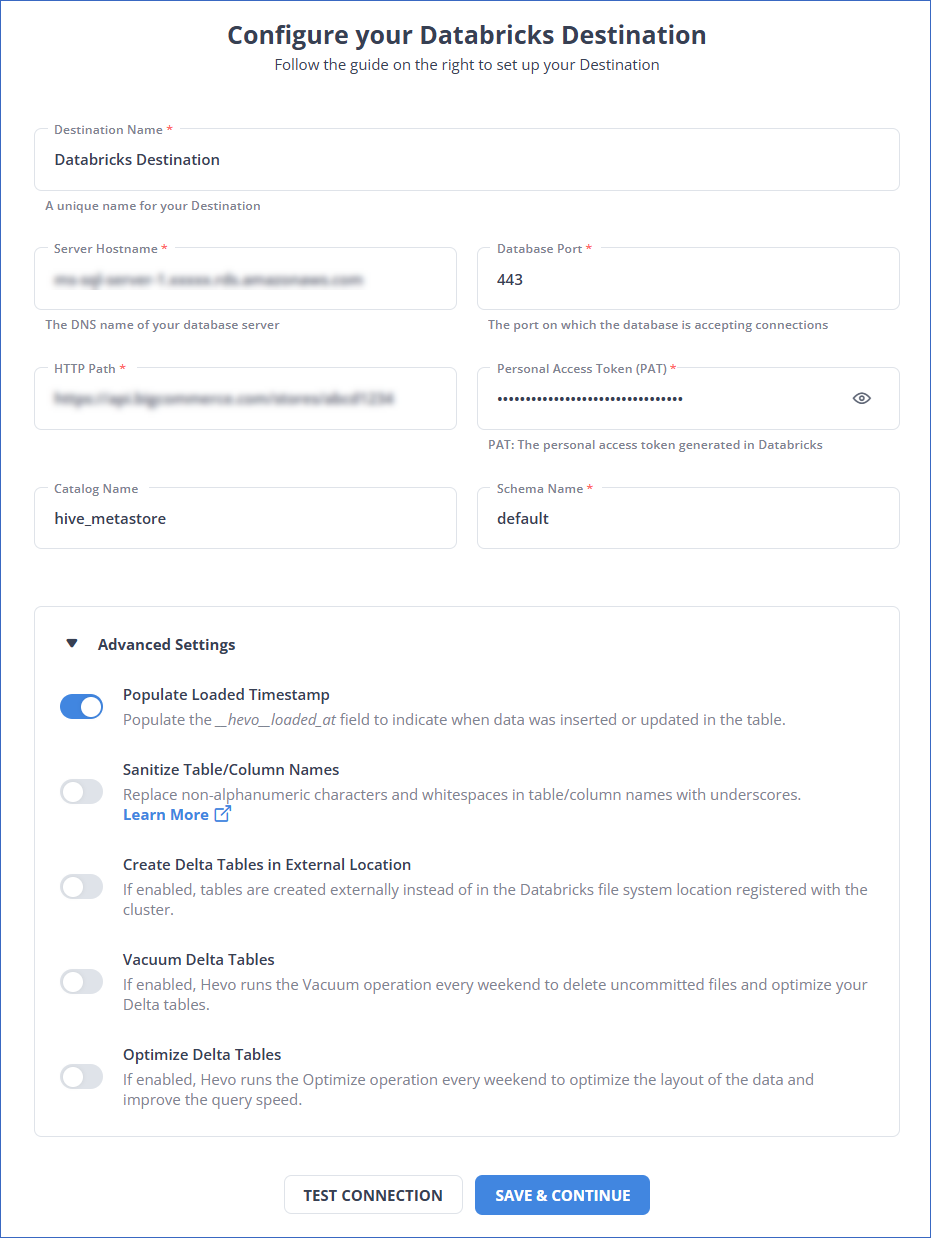

Configure Databricks as a Destination

Perform the following steps to configure Databricks as a Destination in Hevo:

- Click DESTINATIONS in the Navigation Bar.

- Click + CREATE DESTINATION in the Destinations List View.

- On the Add Destination page, select Databricks.

- On the Configure your Databricks Destination page, specify the following:

  - Destination Name: A unique name for the Destination, not exceeding 255 characters.

  - Server Hostname: The server hostname that you obtained from your Databricks account.

  - Database Port: The port that you obtained from your Databricks account. Default value: 443.

  - HTTP Path: The HTTP path that you obtained from your Databricks account.

  - Personal Access Token (PAT): The PAT that you created in your Databricks account.

  - Catalog Name: The name of the catalog that contains the Destination database.

  - Schema Name: The name of the Destination database schema. Default value: default.

  - Advanced Settings:

    - Populate Loaded Timestamp: If enabled, Hevo appends the `___hevo_loaded_at_` column to the Destination table to indicate the time when the Event was loaded.

    - Sanitize Table/Column Names: If enabled, Hevo sanitizes the table and column names by replacing all non-alphanumeric characters and spaces with an underscore (_). Read Name Sanitization.

    - Create Delta Tables in External Location (Optional): If enabled, Hevo creates the tables in a location other than the default Databricks File System location registered with the cluster. Read Identifying the External Location for Delta Tables.

      If disabled, Hevo uses the default Databricks File System location registered with the cluster and creates the external Delta tables in the /{schema}/{table} path.

    - Vacuum Delta Tables: If enabled, Hevo runs the Vacuum operation every weekend to delete the uncommitted files and clean up your Delta tables. Databricks charges additional costs for these queries. Read Databricks Cloud Partners documentation to learn more about vacuuming.

    - Optimize Delta Tables: If enabled, Hevo runs the Optimize queries every weekend to optimize the layout of the data and improve the query speed. Databricks charges additional costs for these queries. Read Databricks Cloud Partners documentation to learn more about optimization.

- Click TEST CONNECTION. This button is enabled once all the mandatory fields are specified.

- Click SAVE & CONTINUE. This button is enabled once all the mandatory fields are specified.
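The form rules above (a Destination Name of at most 255 characters, port 443 and schema `default` when left unchanged, and TEST CONNECTION enabled only once the mandatory fields are specified) can be summarized in a small Python sketch. The class and its field names are hypothetical and only mirror the form; treating every text field as mandatory and checking the port range are assumptions.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass
class DatabricksDestinationForm:
    """Hypothetical model of the Configure your Databricks Destination form."""

    destination_name: str
    server_hostname: str
    http_path: str
    personal_access_token: str
    catalog_name: str
    database_port: int = 443      # form default
    schema_name: str = "default"  # form default

    def missing_fields(self) -> List[str]:
        """Return the text fields that are still empty (assumed mandatory)."""
        return [
            f.name
            for f in fields(self)
            if isinstance(getattr(self, f.name), str) and not getattr(self, f.name).strip()
        ]

    def validate(self) -> None:
        """Raise ValueError if the form would fail the documented checks."""
        if len(self.destination_name) > 255:
            raise ValueError("Destination Name must not exceed 255 characters")
        if not 1 <= self.database_port <= 65535:
            raise ValueError("Database Port must be between 1 and 65535")
        missing = self.missing_fields()
        if missing:
            raise ValueError("mandatory fields not specified: " + ", ".join(missing))

    def can_test_connection(self) -> bool:
        """Mirror the rule that TEST CONNECTION is enabled only for a complete form."""
        try:
            self.validate()
        except ValueError:
            return False
        return True
```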

(Optional) Allowing Connections from Hevo IP Addresses to the Databricks Workspace

Perform the steps in this section if the IP access lists feature is enabled for your workspace.

Note: You must have the Admin access role in your Databricks workspace to perform these steps.

To add an IP access list:

- Obtain the personal access token for your workspace. To do so:

  - If you are connecting using the Databricks credentials, follow the steps in Create a Personal Access Token (PAT) above.

  - If you are connecting using Partner Connect, follow these steps:

    - On the Configure your Databricks Destination page, click the View icon in the Personal Access Token (PAT) field to view the token.

    - Copy it and save it securely like any other password.

  Use the obtained PAT as the Bearer Token in your API call.

- Call the add an IP access list API with the POST method from any API client, such as Postman, or from a Terminal window. Specify the following in the JSON request body:

  - label: A string value to identify the access list. For example, Hevo, if you create the list to add Hevo IP addresses.

  - list_type: A string value to identify the type of list created. This parameter can take one of these values:

    - ALLOW: Allow connections from the specified IP addresses.

    - BLOCK: Block connections from the specified IP addresses.

  - ip_addresses: A JSON array of IP addresses and CIDR ranges, given as string values. Use the Hevo IP addresses of your region and their CIDR ranges in this parameter.

  The base path for the API endpoint is https://<deployment name>.cloud.databricks.com/api/2.0. For example, if the deployment name is dbc-westeros, the base path is https://dbc-westeros.cloud.databricks.com/api/2.0, and the URL to call the add an IP access list API is https://dbc-westeros.cloud.databricks.com/api/2.0/ip-access-lists.

  The following example adds an access list to allow Hevo IP addresses for the Asia region:

  ```shell
  curl -X POST \
    -H "Authorization: Bearer <your personal access token>" \
    -H "Content-Type: application/json" \
    -H "Accept: application/json" \
    https://<deployment-name>.cloud.databricks.com/api/2.0/ip-access-lists \
    -d '{
      "label": "HEVO",
      "list_type": "ALLOW",
      "ip_addresses": [
        "13.228.214.171/32",
        "52.77.50.136/32"
      ]
    }'
  ```

  Note: Replace the placeholder values in the command above with your own. For example, replace <deployment-name> with dbc-westeros.

- Proceed to the Configure your Databricks Destination page to continue setting up your Databricks Destination.
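The add an IP access list call described in the steps above can also be prepared with Python's standard library. The sketch below validates each entry with the `ipaddress` module and builds, but does not send, the POST request to `/api/2.0/ip-access-lists`. `build_ip_access_list_request` is a hypothetical helper, and the addresses in the usage example are the Asia-region values from the curl example.

```python
import ipaddress
import json
import urllib.request
from typing import List


def build_ip_access_list_request(deployment_name: str, token: str, label: str,
                                 ip_addresses: List[str],
                                 list_type: str = "ALLOW") -> urllib.request.Request:
    """Build the POST request that creates an IP access list (not sent here)."""
    if list_type not in ("ALLOW", "BLOCK"):
        raise ValueError("list_type must be ALLOW or BLOCK")
    # ip_network() accepts single addresses and CIDR ranges and raises ValueError
    # for malformed entries or ranges with host bits set, such as 10.0.0.1/24.
    for entry in ip_addresses:
        ipaddress.ip_network(entry, strict=True)
    url = f"https://{deployment_name}.cloud.databricks.com/api/2.0/ip-access-lists"
    body = json.dumps({
        "label": label,
        "list_type": list_type,
        "ip_addresses": ip_addresses,
    }).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
            "Accept": "application/json",
        },
    )


if __name__ == "__main__":
    req = build_ip_access_list_request(
        "dbc-westeros", "<your personal access token>", "HEVO",
        ["13.228.214.171/32", "52.77.50.136/32"],
    )
    print(req.get_method(), req.full_url)
    # To send it: urllib.request.urlopen(req) performs the call and returns the response.
```

Validating the addresses locally catches typos before the request reaches your workspace.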

Identifying the External Location for Delta Tables

If the Create Delta Tables in External Location option is enabled, Hevo creates the Delta tables in the {external-location}/{schema}/{table} path, where {external-location} is the location that you specify.

To locate the path of the external location, do one of the following:

- If you have DBFS access in Databricks:

  - In the Databricks console, click Data in the left navigation bar.

  - Click the DBFS tab at the top of the sliding sidebar.

  - Select or view the path where the tables must be created. For example, in the above image, /demo/default is the path, and the external location is derived as /demo/default/{schema}/{table}.

- If you do not have DBFS access, run the following command in your Databricks instance or the Destination workbench in Hevo:

  ```sql
  DESCRIBE TABLE EXTENDED <table-name>;
  ```

  The Location value in the output shows where the table's data is stored. Read Databricks Cloud Partners documentation to learn more about DESCRIBE TABLE.
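Once you know the external location, each Delta table's path follows the `{external-location}/{schema}/{table}` pattern described above. The Python sketch below builds that path and the matching `DESCRIBE TABLE EXTENDED` statement for checking an existing table. It assumes Databricks SQL backtick quoting for identifiers; `delta_table_path`, `quote_identifier`, and `describe_statement` are hypothetical helpers, and `sales` and `orders` are example names.

```python
import posixpath


def delta_table_path(external_location: str, schema: str, table: str) -> str:
    """Join the external location, schema, and table into one path."""
    # posixpath.join tolerates a trailing slash on the location.
    return posixpath.join(external_location, schema, table)


def quote_identifier(name: str) -> str:
    """Quote a Databricks SQL identifier with backticks, doubling any embedded backtick."""
    return "`" + name.replace("`", "``") + "`"


def describe_statement(schema: str, table: str) -> str:
    """Build the query whose output includes the table's Location."""
    return f"DESCRIBE TABLE EXTENDED {quote_identifier(schema)}.{quote_identifier(table)};"


if __name__ == "__main__":
    print(delta_table_path("/demo/default", "sales", "orders"))
    print(describe_statement("sales", "orders"))
```

For the /demo/default example above, the `orders` table in the `sales` schema would be created at /demo/default/sales/orders.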

Additional Information

Read the detailed Hevo documentation for the following related topics:

Limitations

- Hevo currently does not support Databricks as a Destination in the US-GCP region.

See Also

Revision History

Refer to the following table for the list of key updates made to this page:

| Date | Release | Description of Change |
|---|---|---|
| Dec-16-2024 | 2.31 | Updated section, Connect Using the Databricks Partner Connect (Recommended Method) to include support for Unity Catalog and to reflect the latest UI. |
| Nov-25-2024 | 2.30.1 | Updated sub-section, Configure Databricks as a Destination to describe the new Catalog Name field and updated the screenshot as per the latest Hevo UI. |
| Jul-22-2024 | NA | Updated section, Connect Using the Databricks Partner Connect to reflect the latest Databricks UI. |
| Jun-04-2024 | NA | - Added the process flow diagram in the page overview section. - Updated section, Configure Databricks as a Destination to clarify the Schema Name field. |
| Nov-28-2023 | NA | - Renamed section Connect your Databricks Warehouse to Create a Databricks Cluster or Warehouse. - Updated section, Obtain Databricks Credentials to add subsections, Obtain cluster details and Obtain SQL warehouse details. |
| Aug-10-2023 | NA | - Added a prerequisite about adding Hevo IP addresses to an access list. - Added the subsection Allow connections from Hevo IP addresses to the Databricks workspace for the steps to create an IP access list. |
| Apr-25-2023 | 2.12 | Updated section, Connect Using the Databricks Partner Connect (Recommended Method) to add information that you must specify all fields to create a Pipeline. |
| Nov-23-2022 | 2.02 | - Added section, Connect Using the Databricks Partner Connect to describe the Databricks Partner Connect integration. - Updated screenshots in the page to reflect the latest Databricks UI. |
| Oct-17-2022 | NA | Updated section, Limitations to add a limitation about Hevo not supporting Databricks on Google Cloud. |
| Jan-03-2022 | 1.79 | New document. |