Amazon Redshift

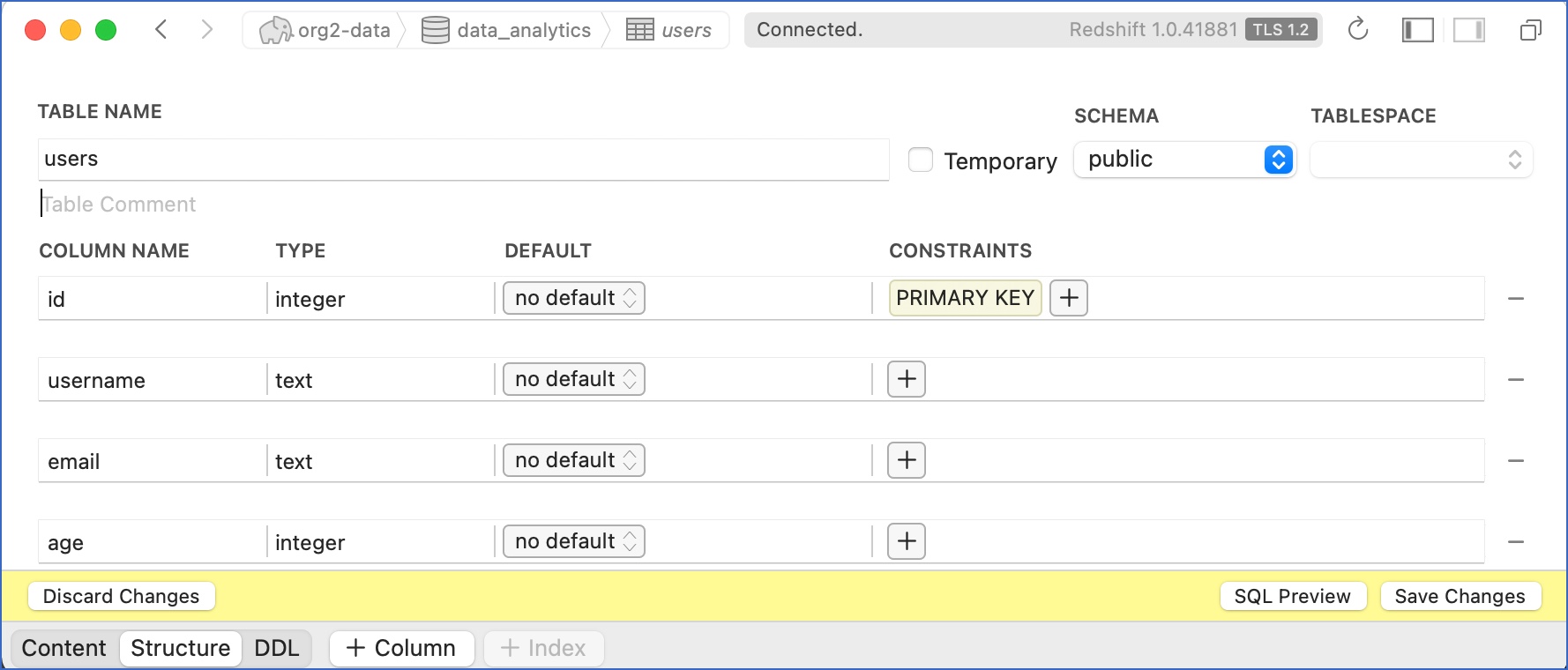


Amazon Redshift is a fully managed, reliable data warehouse service in the cloud that offers large-scale storage and analysis of data sets and supports large-scale database migrations. It is part of Amazon Web Services (AWS), Amazon's larger cloud-computing platform.

Hevo can load data from any of your Pipelines into an Amazon Redshift data warehouse. You can set up the Redshift Destination on the fly, as part of the Pipeline creation process, or independently. The ingested data is first staged in Hevo’s S3 bucket before it is batched and loaded to the Amazon Redshift Destination.

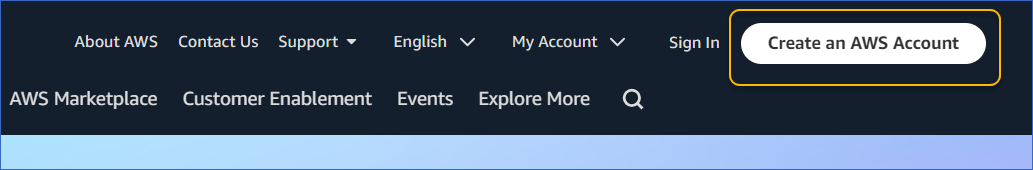

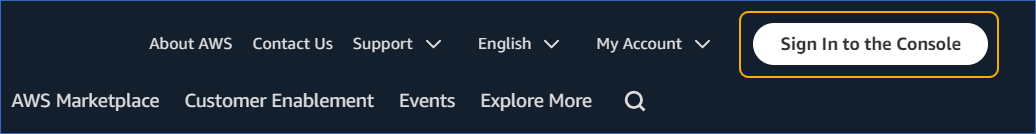

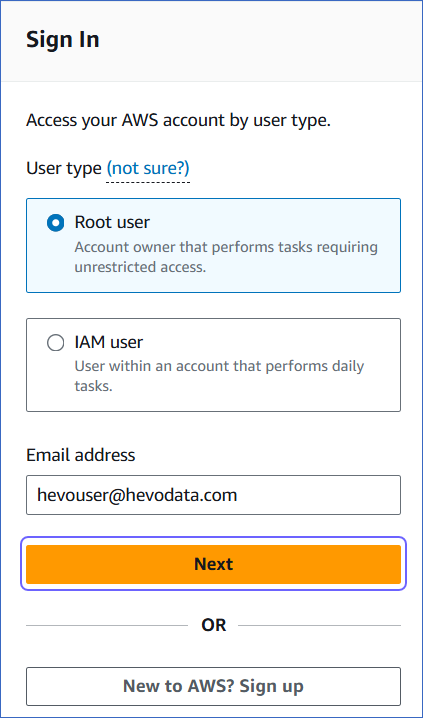

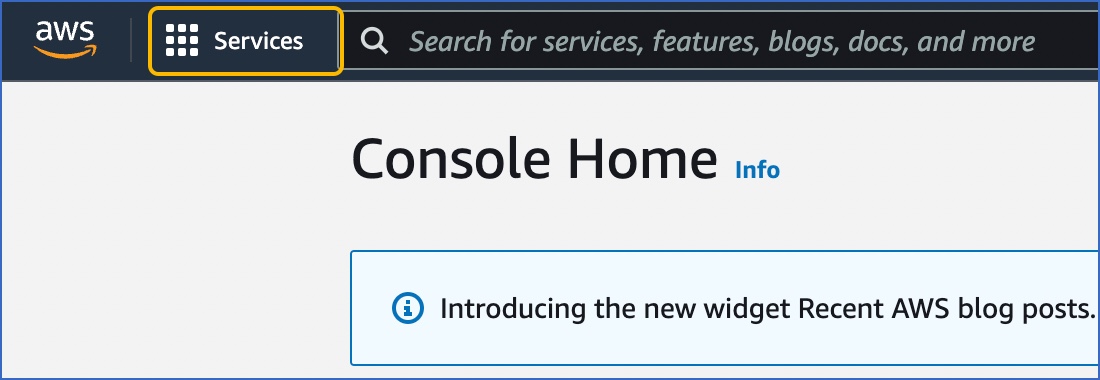

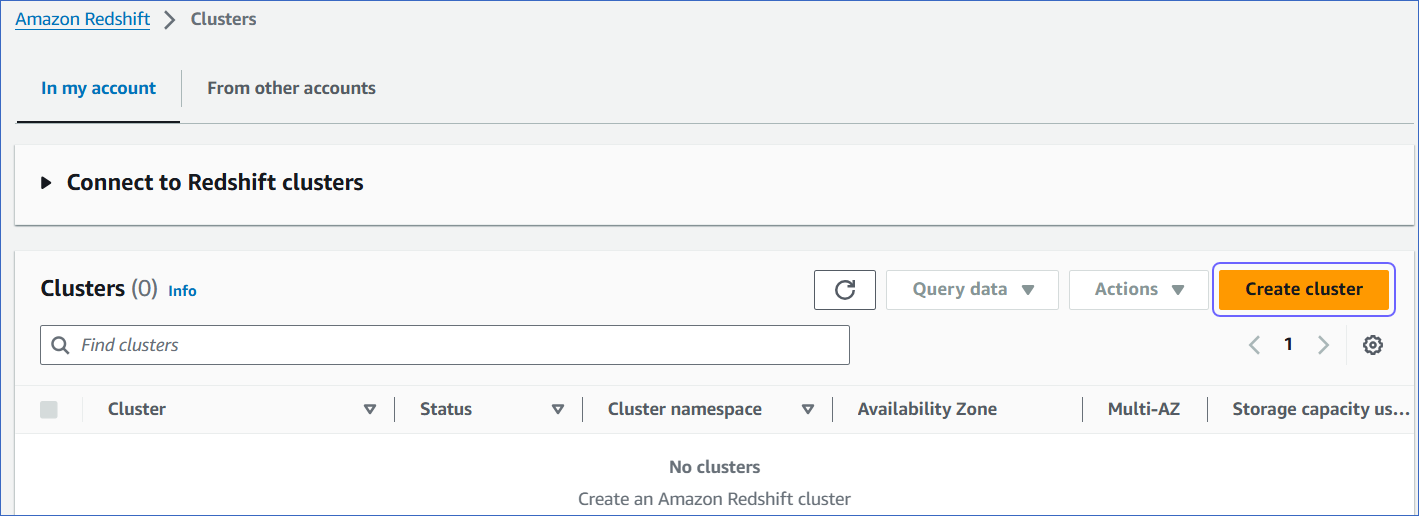

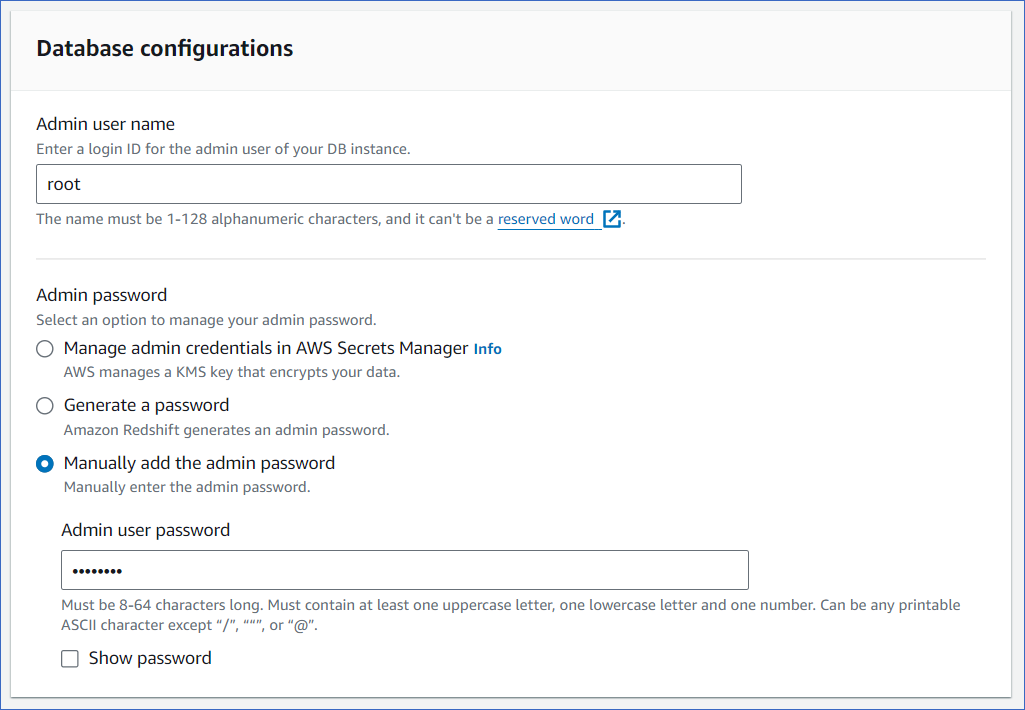

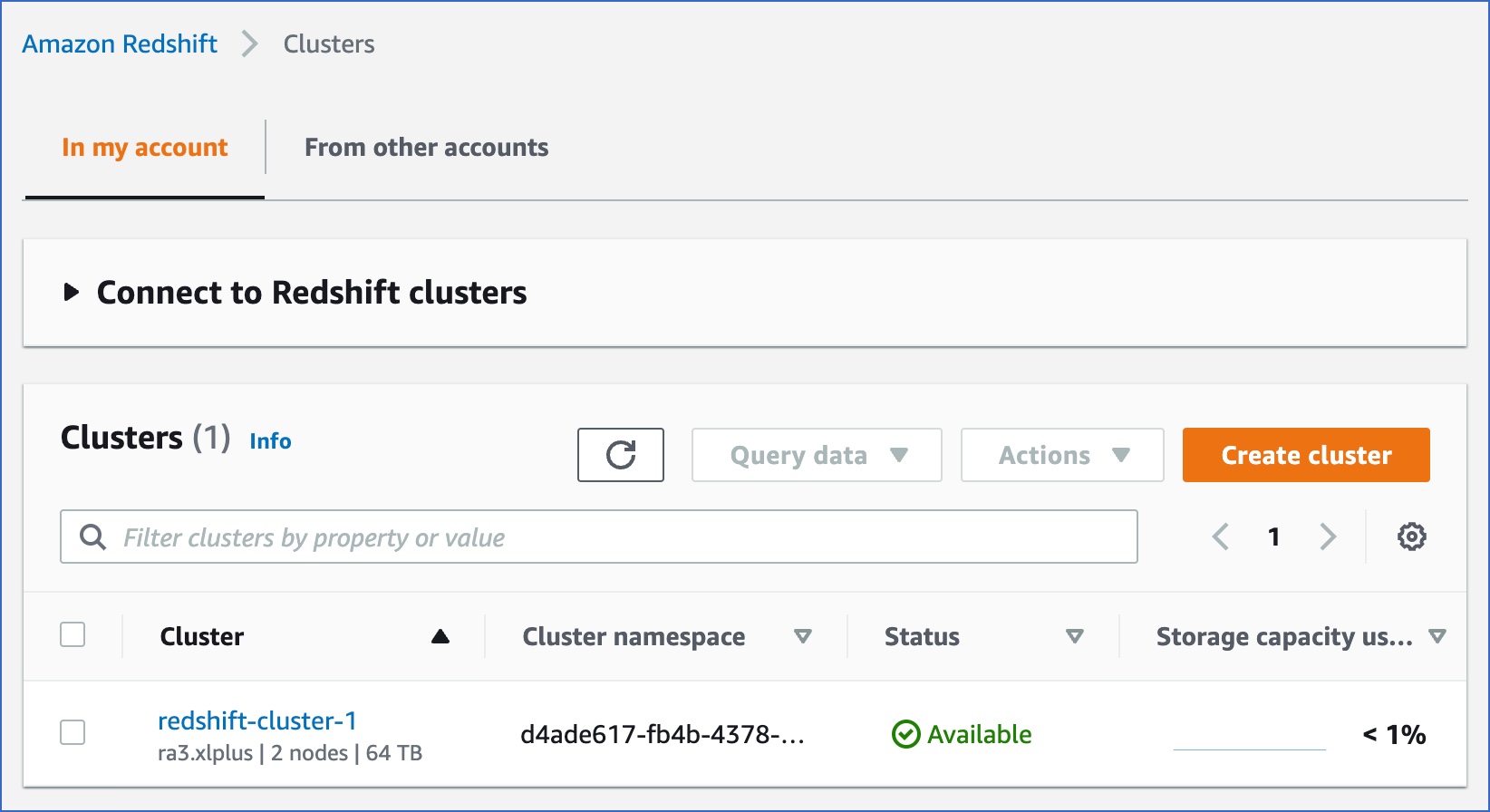

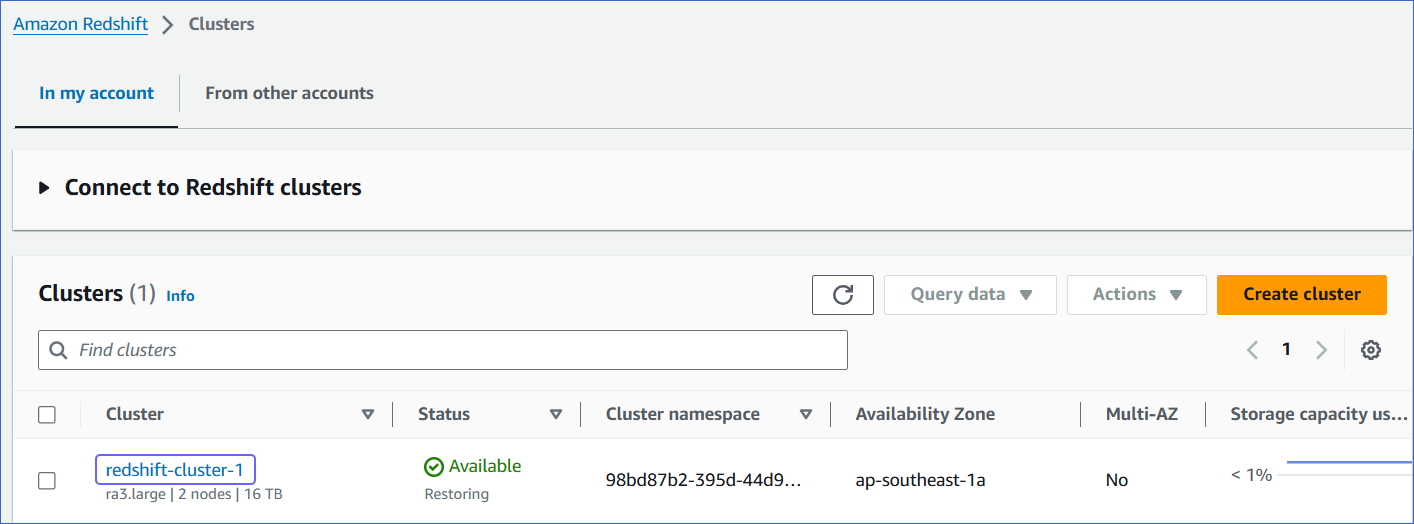

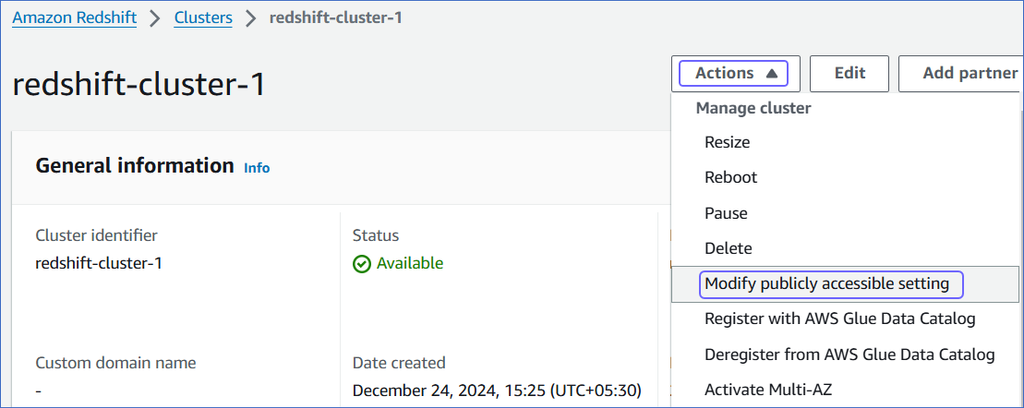

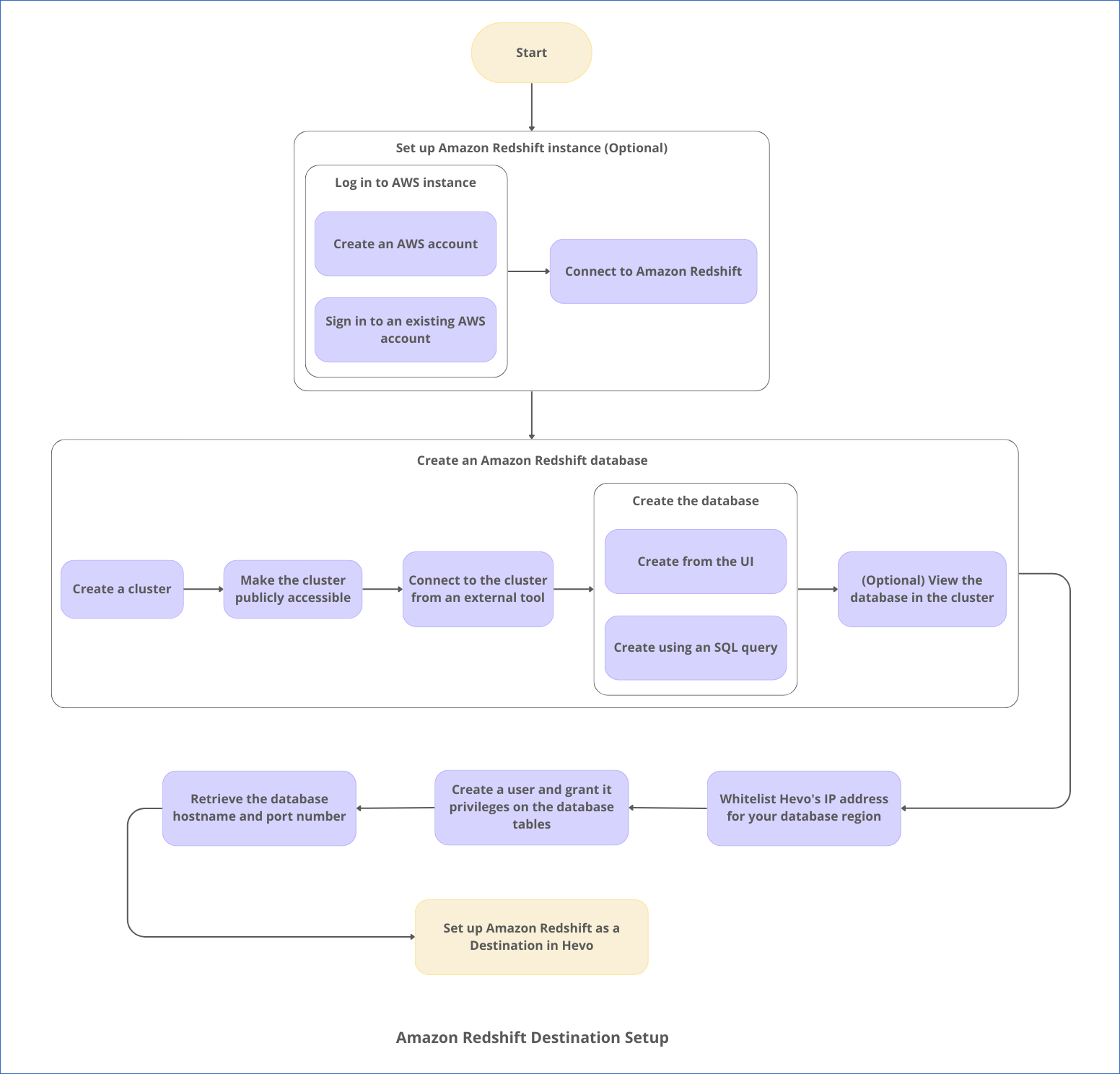

If you are new to AWS and Redshift, you can follow the steps listed below to create an AWS account and then create an Amazon Redshift database to which the Hevo Pipeline can load data. Alternatively, you can create users and assign them the required permissions to set up and manage databases within Amazon Redshift. Read AWS Identity and Access Management for more details.

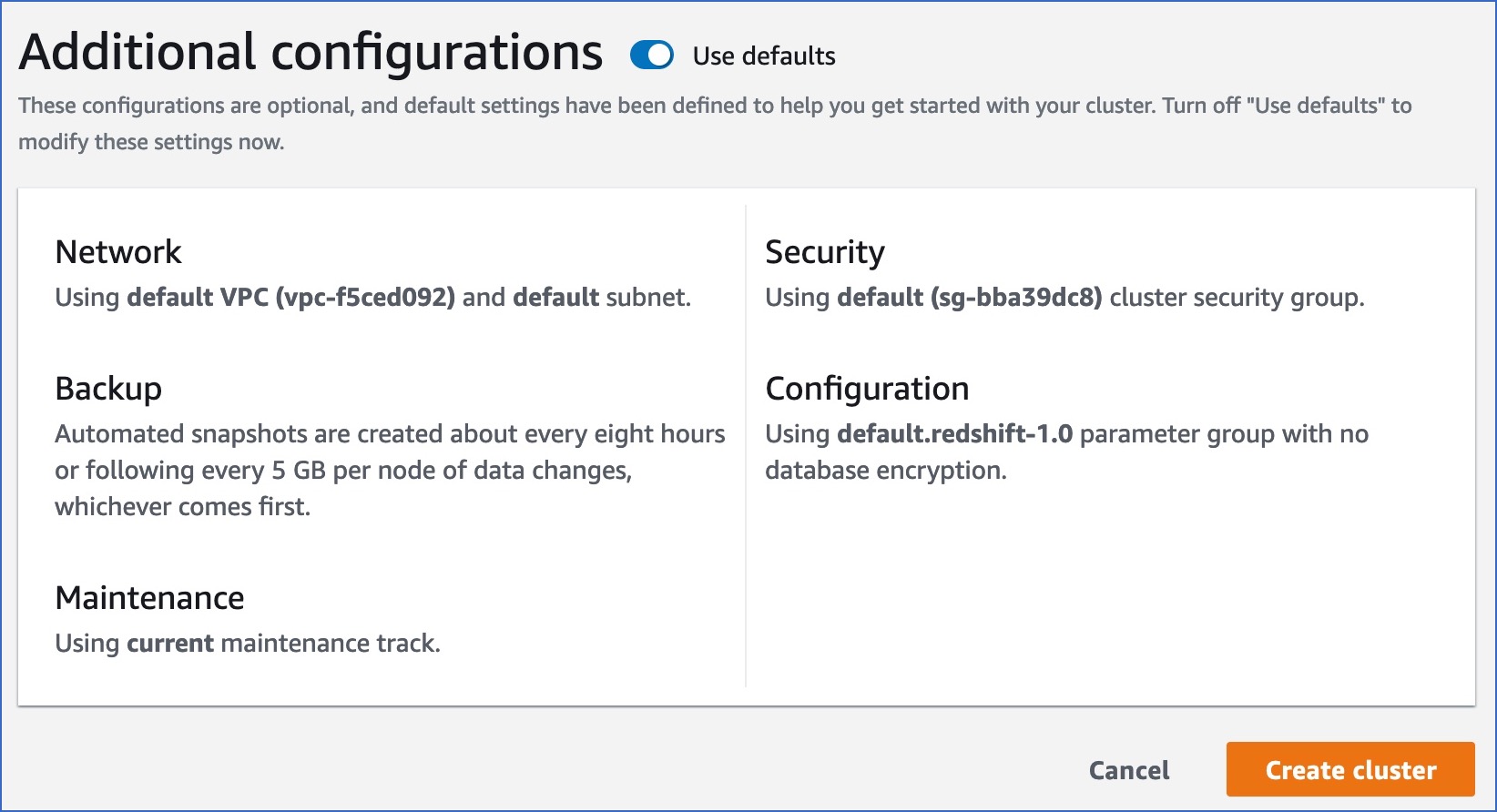

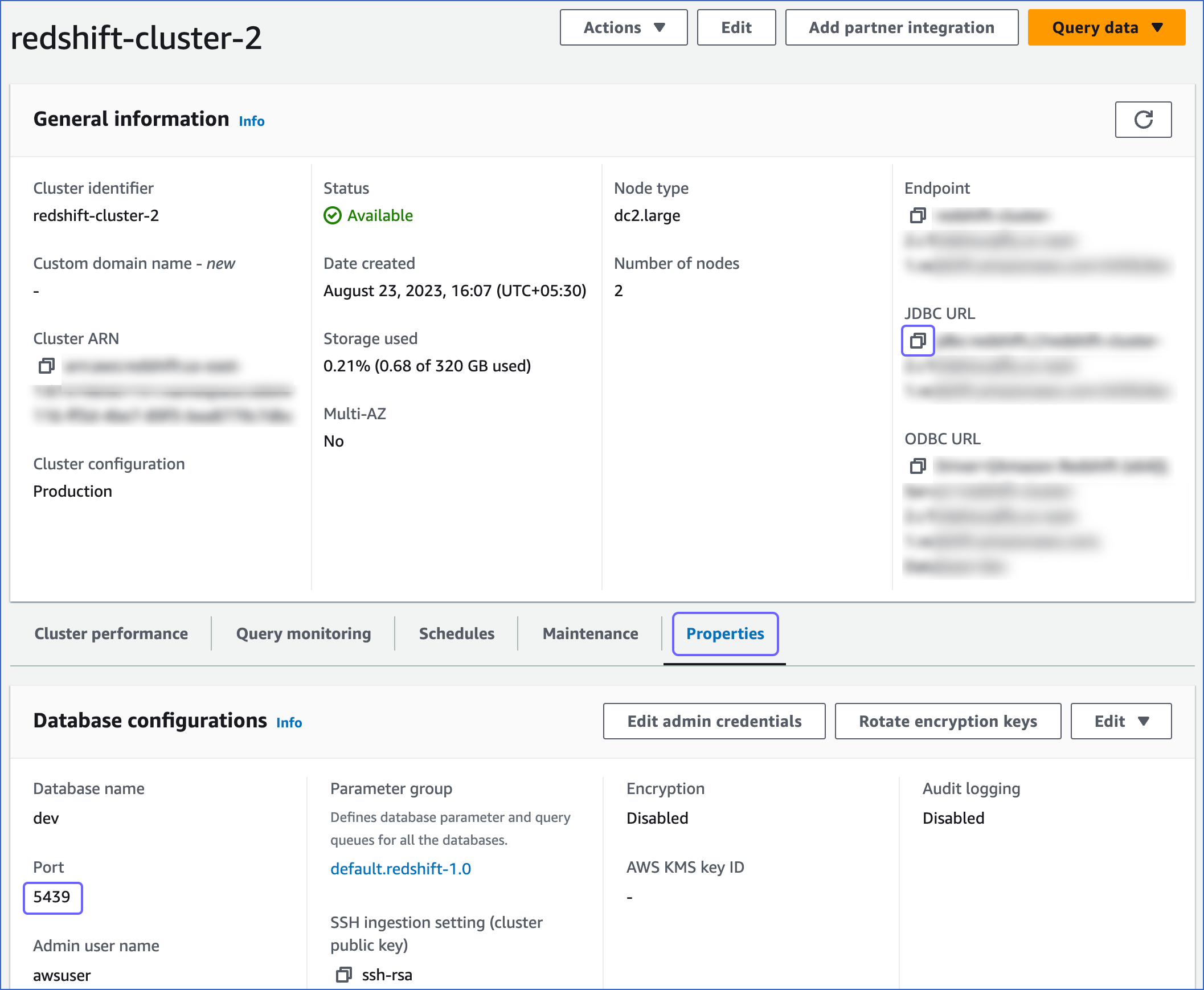

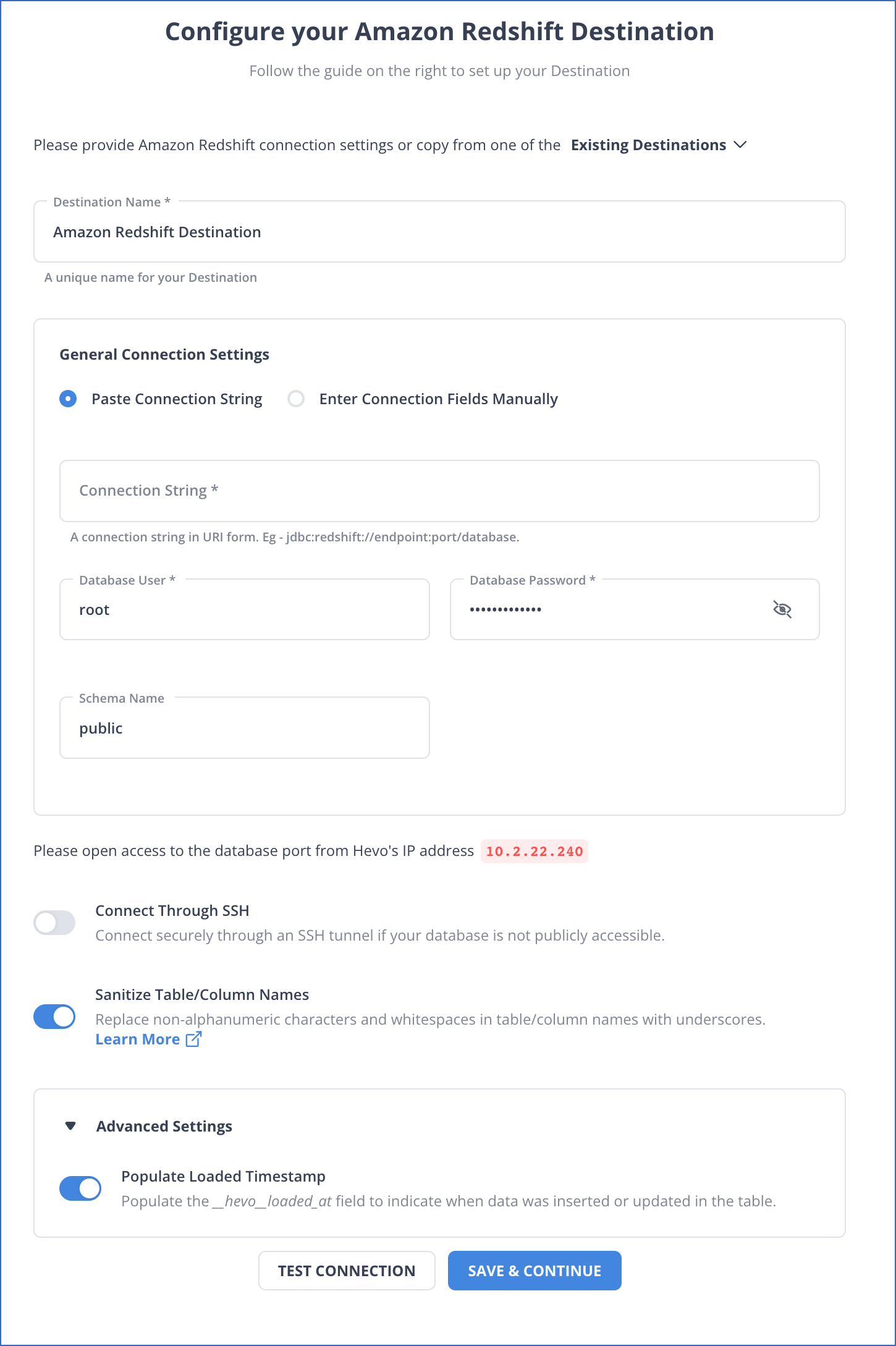

The following image illustrates the key steps that you need to complete to configure Amazon Redshift as a Destination in Hevo:

Handling Source Data with Different Data Types

For teams created in or after Hevo Release 1.60, Hevo automatically modifies the data type of an Amazon Redshift Destination table column to accommodate Source data with a different data type. Read Handling Different Data Types in Source Data.

Note: Your Hevo release version is mentioned at the bottom of the Navigation Bar.

Handling Source Data with JSON Fields

For Pipelines created in or after Hevo Release 1.74, Hevo uses Replicate JSON fields to JSON columns as the default parsing strategy to load the Source data to the Amazon Redshift Destination.

With the changed strategy, you can query your JSON data directly, eliminating the need to parse it as JSON strings. This change in strategy does not affect the functionality of your existing Pipelines. Therefore, if you want to apply the changed parsing strategy to your existing Pipelines, you need to recreate them.

In addition, two replication strategies have been deprecated for newer Pipelines and are no longer visible in the UI: Flatten structs and split arrays to new Events, and Replicate JSON fields as JSON strings and array fields as strings.

Read Parsing Nested JSON Fields in Events.
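When you query JSON columns directly, the case of the JSON keys matters. The following Python sketch does not connect to Amazon Redshift; it only simulates the behavior described under Destination Considerations, assuming that identifiers in a query path are folded to lowercase by default while JSON keys are matched exactly. The navigate function is hypothetical and exists only for illustration.

```python
from typing import Any, Optional


def navigate(record: dict[str, Any], path: str, case_sensitive: bool = False) -> Optional[Any]:
    """Follow a dotted path such as "Product.Name" through nested dicts.

    Simulation only: when case_sensitive is False, the path is lowercased
    first (mimicking default identifier folding), but the JSON keys in the
    record are still matched exactly.
    """
    if not case_sensitive:
        path = path.lower()
    current: Any = record
    for part in path.split("."):
        if not isinstance(current, dict) or part not in current:
            return None  # a missing attribute comes back as NULL
        current = current[part]
    return current


record = {"Product": {"Name": "Lamp"}, "ITEMS": [1, 2], "price": 10}
print(navigate(record, "Product.Name"))                       # lowercased path misses "Product"
print(navigate(record, "Product.Name", case_sensitive=True))  # exact match finds the value
```

In Amazon Redshift itself, the counterpart of passing case_sensitive=True is setting the enable_case_sensitive_identifier session parameter to TRUE.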

Destination Considerations

- Amazon Redshift supports a maximum length of 65,535 bytes for VARCHAR field values. As a result, if a Source object has any ARRAY, JSON, XML, STRING, or VARCHAR field values that exceed this limit, Hevo fails those Events.

- Amazon Redshift is case-insensitive to the names of database objects, including tables and columns. As a result, if your JSON field names are in mixed case or uppercase, such as Product or ITEMS, Amazon Redshift does not recognize them and cannot fetch data from them. To enable Amazon Redshift to identify such JSON field names, you must set the session parameter enable_case_sensitive_identifier to TRUE. Read SUPER configurations.

- Hevo stages the ingested data in an Amazon S3 bucket, from where it is loaded to the Destination tables using the COPY command. Enhanced VPC routing forces the COPY and UNLOAD traffic between your Amazon Redshift cluster and other resources in your AWS network, such as the S3 bucket, through your VPC. Hence, if you have enabled enhanced VPC routing, ensure that your VPC is configured to let the cluster reach the S3 bucket. Read Enhanced VPC Routing in Amazon Redshift.

- Hevo uses the Amazon Redshift COPY command to load data into the Destination tables. The COPY command supports a maximum row size of 4 MB, so Hevo cannot load a Source object that has a row larger than 4 MB. For example, a row containing 6 MB of VARBYTE data cannot be loaded, even though the VARBYTE data type supports up to 16 MB. To avoid this, ensure that each row in your Source objects contains less than 4 MB of data.

- Redshift may forcibly terminate a load operation that runs longer than the workload management (WLM) query timeout limits set for its queue. This typically happens due to high data volume, cluster load, or WLM constraints, and can occur even when Hevo initiates the data load correctly. Hevo automatically retries the load operation, and if a retry succeeds, the data is loaded as expected. However, if the underlying condition persists, the load can keep failing despite multiple retries.

  To reduce the risk of termination, review and optimize your Redshift WLM configuration:

  - Adjust queue settings such as memory allocation and concurrency levels.

  - Use manual WLM to prioritize critical load operations.

  - Implement Query Monitoring Rules (QMR) to detect and manage long-running or resource-intensive load operations, especially during peak usage periods.
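The VARCHAR and row-size limits above can be checked before the data reaches Hevo. The following Python sketch is a hypothetical pre-load check, not part of Hevo: the function names are illustrative, ARRAY and JSON values are sized by serializing them to compact JSON (an assumption about how they are rendered), and 4 MB is assumed to mean 4 * 1024 * 1024 bytes.

```python
import json
from typing import Any

# Limits described in the Destination Considerations.
MAX_VARCHAR_BYTES = 65_535       # VARCHAR limit is in bytes, not characters
MAX_ROW_BYTES = 4 * 1024 * 1024  # COPY maximum row size; assumes 4 MB = 4 MiB


def encoded_size(value: Any) -> int:
    """Estimate a value's size in UTF-8 bytes.

    Dicts and lists (JSON and ARRAY fields) are serialized to compact JSON
    first; this is an assumption, not Hevo's actual serialization.
    """
    if isinstance(value, (dict, list)):
        text = json.dumps(value, separators=(",", ":"), ensure_ascii=False)
    else:
        text = str(value)
    return len(text.encode("utf-8"))


def check_row(row: dict[str, Any]) -> list[str]:
    """Return the reasons a row would likely fail to load (empty list if none)."""
    problems: list[str] = []
    total = 0
    for field, value in row.items():
        size = encoded_size(value)
        total += size  # rough total; ignores delimiters and per-row overhead
        # ARRAY, JSON, XML, STRING, and VARCHAR values are subject to the limit;
        # here they are approximated as Python str, dict, and list values.
        if isinstance(value, (str, dict, list)) and size > MAX_VARCHAR_BYTES:
            problems.append(f"{field}: {size} bytes exceeds the {MAX_VARCHAR_BYTES}-byte VARCHAR limit")
    if total > MAX_ROW_BYTES:
        problems.append(f"row: {total} bytes exceeds the {MAX_ROW_BYTES}-byte COPY row limit")
    return problems
```

For example, check_row({"id": 1, "note": "é" * 40_000}) flags the note field: it has only 40,000 characters, but each "é" takes two bytes in UTF-8, so the value is 80,000 bytes.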

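As a starting point for reviewing your WLM setup, the following Python sketch builds a manual WLM configuration as a JSON string for the cluster's wlm_json_configuration parameter. It assumes that the Hevo database user belongs to a hypothetical user group named hevo_loaders; the concurrency, memory, and threshold values are placeholders to tune for your workload, not recommendations.

```python
import json

# Hypothetical user group that the Hevo database user belongs to.
LOADER_GROUP = "hevo_loaders"


def build_wlm_config(log_after_seconds: int) -> str:
    """Return a manual WLM configuration as a JSON string (illustrative values)."""
    queues = [
        {
            # Dedicated queue for load operations run by the loader group.
            "user_group": [LOADER_GROUP],
            "query_concurrency": 3,
            "memory_percent_to_use": 40,
            # Query monitoring rule: log, rather than abort, long-running queries.
            "rules": [
                {
                    "rule_name": "log_long_loads",
                    "predicate": [
                        {
                            "metric_name": "query_execution_time",  # seconds
                            "operator": ">",
                            "value": log_after_seconds,
                        }
                    ],
                    "action": "log",
                }
            ],
        },
        # Default queue for all other queries; memory shares add up to 100.
        {"query_concurrency": 5, "memory_percent_to_use": 60},
    ]
    return json.dumps(queues, indent=2)


print(build_wlm_config(log_after_seconds=1800))
```

The rule uses the log action so that long-running loads are recorded for review instead of being cancelled, since cancelling them would add to the terminations described above.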

Limitations

- Hevo replicates a maximum of 1,600 columns to each Amazon Redshift table. Read Limits on the Number of Destination Columns.

- Hevo does not support writing to tables that have IDENTITY columns.

  For example, suppose you create a table with a default IDENTITY column and manually map a Source table to it. When the Pipeline runs, Hevo issues insert queries to write all the values from the Source table to this table. However, these writes fail because Amazon Redshift does not permit writing values to the IDENTITY column.

- Hevo maps only JSON fields to the SUPER data type, which Amazon Redshift uses to support JSON columns. Read SUPER type.
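To catch these limitations before you manually map a Source table, you could run a check like the following Python sketch. The DestColumn data class, the check_mapping function, and Source type labels such as "JSON" are hypothetical stand-ins for however you describe your schemas, and fields are assumed to map to columns of the same name; the sketch only encodes the three limitations listed above.

```python
from dataclasses import dataclass

# Documented limit: Hevo replicates at most 1,600 columns to each Redshift table.
MAX_COLUMNS = 1600


@dataclass
class DestColumn:
    """Hypothetical description of one Destination table column."""
    name: str
    data_type: str  # Redshift type name, e.g. "BIGINT", "VARCHAR", "SUPER"
    is_identity: bool = False


def check_mapping(source_fields: dict[str, str], dest_columns: list[DestColumn]) -> list[str]:
    """Return problems for a Source-to-Destination mapping matched by column name.

    source_fields maps each Source field name to a hypothetical type label
    such as "JSON", "STRING", or "ARRAY".
    """
    problems: list[str] = []
    if len(source_fields) > MAX_COLUMNS:
        problems.append(f"{len(source_fields)} Source fields exceed the {MAX_COLUMNS}-column limit")
    # Hevo cannot write to a table that has any IDENTITY column.
    identity_cols = [c.name for c in dest_columns if c.is_identity]
    if identity_cols:
        problems.append(f"table has IDENTITY column(s) {identity_cols}; writes would fail")
    for col in dest_columns:
        source_type = source_fields.get(col.name)
        # Only JSON fields can be mapped to SUPER columns.
        if col.data_type.upper() == "SUPER" and source_type is not None and source_type.upper() != "JSON":
            problems.append(f"{col.name}: {source_type} field cannot be mapped to SUPER")
    return problems
```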

See Also

- Destination FAQs

- Enhanced VPC Routing in Amazon Redshift

- Loading Data to an Amazon Redshift Data Warehouse

- SUPER type

- SUPER configurations

Revision History

Refer to the following table for the list of key updates made to this page:

| Date | Release | Description of Change |
|---|---|---|
| Jul-14-2025 | NA | Updated the Destination Considerations section to add information about the size limit for the VARCHAR data type. |
| Jul-10-2025 | NA | Updated section, Destination Considerations to add information about the COPY command terminating due to WLM constraints. |
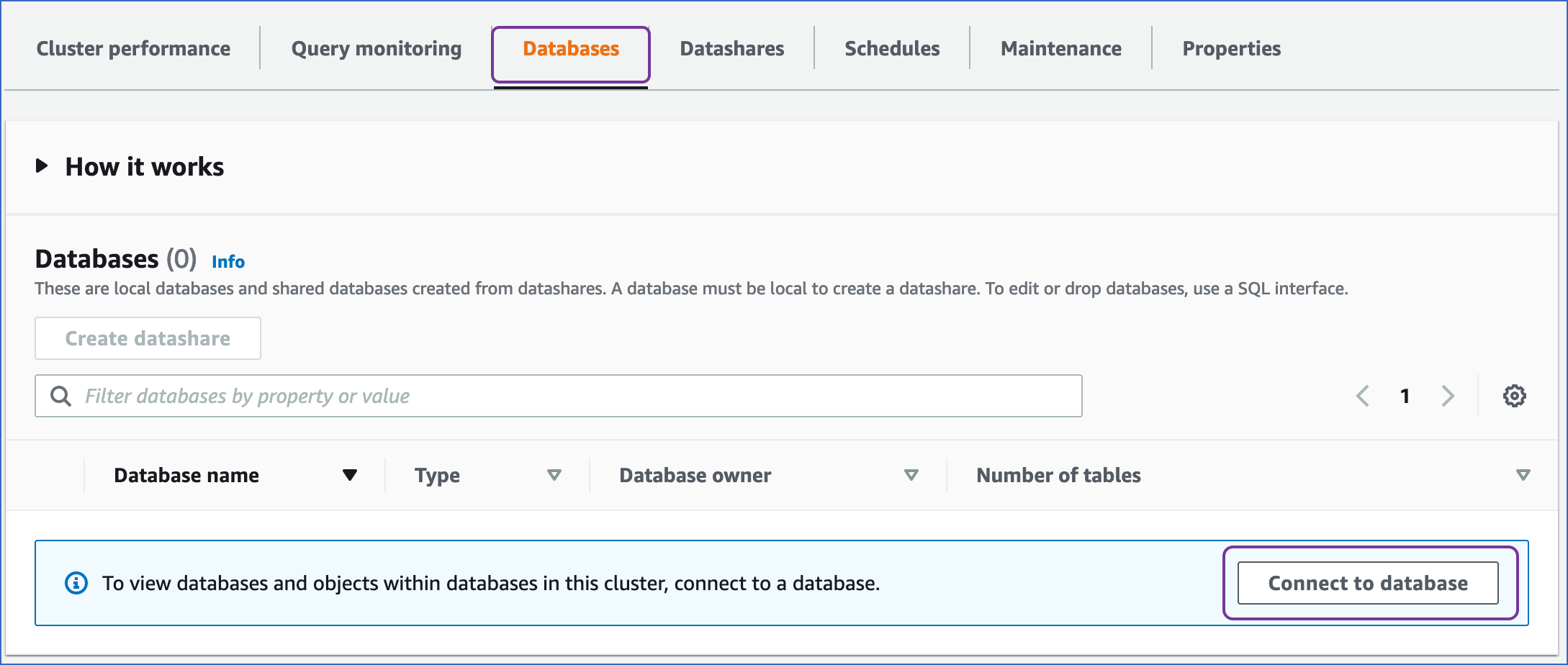

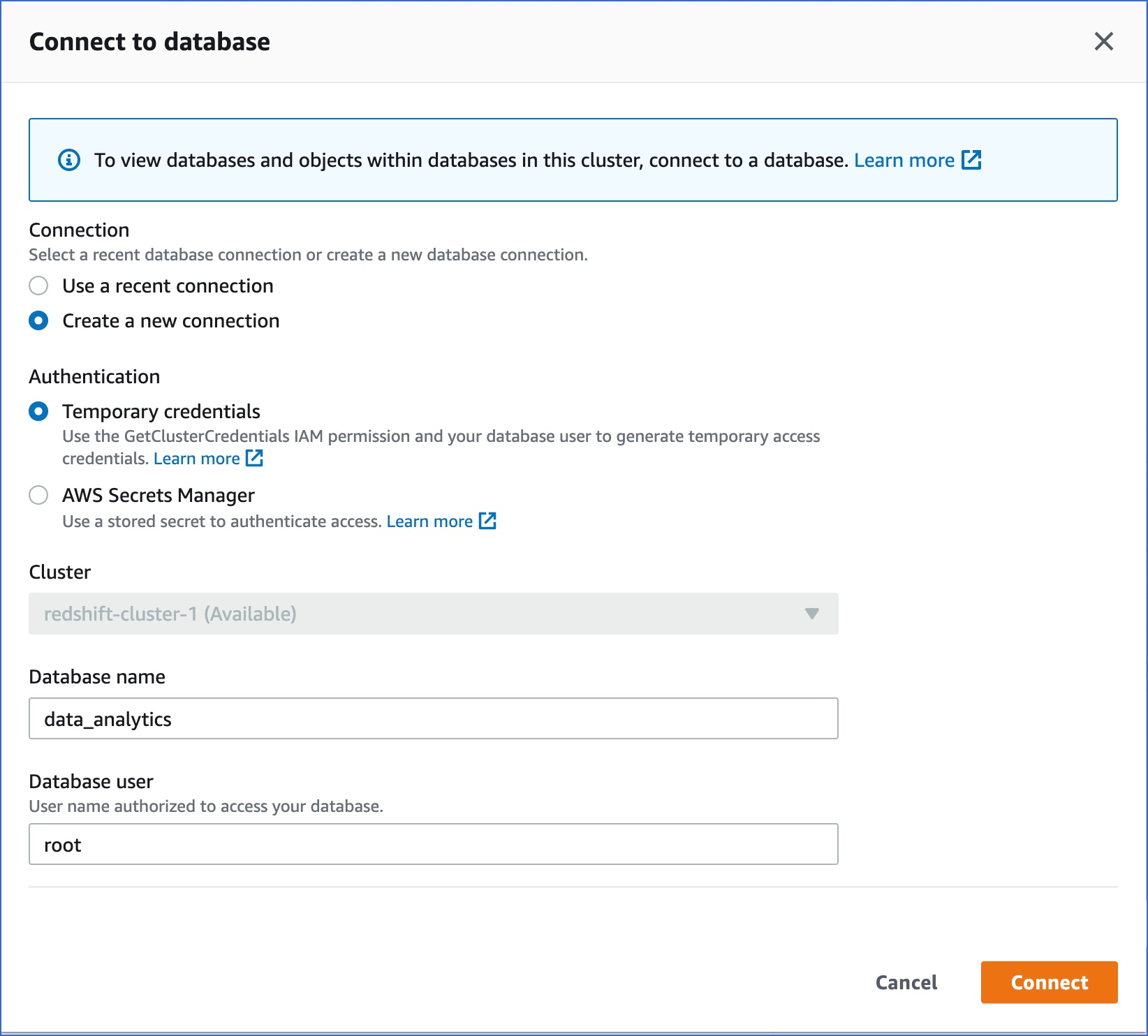

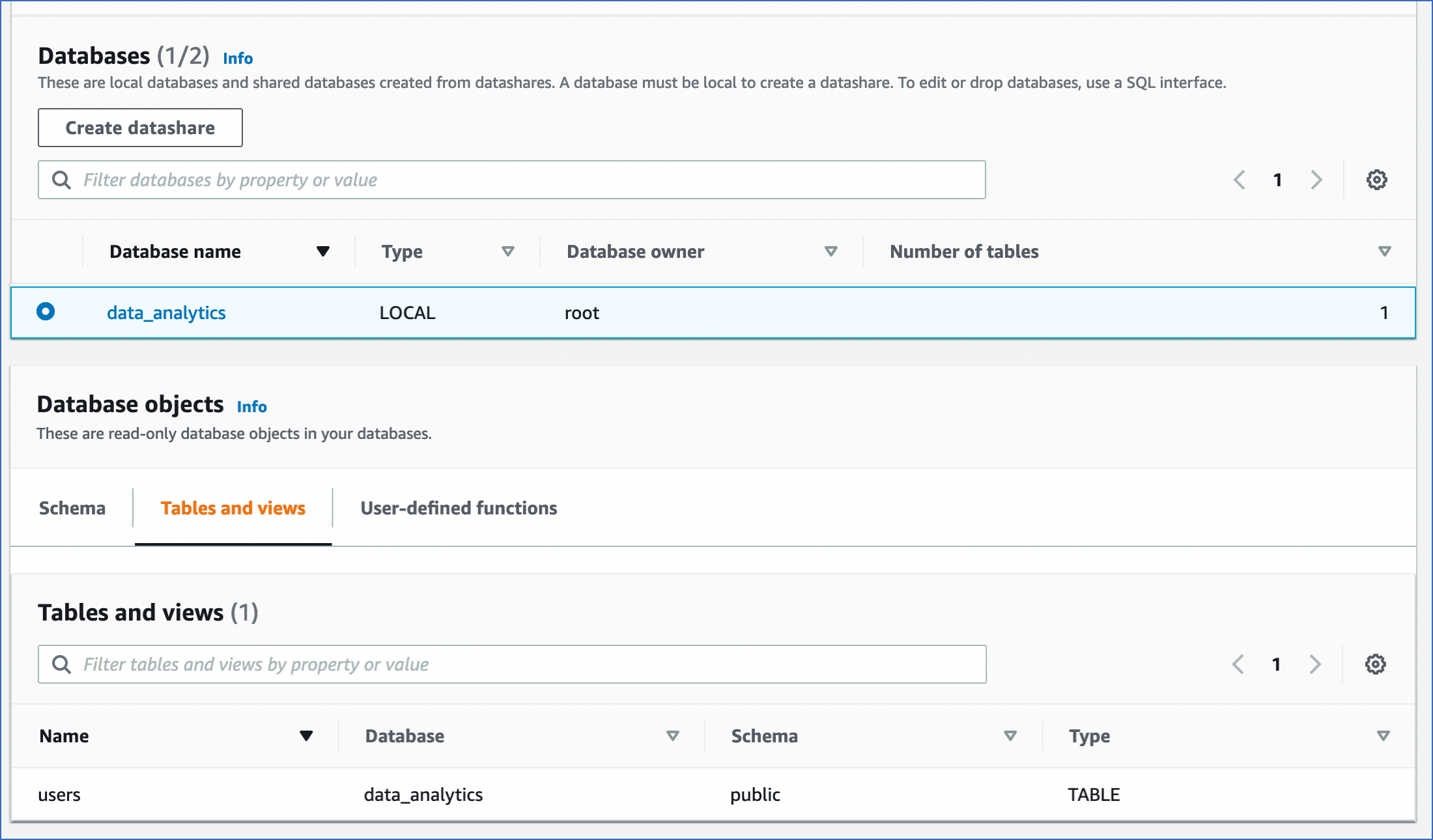

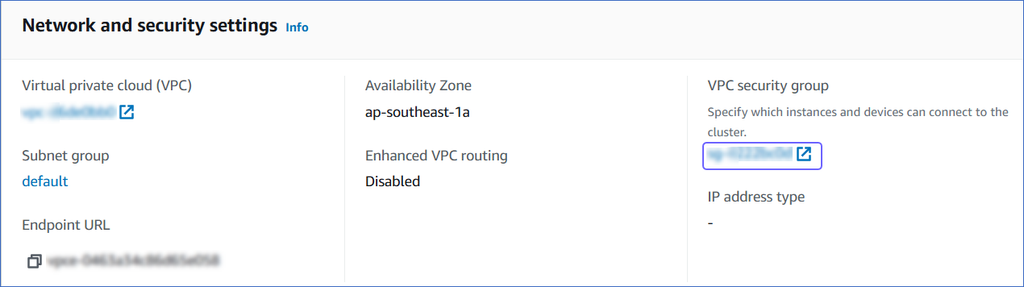

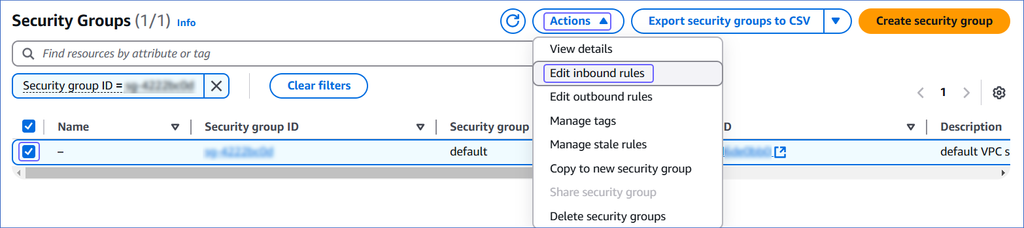

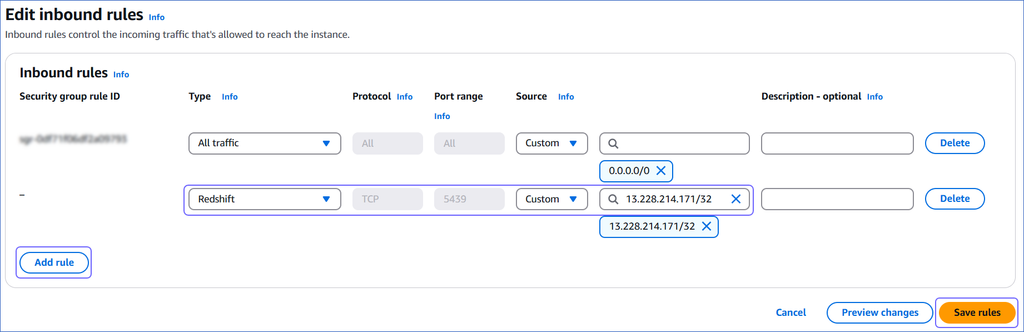

| Feb-24-2025 | NA | Updated sections, Create a Database (Optional), Retrieve the Hostname and Port Number (Optional), and Whitelist Hevo’s IP Addresses as per the latest Amazon Redshift UI. |
| Feb-18-2025 | NA | Updated the Destination Considerations section to remove the consideration about the SUPER data type and add the consideration about the COPY command. |
| Sep-02-2024 | NA | Updated section, Create a Database (Optional) as per the latest Amazon Redshift UI. |
| Sep-04-2023 | NA | Updated the page contents to reflect the latest Amazon Redshift user interface (UI). |
| Aug-11-2023 | NA | Fixed broken links. |
| Apr-25-2023 | 2.12 | Updated section, Configure Amazon Redshift as a Destination to add information that you must specify all fields to create a Pipeline. |
| Feb-20-2023 | 2.08 | Updated section, Configure Amazon Redshift as a Destination to add steps for using the connection string to automatically fetch the database credentials. |
| Oct-10-2022 | NA | Added sections, Set up an Amazon Redshift Instance and Create a Database. |
| Sep-21-2022 | NA | Added a note in section, Configure Amazon Redshift as a Destination. |
| Mar-07-2022 | NA | Updated the section, Destination Considerations for actions to be taken when Enhanced VPC Routing is enabled. |
| Feb-07-2022 | 1.81 | Updated section, Whitelist Hevo’s IP Address to remove details about Outbound rules as they are not required. |
| Nov-09-2021 | NA | Updated section, Step 2. Create a Database User and Grant Privileges, with the list of commands to be run for granting privileges to the user. |
| Oct-25-2021 | 1.74 | Added sections, Handling Source Data with JSON Fields and Destination Considerations. Updated sections, Limitations, to add the limitation about Hevo mapping only JSON fields, and See Also. |
| Apr-06-2021 | 1.60 | Added section, Handling Source Data with Different Data Types. |
| Feb-22-2021 | NA | Added the limitation that Hevo does not support writing to tables that have identity columns. Updated the page overview to state that the Pipeline stages the ingested data in Hevo’s S3 bucket, from where it is finally loaded to the Destination. Revised the procedural sections to include detailed steps for configuring the Amazon Redshift Destination. |