Azure MySQL Database is a fully managed database service from Microsoft that is easy to set up, operate, and scale. It automates database management and maintenance, including routine updates, backups, and security, so that you can focus on working with your data.

You can ingest data from your Azure MySQL database using Hevo Pipelines and replicate it to a Destination of your choice.

Prerequisites

Perform the following steps to configure your Azure MySQL Source:

Create a Read Replica (Optional)

To use an existing read-replica or to connect Hevo to your master database, skip to the Set up MySQL Binary Logs for Replication section.

Note: To create an Azure MySQL read-replica instance, your master instance must be a Flexible Server.

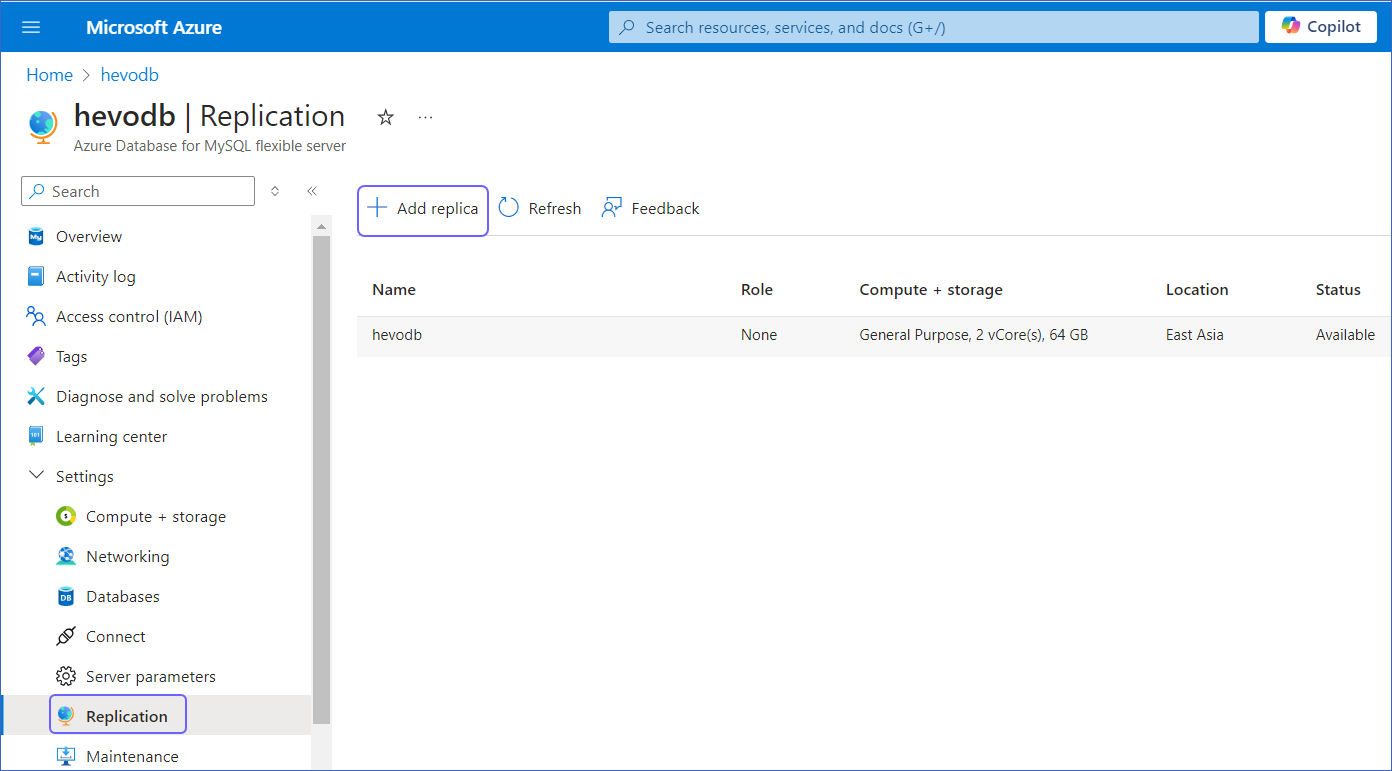

To create a read-replica:

-

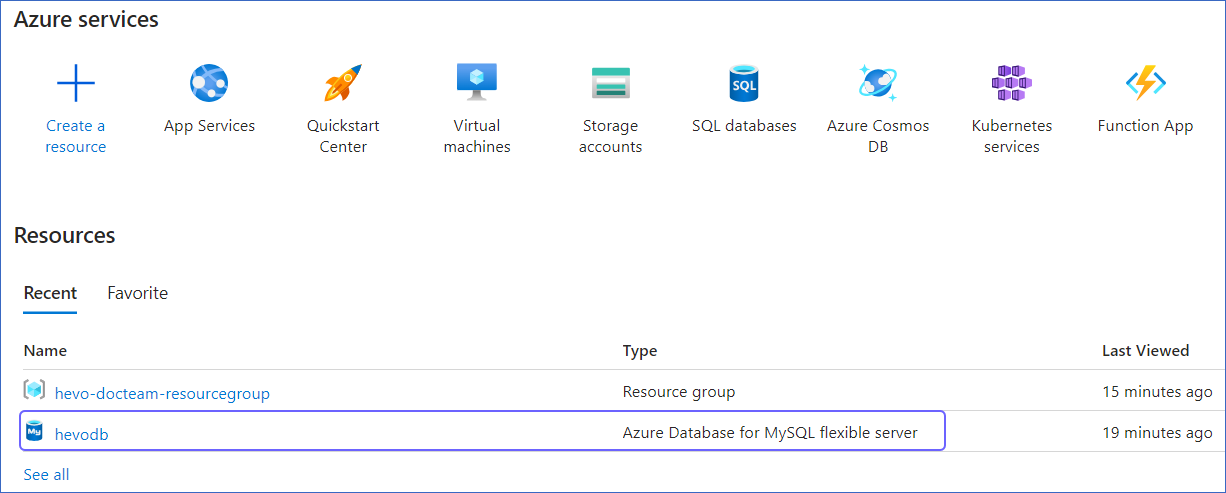

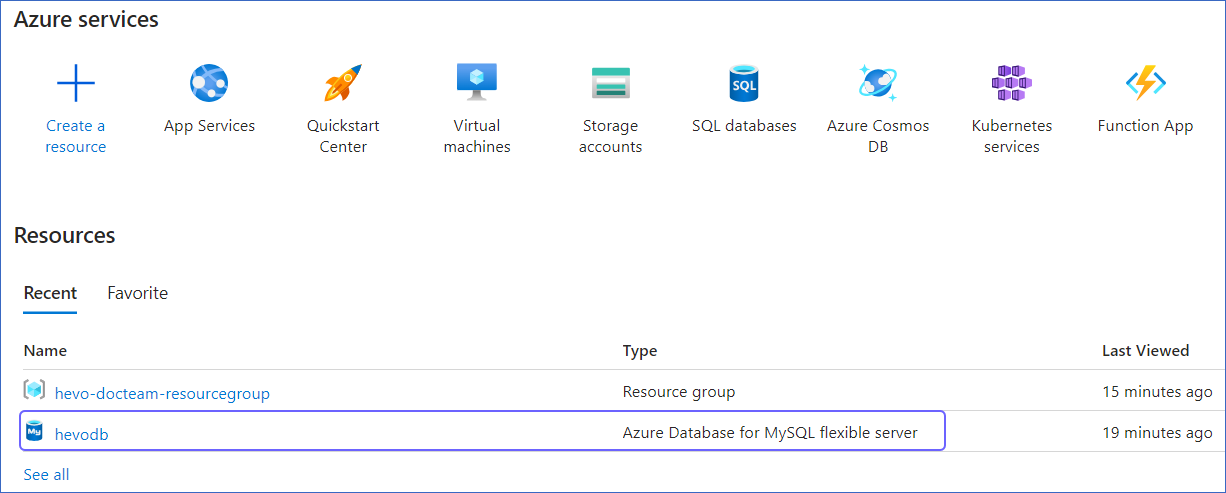

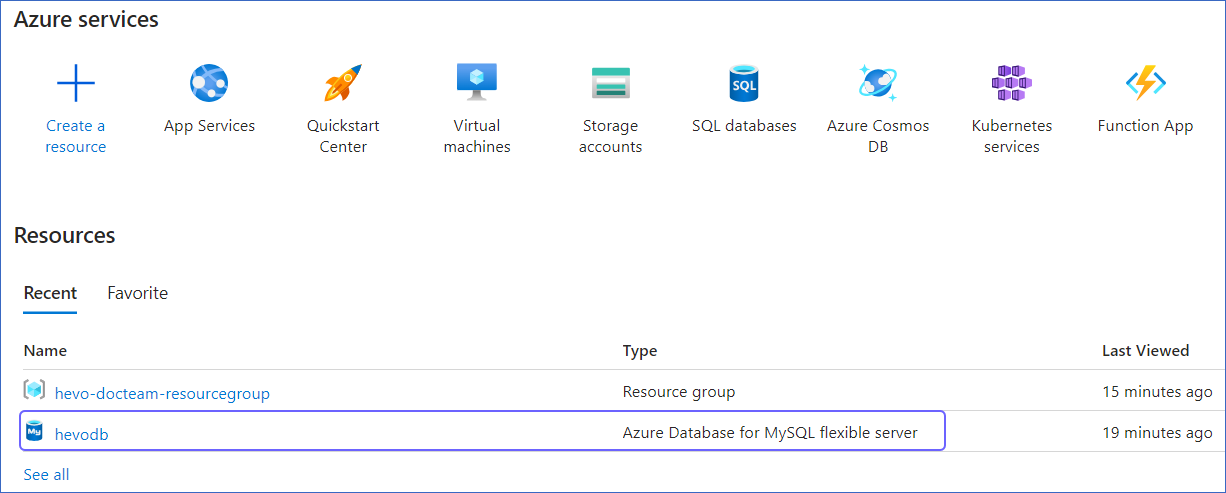

Log in to the Azure Portal.

-

Under Resources, Recent tab, select the database for which you want to create a read-replica.

-

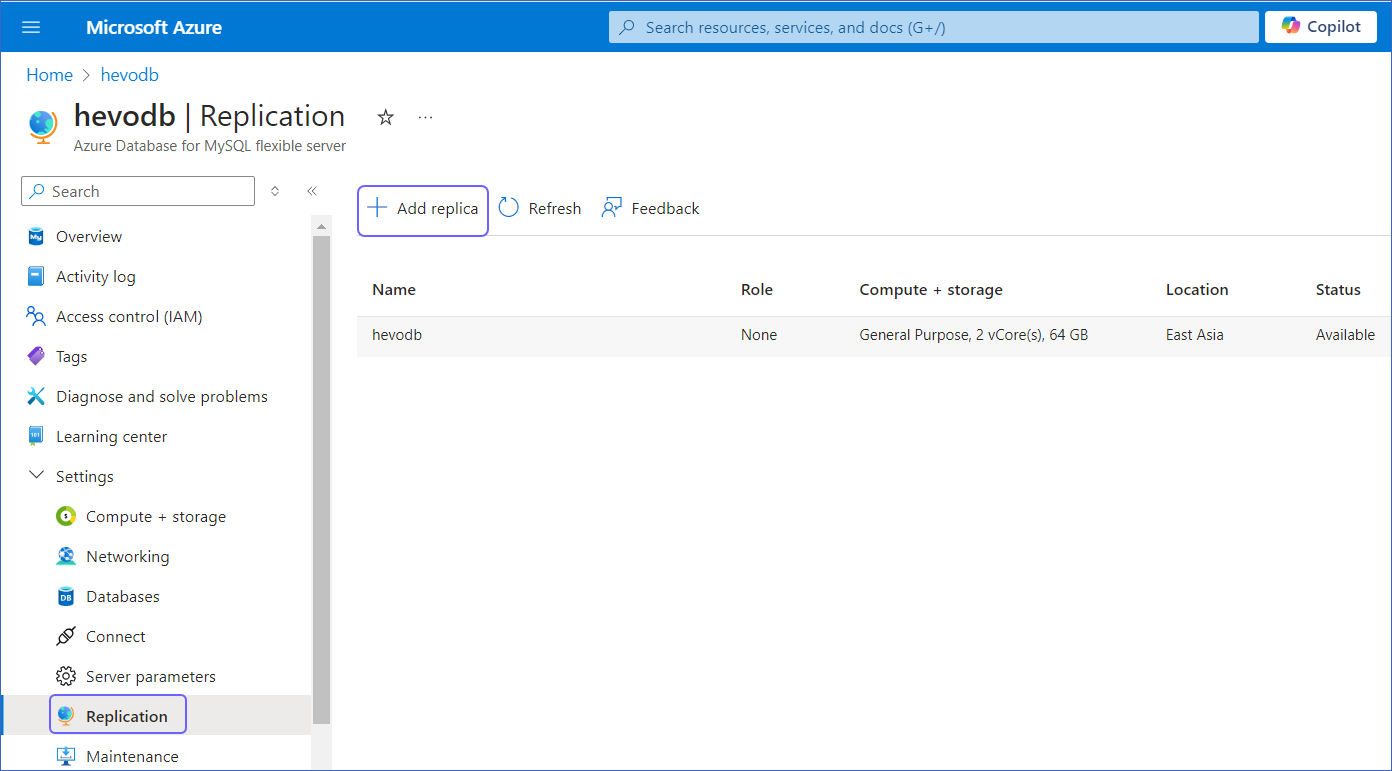

In the left navigation pane, under Settings, click Replication, and then click + Add Replica.

-

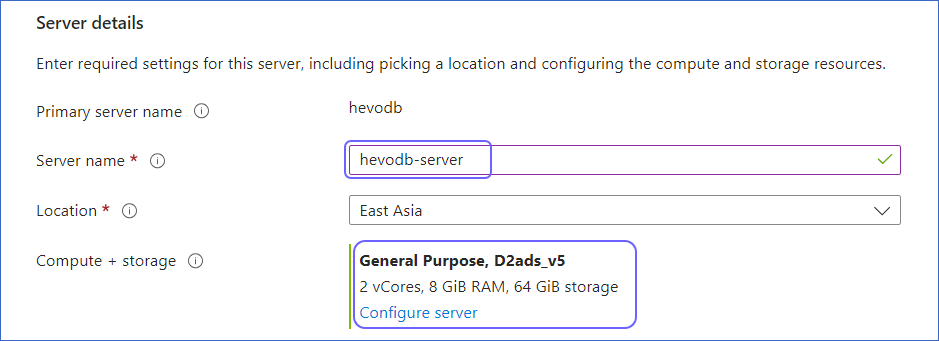

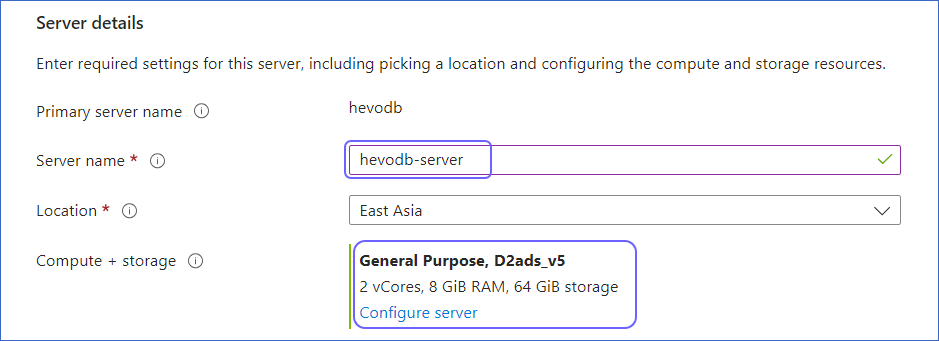

In the Server details section, specify the Server name and the Compute + Storage, and then click Review + Create to review your configuration.

-

Click Create to create a read-replica.

A notification is displayed to confirm that the read-replica was created successfully.

Set up MySQL Binary Logs for Replication

Hevo supports data ingestion from the MySQL database instance via binary logs (BinLog). A binary log is a collection of log files that records information about data modifications and data object modifications made on a MySQL database instance. Typically, binary logs are used for data replication and data recovery.

By default, Azure MySQL uses row-based BinLog replication. To configure it to capture the entire row data, do the following:

-

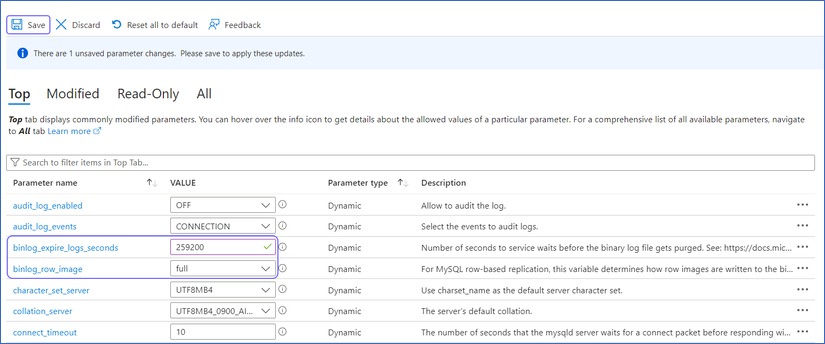

Access your Microsoft Azure MySQL instance.

-

Under Resources, Recent tab, select the database you want to synchronize with Hevo.

-

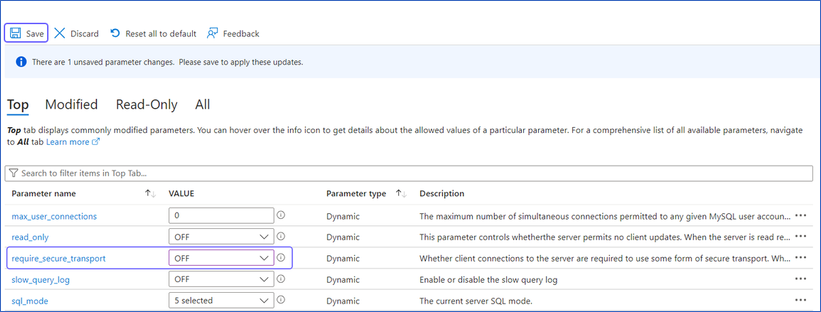

In the left navigation pane, under Settings, click Server Parameters.

-

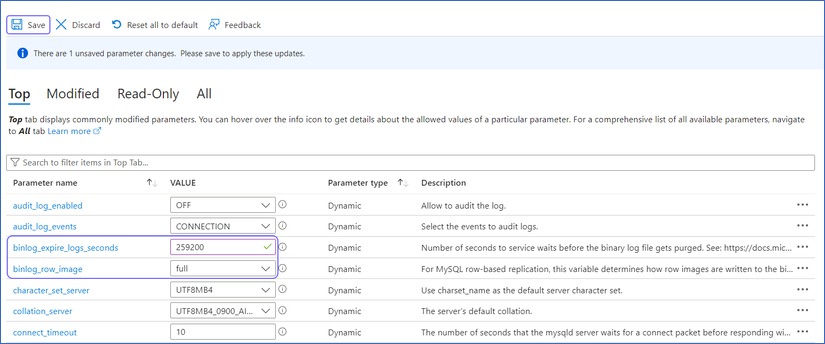

Under the Top tab, update the values of the parameters as follows:

| Parameter Name |

Value |

binlog_row_image |

full |

binlog_expire_logs_seconds |

A value greater than or equal to 259200 (three days). |

-

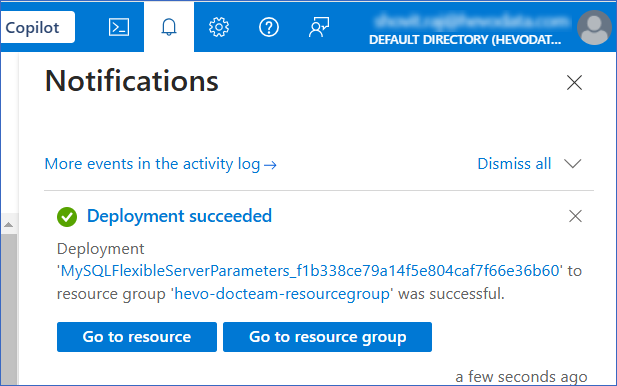

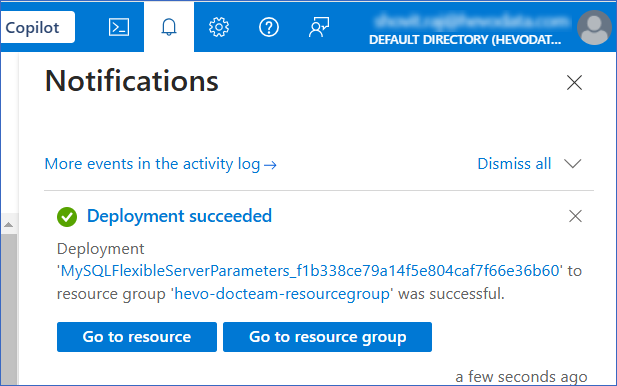

Click Save.

-

Confirm under Notifications that your changes have been applied and the instance has restarted successfully before running the Pipeline, to avoid errors.

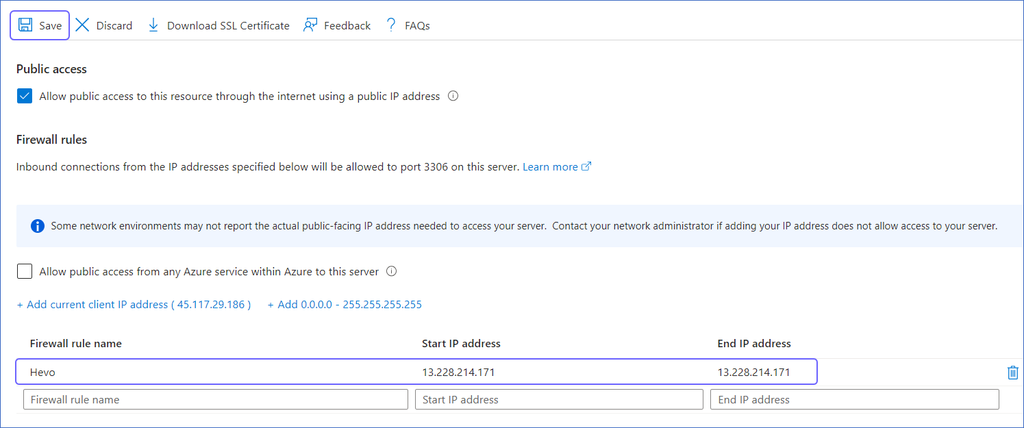
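After the instance restarts, you can also confirm the values from your SQL client. The following Python sketch is one way to check them. It assumes you have already fetched the rows returned by `SHOW VARIABLES WHERE Variable_name IN ('binlog_row_image', 'binlog_expire_logs_seconds');` with a MySQL driver of your choice; the function name `check_binlog_settings` is hypothetical, and only the two required values come from this page.

```python
# Required values taken from the Server Parameters table above.
REQUIRED_ROW_IMAGE = "FULL"
MIN_EXPIRE_SECONDS = 259200  # three days


def _text(value):
    """Decode bytes, since some drivers return raw column values."""
    return value.decode() if isinstance(value, (bytes, bytearray)) else str(value)


def check_binlog_settings(rows):
    """Return a list of problems found in (Variable_name, Value) rows.

    An empty list means both parameters meet the values listed above.
    """
    settings = {_text(name).lower(): _text(value) for name, value in rows}
    problems = []

    # Compare case-insensitively: this page lists "full", MySQL may report "FULL".
    row_image = settings.get("binlog_row_image")
    if row_image is None or row_image.upper() != REQUIRED_ROW_IMAGE:
        problems.append(f"binlog_row_image is {row_image!r}, expected {REQUIRED_ROW_IMAGE!r}")

    expire = settings.get("binlog_expire_logs_seconds")
    if expire is None or not expire.isdigit() or int(expire) < MIN_EXPIRE_SECONDS:
        problems.append(
            f"binlog_expire_logs_seconds is {expire!r}, expected >= {MIN_EXPIRE_SECONDS}"
        )
    return problems


if __name__ == "__main__":
    # Example rows, shaped like the output of a DB-API cursor.fetchall().
    sample = [("binlog_row_image", "FULL"), ("binlog_expire_logs_seconds", "259200")]
    print(check_binlog_settings(sample) or "BinLog settings look OK")
```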

Whitelist Hevo’s IP Addresses

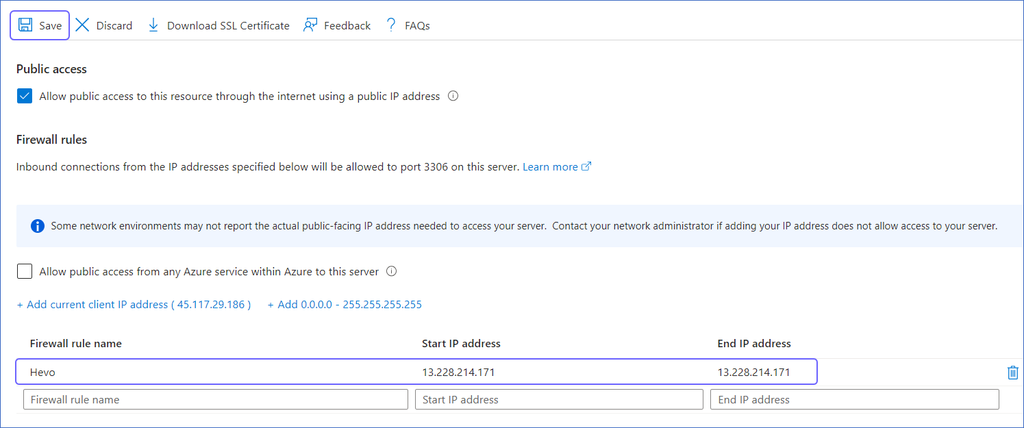

You need to whitelist the Hevo IP addresses for your region to enable Hevo to connect to your Microsoft Azure MySQL database. To do this, create firewall rules in your Microsoft Azure database settings as follows:

- Access your Microsoft Azure MySQL instance.

- Under Resources, on the Recent tab, select the database you want to synchronize with Hevo.

- In the left navigation pane, under Settings, click Networking.

- Create a firewall rule:

  - Specify a Firewall rule name.

  - Specify Hevo’s IP address for your region in the Start IP address and End IP address fields, and then click Save to save the rule.

    Note: Because Hevo uses specific IP addresses rather than a range, the Start IP address and End IP address fields contain the same value.

- Repeat the firewall rule step to add the IP address for each applicable Hevo region.
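If you manage rules for several regions, a small script can help keep them consistent. The following Python sketch builds one rule per Hevo IP address, with identical start and end addresses. The rule-name format and the dictionary keys are hypothetical, and the addresses in the example are reserved documentation addresses (RFC 5737), not Hevo's; replace them with the IP addresses listed for your Hevo region.

```python
import ipaddress


def build_firewall_rules(ip_addresses, prefix="hevo"):
    """Return one single-address firewall rule per Hevo IP address.

    Start and end addresses are identical because Hevo connects from
    specific IP addresses rather than a range. Duplicates are skipped.
    """
    rules = []
    seen = set()
    for raw in ip_addresses:
        ip = ipaddress.IPv4Address(raw.strip())  # raises ValueError for invalid input
        if ip in seen:
            continue
        seen.add(ip)
        rules.append({
            # Hypothetical naming scheme: prefix plus the address with dots replaced.
            "name": f"{prefix}-{str(ip).replace('.', '-')}",
            "start_ip_address": str(ip),
            "end_ip_address": str(ip),
        })
    return rules


if __name__ == "__main__":
    # Placeholder documentation addresses; use the Hevo IPs for your region instead.
    for rule in build_firewall_rules(["203.0.113.10", "198.51.100.7"]):
        print(rule)
```

Each dictionary maps to one rule in the Networking page: the name goes in Firewall rule name, and the address goes in both IP address fields.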

Create a Database User and Grant Privileges

1. Create a database user (Optional)

Perform the following steps to create a database user in your Azure MySQL database:

- Connect to your Azure MySQL database as an admin user with an SQL client tool, such as MySQL Workbench.

- Based on your MySQL database version, run one of the following commands to create a database user:

  - Versions 5.6 up to, but not including, 8.0:

    CREATE USER '<database_username>'@'%' IDENTIFIED BY '<password>';

  - Versions 8.0 up to 8.4:

    CREATE USER '<database_username>'@'%' IDENTIFIED WITH mysql_native_password BY '<password>';

Note:

- Replace the placeholder values in the commands above with your own. For example, replace <database_username> with hevouser.

- For versions 9 and above, MySQL does not allow adding new users with the mysql_native_password plugin; you can only alter existing users. Read Limitations for more information.
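The version split above can be expressed as a small helper. This Python sketch, with the hypothetical function `create_user_sql`, returns the statement to run for a given `(major, minor)` server version. It only generates text, which you then run from your SQL client as an admin user, and it assumes the default `sql_mode` (without `NO_BACKSLASH_ESCAPES`) when quoting the password.

```python
import re

# Assumption: restrict usernames to simple identifiers so they can be quoted safely.
_SIMPLE_NAME = re.compile(r"^[A-Za-z0-9_]+$")


def _quote_literal(text):
    """Quote a MySQL string literal, assuming the default sql_mode.

    With NO_BACKSLASH_ESCAPES enabled, backslashes would not act as escapes.
    """
    return "'" + text.replace("\\", "\\\\").replace("'", "\\'") + "'"


def create_user_sql(version, username, password):
    """Return the CREATE USER statement for a (major, minor) MySQL version."""
    if not _SIMPLE_NAME.match(username):
        raise ValueError("username must contain only letters, digits, or underscores")
    if version < (5, 6):
        raise ValueError("versions earlier than 5.6 are not covered here")
    if version >= (9, 0):
        raise ValueError("MySQL 9 and above do not allow new mysql_native_password users")
    account = f"'{username}'@'%'"
    if version >= (8, 0):
        # 8.0 up to 8.4: name the plugin explicitly, because Hevo does not
        # support the caching_sha2_password default (8.0.4 and later).
        return (f"CREATE USER {account} IDENTIFIED WITH mysql_native_password "
                f"BY {_quote_literal(password)};")
    # 5.6 up to, but not including, 8.0: no plugin clause is needed.
    return f"CREATE USER {account} IDENTIFIED BY {_quote_literal(password)};"


if __name__ == "__main__":
    print(create_user_sql((8, 0), "hevouser", "<password>"))
```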

2. Grant privileges to the user

The database user specified in the Hevo Pipeline must have the following global privileges: SELECT, REPLICATION CLIENT, and REPLICATION SLAVE.

Perform the following steps to set up these privileges:

- Connect to your Azure MySQL database as an admin user with an SQL client tool, such as MySQL Workbench.

- Grant the SELECT and replication privileges to the user:

  GRANT SELECT, REPLICATION CLIENT, REPLICATION SLAVE ON *.* TO '<database_username>'@'%';

- (Optional) View the grants for the user:

  SHOW GRANTS FOR '<database_username>'@'%';

Note:

- Replace the placeholder values in the commands above with your own. For example, replace <database_username> with hevouser.

- The REPLICATION SLAVE privilege is required only if you connect to a read replica. When this privilege is granted to the authenticating user, the replica logs any updates it receives from the main database and maintains a record of those changes in its log.

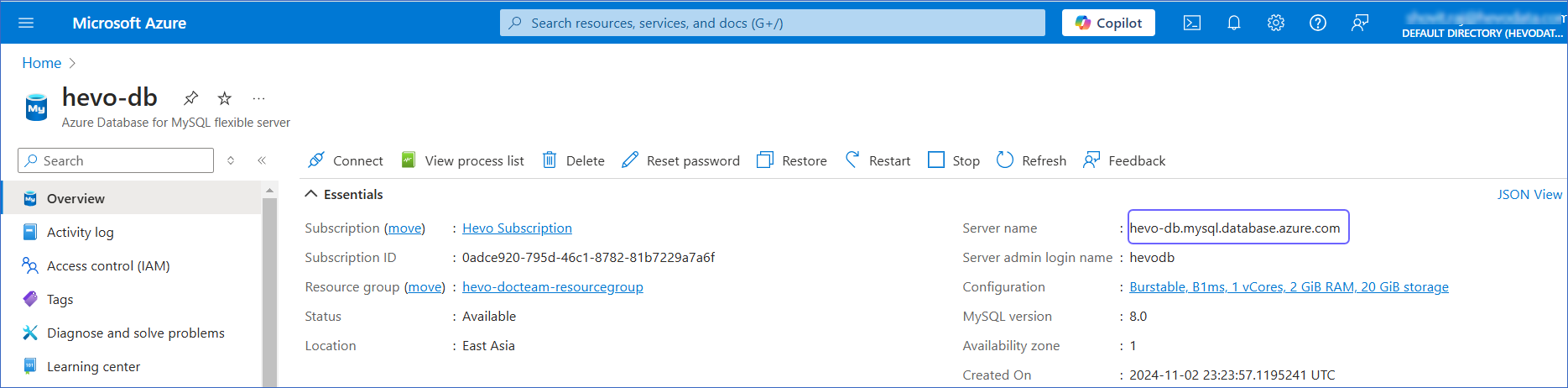

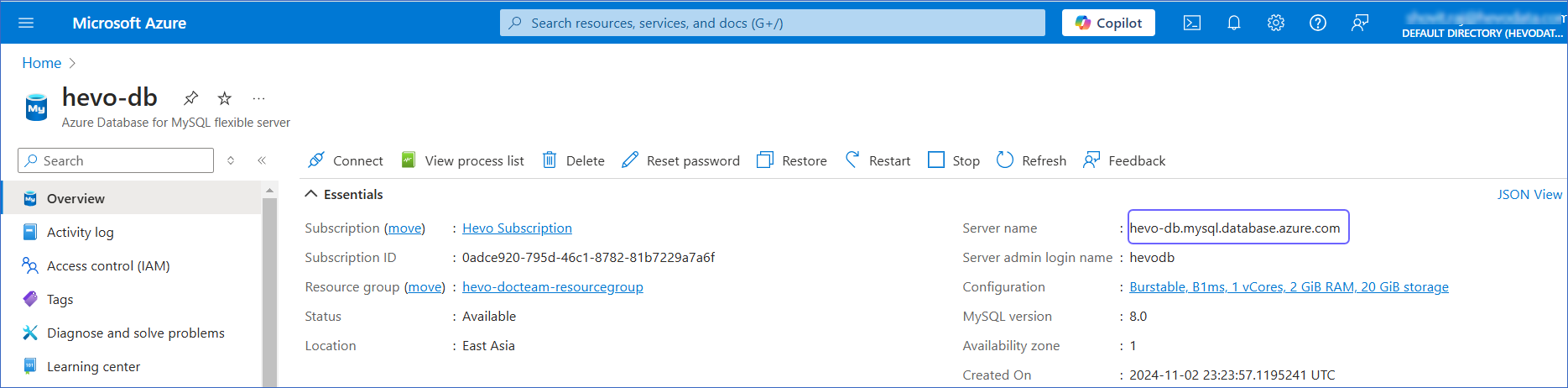
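To check the output of SHOW GRANTS programmatically, the following Python sketch reports which of the required global privileges are missing. It assumes each global grant line starts with GRANT and contains ON *.* TO, as MySQL prints them. The function name is hypothetical, and treating REPLICATION REPLICA as an alias of REPLICATION SLAVE is an assumption about newer MySQL releases.

```python
import re

# Privileges this page asks you to grant. Pass a smaller set as `required`
# if you do not connect to a read replica and skip REPLICATION SLAVE.
REQUIRED_PRIVILEGES = frozenset({"SELECT", "REPLICATION CLIENT", "REPLICATION SLAVE"})

# Assumption: newer MySQL releases also accept REPLICATION REPLICA as an
# alias for REPLICATION SLAVE, so treat the two as equivalent.
_ALIASES = {"REPLICATION REPLICA": "REPLICATION SLAVE"}

# Matches global grants, for example: GRANT SELECT, ... ON *.* TO ...
_GLOBAL_GRANT = re.compile(r"^GRANT (.+?) ON \*\.\* TO ", re.IGNORECASE)


def missing_privileges(grant_lines, required=REQUIRED_PRIVILEGES):
    """Return the required global privileges absent from SHOW GRANTS output, sorted."""
    granted = set()
    for line in grant_lines:
        match = _GLOBAL_GRANT.match(line.strip())
        if not match:
            continue  # database- or table-level grants do not satisfy *.* privileges
        for privilege in match.group(1).split(","):
            name = " ".join(privilege.split()).upper()  # normalize spacing and case
            granted.add(_ALIASES.get(name, name))
    if "ALL PRIVILEGES" in granted or "ALL" in granted:
        return []
    return sorted(set(required) - granted)


if __name__ == "__main__":
    lines = ["GRANT SELECT, REPLICATION CLIENT ON *.* TO `hevouser`@`%`"]
    print(missing_privileges(lines) or "All required privileges are granted")
```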

Retrieve the Hostname and Port Number (Optional)

Note: Azure MySQL hostnames start with your server name and end with mysql.database.azure.com.

For example:

Host: <server_name>.mysql.database.azure.com

Port: 3306

To retrieve the hostname:

-

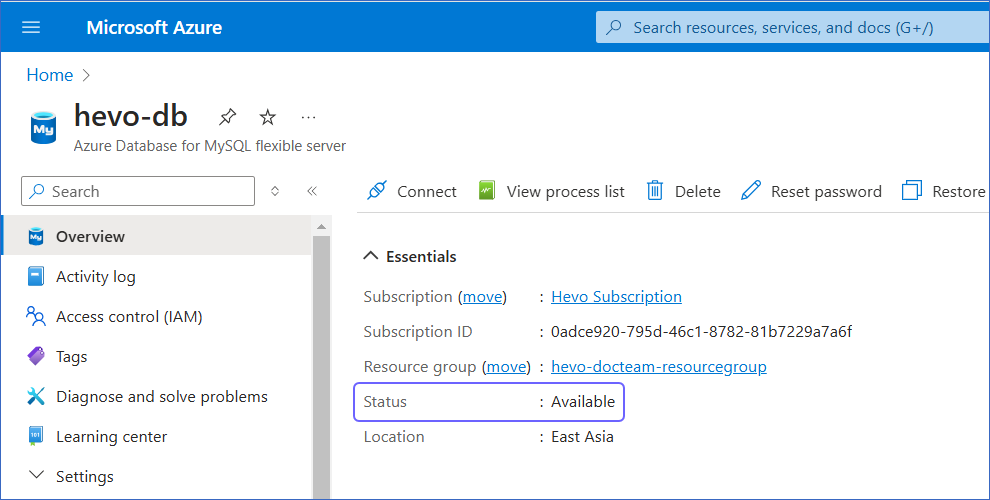

Log in to the Microsoft Azure Portal.

-

Under Resources, Recent tab select your Azure database for MySQL server.

-

Under Essentials panel, locate the Server name. Use this Server name as the hostname in Hevo while creating your Pipeline.

The default port is 3306.

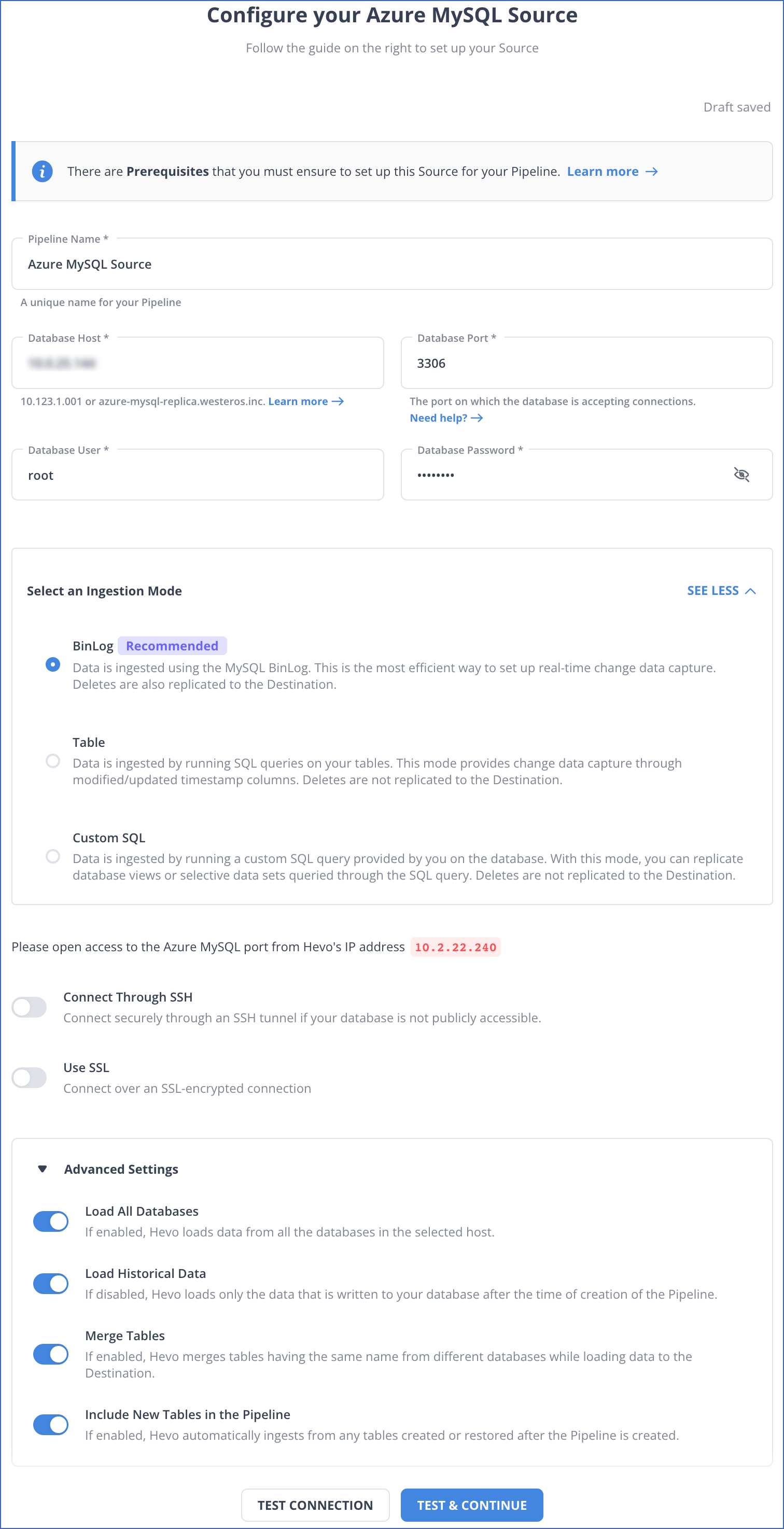

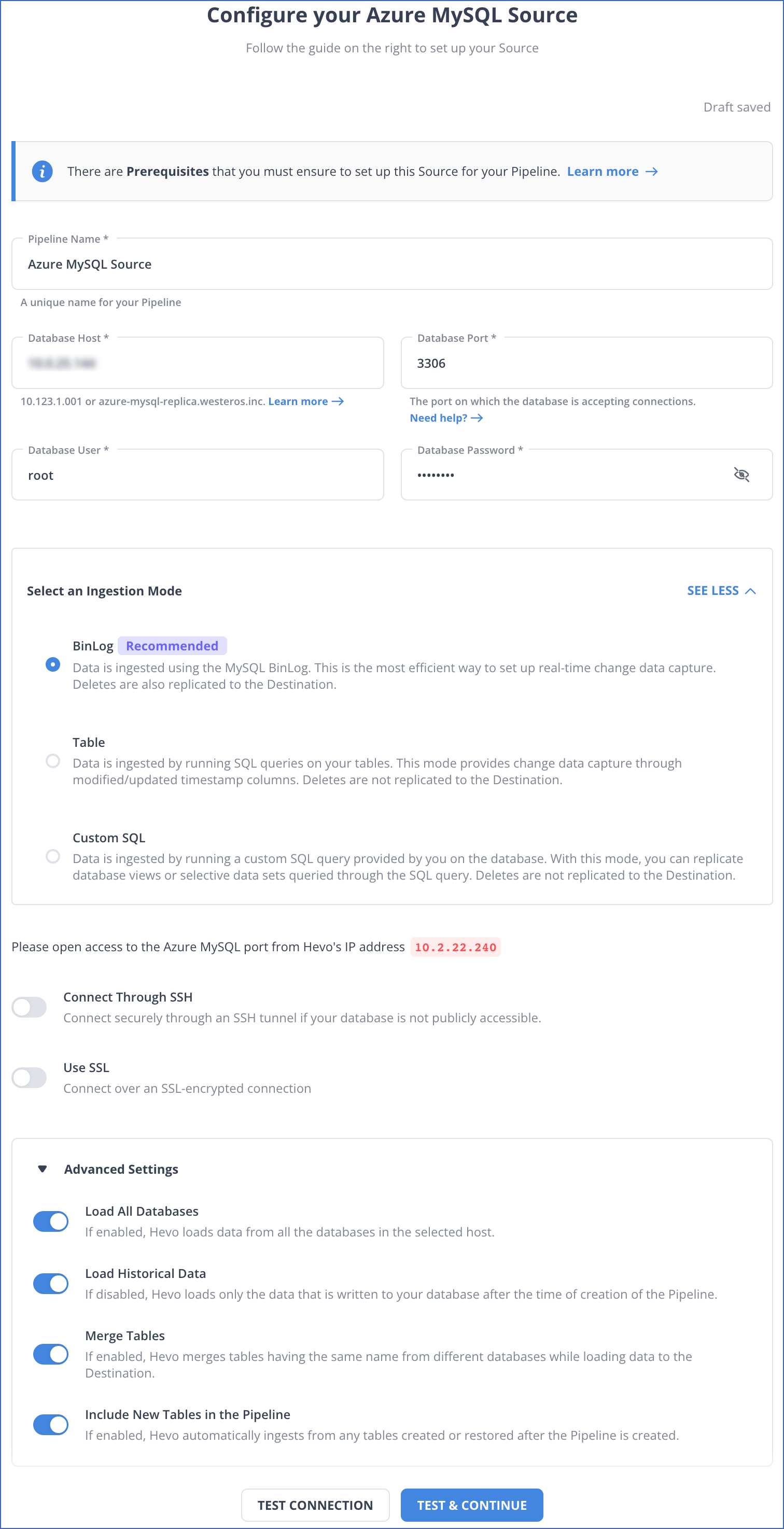
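Hevo expects a bare hostname in the Database Host field and the port in a separate field (see the note on http:// and https:// in the Database Host field description). The following Python sketch, with the hypothetical function `parse_host`, splits a copied value such as a URL or host:port into the two parts and falls back to the default port 3306. It uses only the standard library `urllib.parse.urlsplit`.

```python
from urllib.parse import urlsplit

DEFAULT_PORT = 3306


def parse_host(value, default_port=DEFAULT_PORT):
    """Split user input into (hostname, port) for the Database Host and Port fields.

    Accepts 'host', 'host:port', or a URL such as 'https://host:port/';
    any http:// or https:// scheme is dropped.
    """
    text = value.strip()
    if "://" not in text:
        text = "//" + text  # make urlsplit treat the input as a network location
    parts = urlsplit(text)
    if not parts.hostname:
        raise ValueError(f"no hostname found in {value!r}")
    # parts.port is None when no port is given; it raises ValueError if out of range.
    return parts.hostname, parts.port or default_port


if __name__ == "__main__":
    print(parse_host("http://mysql-replica.westeros.inc"))
```

Note that `urlsplit` lowercases the hostname, which does not affect DNS resolution.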

Specify Azure MySQL Connection Settings

Perform the following steps to configure Azure MySQL as a Source in Hevo:

- Click PIPELINES in the Navigation Bar.

- Click + CREATE PIPELINE in the Pipelines List View.

- On the Select Source Type page, select Azure MySQL.

- On the Configure your Azure MySQL Source page, specify the following:

  - Pipeline Name: A unique name for your Pipeline, not exceeding 255 characters.

  - Database Host: The MySQL host’s IP address or DNS name.

    The following table lists a few examples of MySQL hosts:

    | Variant | Host |
    | --- | --- |
    | Amazon RDS MySQL | mysql-rds-1.xxxxx.rds.amazonaws.com |
    | Azure MySQL | <server_name>.mysql.database.azure.com |
    | Generic MySQL | 10.123.10.001 or mysql-replica.westeros.inc |
    | Google Cloud MySQL | 35.220.150.0 |

    Note: For URL-based hostnames, exclude the http:// or https:// part. For example, if the hostname URL is http://mysql-replica.westeros.inc, enter mysql-replica.westeros.inc.

-

Database Port: The port on which your Azure MySQL server listens for connections. Default value: 3306.

-

Database User: The authenticated user who has the permissions to read tables in your database.

-

Database Password: The password for the database user.

-

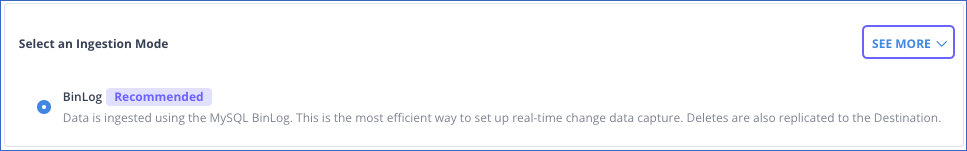

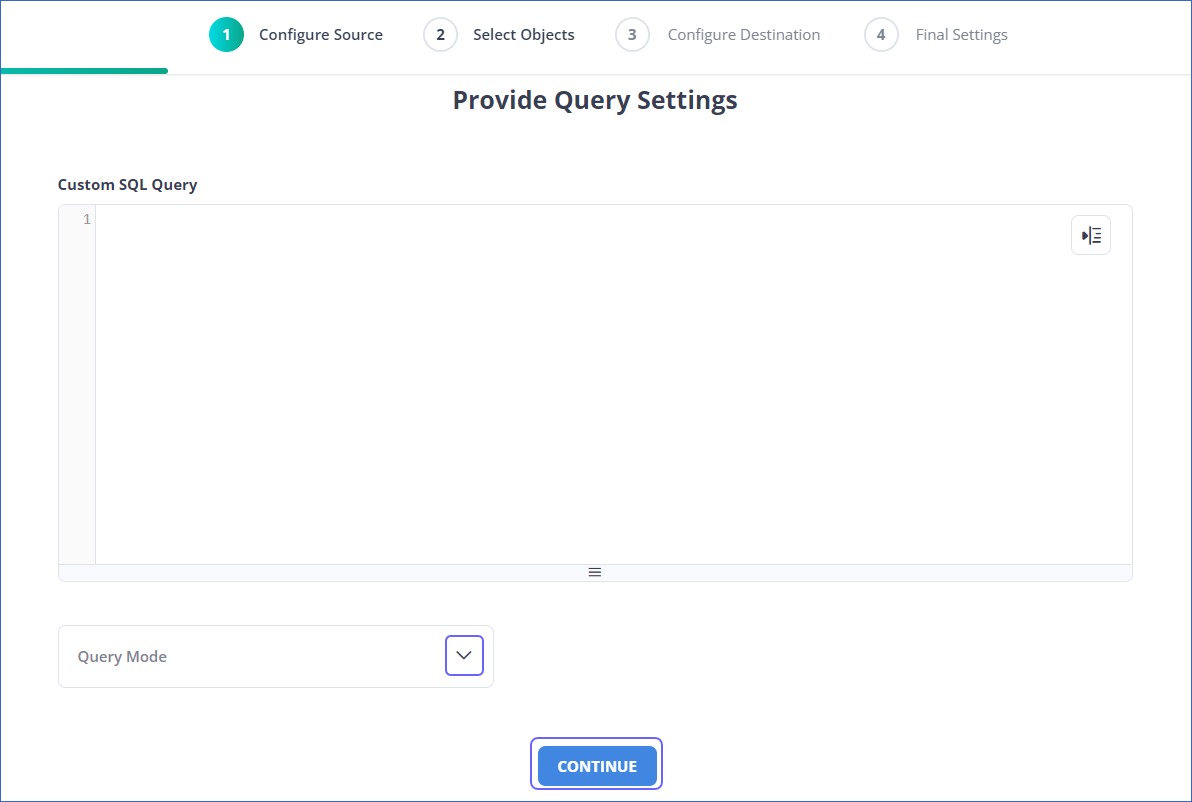

Select an Ingestion Mode: The desired mode by which you want to ingest data from the Source. You can expand this section by clicking SEE MORE to view the list of ingestion modes to choose from. Default value: BinLog. The available Ingestion Modes are BinLog, Table, and Custom SQL.

Depending on the ingestion mode you select, you must configure the objects to be replicated. Refer to section, Object and Query Mode Settings for the steps to do this.

Note: For Custom SQL ingestion mode, all Events loaded to the Destination are billable.

-

Database Name: The database you want to load data from if the Pipeline mode is Table or Custom SQL.

  - Connection Settings

    - Connect through SSH: Enable this option to connect to Hevo using an SSH tunnel instead of directly connecting your MySQL database host to Hevo. This provides an additional level of security to your database by not exposing your MySQL setup to the public. Read Connecting Through SSH.

      If this option is disabled, you must whitelist Hevo’s IP addresses. Refer to the content for your MySQL variant for the steps to do this.

    - Use SSL: Enable this option to use an SSL-encrypted connection. If you enable it, specify the following:

      - CA File: The file containing the SSL server certificate authority (CA).

      - Load all CA Certificates: If selected, Hevo loads all CA certificates (up to 50) from the uploaded CA file; otherwise, it loads only the first certificate.

        Note: Select this check box if your CA file contains more than one certificate.

      - Client Certificate: The client public key certificate file.

      - Client Key: The client private key file.

  - Advanced Settings

    - Load All Databases: This option applies to log-based Pipelines. If enabled, Hevo loads the data from all databases on the selected host and fetches the schema for all the tables within these databases. If disabled, specify a comma-separated list of database names from which you want to load data. Hevo fetches the schema of tables only from the specified databases.

      Note:

      - Hevo cannot access or read any tables that are not part of databases active in the Pipeline.

      - Hevo requires read access to the specified databases.

      - If access is restricted to certain databases, Hevo fetches the schema only for tables within those databases. For example, if the Load All Databases option is enabled but Hevo has permission to access only one database, it cannot fetch schemas from the others. This may lead to issues when the data required for queries or Transformations resides outside the accessible database. To avoid such cases, ensure that Hevo has permission to access all active databases.

    - Load Historical Data: Applicable for Pipelines with BinLog mode. If this option is enabled, the entire table data is fetched during the first run of the Pipeline. If disabled, Hevo loads only the data that was written to your database after the Pipeline was created.

    - Merge Tables: Applicable for Pipelines with BinLog mode. If this option is enabled, Hevo merges tables with the same name from different databases while loading the data to the warehouse, and loads the Database Name field with each record. If disabled, the database name is prefixed to each table name. Read How does the Merge Tables feature work?

    - Include New Tables in the Pipeline: Applicable for all ingestion modes except Custom SQL.

      If enabled, Hevo automatically ingests data from tables created in the Source after the Pipeline has been built. These may include completely new tables or previously deleted tables that have been re-created in the Source.

      If disabled, new and re-created tables are not ingested automatically. They are added in the SKIPPED state to the objects list on the Pipeline Overview page. To ingest their data, you can update their status to INCLUDED after the Pipeline is created.

      You can change this setting later.

- Click TEST CONNECTION. This button is enabled once you specify all the mandatory fields. Hevo’s underlying connectivity checker validates the connection settings you provide.

- Click TEST & CONTINUE to proceed to setting up the Destination. This button is enabled once you specify all the mandatory fields.

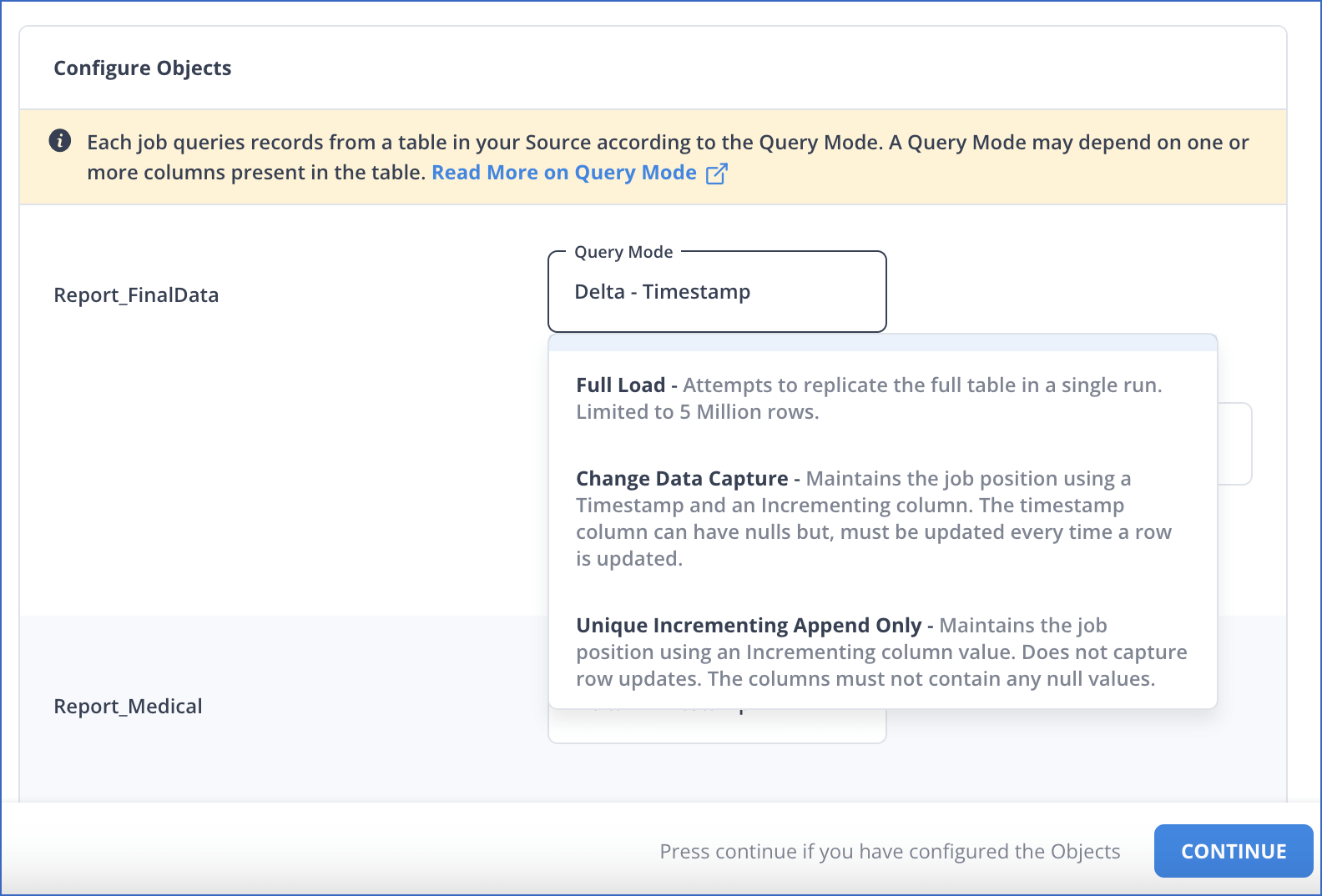
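If you are unsure whether to select Load all CA Certificates, you can count the certificates in your CA file first. The following Python sketch mirrors the behavior described for that option (all certificates, up to 50, or only the first one). It is not Hevo's implementation, and the function name `certificates_to_load` is hypothetical.

```python
import re

MAX_CA_CERTS = 50  # limit stated for the Load all CA Certificates option

# One PEM certificate block, including its BEGIN and END lines.
_PEM_CERT = re.compile(
    r"-----BEGIN CERTIFICATE-----.+?-----END CERTIFICATE-----", re.DOTALL
)


def certificates_to_load(pem_text, load_all):
    """Return the PEM certificates that would be used from a CA file.

    load_all=True keeps up to MAX_CA_CERTS certificates; False keeps only the first.
    """
    certs = _PEM_CERT.findall(pem_text)
    if not certs:
        raise ValueError("no PEM certificates found in the CA file")
    return certs[:MAX_CA_CERTS] if load_all else certs[:1]


if __name__ == "__main__":
    import sys

    # Usage: python count_certs.py ca.pem
    with open(sys.argv[1], encoding="ascii") as handle:
        found = certificates_to_load(handle.read(), load_all=True)
    print(f"{len(found)} certificate(s); select the check box if more than one")
```

To test the same files from your own Python client, the standard library accepts PEM text through `ssl.create_default_context(cadata=...)`, and a client certificate and key through `SSLContext.load_cert_chain(certfile, keyfile)`.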

Object and Query Mode Settings

Once you have specified the Source connection settings in the Specify Azure MySQL Connection Settings section, do one of the following:

Data Replication

| For Teams Created | Ingestion Mode | Default Ingestion Frequency | Minimum Ingestion Frequency | Maximum Ingestion Frequency | Custom Frequency Range (in Hrs) |
| --- | --- | --- | --- | --- | --- |
| Before Release 2.21 | Table | 15 Mins | 15 Mins | 24 Hrs | 1-24 |
| Before Release 2.21 | Log-based | 5 Mins | 5 Mins | 1 Hr | NA |
| After Release 2.21 | Table | 6 Hrs | 30 Mins | 24 Hrs | 1-24 |
| After Release 2.21 | Log-based | 30 Mins | 30 Mins | 12 Hrs | 1-24 |

Note: The custom frequency must be set in hours as an integer value. For example, 1, 2, or 3 but not 1.5 or 1.75.
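The custom frequency rules in the table and the note above can be checked before you enter a value. This Python sketch encodes the Custom Frequency Range column; the keys "before-2.21" and "after-2.21" and the function name `validate_custom_frequency` are hypothetical labels for the table rows.

```python
# Custom Frequency Range (in Hrs) column from the table above.
# Keys are hypothetical labels: (team created before/after Release 2.21, ingestion mode).
CUSTOM_RANGE_HOURS = {
    ("before-2.21", "table"): (1, 24),
    ("before-2.21", "log-based"): None,  # NA: no custom frequency
    ("after-2.21", "table"): (1, 24),
    ("after-2.21", "log-based"): (1, 24),
}


def validate_custom_frequency(hours, team, mode):
    """Return `hours` if it is an allowed custom frequency, else raise ValueError."""
    allowed = CUSTOM_RANGE_HOURS.get((team, mode))
    if allowed is None:
        raise ValueError(f"no custom frequency range for {mode!r} ({team!r})")
    # The frequency must be a whole number of hours; bool is rejected
    # explicitly because it is a subclass of int in Python.
    if isinstance(hours, bool) or not isinstance(hours, int):
        raise ValueError("custom frequency must be an integer number of hours")
    low, high = allowed
    if not low <= hours <= high:
        raise ValueError(f"custom frequency must be between {low} and {high} hours")
    return hours


if __name__ == "__main__":
    print(validate_custom_frequency(2, "after-2.21", "table"))
```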

- Historical Data: In the first run of the Pipeline, Hevo ingests all available data for the selected objects from your Source database.

- Incremental Data: Once the historical load is complete, data is ingested as per the ingestion frequency.

Read the detailed Hevo documentation for the following related topics:

Source Considerations

- MySQL does not generate log entries for cascading deletes. As a result, Hevo cannot capture these deletes in log-based Pipelines.

- If your Pipeline uses the BinLog ingestion mode, MySQL replicates timestamp fields such as created_at and updated_at in Coordinated Universal Time (UTC). As BinLogs do not include time zone metadata, Hevo and the Destination interpret these values as UTC. Due to this, you may observe a time difference if the Source database uses a different time zone. For example, if the Source time zone is US Eastern Time (UTC-4) and the timestamp for created_at in MySQL is 2024-05-01 10:00:00, it appears as 2024-05-01 14:00:00 in BigQuery. This behavior applies only to incremental loads via BinLogs. Any data replicated using the historical load retains the original timestamp values from the Source.

  As a workaround, you can adjust the UTC timestamps to your local time zone using Python code-based Transformations by adding or subtracting the appropriate offset in the timestamp fields. For example, to convert UTC to UTC+7, add a 7-hour offset to the relevant fields before loading the data into the Destination.
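The offset workaround above can be sketched in plain Python. The function below, `shift_timestamps` (a hypothetical name), returns a copy of a record with the listed timestamp fields shifted by a fixed number of hours; wiring it into Hevo's Transformation entry point is not shown. It assumes timestamps arrive either as `datetime` objects or as strings in the format YYYY-MM-DD HH:MM:SS. A fixed offset does not follow daylight saving time: for example, US Eastern Time is UTC-4 only during daylight saving time and UTC-5 otherwise.

```python
from datetime import datetime, timedelta

# Assumed string format of timestamp values in the record.
TS_FORMAT = "%Y-%m-%d %H:%M:%S"


def shift_timestamps(record, fields, offset_hours):
    """Return a copy of `record` with each listed timestamp field shifted.

    Use a negative offset for time zones west of UTC (for example, -4 for
    US Eastern daylight time) and a positive one east of UTC (+7 for UTC+7).
    Missing fields and None values are left unchanged.
    """
    delta = timedelta(hours=offset_hours)
    shifted = dict(record)
    for field in fields:
        value = shifted.get(field)
        if isinstance(value, datetime):
            shifted[field] = value + delta
        elif isinstance(value, str):
            # Parse, shift, and write back using the same assumed format.
            parsed = datetime.strptime(value, TS_FORMAT)
            shifted[field] = (parsed + delta).strftime(TS_FORMAT)
    return shifted


if __name__ == "__main__":
    row = {"id": 1, "created_at": "2024-05-01 14:00:00"}
    print(shift_timestamps(row, ["created_at"], -4))
```

Shifting by -4 turns the 2024-05-01 14:00:00 value from the example above back into the Source's 2024-05-01 10:00:00.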

Limitations

-

For MySQL versions 8.0.4 and above, based on the value of the default_authentication_plugin system variable, connecting database users are authenticated using the caching_sha2_password plugin by default. However, Hevo does not currently support this plugin, and you may see the Public Key Retrieval is not allowed error. In that case, you can change the authentication plugin to mysql_native_password for the user.

To do this, connect to your MySQL server as a root user and run the following command:

ALTER USER '<database_username>'@'%' IDENTIFIED WITH mysql_native_password BY '<password>';

Note: Replace the placeholder values in the command above with your own. For example, <database_username> with hevouser.

-

Currently, Hevo does not support transaction-based replication using Global Transaction Identifiers (GTIDs).

-

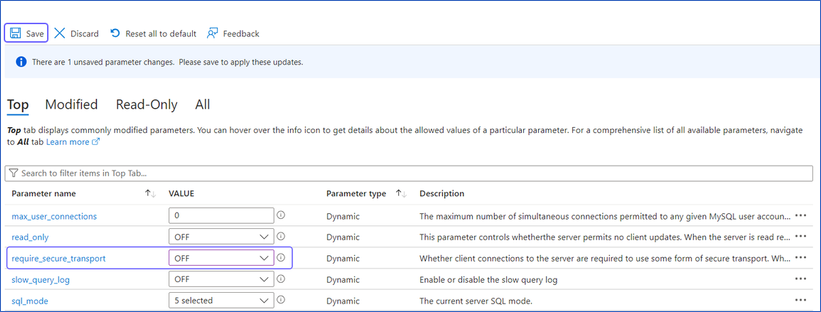

Logging in using SSL not supported. This setting is enabled by default. You can disable it as follows:

-

In the left navigation pane, under Settings, click Server Parameters.

-

Under the Top tab, update the value of require_secure_transport server parameter to OFF, and then click Save.

-

Hevo only fetches tables from the MySQL database. It does not fetch other entities such as functions, stored procedures, views, and triggers.

To fetch views, you can create individual Pipelines in Custom SQL mode. However, some limitations may arise based on the type of data synchronization, the query mode, or the number of Events. Contact Hevo Support for more details.

-

During the historical load, Hevo reads table definitions directly from the MySQL database schema, whereas for incremental updates, Hevo reads from the BinLog. As a result, certain fields, such as nested JSON, are parsed differently during historical and incremental loads. In the Destination tables, nested JSON fields are parsed as a struct or JSON during historical loads, but as a string during incremental loads. This leads to a data type mismatch between the Source and Destination data, causing Events to be sidelined.

To ensure JSON fields are parsed correctly during the historical load, you can apply transformations to every table containing nested JSON fields. Contact Hevo Support for more details.

-

Hevo Pipelines may fail to process transactions in the BinLog if the size of the transaction exceeds 4GB. This problem is due to a MySQL bug that affects the library used by Hevo to stream Events, resulting in ingestion failures. In such cases, Hevo attempts to restart the ingestion process from the beginning of the transaction, skipping already processed Events. If the problem of transaction processing persists and the BinLog remains stuck, contact Hevo Support for assistance.

-

Hevo does not load an Event into the Destination table if its size exceeds 128 MB, which may lead to discrepancies between your Source and Destination data. To avoid such a scenario, ensure that each row in your Source objects contains less than 100 MB of data.
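For the nested JSON limitation above, one way to make incremental loads match the structured values from historical loads is to parse string-typed JSON fields before loading. The following Python sketch shows that idea in isolation. The function name and the choice of fields are hypothetical, it is not Hevo's Transformation API, and whether you should parse strings or, conversely, serialize structs with `json.dumps` depends on your Destination column types; contact Hevo Support for the recommended approach.

```python
import json


def normalize_json_fields(record, fields):
    """Return a copy of `record` where each listed JSON field holds a parsed value.

    During incremental (BinLog) loads, nested JSON may arrive as a string;
    parsing it makes the type match the struct/JSON from historical loads.
    """
    normalized = dict(record)
    for field in fields:
        value = normalized.get(field)
        if isinstance(value, (str, bytes, bytearray)):
            try:
                normalized[field] = json.loads(value)
            except ValueError:
                pass  # leave non-JSON text untouched rather than failing the record
    return normalized


if __name__ == "__main__":
    row = {"id": 7, "payload": '{"a": {"b": [1, 2]}}'}
    print(normalize_json_fields(row, ["payload"]))
```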

See Also

Revision History

Refer to the following table for the list of key updates made to this page:

| Date | Release | Description of Change |
| --- | --- | --- |
| Jun-30-2025 | NA | Updated section, Source Considerations to add a point about UTC replication of timestamp fields in BinLog mode. |
| Jun-23-2025 | NA | Updated the Create a database user section to segregate the commands based on database version. Added a limitation about Hevo not supporting the caching_sha2_password authentication plugin. |
| May-19-2025 | NA | Updated section, Limitations to add a note about GTID-based replication. |
| Mar-13-2025 | NA | Updated section, Prerequisites to mention the supported MySQL versions for each ingestion mode. |
| Jan-20-2025 | NA | Added a note for Load All Databases in the Pipeline Advanced Settings in the Specify Azure MySQL Connection Settings section. |
| Jan-07-2025 | NA | Updated the Limitations section to add information on Event size. |
| Dec-18-2024 | NA | Updated section, Limitations to add information about Hevo handling transaction failures in the BinLog due to a MySQL bug affecting transactions exceeding 4 GB. |
| Nov-18-2024 | NA | Updated sections, Create a Read Replica (Optional), Whitelist Hevo’s IP Addresses, and Limitations as per the latest Azure MySQL UI. |
| Jul-31-2024 | NA | Updated section, Limitations to add information about Hevo reading table definitions differently during historical and incremental loads. |
| Apr-29-2024 | NA | Updated section, Specify Azure MySQL Connection Settings to include more detailed steps. |
| Mar-18-2024 | 2.21.2 | Updated section, Specify Azure MySQL Connection Settings to add information about the Load all CA certificates option. |
| Mar-05-2024 | 2.21 | Added the Data Replication section. |
| Nov-03-2023 | NA | Renamed section, Object Settings to Object and Query Mode Settings. |
| Oct-27-2023 | NA | Updated section, Create a Database User and Grant Privileges with the latest steps. |
| Jun-26-2023 | NA | Added section, Source Considerations. |
| Apr-21-2023 | NA | Updated section, Specify Azure MySQL Connection Settings to add a note to inform users that all loaded Events are billable for Custom SQL mode-based Pipelines. |
| Mar-09-2023 | 2.09 | Updated section, Specify Azure MySQL Connection Settings to mention SEE MORE in the Select an Ingestion Mode section. |
| Dec-19-2022 | 2.04 | Updated section, Specify Azure MySQL Connection Settings to add information that you must specify all fields to create a Pipeline. |
| Dec-07-2022 | 2.03 | Updated section, Specify Azure MySQL Connection Settings to mention including skipped objects post-Pipeline creation. |
| Dec-07-2022 | 2.03 | Updated section, Specify Azure MySQL Connection Settings to mention the connectivity checker. |
| Oct-13-2022 | 1.99 | Updated section, Specify Azure MySQL Connection Settings to reflect the latest UI changes. |
| Apr-21-2022 | 1.86 | Updated section, Specify Azure MySQL Connection Settings. |
| Aug-09-2021 | NA | Added a note in the Grant privileges to a user step. |
| Jul-26-2021 | 1.68 | Added a note for the Database Host field. |
| Jul-12-2021 | NA | Added section, Specify Azure MySQL Connection Settings. |
| Feb-22-2021 | 1.57 | Updated the Create a Read Replica section to provide UI-based steps. |