How can I migrate a Pipeline created with one PostgreSQL Source variant to another variant?


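Hevo provides dedicated connectors for each variant of the PostgreSQL Source. If you migrate your database to a different variant, you must create a new Pipeline using the connector for the new variant. As the Destination already contains the historical data for the Source objects, you can do one of the following:

- Migrate the Pipeline with historical data replication, so that the new Pipeline reloads the historical data into the Destination tables.

- Migrate the Pipeline without historical data replication, so that the new Pipeline ingests only incremental data into the existing Destination tables.

Suppose you are moving your Source database from an Amazon RDS PostgreSQL database to a Google Cloud PostgreSQL database. The following sections provide detailed steps to migrate your Pipeline accordingly.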

Migrating the Pipeline with Historical Data Replication

Perform the following steps to migrate your Pipeline and reload historical data into the Destination tables:

- Pause the Pipeline created with the Amazon RDS PostgreSQL database.

- For log-based Pipelines, drop the replication slot if the database instance is not being deleted after migration. An unused replication slot makes PostgreSQL retain WAL files indefinitely, which can exhaust the storage of the instance. If the instance is deleted, the slot is dropped automatically.

  Skip this step if your Pipeline uses the Table or Custom SQL ingestion mode.

- Migrate your Amazon RDS PostgreSQL database to Google Cloud PostgreSQL.

- Refer to the Google Cloud PostgreSQL documentation and complete the prerequisites to configure your Source in the Hevo Pipeline.

- Create a Pipeline using the configuration details of your Google Cloud PostgreSQL database.

- Use the same Destination setup as the paused Pipeline, including the table prefix. This ensures that data continues to load into the same Destination tables.

  Note: If the Source tables were mapped manually in the old Pipeline, apply those mappings in the new Pipeline to continue loading data into the same Destination tables.

- Once the new Pipeline starts ingesting data, delete the old Pipeline.
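The replication-slot step can be sketched in Python as follows. This is a minimal sketch that assumes you have already run `SELECT slot_name, active FROM pg_replication_slots;` on the Amazon RDS PostgreSQL instance and copied the rows. The helper only builds the `pg_drop_replication_slot` statement and does not connect to the database. The `SlotRow` type, the `drop_slot_statement` helper, and the slot name `hevo_slot` are hypothetical. Check `pg_replication_slots` for the name of the slot your Pipeline actually uses.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class SlotRow:
    """One row returned by: SELECT slot_name, active FROM pg_replication_slots;"""
    slot_name: str
    active: bool


def quote_literal(value: str) -> str:
    """Quote a string as a SQL literal, doubling any embedded single quotes."""
    return "'" + value.replace("'", "''") + "'"


def drop_slot_statement(rows: Sequence[SlotRow], slot_name: str) -> str:
    """Return the statement that drops slot_name, refusing if a consumer is still attached."""
    for row in rows:
        if row.slot_name == slot_name:
            if row.active:
                # PostgreSQL rejects pg_drop_replication_slot on an active slot,
                # so the old Pipeline should be paused first.
                raise RuntimeError(f"slot {slot_name!r} is still active; pause the Pipeline first")
            return f"SELECT pg_drop_replication_slot({quote_literal(slot_name)});"
    raise LookupError(f"slot {slot_name!r} not found in pg_replication_slots")


if __name__ == "__main__":
    # Hypothetical rows; 'hevo_slot' is a placeholder, not a name Hevo is known to use.
    rows = [SlotRow("hevo_slot", False), SlotRow("other_consumer", True)]
    print(drop_slot_statement(rows, "hevo_slot"))
```

The helper checks the `active` column before it emits the statement. PostgreSQL refuses to drop a slot that a consumer is still attached to, which is one reason to pause the Pipeline before dropping its slot.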

Migrating the Pipeline without Historical Data Replication

Perform the following steps to migrate your Pipeline without replicating historical data:

Prepare for Migration

To prevent data loss during migration, perform the following steps:

-

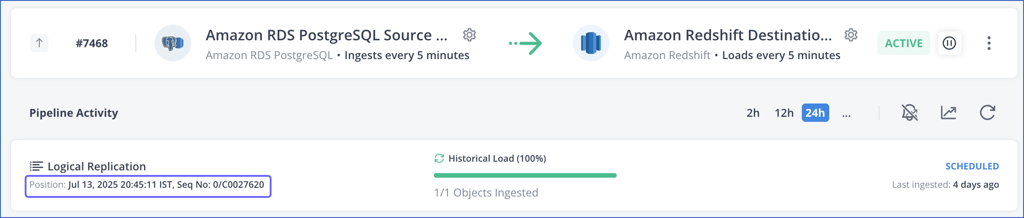

Verify that all data from your Amazon RDS PostgreSQL database was ingested by the Pipeline.

-

For log-based Pipelines, check the latest WAL file position in the Pipeline Activity section of the Pipeline Detailed View. This shows the offset up to which data was successfully ingested.

-

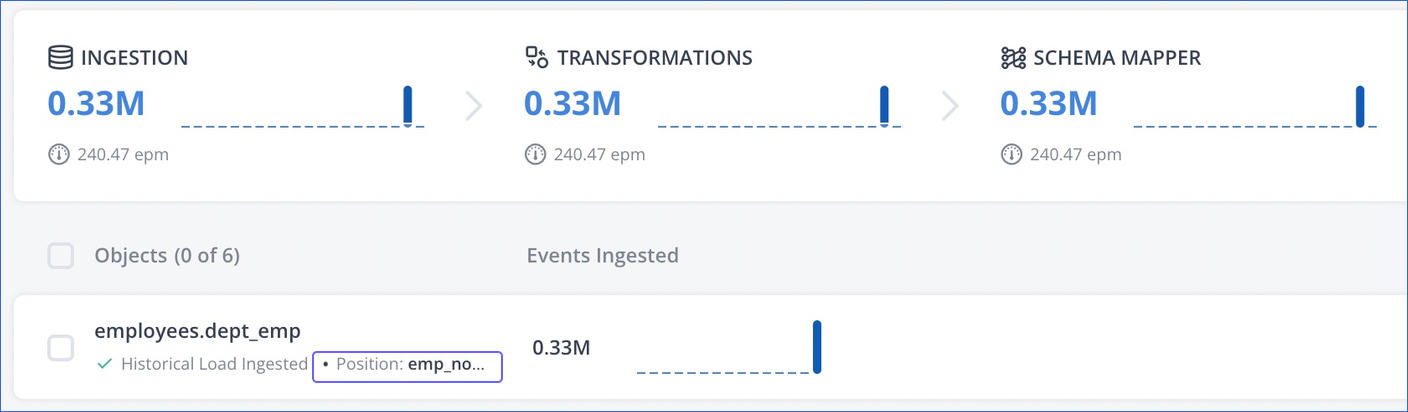

For Pipelines created with Table or Custom SQL ingestion mode, check the ingestion position for all the active objects listed in the Objects section of the Pipeline Detailed View.

-

-

Ensure that no write operations, such as inserts, updates, or deletes, are performed on the Amazon RDS PostgreSQL database until the database migration is complete and you have created a Pipeline with the Google Cloud PostgreSQL database. To enforce this, you can set the Amazon RDS PostgreSQL database to read-only mode so that no new transactions are performed on the database during database migration.

-

Ensure that all ingested data is loaded into the Destination.

-

For data warehouse Destinations, manually trigger the loading of Events.

-

For other Destinations, wait for a buffer period of 30 minutes to 1 hour for the data to be loaded into the Destination.

-

In case there are any failed Events, ensure that they are successfully retried and loaded into the Destination. Read Troubleshooting Failed Events in a Pipeline.

-

-

Pause the Pipeline created with the Amazon RDS PostgreSQL database.

-

For log-based Pipelines, drop the replication slot if the database instance is not being deleted after migration. If the instance is deleted, the slot is dropped automatically.

Skip this step if your Pipeline is created with Table or Custom SQL ingestion mode.

-

Migrate your Amazon RDS PostgreSQL database to Google Cloud PostgreSQL.

-

Ensure that no write operations are performed on the Google Cloud PostgreSQL database until the new Pipeline is created with it.
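Two of the preparation checks can be sketched in Python as follows. This is a minimal sketch that assumes the WAL position shown in the Pipeline Activity section uses PostgreSQL's `X/Y` hexadecimal LSN notation. This page does not confirm that.

- `parse_lsn` converts an LSN to a byte position, so you can compare it with the output of `SELECT pg_current_wal_lsn();` (PostgreSQL 10 and later) on the source.
- `read_only_sql` builds an `ALTER DATABASE ... SET default_transaction_read_only` statement, which is one way to put a database into read-only mode.

All function names and the database name `appdb` are hypothetical.

```python
def parse_lsn(lsn: str) -> int:
    """Convert a PostgreSQL LSN such as '16/B374D848' into a 64-bit byte position."""
    high, sep, low = lsn.strip().partition("/")
    if not sep or not high or not low:
        raise ValueError(f"not an LSN: {lsn!r}")
    # The part before the slash is the upper 32 bits; the part after is the lower 32 bits.
    return (int(high, 16) << 32) | int(low, 16)


def lag_bytes(source_lsn: str, ingested_lsn: str) -> int:
    """WAL bytes written on the source beyond the position the Pipeline reports (0 if caught up)."""
    return max(0, parse_lsn(source_lsn) - parse_lsn(ingested_lsn))


def quote_ident(name: str) -> str:
    """Quote a SQL identifier, doubling any embedded double quotes."""
    return '"' + name.replace('"', '""') + '"'


def read_only_sql(database: str, enabled: bool) -> str:
    """Build the statement that turns the read-only default on or off for new sessions."""
    value = "on" if enabled else "off"
    return f"ALTER DATABASE {quote_ident(database)} SET default_transaction_read_only = {value};"


if __name__ == "__main__":
    # Hypothetical values: the first from SELECT pg_current_wal_lsn(); on the source,
    # the second as read from the Pipeline Activity section.
    print(lag_bytes("0/16B3748", "0/16B3700"))  # 72
    print(read_only_sql("appdb", True))
```

`default_transaction_read_only` only sets the default for new sessions. Existing sessions keep their current setting, and a session can switch it off again, so treat it as a safeguard rather than a hard lock. A small residual lag can also come from WAL records that carry no table changes.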

Set up a new Pipeline

Perform the following steps to create a Pipeline that continues ingesting incremental data into the same Destination tables:

-

Refer to the Google Cloud PostgreSQL documentation and complete the prerequisites to configure your Source in the Hevo Pipeline.

-

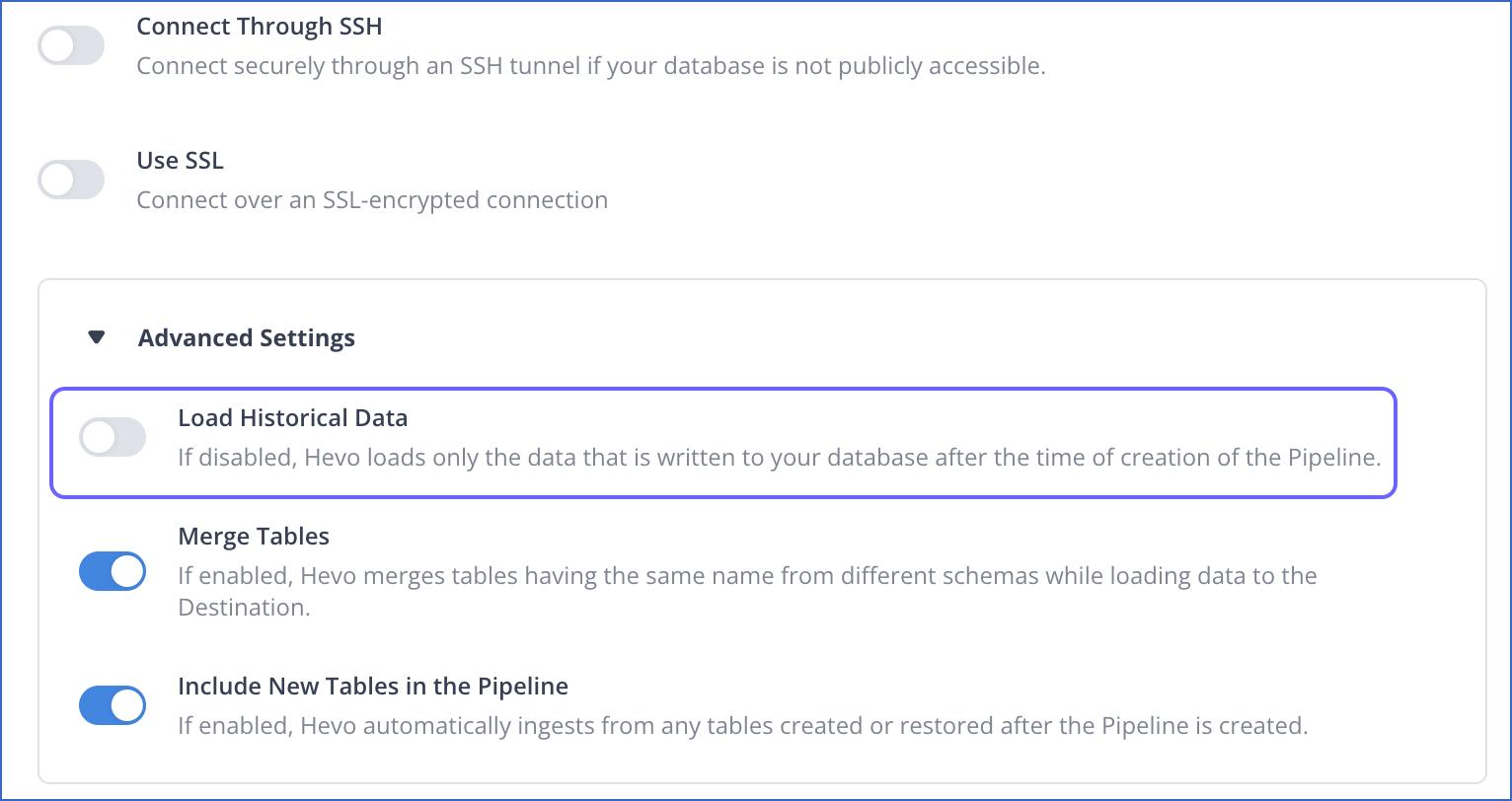

Create a Pipeline using the configuration details of your Google Cloud PostgreSQL database. During Source configuration, disable the Load Historical Data option under the Advanced Settings section. This ensures that the Pipeline ingests only incremental data.

-

Use the same Destination setup as the paused Pipeline, including the table prefix. This ensures that data continues to load into the same Destination tables.

Note:

-

If the Source tables were mapped manually in the old Pipeline, apply those mappings in the new Pipeline to continue loading data into the same Destination tables.

-

If any Transformations were applied in the old Pipeline, copy them to the new Pipeline to retain the data processing logic.

-

-

Once the Pipeline is created, resume write operations on the Google Cloud PostgreSQL database.

-

When the new Pipeline starts ingesting data, delete the old Pipeline.
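A pre-flight check for these steps can be sketched in Python as follows. This minimal sketch compares the settings you plan to use in the new Pipeline with those of the paused one. The `PipelineSettings` fields are a hypothetical summary of what this page asks you to carry over: the Destination, the table prefix, manual mappings, Transformations, and the Load Historical Data option. They are not Hevo API fields, so you would fill them in by hand.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class PipelineSettings:
    """Hypothetical, hand-filled summary of the settings this page asks you to carry over."""
    destination: str
    table_prefix: str
    # Manually mapped Source table -> Destination table.
    table_mappings: Dict[str, str] = field(default_factory=dict)
    # Transformation code, compared as plain text.
    transformations: str = ""
    load_historical_data: bool = True


def migration_issues(old: PipelineSettings, new: PipelineSettings) -> List[str]:
    """List differences that would stop the new Pipeline from continuing the old one's tables."""
    issues: List[str] = []
    if new.destination != old.destination:
        issues.append("Destination differs from the old Pipeline")
    if new.table_prefix != old.table_prefix:
        issues.append("table prefix differs, so data would load into different tables")
    unmapped = [t for t, d in old.table_mappings.items() if new.table_mappings.get(t) != d]
    if unmapped:
        issues.append(f"manual mappings not carried over: {sorted(unmapped)}")
    if new.transformations != old.transformations:
        issues.append("Transformations differ from the old Pipeline")
    if new.load_historical_data:
        # Without historical data replication, only incremental data should be ingested.
        issues.append("Load Historical Data is still enabled")
    return issues


if __name__ == "__main__":
    # Hypothetical settings for illustration only.
    old = PipelineSettings("warehouse", "pg_", {"public.orders": "pg_orders"})
    new = PipelineSettings("warehouse", "pg_", {"public.orders": "pg_orders"}, load_historical_data=False)
    print(migration_issues(old, new))
```

An empty list means the new Pipeline matches the old one on every item the sketch tracks. Any entry points to a step in the list above that still needs attention.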

Revision History

Refer to the following table for the list of key updates made to this page:

| Date | Release | Description of Change |
|---|---|---|
| Aug-19-2025 | NA | Updated the following sub-sections: Prepare for Migration, to add information about retrying failed Events; Set up a new Pipeline, to include a note about copying Transformations from the old Pipeline to the new one. |
| Aug-01-2025 | NA | New document. |