Release Version 2.23


This release note also includes the fixes provided in all the minor releases since 2.22.

To know the complete list of features available for early adoption before these are made generally available to all customers, read our Early Access page.

To know the list of features and integrations we are working on next, read our Upcoming Features page!

In this Release

- Upcoming Breaking Changes

- Early Access Features

- New and Changed Features

- Fixes and Improvements

- Documentation Updates

Upcoming Breaking Changes

Sources

Migration to API Version v2024-02-15 for Klaviyo Source

Effective June 30, 2024, Klaviyo’s legacy APIs v1-2 are scheduled to sunset. We will soon be upgrading to the newer API Version v2024-02-15, which contains new and improved capabilities. This enhancement will also include changes in the schema that may impact Pipelines created with this Source.

Please stay tuned for further communication about the enhancement and its release date.

Early Access Features

Destinations

-

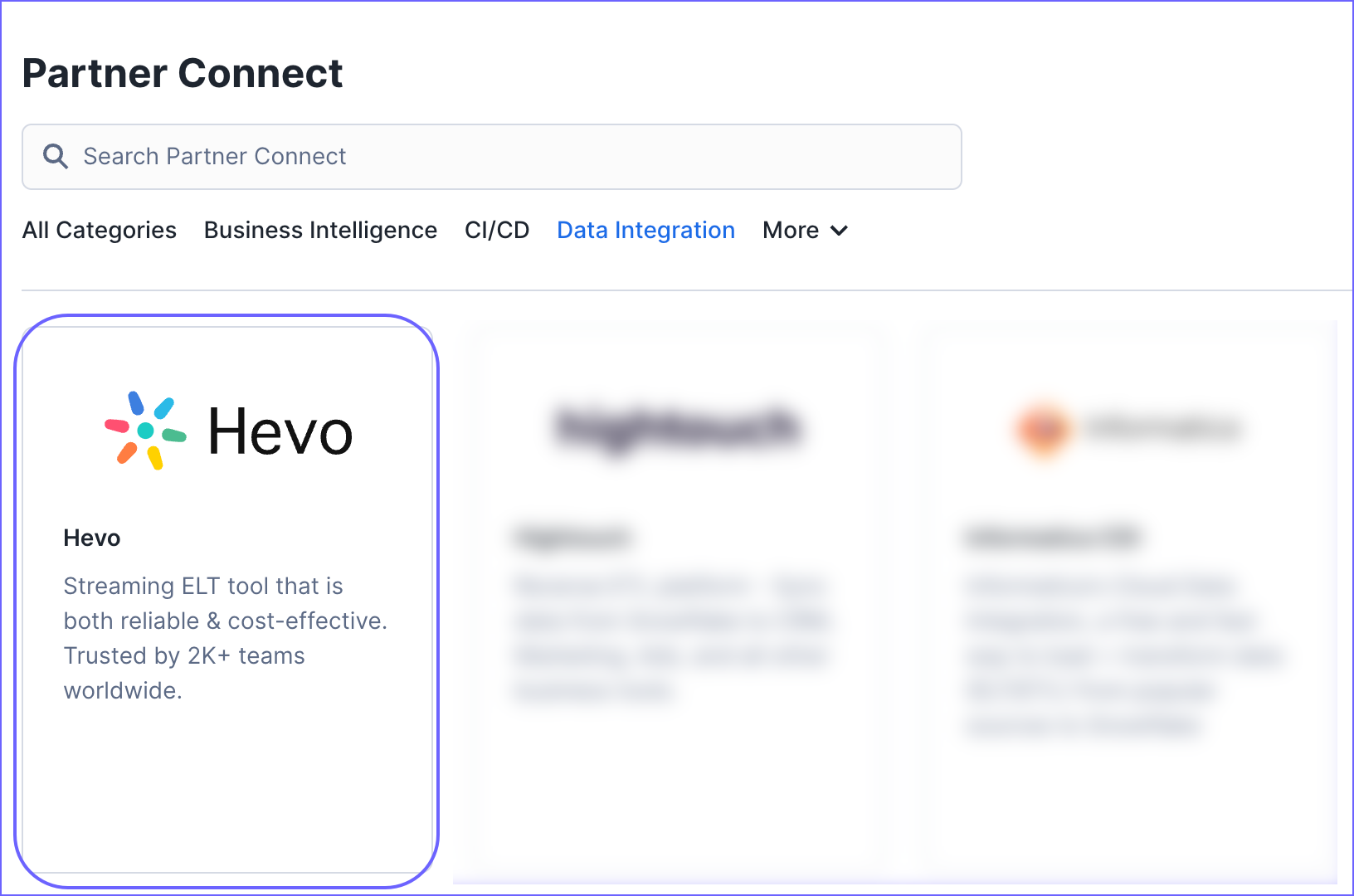

Connecting to Hevo using Snowflake Partner Connect

-

Collaborated with Snowflake to provide support for configuring Snowflake in Hevo using the Snowflake Partner Connect feature. Hevo can now be accessed from within the Snowflake platform, through which you can seamlessly set up an account and create data Pipelines with Snowflake as your Destination.

Read Snowflake.

Refer to the Early Access page for the complete list of features available for early access.

Prioritized Data Loading to Data Warehouse Destination Tables

Enhanced the functionality of the loading task to allow prioritization of data loading to your data warehouse Destination tables. Now, you can manually initiate data loading to a Destination table both within and outside of the defined load schedule, giving you more control over the process. This behavior is an improvement over the earlier one, which loaded data based on sequential hierarchy rather than urgency.

This feature is available for new and existing customers upon request.

Once this feature is enabled for you, you can trigger data loading to a Destination table that is either Queued or already In Progress. Read Manually Triggering the Loading of Events.

Refer to the Early Access page for the complete list of features available for early access.

New and Changed Features

Sources

Custom Headers for CSV files in Amazon S3 Source

Enhanced the Amazon S3 integration to allow using any row in your CSV file as the header row. Now, you can specify the exact row in your CSV file from which you want Hevo to start ingesting data. This behavior is an improvement over the earlier one in which, by default, the first row was used as a header.

This feature is available for new and existing Pipelines. However, for existing Pipelines, you must modify the Source configuration to specify this field.
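As an illustration of what selecting a header row means, here is a minimal Python sketch. It is not Hevo's implementation: the function name `read_with_header_row` and the 1-based `header_row` parameter are hypothetical, and the sketch assumes that rows above the header row are discarded.

```python
import csv
import io


def read_with_header_row(text, header_row=1):
    """Parse CSV text, using the 1-based `header_row` as the column names.

    Rows above the header row are skipped (an assumption of this sketch);
    rows below it become records keyed by the header values.
    """
    if header_row < 1:
        raise ValueError("header_row must be 1 or greater")
    rows = list(csv.reader(io.StringIO(text)))
    if header_row > len(rows):
        raise ValueError("header_row is beyond the end of the file")
    header = rows[header_row - 1]
    return [dict(zip(header, row)) for row in rows[header_row:]]


# The file starts with a title line and a blank line, so the header is row 3.
sample = "Exported 2024-05-01\n\nid,name\n1,Ada\n2,Lin\n"
records = read_with_header_row(sample, header_row=3)
```

With `header_row=1`, the same function reproduces the earlier default of treating the first row as the header.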

Support for Flexible Server Deployment Mode in Azure PostgreSQL Source (Added in Release 2.22.2)

Added support for connecting to Azure PostgreSQL databases deployed in a flexible server. This ensures that users can efficiently manage their data integration needs regardless of their deployment mode. While configuring the Source for Pipeline creation, you now have the option to select the appropriate server type, Flexible Server or Single Server, based on the deployment mode of your server.

User Assistance

Connecting through AWS Transit Gateway (Added in Release 2.22.1)

Enhanced the product documentation to include information about connecting through an AWS transit gateway. Further, guidance has been provided to help you seamlessly connect through a transit gateway peering attachment to your Source or Destination database hosted within a VPC.

Read Connection Options and Connecting Through AWS Transit Gateway.

Connecting through AWS VPC Endpoint (Added in Release 2.22.2)

Enhanced the product documentation to include information about connecting through an AWS VPC endpoint. Further, guidance has been provided to help you seamlessly connect to your Source or Destination database hosted within a VPC through a VPC endpoint.

Read Connection Options and Connecting Through AWS VPC Endpoint.

Fixes and Improvements

Refer to this section for the list of fixes and improvements implemented from Release 2.22.1 to 2.23.

Destinations

Handling of Corrupt Sink Files for Databricks Destination

- Fixed an issue whereby the file uploader task skipped corrupt sink files while loading data to the Databricks Destination, resulting in missed updates and data mismatches between the Source and the Destination. Hevo temporarily stores data in sink files at the staging location before loading it to the Destination. If a system failure occurs before the data is completely written to a sink file, Hevo considers the file corrupt; earlier, such files were skipped during loading. With this fix, Hevo replays the backup file for any sink file identified as corrupt, so that no updates are missed.
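The change from skipping to replaying can be sketched as follows. This is an illustrative sketch only: the `SinkFile` class, its `complete` flag, the `plan_uploads` function, and the backup naming are all hypothetical, since the release note does not describe how Hevo detects corruption or locates backups.

```python
from dataclasses import dataclass


@dataclass
class SinkFile:
    name: str
    complete: bool  # assumed flag: False if a failure interrupted the write


def plan_uploads(sink_files, backups):
    """Return the file names to load, in order.

    A corrupt (incomplete) sink file is replaced by its backup instead of
    being skipped. `backups` maps a sink file name to its backup file name.
    """
    plan = []
    for sink_file in sink_files:
        if sink_file.complete:
            plan.append(sink_file.name)
        elif sink_file.name in backups:
            plan.append(backups[sink_file.name])  # replay the backup
        else:
            raise LookupError(f"no backup for corrupt file {sink_file.name}")
    return plan


files = [SinkFile("part-1", True), SinkFile("part-2", False)]
plan = plan_uploads(files, {"part-2": "backup/part-2"})
```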

Handling Loading Statistics Issue for Amazon S3 Destination (Fixed in Release 2.22.3)

Fixed an issue in the pending files uploader task, whereby the statistics (stats) of the uploaded files, such as the number of files processed, were not written to Hevo’s database. As the stats shown on the user interface (UI) are retrieved from this database, the information displayed on the UI was inaccurate.

This fix applies to new and existing Pipelines.

Sources

Enhanced Parsing Strategy for String Fields in Oracle Source (Fixed in Release 2.22.3)

Fixed an issue in the Oracle Source whereby single quotes were not parsed correctly in columns with string values, leading to a data mismatch between the Source and the Destination. Hevo now escapes single quotes in string fields so that the data is loaded as is to the Destination table.

This fix applies to Pipelines created with any variant of Oracle after Release 2.22.3.
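The release note does not say how the quotes are escaped. As an assumption, the sketch below uses the standard SQL convention of doubling an embedded single quote; the helper name `quote_sql_string` is hypothetical.

```python
def quote_sql_string(value):
    """Return `value` as a SQL string literal.

    Embedded single quotes are doubled, which is the standard SQL way to
    keep them as part of the value rather than as the end of the literal.
    """
    return "'" + value.replace("'", "''") + "'"


literal = quote_sql_string("O'Brien")
```

Without the doubling, the quote in `O'Brien` would terminate the literal early, which is one way a value can end up differing between the Source and the Destination.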

Handling of Auto-Mapping Issue in Oracle Sources

Fixed an issue whereby the incremental ingestion task failed to verify whether the Merge Tables option was disabled during the creation of log-based Pipelines. This caused Hevo to name the Destination tables incorrectly. While the historical ingestion task generated the table name with the correct syntax, schemaname.tablename, the incremental task dropped the schemaname part. As a result, two tables were created at the Destination for the same Source object, leading to the splitting of historical and incremental data into separate tables.

This fix applies to log-based Pipelines created with any variant of Oracle after Release 2.23.
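The naming mismatch described above can be pictured with a small sketch in which both tasks derive the Destination table name from one shared function. The function name `destination_table_name` is hypothetical, and the behavior with Merge Tables enabled (table name only) is an assumption; the note itself only confirms the `schemaname.tablename` form when the option is disabled.

```python
def destination_table_name(schema, table, merge_tables):
    """Build the Destination table name for a Source object.

    With Merge Tables disabled, the name keeps the schema prefix
    (schemaname.tablename). With it enabled, this sketch assumes that
    only the table name is used.
    """
    return table if merge_tables else f"{schema}.{table}"


# Both tasks check the same option, so they agree on the name.
historical = destination_table_name("hr", "employees", merge_tables=False)
incremental = destination_table_name("hr", "employees", merge_tables=False)
```

If the incremental task ignored the option and used the bare table name, the two values would differ and data for one Source object would land in two tables, which is the symptom the fix addresses.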

Handling of Conversations Object in Front Source (Fixed in Release 2.22.2)

Fixed an issue in Front, whereby Pipelines got stuck while ingesting the Conversations object due to Hevo’s lack of access to certain private inboxes. Hevo now skips any inboxes without the required permissions enabled and proceeds to the next accessible inbox to ingest data.

This fix applies to new and existing Pipelines. If you want to ingest data from a skipped inbox, you can assign permissions to it and restart ingestion for the object.
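A skip-and-continue loop of this kind might look like the sketch below. It does not call the Front API: `PermissionDenied`, `ingest_conversations`, and `fake_fetch` are hypothetical names, and the fetcher is a stand-in that raises for one private inbox.

```python
class PermissionDenied(Exception):
    """Raised by the hypothetical fetcher when an inbox is not accessible."""


def ingest_conversations(inbox_ids, fetch):
    """Fetch conversations inbox by inbox, skipping inaccessible inboxes.

    Returns a tuple of (conversations, skipped_inbox_ids).
    """
    conversations, skipped = [], []
    for inbox_id in inbox_ids:
        try:
            conversations.extend(fetch(inbox_id))
        except PermissionDenied:
            skipped.append(inbox_id)  # record it and move to the next inbox
    return conversations, skipped


def fake_fetch(inbox_id):
    # Stand-in for a real API call; one inbox lacks the required permissions.
    if inbox_id == "private":
        raise PermissionDenied(inbox_id)
    return [f"{inbox_id}-conv-1"]


loaded, skipped = ingest_conversations(["support", "private", "sales"], fake_fetch)
```

Keeping the list of skipped inboxes makes it possible to retry them once permissions have been assigned.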

Handling Parsing Issues for JSON Fields in MySQL Sources

Fixed an issue whereby JSON fields in the MySQL Source data were being incorrectly mapped to the Destination. With this fix, JSON fields are mapped either as Strings or as JSON based on the parsing strategy supported by the Destination.

This fix applies to new Pipelines created after Release 2.23, ensuring accurate data type mapping and alignment with the specified JSON parsing strategy.
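The two mapping outcomes can be sketched as below. This is an assumption-laden illustration, not Hevo's code: `map_json_field` and the boolean `destination_supports_json` are hypothetical stand-ins for whatever parsing strategy the Destination actually exposes.

```python
import json


def map_json_field(raw, destination_supports_json):
    """Map a MySQL JSON value for the Destination.

    Returns the parsed object when the Destination can store JSON natively,
    otherwise a compact JSON string.
    """
    parsed = json.loads(raw)  # parsing also validates the value
    if destination_supports_json:
        return parsed
    return json.dumps(parsed, separators=(",", ":"))


as_json = map_json_field('{"a": 1, "b": [2, 3]}', True)
as_string = map_json_field('{"a": 1, "b": [2, 3]}', False)
```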

Support for Geometry Data Type in MySQL Sources (Fixed in Release 2.22.1)

Fixed an issue in the MySQL Source, whereby incremental data containing the Geometry data type was being parsed as an encoded string, resulting in the actual field values not being visible at the Destination.

This fix applies to log-based Pipelines created after Release 2.22.1 with any variant of MySQL.
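To show why an undecoded geometry value is unreadable, the sketch below decodes a single POINT into readable text. It relies on MySQL's documented internal geometry format (a 4-byte SRID followed by the WKB representation). How Hevo decodes these values is not stated in this note, and `decode_mysql_point` is a hypothetical helper that handles only the POINT type.

```python
import struct


def decode_mysql_point(blob):
    """Decode a MySQL internal-format POINT into (srid, WKT text).

    Layout: 4-byte little-endian SRID, then WKB, which is a 1-byte
    byte-order flag, a uint32 geometry type, and two float64 coordinates.
    """
    (srid,) = struct.unpack_from("<I", blob, 0)
    endian = "<" if blob[4] == 1 else ">"  # WKB flag: 1 means little-endian
    (geom_type,) = struct.unpack_from(endian + "I", blob, 5)
    if geom_type != 1:
        raise ValueError("only POINT (WKB type 1) is handled in this sketch")
    x, y = struct.unpack_from(endian + "dd", blob, 9)
    return srid, f"POINT({x} {y})"


# Build a sample value by hand: SRID 4326, little-endian WKB, POINT type.
blob = struct.pack("<IBIdd", 4326, 1, 1, 77.5, 12.9)
srid, wkt = decode_mysql_point(blob)
```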

User Experience

Improved Alerts Usability for Destinations

- Fixed an issue whereby Destination-related alert messages sent via email and Slack referenced the correct Destination but displayed an incorrect ID. This fix is available to new and existing teams.

Documentation Updates

The following pages have been created, enhanced, or removed in Release 2.23:

Data Ingestion

Data Loading

Destinations

Getting Started

Pipelines

Schema Mapper