Release Version 2.04

On This Page

The content on this site may have changed or moved since you last viewed it. As a result, some of your bookmarks may become obsolete. Therefore, we recommend accessing the latest content via the Hevo Docs website.

In this Release

New and Changed Features

Alerts

-

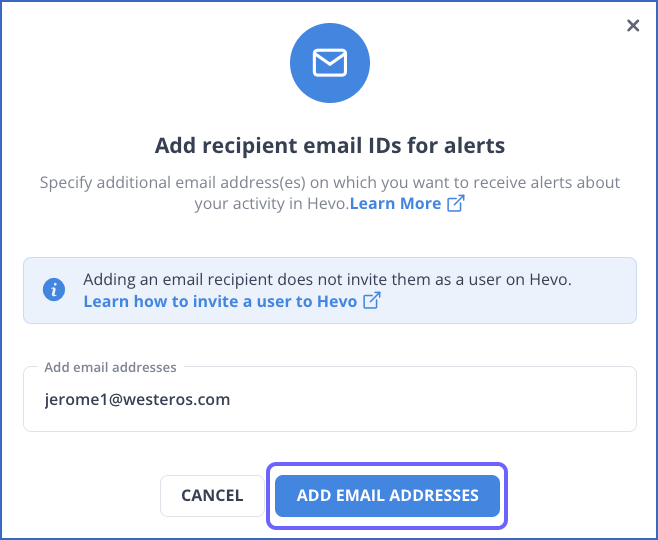

Alerts on External Email Addresses

-

Enhanced Hevo Alerts to support email addresses other than the ones registered with Hevo. You can now receive alerts on individual and group email IDs and alerting tools such as Opsgenie and PagerDuty.

Read Alerts.

-

Pipelines

-

Support for Geospatial Data Types for the MySQL and PostgreSQL Sources

- Added support for the geography, geometry data types for all the variants of MySQL or PostgreSQL Source and Snowflake, Google BigQuery, PostgreSQL, SQL Server, or MySQL as the Destination. This feature is applicable to new Pipelines.

Sources

-

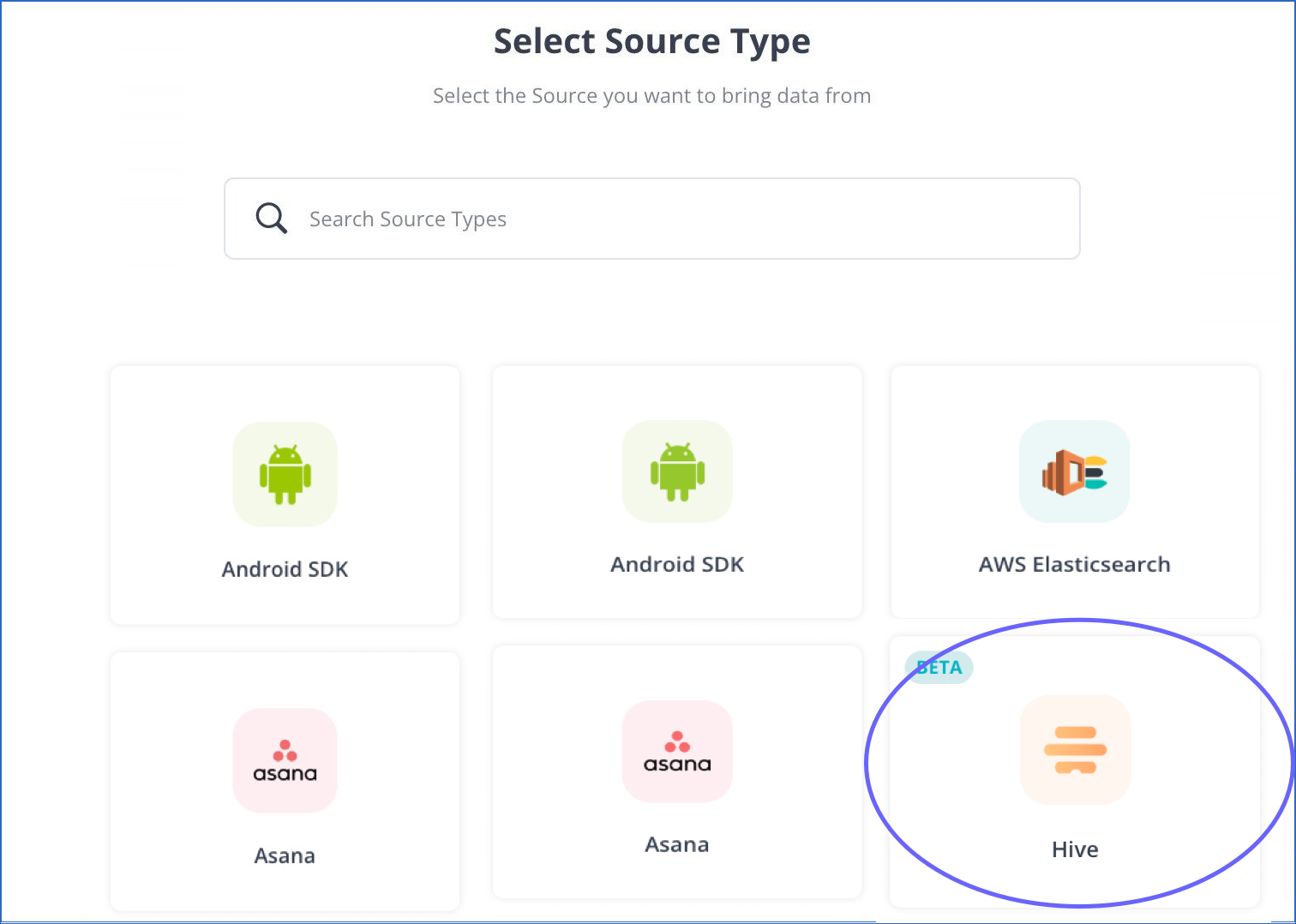

Hive as a Source

-

Integrated Hive, a project management and collaboration platform that allows you to manage your projects efficiently.

To use this integration, you must obtain the API credentials from the Hive account from where your data resides. The API credentials are used by Hevo for authentication.

Read Hive.

-

-

Improved Handling of Date Columns in Oracle

- Improved support for ingestion of a column of the Date data type for all the variants. Hevo now ingests the column as is, instead of the previous behavior of removing the time information from it. This change is applicable to all new Pipelines.

-

PandaDoc as a Source

-

Provided integration with PandaDoc, a sales platform, as a Source for creating Pipelines. Hevo uses the PandaDoc API to ingest data from your Source.

To use this integration, you must generate the API keys in the PandaDoc account from where you want to ingest the data. The API keys are used for authentication by Hevo to access the data.

Read PandaDoc.

-

-

Reduced Source Connection Errors

- Enhanced the Source configuration user interface to enable the TEST & CONTINUE and TEST CONNECTION buttons only after all the mandatory fields are filled in by the users. As a result, users encounter fewer errors while testing the connection. The feature is currently applicable to all variants of MySQL, MariaDB, Oracle PostgreSQL, and SQL Server.

-

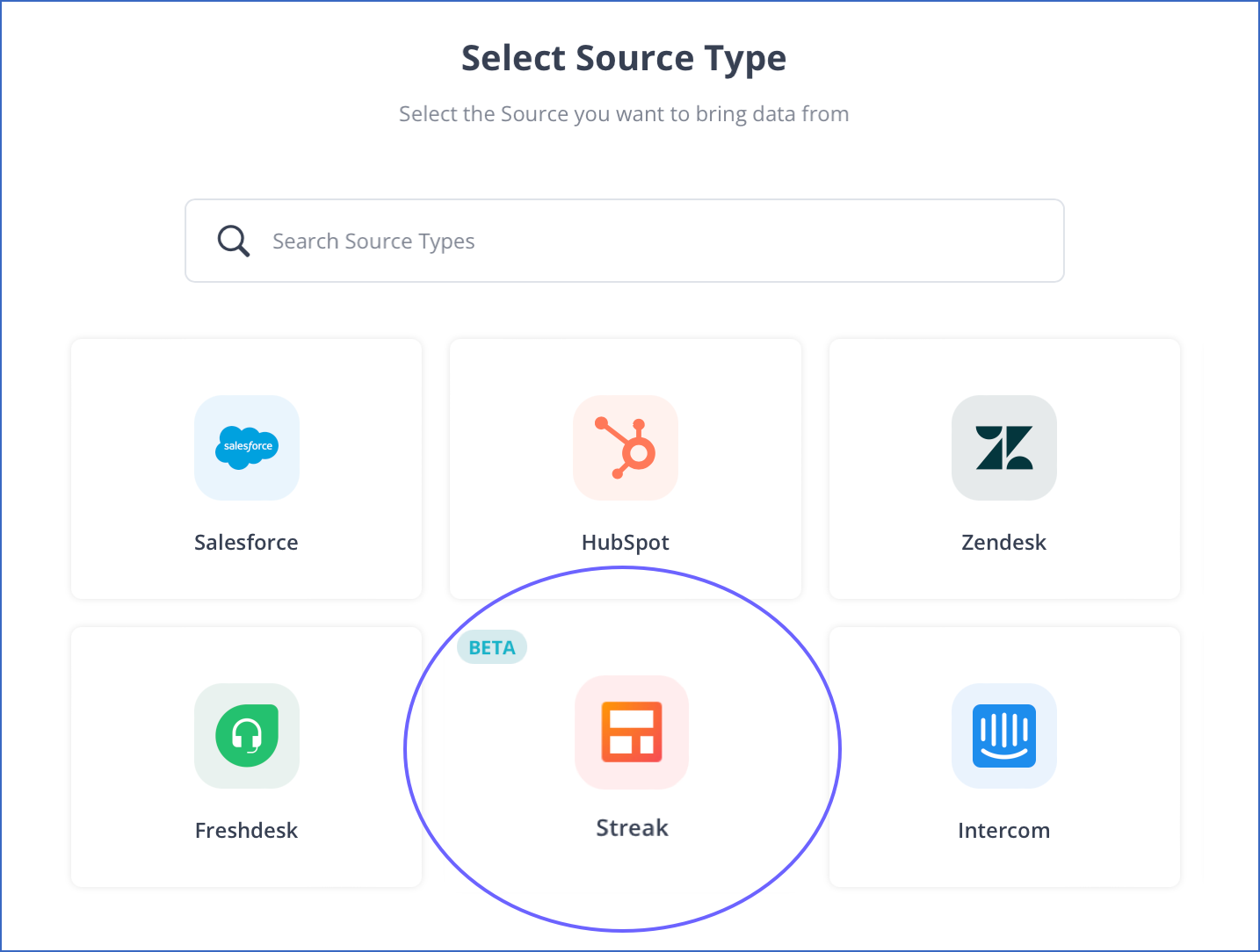

Streak as a Source

-

Integrated Streak, a CRM tool, as a Source for creating Pipelines.

To use this integration, you must add the Streak extension in your web browser and generate the API key to ingest the data. You must specify this key while configuring the Source, to authenticate Hevo for accessing your data.

Read Streak.

-

-

Support for Additional Objects in Freshdesk

-

Added support for ingesting data for three additional objects: Discussions, Products and Time entries. This feature is applicable to all new Pipelines.

Read Data Model.

-

User Experience

-

Auto-discovery of Data Roots for the REST API Source

-

Enhanced the integration to discover the data roots from the API response while configuring a REST API Source, and display the first suggested data root, thereby making the configuration process easier.

-

-

Basic Authentication for Webhook Pipelines

-

Enhanced the Webhook Source integration to support the Basic Authentication method for connecting your application. Basic auth Pipelines allow you to add an access key to the Webhook URL to make the Pipeline more secure.

Read Webhook.

-

Fixes and Improvements

Destinations

-

Name Sanitization in Snowflake Destinations

- Fixed an issue in the Snowflake Destination, whereby table and column names were being sanitized even though Hevo does not support name sanitization for Snowflake currently.

-

Support for Private Link in Snowflake Destinations

-

Added support for connecting to the Snowflake instance using a private link. This allows you to transfer data to Snowflake without going through the public Internet or using proxies to connect Snowflake to your cloud network.

Read Snowflake.

-

Documentation Updates

The following pages have been created, enhanced, or removed in Release 2.04:

Account Management

-

Is there a limit on the number of users in Hevo? (Deleted)

Activate

-

Snowflake

Alerts

-

Renamed Customizing Alert Preferences to Setting up Alert Preferences

-

Renamed Getting Alerts in Slack to Getting Alerts in Third-Party Applications

Data Loading

Destinations

Getting Started

Pipelines

-

Examples of Python Code-based Transformations

-

Transformation Blocks and Properties

Sources

-

Hive (New)

-

PandaDoc (New)

-

Streak (New)

-

Troubleshooting Database Sources

-

Troubleshooting Oracle Source

Transform

-

Can I use Models to transform and load data in real-time? (New)